mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

15705a0bd3

@ -0,0 +1,188 @@

|

||||

Linux 上查看系统/服务器运行时间的 11 种方法

|

||||

======

|

||||

|

||||

你是否想知道自己的 Linux 系统正常运行了多长时间而没有宕机?系统是什么时候启动的?

|

||||

|

||||

Linux 上有多个查看服务器/系统运行时间的命令,大多数用户喜欢使用标准并且很有名的 `uptime` 命令获取这些具体的信息。

|

||||

|

||||

服务器的运行时间对一些用户来说不那么重要,但是当服务器运行诸如在线商城<ruby>门户<rt>portal</rt></ruby>、网上银行门户等<ruby>关键任务应用<rt>mission-critical applications</rt></ruby>时,它对于<ruby>服务器管理员<rt>server adminstrators</rt></ruby>来说就至关重要。

|

||||

|

||||

它必须做到零宕机,因为一旦停机就会影响到数百万用户。

|

||||

|

||||

正如我所说,许多命令都可以让用户看到 Linux 服务器的运行时间。在这篇教程里我会教你如何使用下面 11 种方式来查看。

|

||||

|

||||

<ruby>正常运行时间<rt>uptime</rt></ruby>指的是服务器自从上次关闭或重启以来经过的时间。

|

||||

|

||||

`uptime` 命令获取 `/proc` 文件中的详细信息并输出正常运行时间,而 `/proc` 文件并不适合人直接看。

|

||||

|

||||

以下这些命令会输出系统运行和启动的时间。也会显示一些额外的信息。

|

||||

|

||||

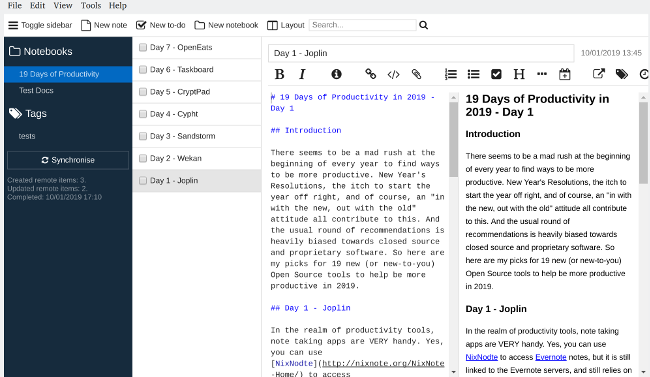

### 方法 1:使用 uptime 命令

|

||||

|

||||

`uptime` 命令会告诉你系统运行了多长时间。它会用一行显示以下信息。

|

||||

|

||||

当前时间、系统运行时间、当前登录用户的数量、过去 1 分钟/5 分钟/15 分钟系统负载的均值。

|

||||

|

||||

```

|

||||

# uptime

|

||||

|

||||

08:34:29 up 21 days, 5:46, 1 user, load average: 0.06, 0.04, 0.00

|

||||

```

|

||||

|

||||

### 方法 2:使用 w 命令

|

||||

|

||||

`w` 命令为每个登录进系统的用户,每个用户当前所做的事情,所有活动的负载对计算机的影响提供了一个快速的概要。这个单一命令结合了多个 Unix 程序:`who`、`uptime`,和 `ps -a` 的结果。

|

||||

|

||||

```

|

||||

# w

|

||||

|

||||

08:35:14 up 21 days, 5:47, 1 user, load average: 0.26, 0.09, 0.02

|

||||

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

|

||||

root pts/1 103.5.134.167 08:34 0.00s 0.01s 0.00s w

|

||||

```

|

||||

|

||||

### 方法 3:使用 top 命令

|

||||

|

||||

`top` 命令是 Linux 上监视实时系统进程的基础命令之一。它显示系统信息和运行进程的信息,例如正常运行时间、平均负载、运行的任务、登录用户数量、CPU 数量 & CPU 利用率、内存 & 交换空间信息。

|

||||

|

||||

**推荐阅读:**[TOP 命令监视服务器性能的例子][1]

|

||||

|

||||

```

|

||||

# top -c

|

||||

|

||||

top - 08:36:01 up 21 days, 5:48, 1 user, load average: 0.12, 0.08, 0.02

|

||||

Tasks: 98 total, 1 running, 97 sleeping, 0 stopped, 0 zombie

|

||||

Cpu(s): 0.0%us, 0.3%sy, 0.0%ni, 99.7%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

|

||||

Mem: 1872888k total, 1454644k used, 418244k free, 175804k buffers

|

||||

Swap: 2097148k total, 0k used, 2097148k free, 1098140k cached

|

||||

|

||||

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

|

||||

1 root 20 0 19340 1492 1172 S 0.0 0.1 0:01.04 /sbin/init

|

||||

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 [kthreadd]

|

||||

3 root RT 0 0 0 0 S 0.0 0.0 0:00.00 [migration/0]

|

||||

4 root 20 0 0 0 0 S 0.0 0.0 0:34.32 [ksoftirqd/0]

|

||||

5 root RT 0 0 0 0 S 0.0 0.0 0:00.00 [stopper/0]

|

||||

```

|

||||

|

||||

### 方法 4:使用 who 命令

|

||||

|

||||

`who` 命令列出当前登录进计算机的用户。`who` 命令与 `w` 命令类似,但后者还包含额外的数据和统计信息。

|

||||

|

||||

```

|

||||

# who -b

|

||||

system boot 2018-04-12 02:48

|

||||

```

|

||||

|

||||

### 方法 5:使用 last 命令

|

||||

|

||||

`last` 命令列出最近登录过的用户。`last` 回溯 `/var/log/wtmp` 文件并显示自从文件创建后登录进(出)的用户。

|

||||

|

||||

```

|

||||

# last reboot -F | head -1 | awk '{print $5,$6,$7,$8,$9}'

|

||||

Thu Apr 12 02:48:04 2018

|

||||

```

|

||||

|

||||

### 方法 6:使用 /proc/uptime 文件

|

||||

|

||||

这个文件中包含系统上次启动后运行时间的详细信息。`/proc/uptime` 的输出相当精简。

|

||||

|

||||

第一个数字是系统自从启动的总秒数。第二个数字是总时间中系统空闲所花费的时间,以秒为单位。

|

||||

|

||||

```

|

||||

# cat /proc/uptime

|

||||

1835457.68 1809207.16

|

||||

```

|

||||

|

||||

### 方法 7:使用 tuptime 命令

|

||||

|

||||

`tuptime` 是一个汇报系统运行时间的工具,输出历史信息并作以统计,保留重启之间的数据。和 `uptime` 命令很像,但输出更有意思一些。

|

||||

|

||||

```

|

||||

$ tuptime

|

||||

```

|

||||

|

||||

### 方法 8:使用 htop 命令

|

||||

|

||||

`htop` 是运行在 Linux 上的一个交互式进程查看器,是 Hisham 使用 ncurses 库开发的。`htop` 比起 `top` 有很多的特性和选项。

|

||||

|

||||

**推荐阅读:** [使用 Htop 命令监控系统资源][2]

|

||||

|

||||

```

|

||||

# htop

|

||||

|

||||

CPU[| 0.5%] Tasks: 48, 5 thr; 1 running

|

||||

Mem[||||||||||||||||||||||||||||||||||||||||||||||||||| 165/1828MB] Load average: 0.10 0.05 0.01

|

||||

Swp[ 0/2047MB] Uptime: 21 days, 05:52:35

|

||||

|

||||

PID USER PRI NI VIRT RES SHR S CPU% MEM% TIME+ Command

|

||||

29166 root 20 0 110M 2484 1240 R 0.0 0.1 0:00.03 htop

|

||||

29580 root 20 0 11464 3500 1032 S 0.0 0.2 55:15.97 /bin/sh ./OSWatcher.sh 10 1

|

||||

1 root 20 0 19340 1492 1172 S 0.0 0.1 0:01.04 /sbin/init

|

||||

486 root 16 -4 10780 900 348 S 0.0 0.0 0:00.07 /sbin/udevd -d

|

||||

748 root 18 -2 10780 932 360 S 0.0 0.0 0:00.00 /sbin/udevd -d

|

||||

```

|

||||

|

||||

### 方法 9:使用 glances 命令

|

||||

|

||||

`glances` 是一个跨平台的基于 curses 库的监控工具,它是使用 python 编写的。可以说它非常强大,仅用一点空间就能获得很多信息。它使用 psutil 库从系统中获取信息。

|

||||

|

||||

`glances` 可以监控 CPU、内存、负载、进程、网络接口、磁盘 I/O、<ruby>磁盘阵列<rt>RAID</rt></ruby>、传感器、文件系统(与文件夹)、容器、监视器、Alert 日志、系统信息、运行时间、<ruby>快速查看<rt>Quicklook</rt></ruby>(CPU,内存、负载)等。

|

||||

|

||||

**推荐阅读:** [Glances (集大成)– Linux 上高级的实时系统运行监控工具][3]

|

||||

|

||||

```

|

||||

glances

|

||||

|

||||

ubuntu (Ubuntu 17.10 64bit / Linux 4.13.0-37-generic) - IP 192.168.1.6/24 Uptime: 21 days, 05:55:15

|

||||

|

||||

CPU [|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 90.6%] CPU - 90.6% nice: 0.0% ctx_sw: 4K MEM \ 78.4% active: 942M SWAP - 5.9% LOAD 2-core

|

||||

MEM [||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 78.0%] user: 55.1% irq: 0.0% inter: 1797 total: 1.95G inactive: 562M total: 12.4G 1 min: 4.35

|

||||

SWAP [|||| 5.9%] system: 32.4% iowait: 1.8% sw_int: 897 used: 1.53G buffers: 14.8M used: 749M 5 min: 4.38

|

||||

idle: 7.6% steal: 0.0% free: 431M cached: 273M free: 11.7G 15 min: 3.38

|

||||

|

||||

NETWORK Rx/s Tx/s TASKS 211 (735 thr), 4 run, 207 slp, 0 oth sorted automatically by memory_percent, flat view

|

||||

docker0 0b 232b

|

||||

enp0s3 12Kb 4Kb Systemd 7 Services loaded: 197 active: 196 failed: 1

|

||||

lo 616b 616b

|

||||

_h478e48e 0b 232b CPU% MEM% VIRT RES PID USER NI S TIME+ R/s W/s Command

|

||||

63.8 18.9 2.33G 377M 2536 daygeek 0 R 5:57.78 0 0 /usr/lib/firefox/firefox -contentproc -childID 1 -isForBrowser -intPrefs 6:50|7:-1|19:0|34:1000|42:20|43:5|44:10|51

|

||||

DefaultGateway 83ms 78.5 10.9 3.46G 217M 2039 daygeek 0 S 21:07.46 0 0 /usr/bin/gnome-shell

|

||||

8.5 10.1 2.32G 201M 2464 daygeek 0 S 8:45.69 0 0 /usr/lib/firefox/firefox -new-window

|

||||

DISK I/O R/s W/s 1.1 8.5 2.19G 170M 2653 daygeek 0 S 2:56.29 0 0 /usr/lib/firefox/firefox -contentproc -childID 4 -isForBrowser -intPrefs 6:50|7:-1|19:0|34:1000|42:20|43:5|44:10|51

|

||||

dm-0 0 0 1.7 7.2 2.15G 143M 2880 daygeek 0 S 7:10.46 0 0 /usr/lib/firefox/firefox -contentproc -childID 6 -isForBrowser -intPrefs 6:50|7:-1|19:0|34:1000|42:20|43:5|44:10|51

|

||||

sda1 9.46M 12K 0.0 4.9 1.78G 97.2M 6125 daygeek 0 S 1:36.57 0 0 /usr/lib/firefox/firefox -contentproc -childID 7 -isForBrowser -intPrefs 6:50|7:-1|19:0|34:1000|42:20|43:5|44:10|51

|

||||

```

|

||||

|

||||

### 方法 10:使用 stat 命令

|

||||

|

||||

`stat` 命令显示指定文件或文件系统的详细状态。

|

||||

|

||||

```

|

||||

# stat /var/log/dmesg | grep Modify

|

||||

Modify: 2018-04-12 02:48:04.027999943 -0400

|

||||

```

|

||||

|

||||

### 方法 11:使用 procinfo 命令

|

||||

|

||||

`procinfo` 从 `/proc` 文件夹下收集一些系统数据并将其很好的格式化输出在标准输出设备上。

|

||||

|

||||

```

|

||||

# procinfo | grep Bootup

|

||||

Bootup: Fri Apr 20 19:40:14 2018 Load average: 0.16 0.05 0.06 1/138 16615

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/11-methods-to-find-check-system-server-uptime-in-linux/

|

||||

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[LuuMing](https://github.com/LuuMing)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.2daygeek.com/author/magesh/

|

||||

[1]:https://www.2daygeek.com/top-command-examples-to-monitor-server-performance/

|

||||

[2]:https://www.2daygeek.com/htop-command-examples-to-monitor-system-resources/

|

||||

[3]:https://www.2daygeek.com/install-glances-advanced-real-time-linux-system-performance-monitoring-tool-on-centos-fedora-ubuntu-debian-opensuse-arch-linux/

|

||||

107

sources/talk/20150513 XML vs JSON.md

Normal file

107

sources/talk/20150513 XML vs JSON.md

Normal file

@ -0,0 +1,107 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (XML vs JSON)

|

||||

[#]: via: (https://www.cs.tufts.edu/comp/150IDS/final_papers/tstras01.1/FinalReport/FinalReport.html#xml-advantages)

|

||||

[#]: author: (TOM STRASSNER tomstrassner@gmail.com)

|

||||

|

||||

XML vs JSON

|

||||

======

|

||||

|

||||

### Introduction

|

||||

|

||||

XML and JSON are the two most common formats for data interchange in the Web today. XML was created by the W3C in 1996, and JSON was publicly specified by Douglas Crockford in 2002. Although their purposes are not identical, they are frequently used to accomplish the same task, which is data interchange. Both have well-documented open standards on the Web ([RFC 7159][1], [RFC 4825][2]), and both are human and machine-readable. Neither one is absolutely superior to the other, as each is better suited for different use cases.

|

||||

|

||||

### XML Advantages

|

||||

|

||||

There are several advantages that XML has over JSON. One of the biggest differences between the two is that in XML you can put metadata into the tags in the form of attributes. With JSON, the programmer could accomplish the same goal that metadata achieves by making the entity an object and adding the attributes as members of the object. However, the way XML does it may often be preferable, given the slightly misleading nature of turning something into an object that is not one in the client program. For example, if your C++ program sends an int via JSON and needs metadata to be sent along with it, you would have to make it an object, with one name/value pair for the actual value of the int, and more name/value pairs for each attribute. The program that receives the JSON would read it as an object, when in fact it is not one. While this is a viable solution, it defies one of JSON’s key advantages: “JSON's structures look like conventional programming language structures. No restructuring is necessary.”[2]

|

||||

|

||||

Although I will argue later that this can also be a drawback of XML, its mechanism to resolve name conflicts, prefixes, gives it power that JSON does not have. With prefixes, the programmer has the ability to name two different kinds of entities the same thing.[1] This would be advantageous in situations where the different entities should have the same name in the client program, perhaps if they are used in entirely different scopes.

|

||||

|

||||

Another advantage of XML is that most browsers render it in a highly readable and organized way. The tree structure of XML lends itself well to this formatting, and allows for browsers to let users to naturally collapse individual tree elements. This feature would be particularly useful in debugging.

|

||||

|

||||

One of the most significant advantages that XML has over JSON is its ability to communicate mixed content, i.e. strings that contain structured markup. In order to handle this with XML, the programmer need only put the marked-up text within a child tag of the parent in which it belongs. Similar to the metadata situation, since JSON only contains data, there is no such simple way to indicate markup. It would again require storing metadata as data, which could be considered an abuse of the format.

|

||||

|

||||

### JSON Advantages

|

||||

|

||||

JSON has several advantages as well. One of the most obvious of these is that JSON is significantly less verbose than XML, because XML necessitates opening and closing tags (or in some cases less verbose self-closing tags), and JSON uses name/value pairs, concisely delineated by “{“ and “}” for objects, “[“ and “]” for arrays, “,” to separate pairs, and “:” to separate name from value. Even when zipped (using gzip), JSON is still smaller and it takes less time to zip it.[6] As determined by Sumaray and Makki as well as Nurseitov, Paulson, Reynolds, and Izurieta in their experimental findings, JSON outperforms XML in a number of ways. First, naturally following from its conciseness, JSON files that contain the same information as their XML counterparts are almost always significantly smaller, which leads to faster transmission and processing. Second, difference in size aside, both groups found that JSON was serialized and deserialized drastically faster than XML.[3][4] Third, the latter study determined that JSON processing outdoes XML in CPU resource utilization. They found that JSON used less total resources, more user CPU, and less system CPU. The experiment used RedHat machines, and RedHat claims that higher user CPU usage is preferable.[3] Unsurprisingly, the Sumaray and Makki study determined that JSON performance is superior to XML on mobile devices too.[4] This makes sense, given that JSON uses less resources, and mobile devices are less powerful than desktop machines.

|

||||

|

||||

Yet another advantage that JSON has over XML is that its representation of objects and arrays allows for direct mapping onto the corresponding data structures in the host language, such as objects, records, structs, dictionaries, hash tables, keyed lists, and associative arrays for objects, and arrays, vectors, lists, and sequences for arrays.[2] Although it is perfectly possible to represent these structures in XML, it is only as a function of the parsing, and it takes more code to serialize and deserialize properly. It also would not always be obvious to the reader of arbitrary XML what tags represent an object and what tags represent an array, especially because nested tags can just as easily be structured markup instead. The curly braces and brackets of JSON definitively show the structure of the data. However, this advantage does come with the caveat explained above, that the JSON can inaccurately represent the data if the need arises to send metadata.

|

||||

|

||||

Although XML supports namespaces and prefixes, JSON’s handling of name collisions is less verbose than prefixes, and arguably feels more natural with the program using it; in JSON, each object is its own namespace, so names may be repeated as long as they are in different scopes. This may be preferable, as in most programming languages members of different objects can have the same name, because they are distinguished by the names of the objects to which they belong.

|

||||

|

||||

Perhaps the most significant advantage that JSON has over XML is that JSON is a subset of JavaScript, so code to parse and package it fits very naturally into JavaScript code. This seems highly beneficial for JavaScript programs, but does not directly benefit any programs that use languages other than JavaScript. However, this drawback has been largely overcome, as currently the JSON website lists over 175 tools for 64 different programming languages that exist to integrate JSON processing. While I cannot speak to the quality of most of these tools, it is clear that the developer community has embraced JSON and has made it simple to use in many different platforms.

|

||||

|

||||

### Purposes

|

||||

|

||||

Simply put, XML’s purpose is document markup. This is decidedly not a purpose of JSON, so XML should be used whenever this is what needs to be done. It accomplishes this purpose by giving semantic meaning to text through its tree-like structure and ability to represent mixed content. Data structures can be represented in XML, but that is not its purpose.

|

||||

|

||||

JSON’s purpose is structured data interchange. It serves this purpose by directly representing objects, arrays, numbers, strings, and booleans. Its purpose is distinctly not document markup. As described above, JSON does not have a natural way to represent mixed content.

|

||||

|

||||

### Software

|

||||

|

||||

The following major public APIs uses XML only: Amazon Product Advertising API.

|

||||

|

||||

The following major APIs use JSON only: Facebook Graph API, Google Maps API, Twitter API, AccuWeather API, Pinterest API, Reddit API, Foursquare API.

|

||||

|

||||

The following major APIs use both XML and JSON: Google Cloud Storage, Linkedin API, Flickr API

|

||||

|

||||

Of the top 10 most popular APIs according to Programmable Web[9], along with a couple more popular ones, only one supports XML and not JSON. Several support both, and several support only JSON. Among developer APIs for modern and popular websites, JSON clearly seems to be preferred. This also indicates that more app developers that use these APIs prefer JSON. This is likely a result of its reputation as the faster and leaner of the two. Furthermore, most of these APIs communicate data rather than documents, so JSON would be more appropriate. For example, Facebook is mainly concerned with communicating data about users and posts, Google Maps deals in coordinates and information about entities on their maps, and AccuWeather just sends weather data. Overall, it is impossible to say whether JSON or XML is currently used more in APIs, but the trend is certainly swinging towards JSON.[10][11]

|

||||

|

||||

The following major desktop software uses XML only: Microsoft Word, Apache OpenOffice, LibreOffice.

|

||||

|

||||

It makes sense for software that is mainly concerned with document creation, manipulation, and storage to use XML rather than JSON. Also, all three of these programs support mixed content, which JSON does not do well. For example, if a user is typing up an essay in Microsoft Word, they may put different font, size, color, positioning, and styling on different blocks of text. XML naturally represents these properties with nested tags and attributes.

|

||||

|

||||

The following major databases support XML: IBM DB2, Microsoft SQL Server, Oracle Database, PostgresSQL, BaseX, eXistDB, MarkLogic, MySQL.

|

||||

|

||||

The following major databases support JSON: MongoDB, CouchDB, eXistDB, Elastisearch, BaseX, MarkLogic, OrientDB, Oracle Database, PostgresSQL, Riak.

|

||||

|

||||

For a long time, SQL and the relational database model dominated the market. Corporate giants like Oracle and Microsoft have always marketed such databases. However, in the last decade, there has been a major rise in popularity of NoSQL databases. As this has coincided with the rise of JSON, most NoSQL databases support JSON, and some, such as MongoDB, CouchDB, and Riak use JSON to store their data. These databases have two important qualities that make them better suited for modern websites: they are generally more scalable than relational SQL databases, and they are designed to the core to run in the Web.[10] Since JSON is more lightweight and a subset of JavaScript, it suits NoSQL databases well, and helps facilitate these two qualities. In addition, many older databases have added support for JSON, such as Oracle Database and PostgresSQL. Conversion between XML and JSON is a hassle, so naturally, as more developers use JSON for their apps, more database companies have incentive to support it.[7]

|

||||

|

||||

### Future

|

||||

|

||||

One of the most heavily anticipated changes in the Internet is the “Internet of Things”, i.e. the addition to the Internet of non-computer devices such as watches, thermostats, televisions, refrigerators, etc. This movement is well underway, and is expected to explode in the near future, as predictions for the number of devices in the Internet of Things in 2020 range from about 26 billion to 200 billion.[12][13][13] Almost all of these devices are smaller and less powerful than laptop and desktop computers. Many of them only run embedded systems. Thus, when they have the need to exchange data with other entities in the Web, the lighter and faster JSON would naturally be preferable to XML.[10] Also, with the recent rapid rise of JSON use in the Web relative to XML, new devices may benefit more from speaking JSON. This highlights an example of Metcalf’s Law; whether XML or JSON or something entirely new becomes the most popular format in the Web, newly added devices and all existing devices will benefit much more if the newly added devices speak the most popular language.

|

||||

|

||||

With the creation and recent rapid increase in popularity of Node.js, a server-side JavaScript framework, along with NoSQL databases like MongoDB, full-stack JavaScript development has become a reality. This bodes well for the future of JSON, as with these new apps, JSON is spoken at every level of the stack, which generally makes the apps very fast and lightweight. This is a desirable trait for any app, so this trend towards full-stack JavaScript is not likely to die out anytime soon.[10]

|

||||

|

||||

Another existing trend in the world of app development is toward REST and away from SOAP.[11][15][16] Both XML and JSON can be used with REST, but SOAP exclusively uses XML.

|

||||

|

||||

The given trends indicate that JSON will continue to dominate the Web, and XML use will continue to decrease. This should not be overblown, however, because XML is still very heavily used in the Web, and it is the only option for apps that use SOAP. Given the widespread migration from SOAP to REST, the rise of NoSQL databases and full-stack JavaScript, and the far superior performance of JSON, I believe that JSON will soon be much more widely used than XML in the Web. There seem to be very few applications where XML is the better choice.

|

||||

|

||||

### References

|

||||

|

||||

1. [XML Tutorial](http://www.w3schools.com/xml/default.asp)

|

||||

2. [Introducing JSON](http://www.json.org/)

|

||||

3. [Comparison of JSON and XML Data Interchange Formats: A Case Study](http://www.cs.montana.edu/izurieta/pubs/caine2009.pdf)

|

||||

4. [A comparison of data serialization formats for optimal efficiency on a mobile platform](http://dl.acm.org/citation.cfm?id=2184810)

|

||||

5. [JSON vs. XML: The Debate](http://ajaxian.com/archives/json-vs-xml-the-debate)

|

||||

6. [JSON vs. XML: Some hard numbers about verbosity](http://www.codeproject.com/Articles/604720/JSON-vs-XML-Some-hard-numbers-about-verbosity)

|

||||

7. [How JSON sparked NoSQL -- and will return to the RDBMS fold](http://www.infoworld.com/article/2608293/nosql/how-json-sparked-nosql----and-will-return-to-the-rdbms-fold.html)

|

||||

8. [Did You Say "JSON Support" in Oracle 12.1.0.2?](https://blogs.oracle.com/OTN-DBA-DEV-Watercooler/entry/did_you_say_json_support)

|

||||

9. [Most Popular APIs: At Least One Will Surprise You](http://www.programmableweb.com/news/most-popular-apis-least-one-will-surprise-you/2014/01/23)

|

||||

10. [Why JSON will continue to push XML out of the picture](https://www.centurylinkcloud.com/blog/post/why-json-will-continue-to-push-xml-out-of-the-picture/)

|

||||

11. [Thousands of APIs Paint a Bright Future for the Web](http://www.webmonkey.com/2011/03/thousand-of-apis-paint-a-bright-future-for-the-web/)

|

||||

12. [A Simple Explanation Of 'The Internet Of Things’](http://www.forbes.com/sites/jacobmorgan/2014/05/13/simple-explanation-internet-things-that-anyone-can-understand/)

|

||||

13. [Proofpoint Uncovers Internet of Things (IoT) Cyberattack](http://www.proofpoint.com/about-us/press-releases/01162014.php)

|

||||

14. [The Internet of Things: New Threats Emerge in a Connected World](http://www.symantec.com/connect/blogs/internet-things-new-threats-emerge-connected-world)

|

||||

15. [3,000 Web APIs: Trends From A Quickly Growing Directory](http://www.programmableweb.com/news/3000-web-apis-trends-quickly-growing-directory/2011/03/08)

|

||||

16. [How REST replaced SOAP on the Web: What it means to you](http://www.infoq.com/articles/rest-soap)

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cs.tufts.edu/comp/150IDS/final_papers/tstras01.1/FinalReport/FinalReport.html#xml-advantages

|

||||

|

||||

作者:[TOM STRASSNER][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: tomstrassner@gmail.com

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://tools.ietf.org/html/rfc7159

|

||||

[2]: https://tools.ietf.org/html/rfc4825

|

||||

@ -1,3 +1,5 @@

|

||||

translating by hopefully2333

|

||||

|

||||

5 ways DevSecOps changes security

|

||||

======

|

||||

|

||||

|

||||

@ -1,64 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (bestony)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (5 resolutions for open source project maintainers)

|

||||

[#]: via: (https://opensource.com/article/18/12/resolutions-open-source-project-maintainers)

|

||||

[#]: author: (Ben Cotton https://opensource.com/users/bcotton)

|

||||

|

||||

5 resolutions for open source project maintainers

|

||||

======

|

||||

No matter how you say it, good communication is essential to strong open source communities.

|

||||

|

||||

|

||||

I'm generally not big on New Year's resolutions. I have no problem with self-improvement, of course, but I tend to anchor around other parts of the calendar. Even so, there's something about taking down this year's free calendar and replacing it with next year's that inspires some introspection.

|

||||

|

||||

In 2017, I resolved to not share articles on social media until I'd read them. I've kept to that pretty well, and I'd like to think it has made me a better citizen of the internet. For 2019, I'm thinking about resolutions to make me a better open source software maintainer.

|

||||

|

||||

Here are some resolutions I'll try to stick to on the projects where I'm a maintainer or co-maintainer.

|

||||

|

||||

### 1\. Include a code of conduct

|

||||

|

||||

Jono Bacon included "not enforcing the code of conduct" in his article "[7 mistakes you're probably making][1]." Of course, to enforce a code of conduct, you must first have a code of conduct. I plan on defaulting to the [Contributor Covenant][2], but you can use whatever you like. As with licenses, it's probably best to use one that's already written instead of writing your own. But the important thing is to find something that defines how you want your community to behave, whatever that looks like. Once it's written down and enforced, people can decide for themselves if it looks like the kind of community they want to be a part of.

|

||||

|

||||

### 2\. Make the license clear and specific

|

||||

|

||||

You know what really stinks? Unclear licenses. "This software is licensed under the GPL" with no further text doesn't tell me much. Which version of the [GPL][3]? Do I get to pick? For non-code portions of a project, "licensed under a Creative Commons license" is even worse. I love the [Creative Commons licenses][4], but there are several different licenses with significantly different rights and obligations. So, I will make it very clear which variant and version of a license applies to my projects. I will include the full text of the license in the repo and a concise note in the other files.

|

||||

|

||||

Sort of related to this is using an [OSI][5]-approved license. It's tempting to come up with a new license that says exactly what you want it to say, but good luck if you ever need to enforce it. Will it hold up? Will the people using your project understand it?

|

||||

|

||||

### 3\. Triage bug reports and questions quickly

|

||||

|

||||

Few things in technology scale as poorly as open source maintainers. Even on small projects, it can be hard to find the time to answer every question and fix every bug. But that doesn't mean I can't at least acknowledge the person. It doesn't have to be a multi-paragraph reply. Even just labeling the GitHub issue shows that I saw it. Maybe I'll get to it right away. Maybe I'll get to it a year later. But it's important for the community to see that, yes, there is still someone here.

|

||||

|

||||

### 4\. Don't push features or bug fixes without accompanying documentation

|

||||

|

||||

For as much as my open source contributions over the years have revolved around documentation, my projects don't reflect the importance I put on it. There aren't many commits I can push that don't require some form of documentation. New features should obviously be documented at (or before!) the time they're committed. But even bug fixes should get an entry in the release notes. If nothing else, a push is a good opportunity to also make a commit to improving the docs.

|

||||

|

||||

### 5\. Make it clear when I'm abandoning a project

|

||||

|

||||

I'm really bad at saying "no" to things. I told the editors I'd write one or two articles for [Opensource.com][6] and here I am almost 60 articles later. Oops. But at some point, the things that once held my interests no longer do. Maybe the project is unnecessary because its functionality got absorbed into a larger project. Maybe I'm just tired of it. But it's unfair to the community (and potentially dangerous, as the recent [event-stream malware injection][7] showed) to leave a project in limbo. Maintainers have the right to walk away whenever and for whatever reason, but it should be clear that they have.

|

||||

|

||||

Whether you're an open source maintainer or contributor, if you know other resolutions project maintainers should make, please share them in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/12/resolutions-open-source-project-maintainers

|

||||

|

||||

作者:[Ben Cotton][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/bcotton

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/article/17/8/mistakes-open-source-avoid

|

||||

[2]: https://www.contributor-covenant.org/

|

||||

[3]: https://opensource.org/licenses/gpl-license

|

||||

[4]: https://creativecommons.org/share-your-work/licensing-types-examples/

|

||||

[5]: https://opensource.org/

|

||||

[6]: http://Opensource.com

|

||||

[7]: https://arstechnica.com/information-technology/2018/11/hacker-backdoors-widely-used-open-source-software-to-steal-bitcoin/

|

||||

@ -0,0 +1,89 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (NSA to Open Source its Reverse Engineering Tool GHIDRA)

|

||||

[#]: via: (https://itsfoss.com/nsa-ghidra-open-source)

|

||||

[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

|

||||

|

||||

NSA to Open Source its Reverse Engineering Tool GHIDRA

|

||||

======

|

||||

|

||||

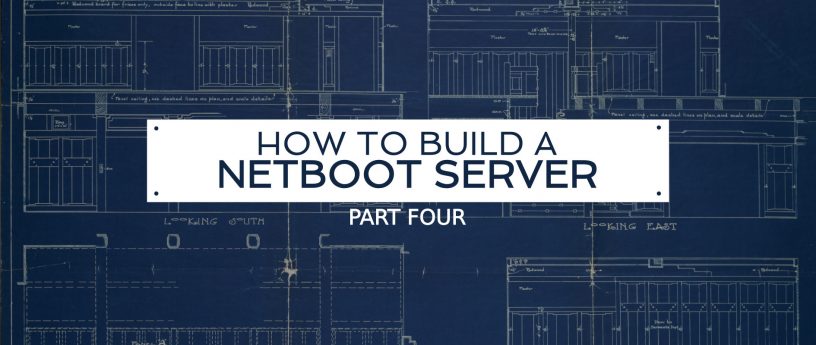

GHIDRA – NSA’s reverse engineering tool is getting ready for a free public release this March at the [RSA Conference 2019][1] to be held in San Francisco.

|

||||

|

||||

The National Security Agency (NSA) did not officially announce this – however – a senior NSA advisor, Robert Joyce’s [session description][2] on the official RSA conference website revealed about it before any official statement or announcement.

|

||||

|

||||

Here’s what it mentioned:

|

||||

|

||||

![][3]

|

||||

Image Credits: [Twitter][4]

|

||||

|

||||

In case the text in the image isn’t properly visible, let me quote the description here:

|

||||

|

||||

> NSA has developed a software reverse engineering framework known as GHIDRA, which will be demonstrated for the first time at RSAC 2019. An interactive GUI capability enables reverse engineers to leverage an integrated set of features that run on a variety of platforms including Windows, Mac OS, and Linux and supports a variety of processor instruction sets. The GHISDRA platform includes all the features expected in high-end commercial tools, with new and expanded functionality NSA uniquely developed. and will be released for free public use at RSA.

|

||||

|

||||

### What is GHIDRA?

|

||||

|

||||

GHIDRA is a software reverse engineering framework developed by [NSA][5] that is in use by the agency for more than a decade.

|

||||

|

||||

Basically, a software reverse engineering tool helps to dig up the source code of a proprietary program which further gives you the ability to detect virus threats or potential bugs. You should read how [reverse engineering][6] works to know more.

|

||||

|

||||

The tool is is written in Java and quite a few people compared it to high-end commercial reverse engineering tools available like [IDA][7].

|

||||

|

||||

A [Reddit thread][8] involves more detailed discussion where you will find some ex-employees giving good amount of details before the availability of the tool.

|

||||

|

||||

![NSA open source][9]

|

||||

|

||||

### GHIDRA was a secret tool, how do we know about it?

|

||||

|

||||

The existence of the tool was uncovered in a series of leaks by [WikiLeaks][10] as part of [Vault 7 documents of CIA][11].

|

||||

|

||||

### Is it going to be open source?

|

||||

|

||||

We do think that the reverse engineering tool to be released could be made open source. Even though there is no official confirmation mentioning “open source” – but a lot of people do believe that NSA is definitely targeting the open source community to help improve their tool while also reducing their effort to maintain this tool.

|

||||

|

||||

This way the tool can remain free and the open source community can help improve GHIDRA as well.

|

||||

|

||||

You can also check out the existing [Vault 7 document at WikiLeaks][12] to come up with your prediction.

|

||||

|

||||

### Is NSA doing a good job here?

|

||||

|

||||

The reverse engineering tool is going to be available for Windows, Linux, and Mac OS for free.

|

||||

|

||||

Of course, we care about the Linux platform here – which could be a very good option for people who do not want to or cannot afford a thousand dollar license for a reverse engineering tool with the best-in-class features.

|

||||

|

||||

### Wrapping Up

|

||||

|

||||

If GHIDRA becomes open source and is available for free, it would definitely help a lot of researchers and students and on the other side – the competitors will be forced to adjust their pricing.

|

||||

|

||||

What are your thoughts about it? Is it a good thing? What do you think about the tool going open sources Let us know what you think in the comments below.

|

||||

|

||||

![][13]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/nsa-ghidra-open-source

|

||||

|

||||

作者:[Ankush Das][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/ankush/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.rsaconference.com/events/us19

|

||||

[2]: https://www.rsaconference.com/events/us19/agenda/sessions/16608-come-get-your-free-nsa-reverse-engineering-tool

|

||||

[3]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/01/come-get-your-free-nsa.jpg?fit=800%2C337&ssl=1

|

||||

[4]: https://twitter.com/0xffff0800/status/1080909700701405184

|

||||

[5]: http://nsa.gov

|

||||

[6]: https://en.wikipedia.org/wiki/Reverse_engineering

|

||||

[7]: https://en.wikipedia.org/wiki/Interactive_Disassembler

|

||||

[8]: https://www.reddit.com/r/ReverseEngineering/comments/ace2m3/come_get_your_free_nsa_reverse_engineering_tool/

|

||||

[9]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/01/nsa-open-source.jpeg?resize=800%2C450&ssl=1

|

||||

[10]: https://www.wikileaks.org/

|

||||

[11]: https://en.wikipedia.org/wiki/Vault_7

|

||||

[12]: https://wikileaks.org/ciav7p1/cms/page_9536070.html

|

||||

[13]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/01/nsa-open-source.jpeg?fit=800%2C450&ssl=1

|

||||

@ -0,0 +1,95 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (What metrics matter: A guide for open source projects)

|

||||

[#]: via: (https://opensource.com/article/19/1/metrics-guide-open-source-projects)

|

||||

[#]: author: (Gordon Haff https://opensource.com/users/ghaff)

|

||||

|

||||

What metrics matter: A guide for open source projects

|

||||

======

|

||||

5 principles for deciding what to measure.

|

||||

|

||||

|

||||

"Without data, you're just a person with an opinion."

|

||||

|

||||

Those are the words of W. Edwards Deming, the champion of statistical process control, who was credited as one of the inspirations for what became known as the Japanese post-war economic miracle of 1950 to 1960. Ironically, Japanese manufacturers like Toyota were far more receptive to Deming’s ideas than General Motors and Ford were.

|

||||

|

||||

Community management is certainly an art. It’s about mentoring. It’s about having difficult conversations with people who are hurting the community. It’s about negotiation and compromise. It’s about interacting with other communities. It’s about making connections. In the words of Red Hat’s Diane Mueller, it’s about "nurturing conversations."

|

||||

|

||||

However, it’s also about metrics and data.

|

||||

|

||||

Some have much in common with software development projects more broadly. Others are more specific to the management of the community itself. I think of deciding what to measure and how as adhering to five principles.

|

||||

|

||||

### 1. Recognize that behaviors aren't independent of the measurements you choose to highlight.

|

||||

|

||||

In 2008, Daniel Ariely published Predictably Irrational, one of a number of books written around that time that introduced behavioral psychology and behavioral economics to the general public. One memorable quote from that book is the following: “Human beings adjust behavior based on the metrics they’re held against. Anything you measure will impel a person to optimize his score on that metric. What you measure is what you’ll get. Period.”

|

||||

|

||||

This shouldn’t be surprising. It’s a finding that’s been repeatedly confirmed by research. It should also be familiar to just about anyone with business experience. It’s certainly not news to anyone in sales management, for example. Base sales reps’ (or their managers’) bonuses solely on revenue, and they’ll try to discount whatever it takes to maximize revenue, even if it puts margin in the toilet. Conversely, want the sales force to push a new product line—which will probably take extra effort—but skip the spiffs? Probably not happening.

|

||||

|

||||

And lest you think I’m unfairly picking on sales, this behavior is pervasive, all the way up to the CEO, as Ariely describes in a 2010 Harvard Business Review article: “CEOs care about stock value because that’s how we measure them. If we want to change what they care about, we should change what we measure.”

|

||||

|

||||

Developers and other community members are not immune.

|

||||

|

||||

### 2. You need to choose relevant metrics.

|

||||

|

||||

There’s a lot of folk wisdom floating around about what’s relevant and important that’s not necessarily true. My colleague [Dave Neary offers an example from baseball][1]: “In the late '90s, the key measurements that were used to measure batter skill were RBI (runs batted in) and batting average (how often a player got on base with a hit, divided by the number of at-bats). The Oakland A’s were the first major league team to recruit based on a different measurement of player performance: on-base percentage. This measures how often they get to first base, regardless of how it happens.”

|

||||

|

||||

Indeed, the whole revolution of sabermetrics in baseball and elsewhere, which was popularized in Michael Lewis’ Moneyball, often gets talked about in terms of introducing data in a field that historically was more about gut feel and personal experience. But it was also about taking a game that had actually always been fairly numbers-obsessed and coming up with new metrics based on mostly existing data to better measure player value. (The data revolution going on in sports today is more about collecting much more data through video and other means than was previously available.)

|

||||

|

||||

### 3. Quantity may not lead to quality.

|

||||

|

||||

As a corollary, collecting lots of tangential but easy-to-capture data isn’t better than just selecting a few measurements you’ve determined are genuinely useful. In a world where online behavior can be tracked with great granularity and displayed in colorful dashboards, it’s tempting to be distracted by sheer data volume, even when it doesn’t deliver any great insight into community health and trajectory.

|

||||

|

||||

This may seem like an obvious point: Why measure something that isn’t relevant? In practice, metrics often get chosen because they’re easy to measure, not because they’re particularly useful. They tend to be more about inputs than outputs: The number of developers. The number of forum posts. The number of commits. Collectively, measures like this often get called vanity metrics. They’re ubiquitous, but most people involved with community management don’t think much of them.

|

||||

|

||||

Number of downloads may be the worst of the bunch. It’s true that, at some level, they’re an indication of interest in a project. That’s something. But it’s sufficiently distant from actively using the project, much less engaging with the project deeply, that it’s hard to view downloads as a very useful number.

|

||||

|

||||

Is there any harm in these vanity metrics? Yes, to the degree that you start thinking that they’re something to base action on. Probably more seriously, stakeholders like company management or industry observers can come to see them as meaningful indicators of project health.

|

||||

|

||||

### 4. Understand what measurements really mean and how they relate to each other.

|

||||

|

||||

Neary makes this point to caution against myopia. “In one project I worked on,” he says, ”some people were concerned about a recent spike in the number of bug reports coming in because it seemed like the project must have serious quality issues to resolve. However, when we looked at the numbers, it turned out that many of the bugs were coming in because a large company had recently started using the project. The increase in bug reports was actually a proxy for a big influx of new users, which was a good thing.”

|

||||

|

||||

In practice, you often have to measure through proxies. This isn’t an inherent problem, but the further you get between what you want to measure and what you’re actually measuring, the harder it is to connect the dots. It’s fine to track progress in closing bugs, writing code, and adding new features. However, those don’t necessarily correlate with how happy users are or whether the project is doing a good job of working towards its long-term objectives, whatever those may be.

|

||||

|

||||

### 5. Different measurements serve different purposes.

|

||||

|

||||

Some measurements may be non-obvious but useful for tracking the success of a project and community relative to internal goals. Others may be better suited for a press release or other external consumption. For example, as a community manager, you may really care about the number of meetups, mentoring sessions, and virtual briefings your community has held over the past three months. But it’s the number of contributions and contributors that are more likely to grab the headlines. You probably care about those too. But maybe not as much, depending upon your current priorities.

|

||||

|

||||

Still, other measurements may relate to the goals of any sponsoring organizations. The measurements most relevant for projects tied to commercial products are likely to be different from pure community efforts.

|

||||

|

||||

Because communities differ and goals differ, it’s not possible to simply compile a metrics checklist, but here are some ideas to think about:

|

||||

|

||||

Consider qualitative metrics in addition to quantitative ones. Conducting surveys and other studies can be time-consuming, especially if they’re rigorous enough to yield better-than-anecdotal data. It also requires rigor to construct studies so that they can be used to track changes over time. In other words, it’s a lot easier to measure quantitative contributor activity than it is to suss out if the community members are happier about their participation today than they were a year ago. However, given the importance of culture to the health of a community, measuring it in a systematic way can be a worthwhile exercise.

|

||||

|

||||

Breadth of community, including how many are unaffiliated with commercial entities, is important for many projects. The greater the breadth, the greater the potential leverage of the open source development process. It can also be instructive to see how companies and individuals are contributing. Projects can be explicitly designed to better accommodate casual contributors.

|

||||

|

||||

Are new contributors able to have an impact, or are they ignored? How long does it take for code contributions to get committed? How long does it take for a reported bug to be fixed or otherwise responded to? If they asked a question in a forum, did anyone answer them? In other words, are you letting contributors contribute?

|

||||

|

||||

Advancement within the project is also an important metric. [Mikeal Rogers of the Node.js community][2] explains: “The shift that we made was to create a support system and an education system to take a user and turn them into a contributor, first at a very low level, and educate them to bring them into the committer pool and eventually into the maintainer pool. The end result of this is that we have a wide range of skill sets. Rather than trying to attract phenomenal developers, we’re creating new phenomenal developers.”

|

||||

|

||||

Whatever metrics you choose, don’t forget why you made them metrics in the first place. I find a helpful question to ask is: “What am I going to do with this number?” If the answer is to just put it in a report or in a press release, that’s not a great answer. Metrics should be measurements that tell you either that you’re on the right path or that you need to take specific actions to course-correct.

|

||||

|

||||

For this reason, Stormy Peters, who handles community leads at Red Hat, [argues for keeping it simple][3]. She writes, “It’s much better to have one or two key metrics than to worry about all the possible metrics. You can capture all the possible metrics, but as a project, you should focus on moving one. It’s also better to have a simple metric that correlates directly to something in the real world than a metric that is a complicated formula or ration between multiple things. As project members make decisions, you want them to be able to intuitively feel whether or not it will affect the project’s key metric in the right direction.”

|

||||

|

||||

The article is adapted from [How Open Source Ate Software][4] by Gordon Haff (Apress 2018).

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/1/metrics-guide-open-source-projects

|

||||

|

||||

作者:[Gordon Haff][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/ghaff

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://community.redhat.com/blog/2014/07/when-metrics-go-wrong/

|

||||

[2]: https://opensource.com/article/17/3/nodejs-community-casual-contributors

|

||||

[3]: https://medium.com/open-source-communities/3-important-things-to-consider-when-measuring-your-success-50e21ad82858

|

||||

[4]: https://www.apress.com/us/book/9781484238936

|

||||

@ -0,0 +1,76 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (You (probably) don't need Kubernetes)

|

||||

[#]: via: (https://arp242.net/weblog/dont-need-k8s.html)

|

||||

[#]: author: (Martin Tournoij https://arp242.net/)

|

||||

|

||||

You (probably) don't need Kubernetes

|

||||

======

|

||||

|

||||

This may perhaps be an unpopular opinion, but the overwhelming majority of companies are probably better off not using k8s.

|

||||

|

||||

You know those old “Hello world according to programmer skill” jokes that start with `printf("hello, world\n")` for a junior programmer and end with some convoluted Java OOP design pattern solution for senior software architect engineer? This is kind of like that.

|

||||

|

||||

* Junior sysops

|

||||

`./binary`

|

||||

* Experienced sysops

|

||||

`./binary` on EC2.

|

||||

* devops

|

||||

Self-deployed CI pipeline to run `./binary` on EC2.

|

||||

* Senior cloud orchestration engineer

|

||||

k8s orchestrated self-deployed CI pipeline to run `./binary` on E2C platform.

|

||||

|

||||

|

||||

|

||||

¯\\_(ツ)_/¯

|

||||

|

||||

That doesn’t mean that Kubernetes or any of these things are bad per se, just as Java or OOP aren’t bad per se, but rather that they’re horribly misapplied in many cases, just as using several Java OOP design patterns are horribly misapplied to a hello world program. For most companies the sysops requirements are fundamentally not very complex, and applying k8s to them makes litle sense.

|

||||

|

||||

Complexity creates work by its very nature, and I’m skeptical that using k8s is a time-saver for most users. It’s like spending a day on a script to automate some 10-minute task that you do once a month. That’s not a good time investment (especially since the chances are you’ll have to invest further time in the future by expanding or debugging that script at some point).

|

||||

|

||||

Your deployments probably should be automated – lest you [end up like Knightmare][1] – but k8s can often be replaced by a simple shell script.

|

||||

|

||||

In our own company the sysops team spent a lot of time setting up k8s. They also had to spend a lot of time on updating to a newer version a while ago (1.6 ➙ 1.8). And the result is something no one really understands without really diving in to k8s, and even then it’s hard (those YAML files, yikes!)

|

||||

|

||||

Before I could debug and fix deploy issues myself. Now that’s a lot harder. I understand the basic concepts, but that’s not all that useful when actually debugging practical issues. I don’t deal with k8s often enough to justify learning this.

|

||||

|

||||

That k8s is really hard is not a novel insight, which is why there are a host of “k8s made easy” solutions out there. The idea of adding another layer on top of k8s to “make it easier” strikes me as, ehh, unwise. It’s not like that complexity disappears; you’ve just hidden it.

|

||||

|

||||

I have said this many times before: when determining if something is “easy” then my prime concern is not how easy something is to write, but how easy something is to debug when things fail. Wrapping k8s will not make things easier to debug, quite the opposite: it will make it even harder.

|

||||

|

||||

There is a famous Blaise Pascal quote:

|

||||

|

||||

> All human evil comes from a single cause, man’s inability to sit still in a room.

|

||||

|

||||

k8s – and to lesser extent, Docker – seem to be an example of that. A lot of people seem lost in the excitement of the moment and are “k8s al the things!”, just as some people were lost in the excitement when Java OOP was new, so everything has to be converted from the “old” way to the “new” ones, even though the “old” ways still worked fine.

|

||||

|

||||

Sometimes the IT industry is pretty silly.

|

||||

|

||||

Or to summarize this post [with a Tweet][2]:

|

||||

|

||||

> 2014 - We must adopt #microservices to solve all problems with monoliths

|

||||

> 2016 - We must adopt #docker to solve all problems with microservices

|

||||

> 2018 - We must adopt #kubernetes to solve all problems with docker

|

||||

|

||||

You can mail me at [martin@arp242.net][3] or [create a GitHub issue][4] for feedback, questions, etc.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://arp242.net/weblog/dont-need-k8s.html

|

||||

|

||||

作者:[Martin Tournoij][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://arp242.net/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://dougseven.com/2014/04/17/knightmare-a-devops-cautionary-tale/

|

||||

[2]: https://twitter.com/sahrizv/status/1018184792611827712

|

||||

[3]: mailto:martin@arp242.net

|

||||

[4]: https://github.com/Carpetsmoker/arp242.net/issues/new

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

Getting started with Turtl, an open source alternative to Evernote

|

||||

======

|

||||

|

||||

|

||||

@ -1,52 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Powers of two, powers of Linux: 2048 at the command line)

|

||||

[#]: via: (https://opensource.com/article/18/12/linux-toy-2048)

|

||||

[#]: author: (Jason Baker https://opensource.com/users/jason-baker)

|

||||

|

||||

Powers of two, powers of Linux: 2048 at the command line

|

||||

======

|

||||

Looking for a terminal-based game to pass the time? Look no further than 2048-cli.

|

||||

|

||||

|

||||

Hello and welcome to today's installment of the Linux command-line toys advent calendar. Every day, we look at a different toy for your terminal: it could be a game or any simple diversion that helps you have fun.

|

||||

|

||||

Maybe you have seen various selections from our calendar before, but we hope there’s at least one new thing for everyone.

|

||||

|

||||

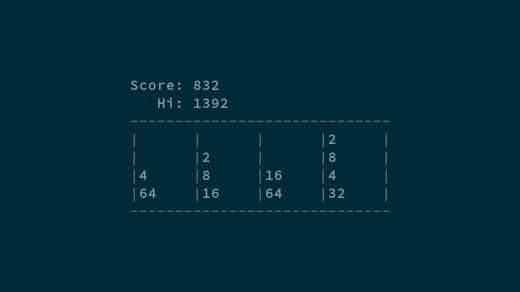

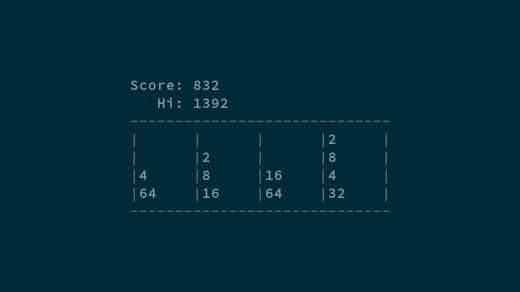

Today's toy is a [command-line version][1] of one of my all-time favorite casual games, [2048][2] (which itself is a clone of another clone).

|

||||

|

||||

To play, you just slide blocks up, down, left, and right to combine matching pairs and increment numbers, until you've made a block that is 2048 in size. The catch (and the challenge), is that you can't just move one block; instead, you move every block on the screen.

|

||||

|

||||

It's simple, fun, and easy to get lost in it for hours. This 2048 clone, [2048-][1][cli][1], is by Marc Tiehuis and written in C, and made available as open source under an MIT license. You can find the source code [on GitHub][1], where you can also get installation instructions for your platform. Since it was packaged for Fedora, for me, installing it was as simple as:

|

||||

|

||||

```

|

||||

$ sudo dnf install 2048-cli

|

||||

```

|

||||

|

||||

That's it, have fun!

|

||||

|

||||

|

||||

|

||||

Do you have a favorite command-line toy that you think I ought to profile? The calendar for this series is mostly filled out but I've got a few spots left. Let me know in the comments below, and I'll check it out. If there's space, I'll try to include it. If not, but I get some good submissions, I'll do a round-up of honorable mentions at the end.

|

||||

|

||||

Check out yesterday's toy, [Play Tetris at your Linux terminal][3], and check back tomorrow for another!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/12/linux-toy-2048

|

||||

|

||||

作者:[Jason Baker][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/jason-baker

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://github.com/tiehuis/2048-cli

|

||||

[2]: https://github.com/gabrielecirulli/2048

|

||||

[3]: https://opensource.com/article/18/12/linux-toy-tetris

|

||||

@ -0,0 +1,131 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (s-tui: A Terminal Tool To Monitor CPU Temperature, Frequency, Power And Utilization In Linux)

|

||||

[#]: via: (https://www.2daygeek.com/s-tui-stress-terminal-ui-monitor-linux-cpu-temperature-frequency/)

|

||||

[#]: author: (Prakash Subramanian https://www.2daygeek.com/author/prakash/)

|

||||

|

||||

s-tui: A Terminal Tool To Monitor CPU Temperature, Frequency, Power And Utilization In Linux

|

||||

======

|

||||

|

||||

By default every Linux administrator would go with **[lm_sensors to monitor CPU temperature][1]**.

|

||||

|

||||

lm_sensors (Linux monitoring sensors) is a free and open-source application that provides tools and drivers for monitoring temperatures, voltage, and fans.

|

||||

|

||||

It’s a CLI utility and if you are looking for alternative tools.

|

||||

|

||||

I would suggest you to go for s-tui.

|

||||

|

||||

It’s a Stress Terminal UI which helps administrator to view CPU temperature with colors.

|

||||

|

||||

### What is s-tui

|

||||

|

||||

s-tui is a terminal UI for monitoring your computer. s-tui allows to monitor CPU temperature, frequency, power and utilization in a graphical way from the terminal.

|

||||

|

||||

Also, shows performance dips caused by thermal throttling, it requires minimal resources and doesn’t requires X-server. It was written in Python and requires root privilege to use this.

|

||||

|

||||

s-tui is a self-contained application which can run out-of-the-box and doesn’t need config files to drive its core features.

|

||||

|

||||

s-tui uses psutil to probe some of your hardware information. If your hardware is not supported, you might not see all the information.

|

||||

|

||||

Running s-tui as root gives access to the maximum Turbo Boost frequency available to your CPU when stressing all cores.

|

||||

|

||||

It uses Stress utility in the background to check the temperature of its components do not exceed their acceptable range by imposes certain types of compute stress on your system.

|

||||

|

||||

Running an overclocked PC is fine as long as it is stable and that the temperature of its components do not exceed their acceptable range.

|

||||

|

||||

There are several programs available to assess system stability through stress testing the system and thereby the overclock level.

|

||||

|

||||

### How to Install s-tui In Linux

|

||||

|

||||

It was written in Python and pip installation is a recommended method to install s-tui on Linux. Make sure you should have installed python-pip package on your system. If no, use the following command to install it.

|

||||

|

||||

For Debian/Ubuntu users, use **[Apt Command][2]** or **[Apt-Get Command][3]** to install pip package.

|

||||

|

||||

```

|

||||

$ sudo apt install python-pip stress

|

||||

```

|

||||

|

||||

For Archlinux users, use **[Pacman Command][4]** to install pip package.

|

||||

|

||||

```

|

||||

$ sudo pacman -S python-pip stress

|

||||

```

|

||||

|

||||

For Fedora users, use **[DNF Command][5]** to install pip package.

|

||||

|

||||

```

|

||||

$ sudo dnf install python-pip stress

|

||||

```

|

||||

|

||||

For CentOS/RHEL users, use **[YUM Command][6]** to install pip package.

|

||||

|

||||

```

|

||||

$ sudo yum install python-pip stress

|

||||

```

|

||||

|

||||

For openSUSE users, use **[Zypper Command][7]** to install pip package.

|

||||

|

||||

```

|

||||

$ sudo zypper install python-pip stress

|

||||

```

|

||||

|

||||

Finally run the following **[pip command][8]** to install s-tui tool in Linux.

|

||||

|

||||

For Python 2.x:

|

||||

|

||||

```

|

||||

$ sudo pip install s-tui

|

||||

```

|

||||

|

||||

For Python 3.x:

|

||||

|

||||

```

|

||||

$ sudo pip3 install s-tui

|

||||

```

|

||||

|

||||

### How to Access s-tui

|

||||

|

||||

As i told in the beginning of the article. It requires root privilege to get all the information from your system. Just run the following command to launch s-tui.

|

||||

|

||||

```

|

||||

$ sudo s-tui

|

||||

```

|

||||

|

||||

![][10]

|

||||

|

||||

By default it enable hardware monitoring and select the “Stress” option to do the stress test on your system.

|

||||

![][11]

|

||||

|

||||

To check other options, navigate to help page.

|

||||

|

||||

```

|

||||

$ s-tui --help

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/s-tui-stress-terminal-ui-monitor-linux-cpu-temperature-frequency/

|

||||

|

||||

作者:[Prakash Subramanian][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.2daygeek.com/author/prakash/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/view-check-cpu-hard-disk-temperature-linux/

|

||||

[2]: https://www.2daygeek.com/apt-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

[3]: https://www.2daygeek.com/apt-get-apt-cache-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

[4]: https://www.2daygeek.com/pacman-command-examples-manage-packages-arch-linux-system/

|

||||

[5]: https://www.2daygeek.com/dnf-command-examples-manage-packages-fedora-system/

|

||||

[6]: https://www.2daygeek.com/yum-command-examples-manage-packages-rhel-centos-systems/

|

||||

[7]: https://www.2daygeek.com/zypper-command-examples-manage-packages-opensuse-system/

|

||||

[8]: https://www.2daygeek.com/install-pip-manage-python-packages-linux/

|

||||

[9]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[10]: https://www.2daygeek.com/wp-content/uploads/2018/12/s-tui-stress-terminal-ui-monitor-linux-cpu-temperature-frequency-1.jpg

|

||||

[11]: https://www.2daygeek.com/wp-content/uploads/2018/12/s-tui-stress-terminal-ui-monitor-linux-cpu-temperature-frequency-2.jpg

|

||||

116

sources/tech/20190104 Managing dotfiles with rcm.md

Normal file

116

sources/tech/20190104 Managing dotfiles with rcm.md

Normal file

@ -0,0 +1,116 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Managing dotfiles with rcm)

|

||||

[#]: via: (https://fedoramagazine.org/managing-dotfiles-rcm/)

|

||||

[#]: author: (Link Dupont https://fedoramagazine.org/author/linkdupont/)

|

||||

|

||||

Managing dotfiles with rcm

|

||||

======

|

||||

|

||||

|

||||

|

||||

A hallmark feature of many GNU/Linux programs is the easy-to-edit configuration file. Nearly all common free software programs store configuration settings inside a plain text file, often in a structured format like JSON, YAML or [“INI-like”][1]. These configuration files are frequently found hidden inside a user’s home directory. However, a basic ls won’t reveal them. UNIX standards require that any file or directory name that begins with a period (or “dot”) is considered “hidden” and will not be listed in directory listings unless requested by the user. For example, to list all files using the ls program, pass the -a command-line option.

|

||||

|

||||

Over time, these configuration files become highly customized, and managing them becomes increasingly more challenging as time goes on. Not only that, but keeping them synchronized between multiple computers is a common challenge in large organizations. Finally, many users find a sense of pride in their unique configuration settings and want an easy way to share them with friends. That’s where **rcm** steps in.

|

||||

|

||||

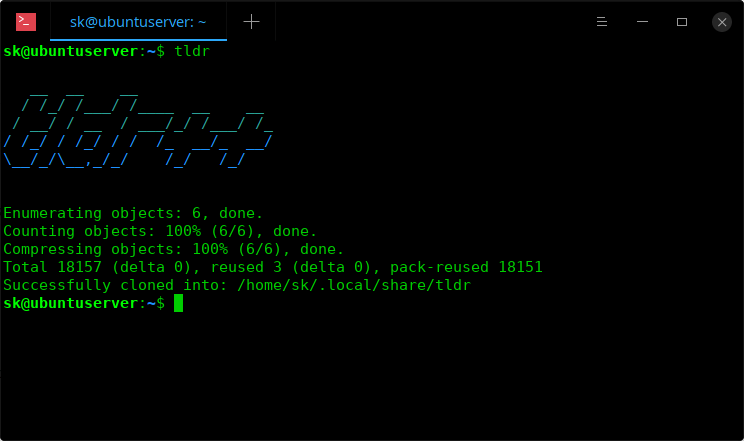

**rcm** is a “rc” file management suite (“rc” is another convention for naming configuration files that has been adopted by some GNU/Linux programs like screen or bash). **rcm** provides a suite of commands to manage and list files it tracks. Install **rcm** using **dnf**.

|

||||

|

||||

### Getting started

|

||||

|

||||

By default, **rcm** uses ~/.dotfiles for storing all the dotfiles it manages. A managed dotfile is actually stored inside ~/.dotfiles, and a symlink is placed in the expected file’s location. For example, if ~/.bashrc is tracked by **rcm** , a long listing would look like this.

|

||||

|

||||

```

|

||||

[link@localhost ~]$ ls -l ~/.bashrc

|

||||

lrwxrwxrwx. 1 link link 27 Dec 16 05:19 .bashrc -> /home/link/.dotfiles/bashrc

|

||||

[link@localhost ~]$

|

||||

```

|

||||

|

||||

**rcm** consists of 4 commands:

|

||||

|

||||

* mkrc – convert a file into a dotfile managed by rcm

|

||||

* lsrc – list files managed by rcm

|

||||

* rcup – synchronize dotfiles managed by rcm

|

||||