mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-18 02:00:18 +08:00

Translated by cposture

This commit is contained in:

commit

156bef4209

@ -0,0 +1,104 @@

How to Best Manage Encryption Keys on Linux
=============================================

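Storing SSH encryption keys and remembering passwords has always been a headache. Unfortunately, in a world full of malicious hackers and exploits, basic security precautions are essential. Most ordinary users can only memorize their passwords, perhaps with the help of a good password manager, and we remind them not to use the same password on every site. But those of us in IT need to take this up a level: we need to use encryption keys such as SSH keys, not just passwords.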

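Consider a scenario: I have a server running in the cloud that serves as my main git repository. I have several work computers, and all of them need to log in to that central server to push and pull. I have set git up to use SSH. When git uses SSH, it actually logs in to the server over SSH, just as if you had opened a command line on the server with the ssh command. To set this up, I create a config file in my .ssh directory containing a host entry with a name for the server, the host name, the login user, and the path to the key file. I can then test the configuration by typing the following command: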

ssh gitserver


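Very quickly I get a bash shell on the server. Now I can configure git to use the same host entry and the stored key to log in. That is simple, except for one problem: every computer I use to log in to the server needs a key file, which means key files end up in many places. I store these key files on this computer, and my other computers need them too. Just like users with far too many passwords, we IT people are drowning in key files. What can we do?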

### Sorting Things Out

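Before we help you manage your keys, you need some basic knowledge of how keys are used and an understanding of why we ask the questions below. The first and most important prerequisite is knowing where your public and private keys belong. We will assume you know: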

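1. The difference between a public key and a private key;
2. Why you cannot generate a private key from a public key, but can do the reverse;
3. The purpose of the `authorized_keys` file and what it contains;
4. How to use a private key to log in to a server whose `authorized_keys` file holds the corresponding public key.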

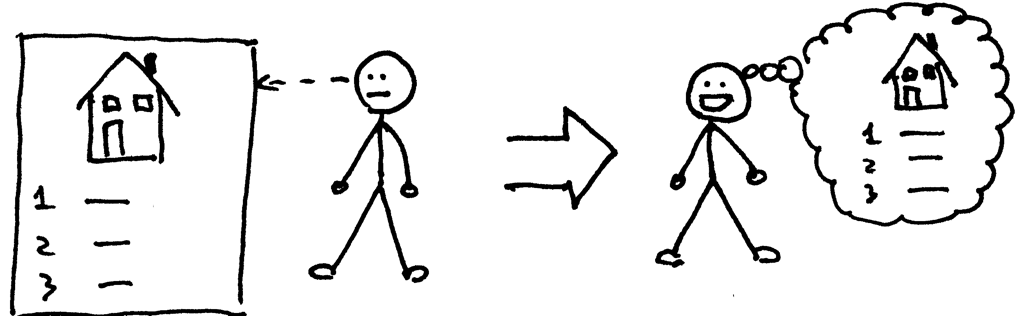

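Here is an example. When you create a cloud server on Amazon Web Services, you must supply an SSH key to use for connecting to it. Every key has a public part (the public key) and a private part (the private key). Since you want the server to be secure, at first glance it might seem that you should put the private key on the server and carry the public key yourself. After all, you don't want the server to be publicly accessible, right? In practice it is exactly the opposite.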

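You put your public key on the AWS server and keep the private key that you use to log in. You protect the private key and keep it under your control rather than on some remote server, as the figure above shows.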

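Here is why: if other people learn the public key, they cannot use it to log in to the server, because they do not have the private key. Further, if someone breaks into your server, all they will find is the public key, and they cannot generate the private key from it. And if you use the same public key on other servers, they cannot use it to log in to those machines either.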

That is why you put your public key on the servers you log in to over SSH. You hold the private keys, and you do not let them out of your control.

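But there is still a complication. Consider my git server example. I have some decisions to make. Sometimes I log in to a development server hosted elsewhere, and from that server I need to connect to my git server. How does the development server connect to the git server? By using a private key, obviously, and that is the problem: in this scenario I have to put a private key on a server hosted somewhere else, which is quite dangerous.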

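A further scenario: what if I use one key to log in to many servers? If an intruder obtains that private key, they gain access to the whole network of servers and can do serious damage. That is very bad.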

This raises another question: should I really use the same key on all those other servers? For the reason I just described, that would be very dangerous.

In the end this sounds messy, but there are some simple solutions. Let's get organized.

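(Note that private keys are needed in many places besides logging in to servers, but the scenario I have described shows the kinds of problems you face when you use keys.)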

### Regarding Passphrases

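When you create a key, you can choose whether to protect it with a passphrase. With a passphrase, the private key file itself is encrypted. For example, if your public key is stored on a server and you use the private key to log in to that server, you will be prompted for the passphrase. Without the passphrase, the key cannot be used. Alternatively, you can configure the key with no passphrase, in which case the key file alone is enough to log in.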

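Going without a passphrase is generally more convenient, but in many cases I strongly recommend using one: if the private key file is stolen, the thief still cannot use it without discovering the passphrase. In theory this buys you time, because you can remove the public key from the server before the attacker finds the passphrase, which protects your system. There are other reasons to use a passphrase, but in many situations this is the one I value most. (For example, I have VNC software on my Android tablet, and the tablet holds my key. If the tablet is stolen, I will immediately remove the public key from the server so that the private key is useless, passphrase or not.) In some cases I do not use a passphrase, because the server I am logging in to holds no valuable data. It depends on the situation.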

### Server Infrastructure

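How you design your server infrastructure affects how you manage your keys. For example, if many users log in, you need to decide whether each user gets a separate key. (In general they should; you do not want users sharing private keys. That way, when a user leaves the organization or loses your trust, you can delete that user's public key without having to generate new keys for everyone else. Likewise, with shared keys users could log in as one another, which is even worse.) Another question is how you provision your servers. Do you use a tool such as Puppet to configure large numbers of servers? Do you create many servers from your own images? When you replicate a server, does every copy get the same key? Different cloud server software lets you configure this as you choose: the servers can share one key, or a new key can be generated for each.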

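If you work with replicated servers, it can be confusing for users to need different keys to log in to two systems that are otherwise nearly identical. On the other hand, servers that share a key are a security risk. Third, if your keys are needed for something other than logging in (such as mounting an encrypted drive), you will need the same key in many places. As you can see, whether to use the same key on different servers is not a decision I can make for you; there are trade-offs, and you need to decide for yourself what is best.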

In the end, you are likely to have:

- Multiple servers that need to be logged in to
- Multiple users logging in to different servers, each with their own key
- Multiple keys per user for logging in to different servers

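(If you use keys in other situations, the same general ideas apply to how the keys are used, how many are needed, whether they are shared, and how you handle the public and private parts.)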

### Security Approach

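Knowing your infrastructure and your particular situation, you need to put together a key management plan that guides how you distribute and store your keys. For example, as I mentioned earlier, if my tablet is stolen I will remove its public key from my server, ideally before the tablet can be used to access the server. Accordingly, my overall plan includes the following: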

1. Private keys may be kept on mobile devices, but they must have a passphrase;
2. There must be a way to remove public keys from my servers quickly.

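In your situation, you may decide that you do not want passphrases on a system you log in to often; for example, a test machine that developers log in to many times a day. That is fine, but you will need to adjust your rules a little. You might add a rule that nobody may log in to that machine from a mobile device. In other words, build your policy around your own circumstances, and do not assume that one plan fits all.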

### Software

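As for software, surprisingly there are not many good, solid software solutions for storing and managing private keys. But should there be? Consider this: if a program stores all the keys for all your servers and is locked with a single quick password, are your keys really safe? Similarly, if your keys sit on your hard drive where the SSH program can reach them quickly, does key management software really provide any protection?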

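For overall infrastructure and for creating and managing public keys, however, there are many solutions. I have already mentioned Puppet. In the Puppet world you create modules to manage your servers in different ways. The idea is that servers are dynamic and need not be exact copies of one another. [Here is one clever approach](http://manuel.kiessling.net/2014/03/26/building-manageable-server-infrastructures-with-puppet-part-4/) that uses the same keys on different servers but a different Puppet module for each user. It may or may not suit you.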

Another option is to shift gears entirely. In the Docker world you can take a different approach, as described in [this blog post about SSH and Docker](http://blog.docker.com/2014/06/why-you-dont-need-to-run-sshd-in-docker/).

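But what about managing private keys? If you search, you will not find many options, for the reason I gave earlier: the private keys are on your hard drive, and a management program may not be able to add much security. Still, this is how I manage my own keys: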

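First, I have many host entries in my `.ssh/config` file. There is an entry for every host I log in to, and sometimes more than one entry for a single host, which happens when I have several ways of logging in. For the server that hosts my git repository I have two entries: one restricted to git, and one for general-purpose bash access. The git entry is heavily restricted on that machine. Remember the git keys I keep on remote development machines? Those keys can log in to one of my servers, but the account they use is severely limited.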

Second, most of my private keys have a passphrase. (If you find yourself typing a passphrase again and again, consider using [ssh-agent](http://blog.docker.com/2014/06/why-you-dont-need-to-run-sshd-in-docker/).)

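Third, there are some servers I want to guard more carefully, and I do not put host entries for them in my SSH config file. This is closer to social engineering: the key files are still there, but it may take an attacker longer to find them and work out which machines they belong to. In those cases I simply type out the long SSH command by hand. (It is not that bad.)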

As you can see, I do not use any special software to manage these private keys.

### One Size Doesn't Fit All

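We occasionally get questions at linux.com asking for recommendations of good key management software. But take a step back: the question really needs to be reframed, because there is no universal solution. The questions you ask should be based on your own situation. Are you simply looking for a place to store your key files? Are you looking for a way to manage multiple users, each of whom needs their own public key inserted into the `authorized_keys` file?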

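In this article I have covered the basics, and I hope that by now you understand how to manage your keys. Once you ask the right questions, whatever software you are looking for (if you need additional software at all) will be easier to find.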

------------------------------------------------------------------------------


via: http://www.linux.com/learn/tutorials/838235-how-to-best-manage-encryption-keys-on-linux


Author: [Jeff Cogswell][a]

Translator: [mudongliang](https://github.com/mudongliang)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]:http://www.linux.com/community/forums/person/62256


51

published/20160629 USE TASK MANAGER EQUIVALENT IN LINUX.md

Normal file


@ -0,0 +1,51 @@

Using the Task Manager Equivalent in Linux
====================================

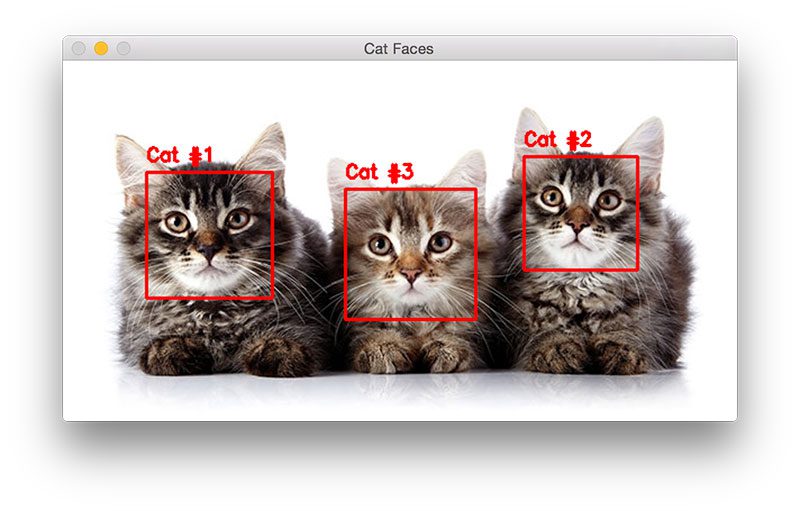

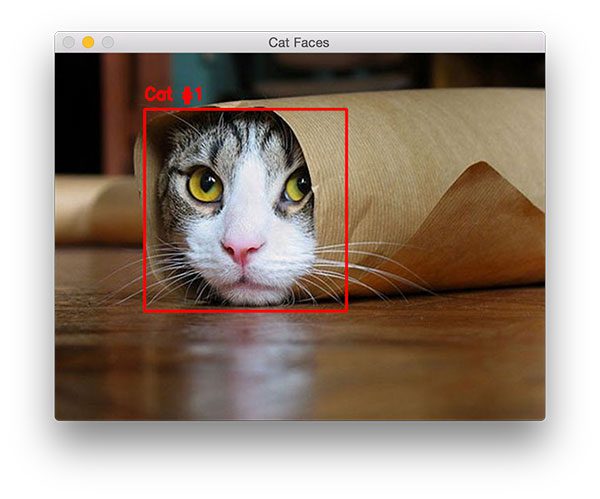

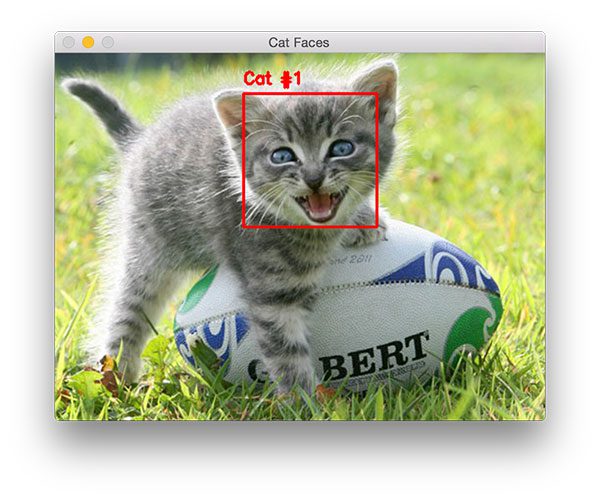

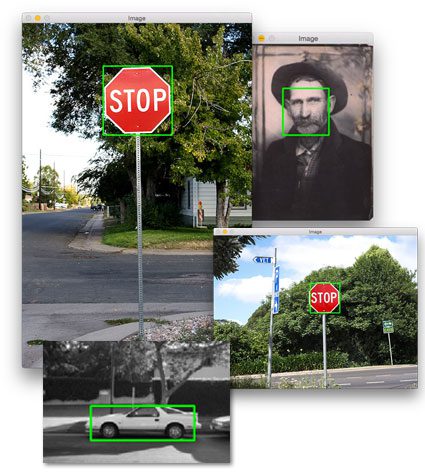

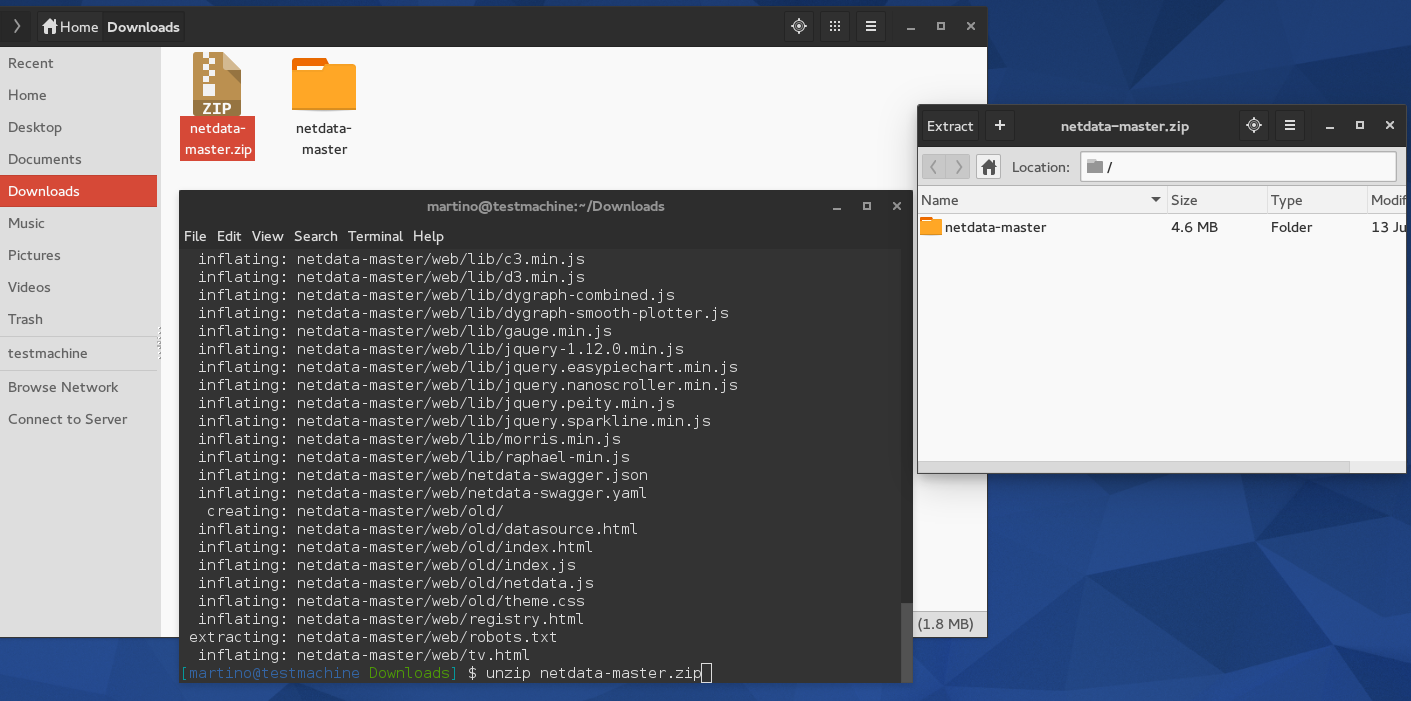

Many Linux beginners ask, "**Is there a task manager for Linux?**" and "**How do I open the task manager on Linux?**"

Anyone coming from Windows knows how useful the task manager is. In Windows you press `Ctrl+Alt+Del` to open it. The task manager shows all running processes and the memory they consume, and lets you select a process and kill it.

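When you are new to Linux, you will look for **the Linux equivalent of the task manager** too. Linux experts prefer the command line for finding processes, memory consumption, and so on, but you don't have to do it that way, at least not while you are a beginner.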

Every major Linux distribution has an equivalent of the task manager. It is usually called System Monitor, though the exact name depends on your distribution and the [desktop environment][1] it uses.

In this article we will see how to find and use the task manager on Linux with GNOME as the [desktop environment][2].

### The task manager equivalent on Linux with the GNOME desktop

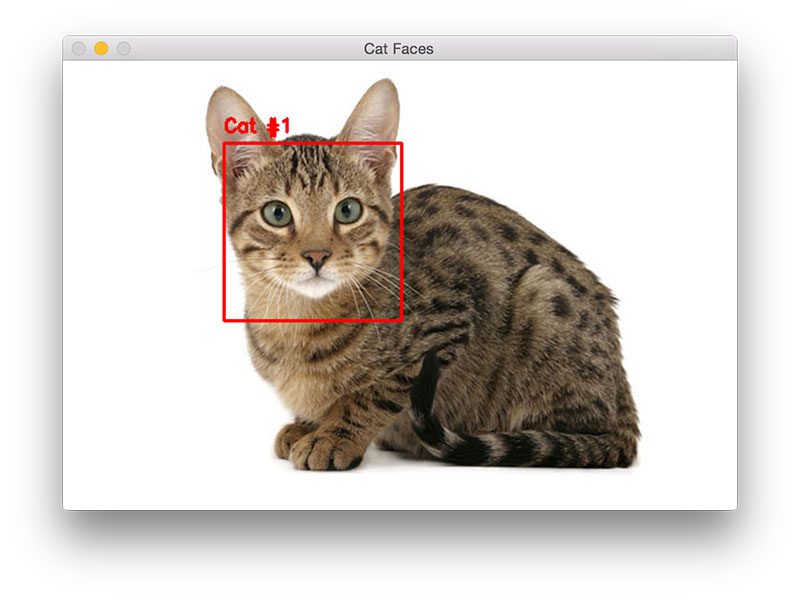

In GNOME, press the Super key (the Windows key) and search for the task manager:

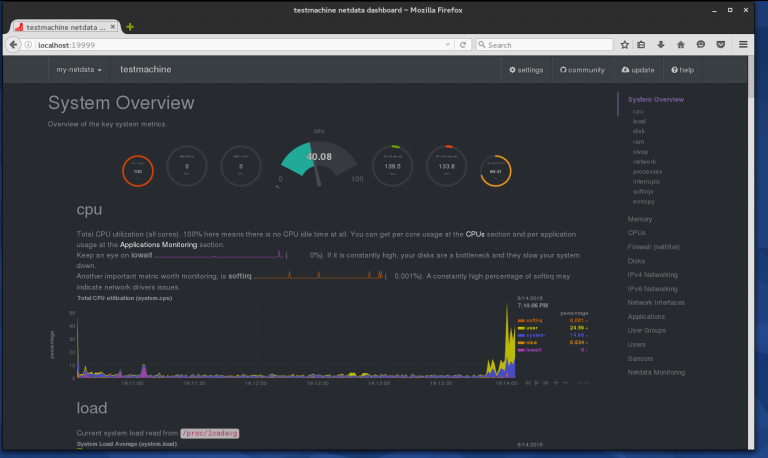

When you start System Monitor, it shows all running processes and the memory they consume.

You can select a process and click "End Process" to kill it.

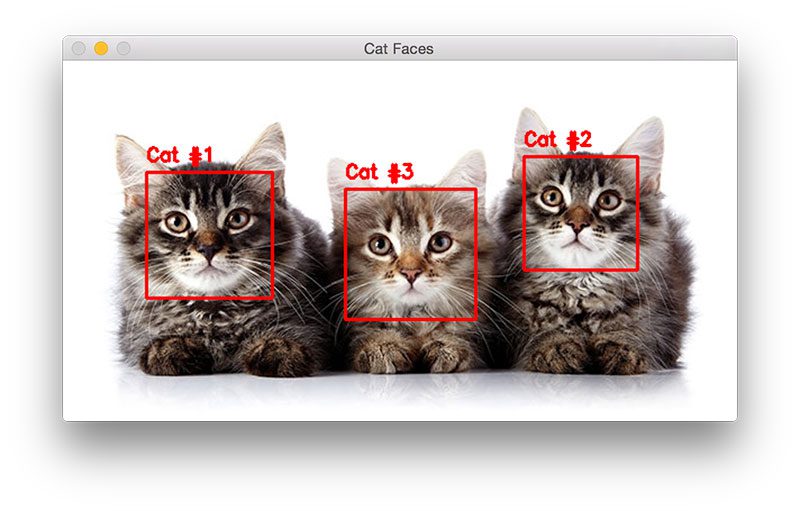

The Resources tab also shows statistics such as per-core CPU usage, memory usage, and network usage.

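That is the graphical way. If you prefer the command line, run the `top` command in a terminal to see all running processes and their memory consumption. You can also easily [kill processes][3] from the command line.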

That is all you need to know about the task manager in Fedora Linux. I hope you found this tutorial helpful; if you have any questions, feel free to ask.

--------------------------------------------------------------------------------


via: https://itsfoss.com/task-manager-linux/


Author: [Abhishek Prakash][a]

Translator: [xinglianfly](https://github.com/xinglianfly)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://itsfoss.com/author/abhishek/
[1]: https://wiki.archlinux.org/index.php/desktop_environment
[2]: https://itsfoss.com/best-linux-desktop-environments/
[3]: https://itsfoss.com/how-to-find-the-process-id-of-a-program-and-kill-it-quick-tip/

@ -1,77 +0,0 @@


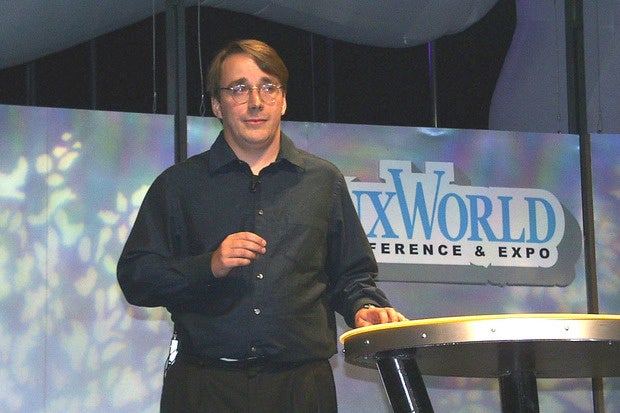

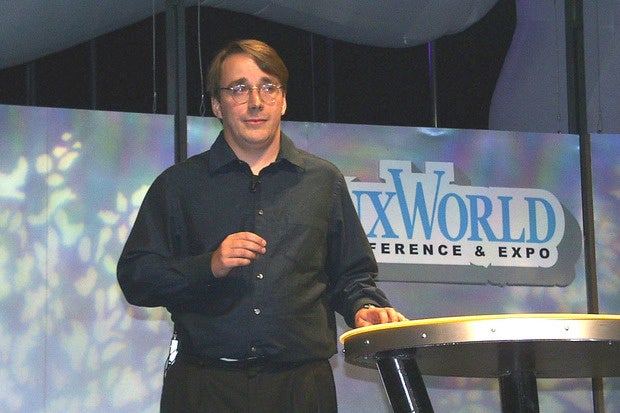

How bad a boss is Linus Torvalds?
================================================================================

*Linus Torvalds addressed a packed auditorium of Linux enthusiasts during his speech at the LinuxWorld show in San Jose, California, on August 10, 1999. Credit: James Niccolai*


**It depends on context. In the world of software development, he’s what passes for normal. The question is whether that situation should be allowed to continue.**


I've known Linus Torvalds, Linux's inventor, for over 20 years. We're not chums, but we like each other.


Lately, Torvalds has been getting a lot of flak for his management style. Linus doesn't suffer fools gladly. He has one way of judging people in his business of developing the Linux kernel: How good is your code?

Nothing else matters. As Torvalds said earlier this year at the Linux.conf.au Conference, "I'm not a nice person, and I don't care about you. [I care about the technology and the kernel][1] -- that's what's important to me."


Now, I can deal with that kind of person. If you can't, you should avoid the Linux kernel community, where you'll find a lot of this kind of meritocratic thinking. Which is not to say that I think everything in Linuxland is hunky-dory and should be impervious to calls for change. A meritocracy I can live with; a bastion of male dominance where women are subjected to scorn and disrespect is a problem.


That's why I see the recent brouhaha about Torvalds' management style -- or more accurately, his total indifference to the personal side of management -- as nothing more than standard operating procedure in the world of software development. And at the same time, I see another instance that has come to light as evidence of a need for things to really change.


The first situation arose with the [release of Linux 4.3][2], when Torvalds used the Linux Kernel Mailing List to tear into a developer who had inserted some networking code that Torvalds thought was -- well, let's say "crappy." "[[A]nd it generates [crappy] code.][3] It looks bad, and there's no reason for it." He goes on in this vein for quite a while. Besides the word "crap" and its earthier synonym, he uses the word "idiotic" pretty often.


Here's the thing, though. He's right. I read the code. It's badly written and it does indeed seem to have been designed to use the new "overflow_usub()" function just for the sake of using it.


Now, some people see this diatribe as evidence that Torvalds is a bad-tempered bully. I see a perfectionist who, within his field, doesn't put up with crap.


Many people have told me that this is not how professional programmers should act. People, have you ever worked with top developers? That's exactly how they act, at Apple, Microsoft, Oracle and everywhere else I've known them.


I've heard Steve Jobs rip a developer to pieces. I've cringed while a senior Oracle developer lead tore into a room of new programmers like a piranha through goldfish.


In Accidental Empires, his classic book on the rise of PCs, Robert X. Cringely described Microsoft's software management style when Bill Gates was in charge as a system where "Each level, from Gates on down, screams at the next, goading and humiliating them." Ah, yes, that's the Microsoft I knew and hated.


The difference between the leaders at big proprietary software companies and Torvalds is that he says everything in the open for the whole world to see. The others do it in private conference rooms. I've heard people claim that Torvalds would be fired in their company. Nope. He'd be right where he is now: on top of his programming world.


Oh, and there's another difference. If you get, say, Larry Ellison mad at you, you can kiss your job goodbye. When you get Torvalds angry at your work, you'll get yelled at in an email. That's it.


You see, Torvalds isn't anyone's boss. He's the guy in charge of a project with about 10,000 contributors, but he has zero hiring and firing authority. He can hurt your feelings, but that's about it.


That said, there is a serious problem within both open-source and proprietary software development circles. No matter how good a programmer you are, if you're a woman, the cards are stacked against you.


No case shows this better than that of Sarah Sharp, an Intel developer and formerly a top Linux programmer. [In a post on her blog in October][4], she explained why she had stopped contributing to the Linux kernel more than a year earlier: "I finally realized that I could no longer contribute to a community where I was technically respected, but I could not ask for personal respect.... I did not want to work professionally with people who were allowed to get away with subtle sexist or homophobic jokes."


Who can blame her? I can't. Torvalds, like almost every software manager I've ever known, I'm sorry to say, has permitted a hostile work environment.


He would probably say that it's not his job to ensure that Linux contributors behave with professionalism and mutual respect. He's concerned with the code and nothing but the code.


As Sharp wrote:


> I have the utmost respect for the technical efforts of the Linux kernel community. They have scaled and grown a project that is focused on maintaining some of the highest coding standards out there. The focus on technical excellence, in combination with overloaded maintainers, and people with different cultural and social norms, means that Linux kernel maintainers are often blunt, rude, or brutal to get their job done. Top Linux kernel developers often yell at each other in order to correct each other's behavior.
>
> That's not a communication style that works for me. …
>
> Many senior Linux kernel developers stand by the right of maintainers to be technically and personally brutal. Even if they are very nice people in person, they do not want to see the Linux kernel communication style change.

She's right.


Where I differ from other observers is that I don't think that this problem is in any way unique to Linux or open-source communities. With five years of work in the technology business and 25 years as a technology journalist, I've seen this kind of immature boy behavior everywhere.


It's not Torvalds' fault. He's a technical leader with a vision, not a manager. The real problem is that there seems to be no one in the software development universe who can set a supportive tone for teams and communities.


Looking ahead, I hope that companies and organizations, such as the Linux Foundation, can find a way to empower community managers or other managers to encourage and enforce civil behavior.


We won't, unfortunately, find that kind of managerial finesse in our pure technical or business leaders. It's not in their DNA.


--------------------------------------------------------------------------------


via: http://www.computerworld.com/article/3004387/it-management/how-bad-a-boss-is-linus-torvalds.html


Author: [Steven J. Vaughan-Nichols][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]:http://www.computerworld.com/author/Steven-J.-Vaughan_Nichols/
[1]:http://www.computerworld.com/article/2874475/linus-torvalds-diversity-gaffe-brings-out-the-best-and-worst-of-the-open-source-world.html
[2]:http://www.zdnet.com/article/linux-4-3-released-after-linus-torvalds-scraps-brain-damage-code/
[3]:http://lkml.iu.edu/hypermail/linux/kernel/1510.3/02866.html
[4]:http://sarah.thesharps.us/2015/10/05/closing-a-door/

@ -1,49 +0,0 @@

Growing a career alongside Linux
==================================

My Linux story started in 1998 and continues today. Back then, I worked for The Gap managing thousands of desktops running [OS/2][1] (and a few years later, [Warp 3.0][2]). As an OS/2 guy, I was really happy then. The desktops hummed along and it was quite easy to support thousands of users with the tools The Gap had built. Changes were coming, though.

In November of 1998, I received an invitation to join a brand new startup which would focus on Linux in the enterprise. This startup became quite famous as [Linuxcare][3].

### My time at Linuxcare


I had played with Linux a bit, but had never considered delivering it to enterprise customers. Mere months later (which is a turn of the corner in startup time and space), I was managing a line of business that let enterprises get their hardware, software, and even books certified on a few flavors of Linux that were popular back then.


I supported customers like IBM, Dell, and HP in ensuring their hardware ran Linux successfully. You hear a lot today about preloading Linux on hardware, but way back then I was invited to Dell to discuss getting a laptop certified to run Linux for an upcoming trade show. Very exciting times! We also supported IBM and HP on a number of certification efforts that spanned a few years.

Linux was changing fast, much like it always has. It gained hardware support for more key devices such as sound, network, and graphics. At around that time, I shifted from RPM-based systems to [Debian][4] for my personal use.

### Using Linux through the years


Fast forward some years and I worked at a number of companies that did Linux as hardened appliances, Linux as custom software, and Linux in the data center. By the mid 2000s, I was busy doing consulting for that rather large software company in Redmond around some analysis and verification of Linux compared to their own solutions. My personal use had not changed though—I would still run Debian testing systems on anything I could.


I really appreciated the flexibility of a distribution that floated and was forever updated. Debian is one of the most fun and well supported distributions and has the best community I've ever been a part of.


When I look back at my own adoption of Linux, I remember with fondness the numerous Linux Expo trade shows in San Jose, San Francisco, Boston, and New York in the early and mid 2000s. At Linuxcare we always did fun and funky booths, and walking the show floor always resulted in getting re-acquainted with old friends. Rumors of work were always traded, and the entire thing underscored the fun of using Linux in real endeavors.

The rise of virtualization and cloud has really made the use of Linux even more interesting. When I was with Linuxcare, we partnered with a small 30-person company in Palo Alto. We would drive to their offices and get things ready for a trade show that they would attend with us. Who would have ever known that little startup would become VMware?


I have so many stories, and there were so many people I was so fortunate to meet and work with. Linux has evolved in so many ways and has become so important. And even with its increasing importance, Linux is still fun to use. I think its openness and the ability to modify it have contributed to a legion of new users, which always astounds me.

### Today


I've moved away from doing mainstream Linux things over the past five years. I manage large scale infrastructure projects that include a variety of OSs (both proprietary and open), but my heart has always been with Linux.


The constant evolution and fun of using Linux has been a driving force for me for the past 18 years. I started with the 2.0 Linux kernel and have watched it become what it is now. It's a remarkable thing. An organic thing. A cool thing.

--------------------------------------------------------------------------------


via: https://opensource.com/life/16/3/my-linux-story-michael-perry


Author: [Michael Perry][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

[a]: https://opensource.com/users/mpmilestogo
[1]: https://en.wikipedia.org/wiki/OS/2
[2]: https://archive.org/details/IBMOS2Warp3Collection
[3]: https://en.wikipedia.org/wiki/Linuxcare
[4]: https://www.debian.org/

@ -0,0 +1,73 @@


xinglianfly translate


Writing online multiplayer game with python and asyncio - part 1
===================================================================

Have you ever combined async with Python? Here I’ll tell you how to do it and show it on a [working example][1] - a popular Snake game, designed for multiple players.


[Play game][2]

### 1. Introduction


Massively multiplayer online games are undoubtedly one of the main trends of our century, in both the tech and cultural domains. For a long time, writing a server for an MMO game was associated with massive budgets and complex low-level programming techniques, but this has been changing rapidly in recent years. Modern frameworks based on dynamic languages can handle thousands of parallel user connections on moderate hardware. At the same time, the HTML5 and WebSocket standards have enabled real-time, graphics-based game clients that run directly in the web browser, without any extensions.

Python may not be the most popular tool for creating scalable non-blocking servers, especially compared to node.js. But the latest versions of Python aim to change this. The introduction of the [asyncio][3] library and the special [async/await][4] syntax makes asynchronous code look as straightforward as regular blocking code, which makes Python a worthy choice for asynchronous programming. So I will try to use these new features to demonstrate a way to create an online multiplayer game.

### 2. Getting asynchronous


A game server should handle the maximum possible number of parallel user connections and process them all in real time. The typical solution, creating threads, doesn't solve the problem in this case. Running thousands of threads requires the CPU to switch between them all the time (this is called context switching), which creates a large overhead and makes the approach very inefficient. Processes are even worse because, in addition, they occupy too much memory.

In Python there is one more problem: the regular Python interpreter (CPython) is not designed to be multithreaded; it aims for maximum performance in single-threaded apps instead. That's why it uses the GIL (global interpreter lock), a mechanism that doesn't allow multiple threads to run Python code at the same time, to prevent uncontrolled use of the same shared objects. Normally the interpreter switches to another thread when the currently running thread is waiting for something, usually a response from I/O (a response from a web server, for example). This allows non-blocking I/O operations in your app, because every operation blocks only one thread instead of blocking the whole server. However, it also makes the general idea of multithreading nearly useless, because you cannot execute Python code in parallel, even on a multi-core CPU. At the same time, it is entirely possible to have non-blocking I/O in a single thread, which eliminates the need for heavy context switching.

In fact, single-threaded non-blocking I/O is something you can do in pure Python. All you need is the standard [select][5] module, which lets you write an event loop that waits for I/O from non-blocking sockets. However, this approach requires you to define all the app logic in one place, and soon your app becomes a very complex state machine. There are frameworks that simplify this task; the most popular are [tornado][6] and [twisted][7]. They are used to implement complex protocols using callback methods (similar to node.js). The framework runs its own event loop and invokes your callbacks on the defined events. While this may be the way to go for some, it still requires programming in callback style, which makes your code fragmented. Compare this to just writing synchronous code and running multiple copies concurrently, as we would with normal threads. Why wouldn't this be possible in one thread?
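To make the select-based approach concrete, here is a minimal sketch of a single-threaded event loop with callbacks. It is only an illustration under stated assumptions: the helper names (`run_echo_once`, `on_server_readable`, `on_client_readable`) are made up for this example, and a local `socket.socketpair()` stands in for real network connections.

```python
import select
import socket

def run_echo_once():
    """Send one message through a socket pair using a single-threaded event loop."""
    # socketpair() gives two connected sockets, so no network setup is needed.
    client, server = socket.socketpair()
    client.setblocking(False)
    server.setblocking(False)
    received = []

    def on_server_readable():
        # "App logic" for the server side: echo the data back, upper-cased.
        server.send(server.recv(1024).upper())

    def on_client_readable():
        received.append(client.recv(1024))

    # Map each socket to the callback that handles its incoming data.
    callbacks = {server: on_server_readable, client: on_client_readable}

    client.send(b"ping")
    # The event loop: wait until some socket is readable, then dispatch.
    while not received:
        readable, _, _ = select.select(list(callbacks), [], [], 1.0)
        for sock in readable:
            callbacks[sock]()

    client.close()
    server.close()
    return received[0]

result = run_echo_once()
print(result)
```

Even in this tiny case the logic is split across callbacks and a dispatch table, which hints at why larger apps written this way turn into state machines.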


This is where the concept of microthreads comes in. The idea is to have concurrently running tasks in one thread. When you call a blocking function in one task, behind the scenes it calls a "manager" (or "scheduler") that runs an event loop. When an event is ready to be processed, the manager passes execution to the task waiting for it. That task runs until it reaches a blocking call, and then it returns execution to the manager again.
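The manager-and-tasks idea can be sketched with plain generators. This is a toy illustration, not how any real library is implemented: the names `task` and `run` are invented for this example, `yield` stands in for a blocking call, and the manager simply resumes tasks round-robin instead of waiting for real I/O events.

```python
from collections import deque

def task(name, steps, log):
    for i in range(steps):
        log.append("{} step {}".format(name, i))
        yield  # stands in for a blocking call: hand control back to the manager

def run(tasks):
    """Round-robin manager: resume each task until it yields or finishes."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run the task up to its next yield
        except StopIteration:
            continue               # task finished, drop it
        ready.append(current)      # task yielded, schedule it again

log = []
run([task("A", 2, log), task("B", 3, log)])
print(log)
```

The log shows the two tasks taking turns inside one thread; each runs only between the points where it gives up control.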


>Microthreads are also called lightweight threads or green threads (a term which came from Java world). Tasks which are running concurrently in pseudo-threads are called tasklets, greenlets or coroutines.


One of the first implementations of microthreads in Python was [Stackless Python][8]. It became famous because it is used in the very successful online game [EVE Online][9]. This MMO game boasts a persistent universe where thousands of players are involved in different activities, all happening in real time. Stackless is a standalone Python interpreter that replaces the standard function call stack and controls the flow directly to keep context-switching costs to a minimum. Though very effective, this solution remained less popular than "soft" libraries that work with the standard interpreter. Packages like [eventlet][10] and [gevent][11] patch the standard I/O library so that I/O functions pass execution to their internal event loop. This turns normal blocking code into non-blocking code in a very simple way. The downside of this approach is that it is not obvious from the code which calls are non-blocking. A newer version of Python introduced native coroutines as an advanced form of generators. Later, Python 3.4 included the asyncio library, which relies on native coroutines to provide single-threaded concurrency. But only in Python 3.5 did coroutines become an integral part of the language, described with the new keywords async and await. Here is a simple example that illustrates using asyncio to run concurrent tasks:

```
import asyncio

async def my_task(seconds):
    print("start sleeping for {} seconds".format(seconds))
    await asyncio.sleep(seconds)  # hands control back to the event loop
    print("end sleeping for {} seconds".format(seconds))

# Run both coroutines concurrently on one event loop.
# (Python 3.5 style; on Python 3.7+ asyncio.run() is the usual entry point.)
all_tasks = asyncio.gather(my_task(1), my_task(2))
loop = asyncio.get_event_loop()
loop.run_until_complete(all_tasks)
loop.close()
```


We launch two tasks: one sleeps for 1 second, the other for 2 seconds. The output is:

```
start sleeping for 1 seconds
start sleeping for 2 seconds
end sleeping for 1 seconds
end sleeping for 2 seconds
```


As you can see, coroutines do not block each other: the second task starts before the first has finished. This happens because asyncio.sleep is a coroutine that returns execution to the scheduler until the time has passed. In the next section, we will use coroutine-based tasks to create a game loop.
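One way to check that the sleeps really overlap is to time them. The sketch below is an addition for illustration, assuming Python 3.7 or later for `asyncio.run()`; the `main` coroutine and the shorter sleep durations are choices made for this example only.

```python
import asyncio
import time

async def my_task(seconds):
    await asyncio.sleep(seconds)
    return seconds

async def main():
    started = time.monotonic()
    # Both sleeps run concurrently, so the total is close to the longest one.
    results = await asyncio.gather(my_task(0.2), my_task(0.4))
    elapsed = time.monotonic() - started
    return results, elapsed

results, elapsed = asyncio.run(main())
print(results, round(elapsed, 1))
```

If the tasks blocked each other, the elapsed time would be near the sum of the two sleeps (0.6 seconds) rather than near the longer one.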


--------------------------------------------------------------------------------


via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/


Author: [Kyrylo Subbotin][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/
[1]: http://snakepit-game.com/
[2]: http://snakepit-game.com/
[3]: https://docs.python.org/3/library/asyncio.html
[4]: https://docs.python.org/3/whatsnew/3.5.html#whatsnew-pep-492
[5]: https://docs.python.org/2/library/select.html
[6]: http://www.tornadoweb.org/
[7]: http://twistedmatrix.com/
[8]: http://www.stackless.com/
[9]: http://www.eveonline.com/
[10]: http://eventlet.net/
[11]: http://www.gevent.org/

@ -0,0 +1,234 @@


chunyang-wen translating


Writing online multiplayer game with python and asyncio - Part 2
==================================================================

Have you ever made an asynchronous Python app? Here I’ll tell you how to do it and in the next part, show it on a [working example][1] - a popular Snake game, designed for multiple players.


See the intro and theory in [Get Asynchronous (part 1)][2].

[Play the game][3]


### 3. Writing the game loop

The game loop is the heart of every game. It runs continuously to get the player's input, update the state of the game, and render the result on the screen. In online games the loop is divided into client and server parts, so basically there are two loops that communicate over the network. Usually, the client's role is to get the player's input, such as a keypress or mouse movement, pass this data to the server, and get back the data to render. The server processes all the data coming from players, updates the game's state, does the calculations needed to render the next frame, and passes back the result, such as the new placement of game objects. It is very important not to mix client and server roles without a solid reason. If you start doing game logic calculations on the client side, you can easily go out of sync with other clients, and your game can also be cheated by simply passing arbitrary data from the client side.

A game loop iteration is often called a tick. A tick is an event meaning that the current game loop iteration is over and the data for the next frame(s) is ready.
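As a rough sketch of what one server-side tick involves, the loop below applies queued input, advances the tick counter, and records the data for the next frame. Everything here is hypothetical and only for illustration: the `state` dictionary, its keys, and the `max_ticks` limit are not part of the article's game, and `asyncio.run()` assumes Python 3.7 or later.

```python
import asyncio

async def game_loop(state, tick_seconds, max_ticks):
    """Advance the game one tick at a time; stop after max_ticks for the demo."""
    while state["tick"] < max_ticks:
        # 1. Apply the input collected since the previous tick.
        while state["pending_input"]:
            state["position"] += state["pending_input"].pop(0)
        # 2. The iteration is over: data for the next frame is ready.
        state["tick"] += 1
        state["frames"].append((state["tick"], state["position"]))
        # 3. Wait for the next tick, letting other tasks run meanwhile.
        await asyncio.sleep(tick_seconds)

state = {"tick": 0, "position": 0, "pending_input": [1, 1, -1], "frames": []}
asyncio.run(game_loop(state, tick_seconds=0.01, max_ticks=3))
print(state["frames"])
```

A real server would run this loop indefinitely and send each frame's data to the clients instead of storing it in a list.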


In the next examples we will use the same client, which connects to a server from a web page using WebSocket. It runs a simple loop which passes pressed keys' codes to the server and displays all messages that come from the server. [Client source code is located here][4].

|

||||

|

||||

#### Example 3.1: Basic game loop

|

||||

|

||||

[Example 3.1 source code][5]

|

||||

|

||||

We will use the [aiohttp][6] library to create a game server. It allows creating web servers and clients based on asyncio. A good thing about this library is that it supports normal HTTP requests and WebSockets at the same time, so we don't need another web server to render the game's HTML page.

|

||||

|

||||

Here is how we run the server:

|

||||

|

||||

```
app = web.Application()
app["sockets"] = []

asyncio.ensure_future(game_loop(app))

app.router.add_route('GET', '/connect', wshandler)
app.router.add_route('GET', '/', handle)

web.run_app(app)
```

|

||||

|

||||

web.run_app is a handy shortcut to create the server's main task and to run the asyncio event loop with its run_forever() method. I suggest you check the source code of this method to see how the server is actually created and terminated.

|

||||

|

||||

An app is a dict-like object which can be used to share data between connected clients. We will use it to store a list of connected sockets. This list is then used to send notification messages to all connected clients. A call to asyncio.ensure_future() will schedule our main game_loop task which sends 'tick' message to clients every 2 seconds. This task will run concurrently in the same asyncio event loop along with our web server.

|

||||

|

||||

There are 2 web request handlers: handle just serves an HTML page, and wshandler is our main websocket server's task, which handles interaction with game clients. For every connected client a new wshandler task is launched in the event loop. This task adds the client's socket to the list, so that the game_loop task can send messages to all the clients. Then it echoes every keypress back to the client with a message.

|

||||

|

||||

In the launched tasks we are running worker loops over the main event loop of asyncio. A switch between tasks happens when one of them uses await statement to wait for a coroutine to finish. For instance, asyncio.sleep just passes execution back to a scheduler for a given amount of time, and ws.receive() is waiting for a message from websocket, while the scheduler may switch to some other task.
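
The task switching described above can be seen in a minimal sketch that uses only the standard library. The `worker` coroutine and the `log` list are hypothetical names introduced for illustration, and `asyncio.run()` assumes a newer Python than the 3.5 this article targets:

```python
import asyncio

async def worker(name, delay, log):
    # Every await hands control back to the event loop,
    # which is then free to resume another task.
    for step in range(3):
        log.append((name, step))
        await asyncio.sleep(delay)

async def main():
    log = []
    # Both workers run concurrently in the same event loop.
    await asyncio.gather(worker("a", 0.01, log), worker("b", 0.01, log))
    return log

log = asyncio.run(main())
print(log)
```

Neither worker finishes before the other starts: the entries of the two workers are interleaved in `log`, because each `await asyncio.sleep()` gives the scheduler a chance to switch.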

|

||||

|

||||

After you open the main page in a browser and connect to the server, just try to press some keys. Their codes will be echoed back from the server and every 2 seconds this message will be overwritten by game loop's 'tick' message which is sent to all clients.

|

||||

|

||||

So we have just created a server which is processing client's keypresses, while the main game loop is doing some work in the background and updates all clients periodically.

|

||||

|

||||

#### Example 3.2: Starting game loop by request

|

||||

|

||||

[Example 3.2 source code][7]

|

||||

|

||||

In the previous example the game loop ran continuously for the whole life of the server. In practice, though, it usually makes no sense to run the game loop when no one is connected. Also, there may be different game "rooms" running on one server. In this concept one player "creates" a game session (a match in a multiplayer game or a raid in an MMO, for example) so other players may join it. The game loop then runs for as long as the game session continues.

|

||||

|

||||

In this example we use a global flag to check if a game loop is running, and we start it when the first player connects. In the beginning, a game loop is not running, so the flag is set to False. A game loop is launched from the client's handler:

|

||||

|

||||

```
if app["game_is_running"] == False:
    asyncio.ensure_future(game_loop(app))
```

|

||||

|

||||

This flag is then set to True at the start of game_loop() and back to False at the end, when all clients have disconnected.

|

||||

|

||||

#### Example 3.3: Managing tasks

|

||||

|

||||

[Example 3.3 source code][8]

|

||||

|

||||

This example illustrates working with task objects. Instead of storing a flag, we store game loop's task directly in our application's global dict. This may be not an optimal thing to do in a simple case like this, but sometimes you may need to control already launched tasks.

|

||||

```
if app["game_loop"] is None or \
   app["game_loop"].cancelled():
    app["game_loop"] = asyncio.ensure_future(game_loop(app))
```

|

||||

|

||||

Here ensure_future() returns a task object that we store in a global dict; and when all users disconnect, we cancel it with

|

||||

|

||||

```
app["game_loop"].cancel()
```

|

||||

|

||||

This cancel() call tells the scheduler not to pass execution to this coroutine anymore and sets its state to cancelled, which can then be checked with the cancelled() method. There is one caveat worth mentioning here: when you hold an external reference to a task object and an exception happens in this task, the exception will not be raised. Instead, the exception is set on the task and may be checked with the exception() method. Such silent failures are not useful when debugging code, so you may want to raise all exceptions instead. To do so you need to call the result() method of the finished task explicitly. This can be done in a callback:

|

||||

|

||||

```
app["game_loop"].add_done_callback(lambda t: t.result())
```

|

||||

|

||||

Also, if we are going to cancel this task in our code and we don't want a CancelledError exception, it makes sense to check its "cancelled" state:

|

||||

```
app["game_loop"].add_done_callback(lambda t: t.result()
                                   if not t.cancelled() else None)
```

|

||||

|

||||

Note that this is required only if you store a reference to your task objects. In the previous examples all exceptions are raised directly without additional callbacks.
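
To make the caveat concrete, here is a small sketch, not taken from the example source, of how a stored task keeps its exception until someone asks for it. The names `failing_loop`, `on_done` and `seen` are hypothetical, and `asyncio.run()` assumes a newer Python than the article's:

```python
import asyncio

async def failing_loop():
    await asyncio.sleep(0)
    raise RuntimeError("game loop crashed")

async def main():
    seen = []

    def on_done(task):
        # A cancelled task has no result or exception to inspect.
        if task.cancelled():
            seen.append("cancelled")
        else:
            # exception() returns the stored exception (or None) without raising it.
            seen.append(repr(task.exception()))

    failed = asyncio.ensure_future(failing_loop())
    failed.add_done_callback(on_done)

    sleeper = asyncio.ensure_future(asyncio.sleep(10))
    sleeper.add_done_callback(on_done)
    sleeper.cancel()

    # return_exceptions=True keeps gather() from re-raising here.
    await asyncio.gather(failed, sleeper, return_exceptions=True)
    return seen

seen = asyncio.run(main())
print(seen)
```

The callback is the only place where the failure becomes visible. Without it, the RuntimeError would stay attached to the task object until something inspects it.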

|

||||

|

||||

#### Example 3.4: Waiting for multiple events

|

||||

|

||||

[Example 3.4 source code][9]

|

||||

|

||||

In many cases, you need to wait for multiple events inside client's handler. Beside a message from a client, you may need to wait for different types of things to happen. For instance, if your game's time is limited, you may wait for a signal from timer. Or, you may wait for a message from other process using pipes. Or, for a message from a different server in the network, using a distributed messaging system.

|

||||

|

||||

This example is based on example 3.1 for simplicity. But in this case we use Condition object to synchronize game loop with connected clients. We do not keep a global list of sockets here as we are using sockets only within the handler. When game loop iteration ends, we notify all clients using Condition.notify_all() method. This method allows implementing publish/subscribe pattern within asyncio event loop.

|

||||

|

||||

To wait for two events in the handler, first, we wrap awaitable objects in a task using ensure_future()

|

||||

|

||||

```
if not recv_task:
    recv_task = asyncio.ensure_future(ws.receive())
if not tick_task:
    await tick.acquire()
    tick_task = asyncio.ensure_future(tick.wait())
```

|

||||

|

||||

Before we can call Condition.wait(), we need to acquire the lock behind it. That is why we call tick.acquire() first. This lock is released while tick.wait() is waiting, so other coroutines may use it too. But when we get a notification, the lock is acquired again, so we need to release it by calling tick.release() after the notification is received.

|

||||

|

||||

We are using asyncio.wait() coroutine to wait for two tasks.

|

||||

|

||||

```
done, pending = await asyncio.wait(
    [recv_task,
     tick_task],
    return_when=asyncio.FIRST_COMPLETED)
```

|

||||

|

||||

It blocks until either of tasks from the list is completed. Then it returns 2 lists: tasks which are done and tasks which are still running. If the task is done, we set it to None so it may be created again on the next iteration.
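
The whole pattern can be tried without a web server. The following is a sketch under stated assumptions, not the code of example 3.4: an `asyncio.Queue` named `inbox` stands in for the websocket, `ticker`, `wait_for_tick` and `client` are hypothetical helpers, and `async with` is used instead of explicit acquire()/release() calls.

```python
import asyncio

async def ticker(tick, ticks):
    # Publisher: wake every waiting subscriber once per tick.
    for _ in range(ticks):
        await asyncio.sleep(0.01)
        async with tick:
            tick.notify_all()

async def wait_for_tick(tick):
    # Condition.wait() must be called with the lock held;
    # "async with" acquires it and releases it afterwards.
    async with tick:
        await tick.wait()
    return "tick"

async def client(tick, inbox):
    events = []
    recv_task = tick_task = None
    while len(events) < 3:
        if recv_task is None:
            recv_task = asyncio.ensure_future(inbox.get())
        if tick_task is None:
            tick_task = asyncio.ensure_future(wait_for_tick(tick))
        done, pending = await asyncio.wait(
            [recv_task, tick_task], return_when=asyncio.FIRST_COMPLETED)
        # A finished task is consumed and reset so it is recreated next time.
        if recv_task in done:
            events.append(recv_task.result())
            recv_task = None
        if tick_task in done:
            events.append(tick_task.result())
            tick_task = None
    for task in (recv_task, tick_task):
        if task is not None:
            task.cancel()
    return events

async def main():
    tick = asyncio.Condition()
    inbox = asyncio.Queue()
    inbox.put_nowait("keypress")
    ticker_task = asyncio.ensure_future(ticker(tick, 5))
    events = await client(tick, inbox)
    await ticker_task
    return events

events = asyncio.run(main())
print(events)
```

The handler reacts to whichever event arrives first, the queued message or the tick, and keeps the other task pending for the next iteration.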

|

||||

|

||||

#### Example 3.5: Combining with threads

|

||||

|

||||

[Example 3.5 source code][10]

|

||||

|

||||

In this example we combine the asyncio loop with threads by running the main game loop in a separate thread. As I mentioned before, it's not possible to perform real parallel execution of Python code with threads because of the GIL. So it is not a good idea to use another thread to do heavy calculations. However, there is one reason to use threads with asyncio: the case when you need to use other libraries which do not support asyncio. Using these libraries in the main thread will simply block execution of the loop, so the only way to use them asynchronously is to run them in a different thread.

|

||||

|

||||

We run the game loop using the run_in_executor() method of the asyncio loop and ThreadPoolExecutor. Note that game_loop() is not a coroutine anymore. It is a function that is executed in another thread. However, we need to interact with the main thread to notify clients about game events. And while asyncio itself is not thread-safe, it has methods which allow running your code from another thread. These are call_soon_threadsafe() for normal functions and run_coroutine_threadsafe() for coroutines. We will put the code which notifies clients about the game's tick into the notify() coroutine and run it in the main event loop from another thread.

|

||||

|

||||

```
def game_loop(asyncio_loop):
    print("Game loop thread id {}".format(threading.get_ident()))
    async def notify():
        print("Notify thread id {}".format(threading.get_ident()))
        await tick.acquire()
        tick.notify_all()
        tick.release()

    while 1:
        task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)
        # blocking the thread
        sleep(1)
        # make sure the task has finished
        task.result()
```

|

||||

|

||||

When you launch this example, you will see that "Notify thread id" is equal to "Main thread id". This is because the notify() coroutine is executed in the main thread, while the sleep(1) call is executed in another thread and, as a result, does not block the main event loop.
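
The same thread check can be reproduced in a few lines without aiohttp. This is a sketch that assumes the loop's default executor and a newer Python (`asyncio.run()`, `asyncio.get_running_loop()`); `blocking_game_loop` and `seen` are hypothetical names:

```python
import asyncio
import threading
import time

def blocking_game_loop(loop, ticks, seen):
    # Runs in a worker thread, so time.sleep() does not stall the event loop.
    async def notify(n):
        # Executed by the event loop, i.e. in the thread that runs the loop.
        seen.append((n, threading.get_ident()))

    for n in range(ticks):
        future = asyncio.run_coroutine_threadsafe(notify(n), loop)
        time.sleep(0.01)
        future.result()  # re-raises anything notify() raised

async def main():
    loop = asyncio.get_running_loop()
    seen = []
    # None selects the loop's default ThreadPoolExecutor.
    await loop.run_in_executor(None, blocking_game_loop, loop, 3, seen)
    return seen

main_thread_id = threading.get_ident()
seen = asyncio.run(main())
print(seen, main_thread_id)
```

Every recorded thread id matches the id of the thread that runs the event loop, even though the coroutines were submitted from the worker thread.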

|

||||

|

||||

#### Example 3.6: Multiple processes and scaling up

|

||||

|

||||

[Example 3.6 source code][11]

|

||||

|

||||

One threaded server may work well, but it is limited to one CPU core. To scale the server beyond one core, we need to run multiple processes containing their own event loops. So we need a way for processes to interact with each other by exchanging messages or sharing game's data. Also in games, it is often required to perform heavy calculations, such as path finding and alike. These tasks are sometimes not possible to complete quickly within one game tick. It is not recommended to perform time-consuming calculations in coroutines, as it will block event processing, so in this case, it may be reasonable to pass the heavy task to other process running in parallel.

|

||||

|

||||

The easiest way to utilize multiple cores is to launch multiple single-core servers, like the ones in the previous examples, each on a different port. You can do this with supervisord or a similar process-control system. Then, you may use a load balancer, such as HAProxy, to distribute connecting clients between the processes. There are different ways for processes to interact with each other. One is to use network-based systems, which allows you to scale to multiple servers as well. There are already existing adapters to use popular messaging and storage systems with asyncio. Here are some examples:

|

||||

|

||||

- [aiomcache][12] for memcached client

|

||||

- [aiozmq][13] for zeroMQ

|

||||

- [aioredis][14] for Redis storage and pub/sub

|

||||

|

||||

You can find many other packages like this on github and pypi, most of them have "aio" prefix.

|

||||

|

||||

Using network services may be effective for storing persistent data and exchanging some kinds of messages. But their performance may not be enough if you need to perform real-time data processing that involves inter-process communication. In this case, a more appropriate way may be to use standard unix pipes. asyncio has support for pipes, and there is a [very low-level example of the server which uses pipes][15] in the aiohttp repository.

|

||||

|

||||

In the current example, we will use python's high-level [multiprocessing][16] library to instantiate new process to perform heavy calculations on a different core and to exchange messages with this process using multiprocessing.Queue. Unfortunately, the current implementation of multiprocessing is not compatible with asyncio. So every blocking call will block the event loop. But this is exactly the case where threads will be helpful because if we run multiprocessing code in a different thread, it will not block our main thread. All we need is to put all inter-process communications to another thread. This example illustrates this technique. It is very similar to multi-threading example above, but we create a new process from a thread.

|

||||

|

||||

```
def game_loop(asyncio_loop):
    # coroutine to run in main thread
    async def notify():
        await tick.acquire()
        tick.notify_all()
        tick.release()

    queue = Queue()

    # function to run in a different process
    def worker():
        while 1:
            print("doing heavy calculation in process {}".format(os.getpid()))
            sleep(1)
            queue.put("calculation result")

    Process(target=worker).start()

    while 1:
        # blocks this thread but not main thread with event loop
        result = queue.get()
        print("getting {} in process {}".format(result, os.getpid()))
        task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)
        task.result()
```

|

||||

|

||||

Here we run the worker() function in another process. It contains a loop doing heavy calculations and putting results into the queue, which is an instance of multiprocessing.Queue. Then we get the results and notify clients in the main event loop from a different thread, exactly as in example 3.5. This example is very simplified and doesn't terminate the process properly. Also, in a real game, we would probably use a second queue to pass data to the worker.

|

||||

|

||||

There is a project called [aioprocessing][17], which is a wrapper around multiprocessing that makes it compatible with asyncio. However, it uses exactly the same approach as described in this example - creating processes from threads. It will not give you any advantage, other than hiding these tricks behind a simple interface. Hopefully, in the next versions of Python, we will get a multiprocessing library that is based on coroutines and supports asyncio.

|

||||

|

||||

>Important! If you are going to run another asyncio event loop in a different thread or sub-process created from main thread/process, you need to create a loop explicitly, using asyncio.new_event_loop(), otherwise, it will not work.
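
As a rough illustration of the note above, the sketch below creates a dedicated loop inside a second thread. The names `background_job`, `thread_main` and `results` are hypothetical, and the sketch assumes the thread owns its loop from creation to close:

```python
import asyncio
import threading

results = []

async def background_job():
    await asyncio.sleep(0.01)
    return threading.current_thread().name

def thread_main():
    # A loop created explicitly for this thread; the main thread's loop is not reused.
    loop = asyncio.new_event_loop()
    try:
        results.append(loop.run_until_complete(background_job()))
    finally:
        loop.close()

worker = threading.Thread(target=thread_main, name="second-loop")
worker.start()
worker.join()
print(results)
```

The coroutine reports the name of the thread it ran in, which shows that it was driven by the loop created in that thread.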

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/

|

||||

|

||||

Author: [Kyrylo Subbotin][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/

|

||||

[1]: http://snakepit-game.com/

|

||||

[2]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/

|

||||

[3]: http://snakepit-game.com/

|

||||

[4]: https://github.com/7WebPages/snakepit-game/blob/master/simple/index.html

|

||||

[5]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_basic.py

|

||||

[6]: http://aiohttp.readthedocs.org/

|

||||

[7]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_handler.py

|

||||

[8]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_global.py

|

||||

[9]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_wait.py

|

||||

[10]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_thread.py

|

||||

[11]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_process.py

|

||||

[12]: https://github.com/aio-libs/aiomcache

|

||||

[13]: https://github.com/aio-libs/aiozmq

|

||||

[14]: https://github.com/aio-libs/aioredis

|

||||

[15]: https://github.com/KeepSafe/aiohttp/blob/master/examples/mpsrv.py

|

||||

[16]: https://docs.python.org/3.5/library/multiprocessing.html

|

||||

[17]: https://github.com/dano/aioprocessing

|

||||

@ -0,0 +1,320 @@

|

||||

wyangsun translating

|

||||

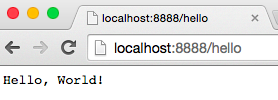

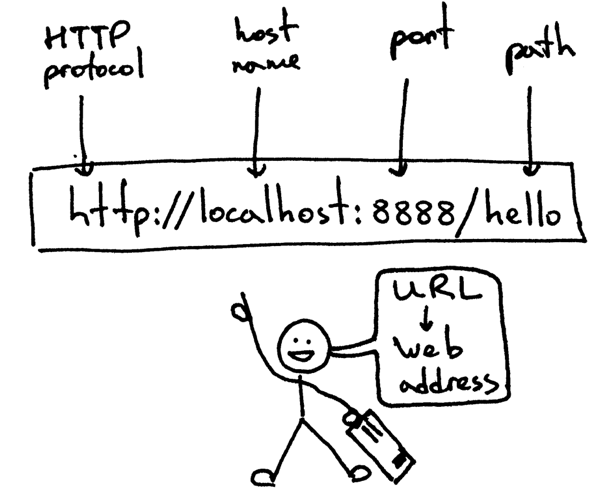

How to build and deploy a Facebook Messenger bot with Python and Flask, a tutorial

|

||||

==========================================================================

|

||||

|

||||

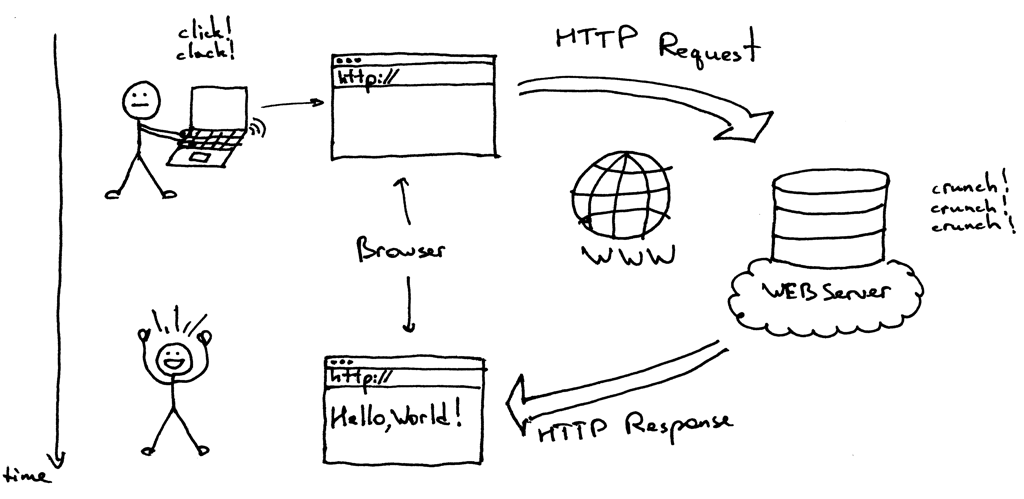

This is my log of how I built a simple Facebook Messenger bot. The functionality is really simple: it’s an echo bot that just sends back to the user what they write.

|

||||

|

||||

This is something akin to the Hello World example for servers, the echo server.

|

||||

|

||||

The goal of the project is not to build the best Messenger bot, but rather to get a feel for what it takes to build a minimal bot and how everything comes together.

|

||||

|

||||

- [Tech Stack][1]

|

||||

- [Bot Architecture][2]

|

||||

- [The Bot Server][3]

|

||||

- [Deploying to Heroku][4]

|

||||

- [Creating the Facebook App][5]

|

||||

- [Conclusion][6]

|

||||

|

||||

### Tech Stack

|

||||

|

||||

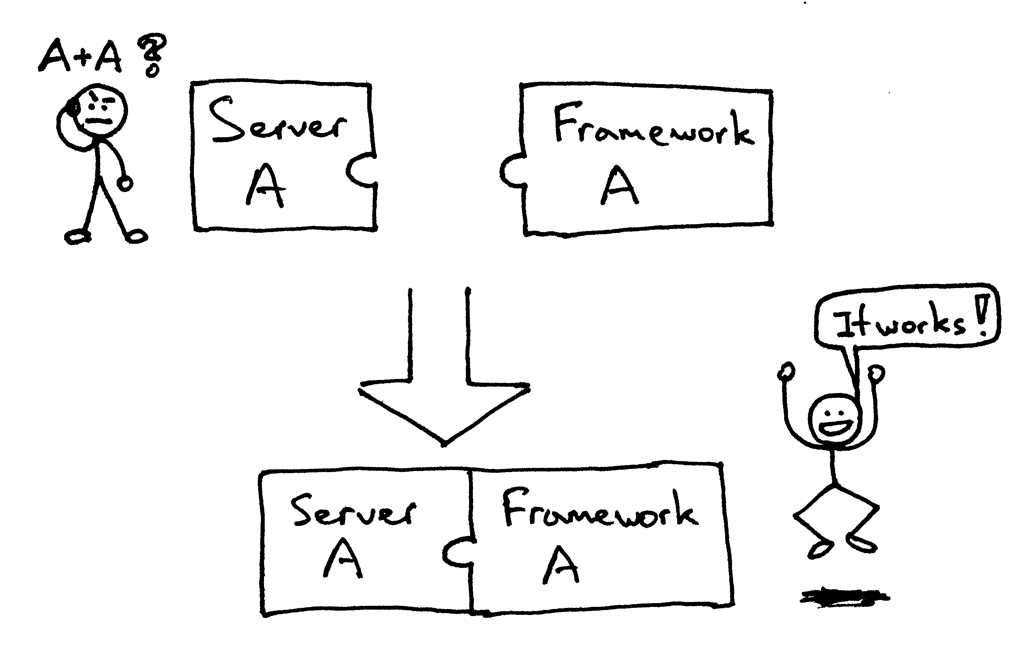

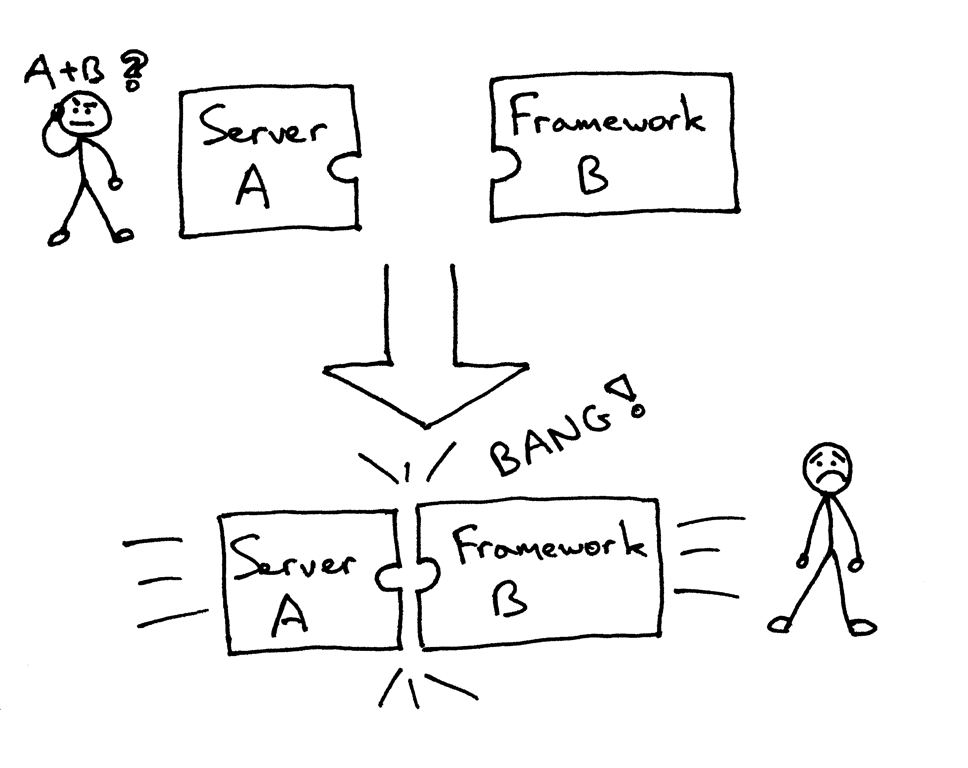

The tech stack that was used is:

|

||||

|

||||

- [Heroku][7] for back end hosting. The free-tier is more than enough for a tutorial of this level. The echo bot does not require any sort of data persistence so a database was not used.

|

||||

- [Python][8] was the language of choice. The version used is 2.7; however, it can easily be ported to Python 3 with minor alterations.

|

||||

- [Flask][9] as the web development framework. It’s a very lightweight framework that’s perfect for small scale projects/microservices.

|

||||

- Finally the [Git][10] version control system was used for code maintenance and to deploy to Heroku.

|

||||

- Worth mentioning: [Virtualenv][11]. This python tool is used to create “environments” clean of python libraries so you can only install the necessary requirements and minimize the app footprint.

|

||||

|

||||

### Bot Architecture

|

||||

|

||||

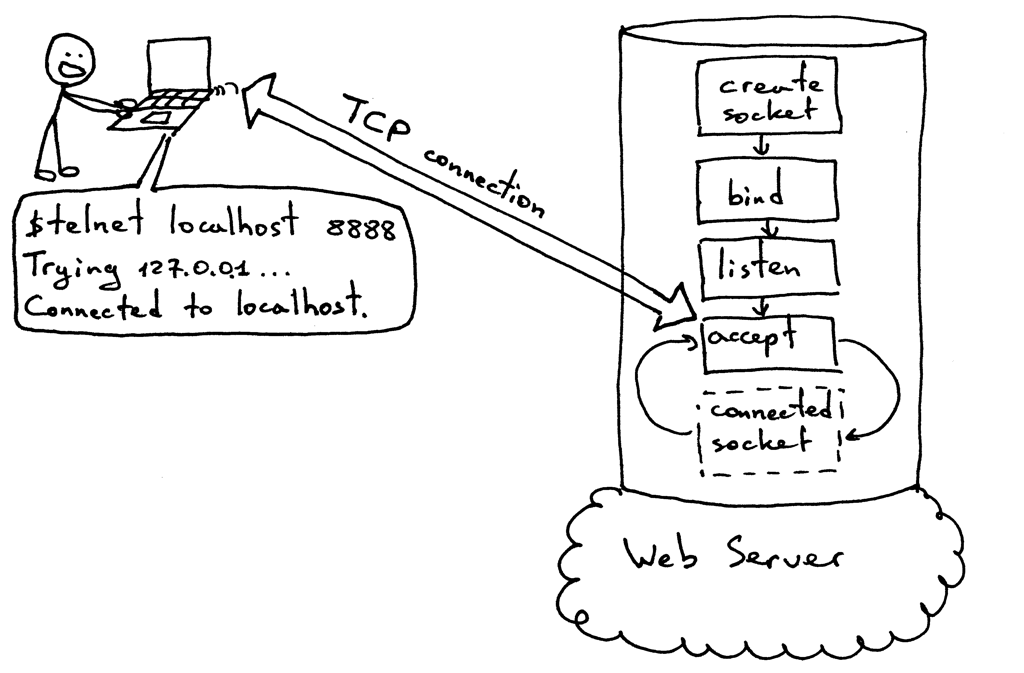

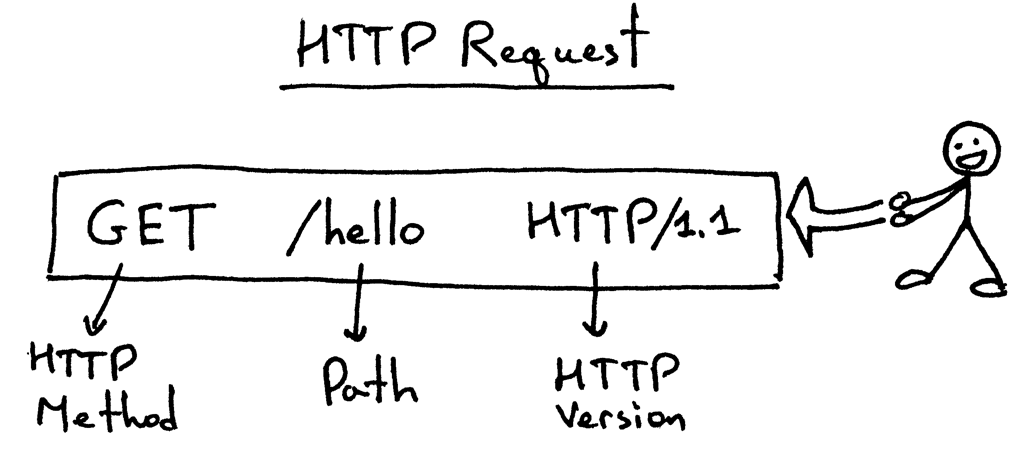

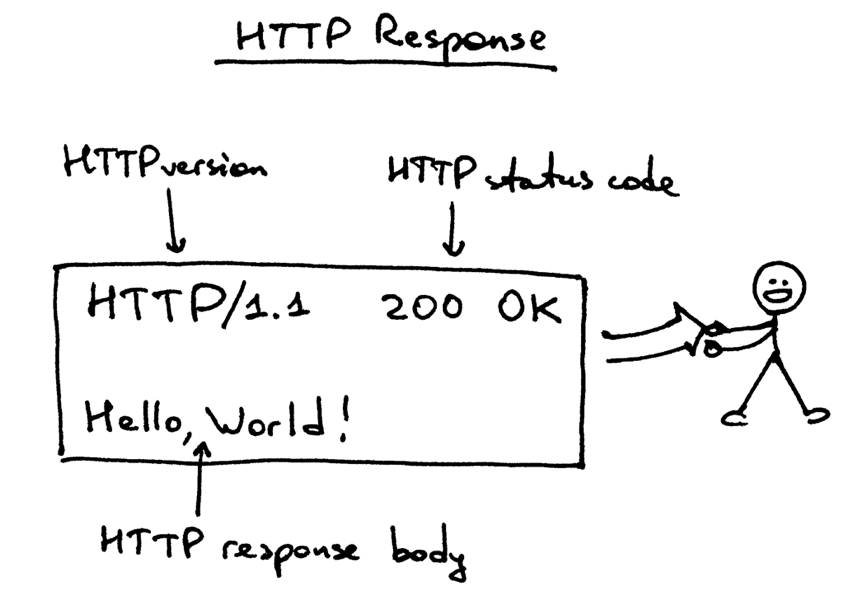

Messenger bots consist of a server that responds to two types of requests:

|

||||

|

||||

- GET requests are used for authentication. They are sent by Messenger with an authentication code that you register on FB.
- POST requests are used for the actual communication. The typical workflow is that Messenger initiates the communication by sending a POST request with the data of the message sent by the user; we handle it and send a POST request of our own back. If that one completes successfully (a 200 OK status is returned), we also respond with a 200 OK code to the initial Messenger request.

|

||||

For this tutorial the app will be hosted on Heroku, which provides a nice and easy interface to deploy apps. As mentioned the free tier will suffice for this tutorial.

|

||||

|

||||

After the app has been deployed and is running, we’ll create a Facebook app and link it to our app so that messenger knows where to send the requests that are meant for our bot.

|

||||

|

||||

### The Bot Server

|

||||

The basic server code was taken from the [Chatbot][12] project by Github user [hult (Magnus Hult)][13], with a few modifications to only echo messages and fixes for a couple of bugs I came across. This is the final version of the server code:

|

||||

|

||||

```
from flask import Flask, request
import json
import requests

app = Flask(__name__)

# This needs to be filled with the Page Access Token that will be provided
# by the Facebook App that will be created.
PAT = ''

@app.route('/', methods=['GET'])
def handle_verification():
    print "Handling Verification."
    if request.args.get('hub.verify_token', '') == 'my_voice_is_my_password_verify_me':
        print "Verification successful!"
        return request.args.get('hub.challenge', '')
    else:
        print "Verification failed!"
        return 'Error, wrong validation token'

@app.route('/', methods=['POST'])
def handle_messages():
    print "Handling Messages"
    payload = request.get_data()
    print payload
    for sender, message in messaging_events(payload):
        print "Incoming from %s: %s" % (sender, message)
        send_message(PAT, sender, message)
    return "ok"

def messaging_events(payload):
    """Generate tuples of (sender_id, message_text) from the
    provided payload.
    """
    data = json.loads(payload)
    messaging_events = data["entry"][0]["messaging"]
    for event in messaging_events:
        if "message" in event and "text" in event["message"]:
            yield event["sender"]["id"], event["message"]["text"].encode('unicode_escape')
        else:
            yield event["sender"]["id"], "I can't echo this"


def send_message(token, recipient, text):
    """Send the message text to recipient with id recipient.
    """

    r = requests.post("https://graph.facebook.com/v2.6/me/messages",
        params={"access_token": token},
        data=json.dumps({
            "recipient": {"id": recipient},
            "message": {"text": text.decode('unicode_escape')}
        }),
        headers={'Content-type': 'application/json'})
    if r.status_code != requests.codes.ok:
        print r.text

if __name__ == '__main__':
    app.run()
```

|

||||

|

||||

Let’s break down the code. The first part is the imports that will be needed:

|

||||

|

||||

```
from flask import Flask, request
import json
import requests
```

|

||||

|

||||

Next we define the two functions (using the Flask specific app.route decorators) that will handle the GET and POST requests to our bot.

|

||||

|

||||

```
@app.route('/', methods=['GET'])
def handle_verification():
    print "Handling Verification."
    if request.args.get('hub.verify_token', '') == 'my_voice_is_my_password_verify_me':
        print "Verification successful!"
        return request.args.get('hub.challenge', '')
    else:
        print "Verification failed!"
        return 'Error, wrong validation token'
```

|

||||

|

||||

The verify_token value that is sent by Messenger will be declared by us when we create the Facebook app. We have to validate the one we are being sent against the one we declared. Finally we return the “hub.challenge” back to Messenger.
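
The handshake logic can also be checked in isolation, without Flask. The sketch below is written for Python 3 rather than the 2.7 used in this tutorial, and `verify` is a hypothetical helper that works on a raw query string instead of `request.args`:

```python
from urllib.parse import parse_qs

# Must match whatever token is entered in the Facebook app settings.
VERIFY_TOKEN = "my_voice_is_my_password_verify_me"

def verify(query_string, expected_token=VERIFY_TOKEN):
    """Return the text the GET handler should send back to Messenger."""
    args = parse_qs(query_string)
    token = args.get("hub.verify_token", [""])[0]
    if token == expected_token:
        # Echoing hub.challenge tells Messenger that the token matched.
        return args.get("hub.challenge", [""])[0]
    return "Error, wrong validation token"

ok = verify("hub.verify_token=my_voice_is_my_password_verify_me&hub.challenge=1158201444")
bad = verify("hub.verify_token=guess&hub.challenge=1158201444")
print(ok, bad)
```

The challenge value used here is made up; Messenger generates its own for every verification request.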

|

||||

|

||||

The function that handles the POST requests is a bit more interesting.

|

||||

|

||||

```
@app.route('/', methods=['POST'])
def handle_messages():
    print "Handling Messages"
    payload = request.get_data()
    print payload
    for sender, message in messaging_events(payload):
        print "Incoming from %s: %s" % (sender, message)
        send_message(PAT, sender, message)
    return "ok"
```

|

||||

|

||||

When called we grab the message payload and use the messaging_events function to break it down and extract the sender's user id and the actual message sent, generating a Python iterator that we can loop over. Notice that each request sent by Messenger may contain more than one message.

|

||||

|

||||

```
def messaging_events(payload):
    """Generate tuples of (sender_id, message_text) from the
    provided payload.
    """
    data = json.loads(payload)
    messaging_events = data["entry"][0]["messaging"]
    for event in messaging_events:
        if "message" in event and "text" in event["message"]:
            yield event["sender"]["id"], event["message"]["text"].encode('unicode_escape')
        else:
            yield event["sender"]["id"], "I can't echo this"
```
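
To see what the generator yields, it can be fed a hand-written payload. The sketch below is a Python 3 variant without the `unicode_escape` step, and `sample_payload` is a hypothetical payload trimmed to the keys the function actually reads; real Messenger requests carry more fields:

```python
import json

# Hypothetical payload containing only the keys messaging_events() reads.
sample_payload = json.dumps({
    "entry": [{
        "messaging": [
            {"sender": {"id": "111"}, "message": {"text": "hello"}},
            {"sender": {"id": "222"}, "message": {"attachments": []}},
        ]
    }]
})

def messaging_events(payload):
    """Yield (sender_id, message_text) for every event in the first entry."""
    data = json.loads(payload)
    for event in data["entry"][0]["messaging"]:
        if "message" in event and "text" in event["message"]:
            yield event["sender"]["id"], event["message"]["text"]
        else:
            # Events without text (attachments, for example) get a fixed reply.
            yield event["sender"]["id"], "I can't echo this"

events = list(messaging_events(sample_payload))
print(events)
```

One request produces one tuple per messaging event, so a single POST can trigger several replies.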

|

||||

|

||||

While iterating over each message we call the send_message function, which performs the POST request back to Messenger using the Facebook Graph messages API. During this time we still have not responded to the original Messenger request, which we are blocking. This can lead to timeouts and 5XX errors.

The above was spotted during an outage caused by a bug I came across. It occurred when the user sent emojis, which are actual Unicode code points that Python was mis-encoding, so we ended up sending back garbage.

This POST request back to Messenger would never finish, and that in turn would cause 5XX status codes to be returned to the original request, rendering the service unusable.

This was fixed by escaping the messages with `encode('unicode_escape')` and then, just before sending the message back, decoding it with `decode('unicode_escape')`.

|

||||

|

||||

```
def send_message(token, recipient, text):
    """Send the message text to recipient with id recipient.
    """

    r = requests.post("https://graph.facebook.com/v2.6/me/messages",
        params={"access_token": token},
        data=json.dumps({
            "recipient": {"id": recipient},
            "message": {"text": text.decode('unicode_escape')}
        }),
        headers={'Content-type': 'application/json'})
    if r.status_code != requests.codes.ok:
        print r.text
```
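
The escape and unescape round trip can be tried on its own. This sketch uses Python 3, where the text starts out as a Unicode string, so it only illustrates the two codec calls rather than reproducing the original Python 2.7 bug; the sample string is made up:

```python
# A message containing an emoji (U+1F600).
original = "thanks \U0001F600"

# Escaping turns every non-ASCII character into a backslash sequence.
escaped = original.encode("unicode_escape")

# Decoding with the same codec restores the original text.
restored = escaped.decode("unicode_escape")

print(escaped, restored == original)
```

The escaped form contains only ASCII bytes, which is what made it safe to carry through the Python 2 string handling in the bot.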

|

||||

|

||||

### Deploying to Heroku

|

||||

|

||||

Once the code was built to my liking it was time for the next step.

|

||||

Deploy the app.

|

||||

|

||||

Sure, but how?

|

||||

|

||||

I had deployed apps to Heroku before (mainly Rails), but I was always following a tutorial of some sort, so the configuration had already been created. In this case, though, I had to start from scratch.

Fortunately the official [Heroku documentation][14] came to the rescue. The article nicely explains the bare minimum required for running an app.

Long story short, what we need besides our code are two files. The first is the “requirements.txt” file, which is a list of the library dependencies required to run the application.

The second file required is the “Procfile”. This file tells Heroku how to run our service. Again, the bare minimum needed for this file is the following:

|

||||

|

||||

>web: gunicorn echoserver:app

|

||||

|

||||

Heroku interprets this as follows: the web process is started by gunicorn, which loads the app object from our echoserver.py file and serves it. The reason we are using an additional web server is performance related and is explained in the above Heroku documentation:

|

||||

|

||||

>Web applications that process incoming HTTP requests concurrently make much more efficient use of dyno resources than web applications that only process one request at a time. Because of this, we recommend using web servers that support concurrent request processing whenever developing and running production services.

|

||||

|

||||

>The Django and Flask web frameworks feature convenient built-in web servers, but these blocking servers only process a single request at a time. If you deploy with one of these servers on Heroku, your dyno resources will be underutilized and your application will feel unresponsive.

|

||||

|

||||

>Gunicorn is a pure-Python HTTP server for WSGI applications. It allows you to run any Python application concurrently by running multiple Python processes within a single dyno. It provides a perfect balance of performance, flexibility, and configuration simplicity.

|

||||

|

||||

Going back to our “requirements.txt” file, let’s see how it ties in with the Virtualenv tool that was mentioned.

At any time, your development machine may have a number of Python libraries installed. When deploying applications you don’t want to have all of these libraries loaded, as that makes it hard to tell which ones you actually use.

What Virtualenv does is create a new blank virtual environment so that you can install only the libraries that your app requires.

|

||||

|

||||

You can check which libraries are currently installed by running the following command:

|

||||

|

||||

```
kostis@KostisMBP ~ $ pip freeze
cycler==0.10.0
Flask==0.10.1
gunicorn==19.6.0
itsdangerous==0.24
Jinja2==2.8
MarkupSafe==0.23
matplotlib==1.5.1
numpy==1.10.4
pyparsing==2.1.0
python-dateutil==2.5.0
pytz==2015.7
requests==2.10.0
scipy==0.17.0
six==1.10.0
virtualenv==15.0.1
Werkzeug==0.11.10
```

|

||||

|

||||

Note: The pip tool should already be installed on your machine along with Python. If not, check the [official site][15] for how to install it.

|

||||

|

||||

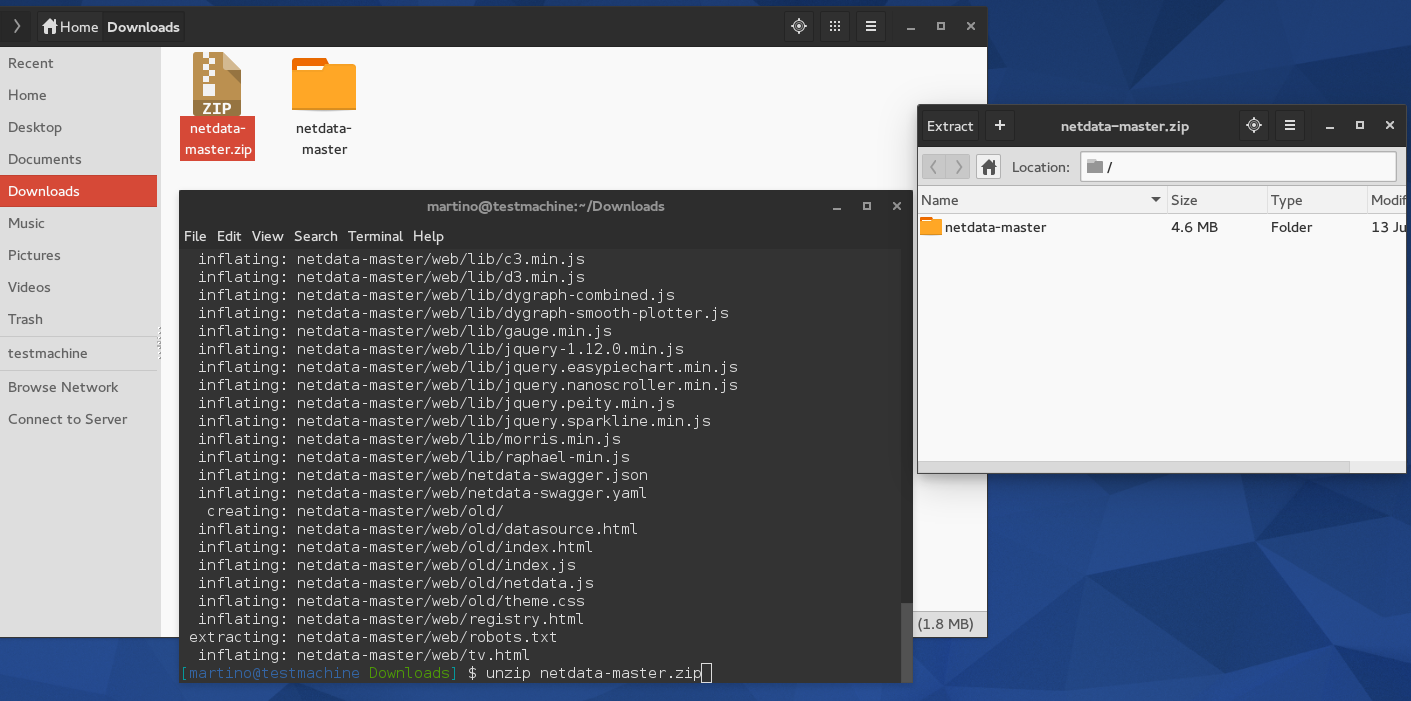

Now let’s use Virtualenv to create a new blank environment. First we create a new folder for our project and change into it:

|

||||

|

||||

```
kostis@KostisMBP projects $ mkdir echoserver
kostis@KostisMBP projects $ cd echoserver/
kostis@KostisMBP echoserver $
```

|

||||

|

||||

Now let’s create a new environment called echobot. To activate it you run the following source command, and by checking with pip freeze we can see that it’s now empty.

|

||||

|

||||

```
kostis@KostisMBP echoserver $ virtualenv echobot
kostis@KostisMBP echoserver $ source echobot/bin/activate
(echobot) kostis@KostisMBP echoserver $ pip freeze
(echobot) kostis@KostisMBP echoserver $
```

|

||||

|

||||

We can start installing the libraries required. The ones we’ll need are flask, gunicorn, and requests and with them installed we create the requirements.txt file:

|

||||

|

||||

```

|

||||

(echobot) kostis@KostisMBP echoserver $ pip install flask

|

||||

(echobot) kostis@KostisMBP echoserver $ pip install gunicorn

|

||||

(echobot) kostis@KostisMBP echoserver $ pip install requests

|

||||

(echobot) kostis@KostisMBP echoserver $ pip freeze

|

||||

click==6.6

|

||||

Flask==0.11

|

||||

gunicorn==19.6.0

|

||||

itsdangerous==0.24

|

||||

Jinja2==2.8

|

||||

MarkupSafe==0.23

|

||||

requests==2.10.0

|

||||

Werkzeug==0.11.10

|

||||

(echobot) kostis@KostisMBP echoserver $ pip freeze > requirements.txt

|

||||

```
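
Each line that pip freeze writes has the shape `name==version`. As a rough illustration of that format, the sketch below parses such lines into a dictionary. The helper `parse_pins` is hypothetical and only handles exact `==` pins, not the other specifiers a requirements file can contain.

```python
def parse_pins(lines):
    """Map package name to pinned version for lines shaped like 'name==version'."""
    pins = {}
    for raw in lines:
        line = raw.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        name, sep, version = line.partition("==")
        # Only keep lines that actually contain an exact pin.
        if sep:
            pins[name] = version
    return pins


# A few of the lines from the pip freeze output above.
sample = ["click==6.6", "Flask==0.11", "gunicorn==19.6.0", "requests==2.10.0"]
pins = parse_pins(sample)
print(pins["Flask"])
```

In a real project you would pass the lines of requirements.txt instead of the hard-coded sample, for example `parse_pins(open("requirements.txt"))`.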

|

||||

|

||||

After running all of the above, we create the echoserver.py file with the Python code and the Procfile with the command mentioned earlier. We should end up with the following files and folders:

|

||||

|

||||

```

|

||||

(echobot) kostis@KostisMBP echoserver $ ls

|

||||

Procfile echobot echoserver.py requirements.txt

|

||||

```
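
Before uploading, it can be handy to confirm that nothing is missing from the project folder. The sketch below is one way to do that; the helper `missing_files` and the `EXPECTED` list are assumptions for illustration, built from the file names in the listing above (the `echobot` folder is left out because it is the local virtual environment, not part of the app).

```python
import os

# File names taken from the ls output above.
EXPECTED = ["Procfile", "echoserver.py", "requirements.txt"]


def missing_files(project_dir, expected=EXPECTED):
    """Return the expected file names that are not present in project_dir."""
    return [
        name
        for name in expected
        if not os.path.isfile(os.path.join(project_dir, name))
    ]


if __name__ == "__main__":
    missing = missing_files(".")
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All expected files are present.")
```

Run it from inside the echoserver folder; an empty result means the three files are in place.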

|

||||

|

||||

We are now ready to upload to Heroku. We need to do two things. The first is to install the Heroku toolbelt if it’s not already installed on your system (go to [Heroku][16] for details). The second is to create a new Heroku app through the [web interface][17].

|

||||

|

||||

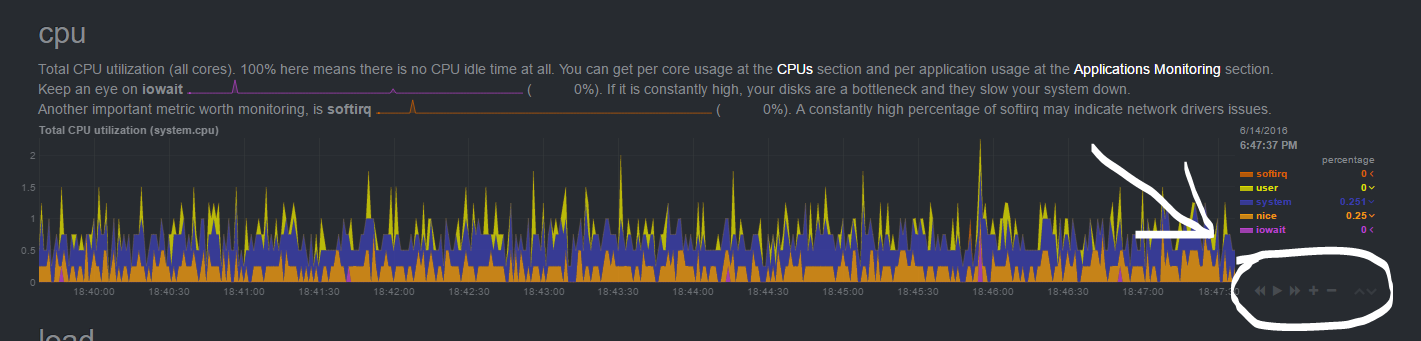

Click on the big plus sign on the top right and select “Create new app”.

|

||||


--------------------------------------------------------------------------------

|

||||

|

||||

via: http://tsaprailis.com/2016/06/02/How-to-build-and-deploy-a-Facebook-Messenger-bot-with-Python-and-Flask-a-tutorial/

|

||||

|

||||

Author: [Konstantinos Tsaprailis][a]

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://github.com/kostistsaprailis

|

||||

[1]: http://tsaprailis.com/2016/06/02/How-to-build-and-deploy-a-Facebook-Messenger-bot-with-Python-and-Flask-a-tutorial/#tech-stack

|

||||

[2]: http://tsaprailis.com/2016/06/02/How-to-build-and-deploy-a-Facebook-Messenger-bot-with-Python-and-Flask-a-tutorial/#bot-architecture

|

||||

[3]: http://tsaprailis.com/2016/06/02/How-to-build-and-deploy-a-Facebook-Messenger-bot-with-Python-and-Flask-a-tutorial/#the-bot-server

|

||||

[4]: http://tsaprailis.com/2016/06/02/How-to-build-and-deploy-a-Facebook-Messenger-bot-with-Python-and-Flask-a-tutorial/#deploying-to-heroku

|

||||

[5]: http://tsaprailis.com/2016/06/02/How-to-build-and-deploy-a-Facebook-Messenger-bot-with-Python-and-Flask-a-tutorial/#creating-the-facebook-app

|

||||

[6]: http://tsaprailis.com/2016/06/02/How-to-build-and-deploy-a-Facebook-Messenger-bot-with-Python-and-Flask-a-tutorial/#conclusion

|

||||

[7]: https://www.heroku.com

|

||||

[8]: https://www.python.org

|

||||

[9]: http://flask.pocoo.org

|

||||

[10]: https://git-scm.com

|

||||

[11]: https://virtualenv.pypa.io/en/stable

|

||||

[12]: https://github.com/hult/facebook-chatbot-python

|

||||

[13]: https://github.com/hult

|

||||

[14]: https://devcenter.heroku.com/articles/python-gunicorn

|

||||

[15]: https://pip.pypa.io/en/stable/installing

|

||||

[16]: https://toolbelt.heroku.com

|

||||