mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-03-03 01:10:13 +08:00

Merge remote-tracking branch 'LCTT/master'

commit 14c18c1f32

@@ -1,3 +1,3 @@
 language: c
 script:
-  - make -s check
+  - sh ./scripts/check.sh

58 Makefile
@@ -1,58 +0,0 @@
DIR_PATTERN := (news|talk|tech)
NAME_PATTERN := [0-9]{8} [a-zA-Z0-9_.,() -]*\.md

RULES := rule-source-added \
         rule-translation-requested \
         rule-translation-completed \
         rule-translation-revised \
         rule-translation-published
.PHONY: check match $(RULES)

CHANGE_FILE := /tmp/changes

check: $(CHANGE_FILE)
	echo 'PR #$(TRAVIS_PULL_REQUEST) Changes:'
	cat $(CHANGE_FILE)
	echo
	echo 'Check for rules...'
	make -k $(RULES) 2>/dev/null | grep '^Rule Matched: '

$(CHANGE_FILE):
	git --no-pager diff $(TRAVIS_BRANCH) origin/master --no-renames --name-status > $@

rule-source-added:
	echo 'Unmatched Files:'
	egrep -v '^A\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) || true
	echo '[End of Unmatched Files]'
	[ $(shell egrep '^A\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) -ge 1 ]
	[ $(shell egrep -v '^A\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 0 ]
	echo 'Rule Matched: $(@)'

rule-translation-requested:
	[ $(shell egrep '^M\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 1 ]
	echo 'Rule Matched: $(@)'

rule-translation-completed:
	[ $(shell egrep '^D\s*"?sources/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell egrep '^A\s*"?translated/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 2 ]
	echo 'Rule Matched: $(@)'

rule-translation-revised:
	[ $(shell egrep '^M\s*"?translated/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 1 ]
	echo 'Rule Matched: $(@)'

rule-translation-published:
	[ $(shell egrep '^D\s*"?translated/$(DIR_PATTERN)/$(NAME_PATTERN)"?' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell egrep '^A\s*"?published/$(NAME_PATTERN)' $(CHANGE_FILE) | wc -l) = 1 ]
	[ $(shell cat $(CHANGE_FILE) | wc -l) = 2 ]
	echo 'Rule Matched: $(@)'

badge:
	mkdir -p build/badge
	./lctt-scripts/show_status.sh -s published >build/badge/published.svg
	./lctt-scripts/show_status.sh -s translated >build/badge/translated.svg
	./lctt-scripts/show_status.sh -s translating >build/badge/translating.svg
	./lctt-scripts/show_status.sh -s sources >build/badge/sources.svg

9 scripts/check.sh Normal file
@@ -0,0 +1,9 @@
#!/bin/bash
# PR check script
set -e

CHECK_DIR="$(dirname "$0")/check"
# sh "${CHECK_DIR}/check.sh" # requires dependencies; disabled for now
sh "${CHECK_DIR}/collect.sh"
sh "${CHECK_DIR}/analyze.sh"
sh "${CHECK_DIR}/identify.sh"

43 scripts/check/analyze.sh Normal file
@@ -0,0 +1,43 @@
#!/bin/sh
# PR file change analysis
set -e

# Load shared constants and functions
# shellcheck source=common.inc.sh
. "$(dirname "$0")/common.inc.sh"

################################################################################
# Reads:
# - /tmp/changes # list of file changes
# Writes:
# - /tmp/stats # file change statistics
################################################################################

# Run the analysis and print the statistics to standard output
do_analyze() {
  cat /dev/null > /tmp/stats
  OTHER_REGEX='^$'
  for TYPE in 'SRC' 'TSL' 'PUB'; do
    for STAT in 'A' 'M' 'D'; do
      # Count each operation for each category
      REGEX="$(get_operation_regex "$STAT" "$TYPE")"
      OTHER_REGEX="${OTHER_REGEX}|${REGEX}"
      eval "${TYPE}_${STAT}=\"\$(grep -Ec '$REGEX' /tmp/changes)\"" || true
      eval echo "${TYPE}_${STAT}=\$${TYPE}_${STAT}"
    done
  done

  # Count all other operations
  OTHER="$(grep -Evc "$OTHER_REGEX" /tmp/changes)" || true
  echo "OTHER=$OTHER"

  # Count the total number of changes
  TOTAL="$(wc -l < /tmp/changes )"
  echo "TOTAL=$TOTAL"
}


echo "[analyze] Counting file changes..."
do_analyze > /tmp/stats
echo "[analyze] Statistics written:"
cat /tmp/stats
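
As a rough illustration of the counting step in scripts/check/analyze.sh, the sketch below classifies a few sample `git diff --name-status` lines by status letter and top-level directory. It is not part of the commit: `operation_regex`, `count_operation`, the temporary file, and the sample paths are all hypothetical, and it uses the POSIX class `[[:space:]]` where the committed script relies on `\s`.

```shell
#!/bin/sh
# Sketch only: mirrors the idea of analyze.sh on made-up data.
set -e

SRC_DIR='sources'
TSL_DIR='translated'
PUB_DIR='published'

# Build the regex for one status letter (A/M/D) and one category (SRC/TSL/PUB).
operation_regex() {
  eval "dir=\${${2}_DIR}"
  printf '^%s[[:space:]]+"?%s/' "$1" "$dir"
}

# Count the lines of a change list ($3) that match one status/category pair.
# grep -c exits non-zero on zero matches, hence the "|| true".
count_operation() {
  grep -Ec "$(operation_regex "$1" "$2")" "$3" || true
}

# Hypothetical change list standing in for /tmp/changes.
CHANGES="$(mktemp)"
printf 'D\tsources/tech/20180101 Example.md\n'    >  "$CHANGES"
printf 'A\ttranslated/tech/20180101 Example.md\n' >> "$CHANGES"
printf 'M\tREADME.md\n'                           >> "$CHANGES"

echo "SRC_D=$(count_operation D SRC "$CHANGES")"
echo "TSL_A=$(count_operation A TSL "$CHANGES")"
echo "PUB_A=$(count_operation A PUB "$CHANGES")"
echo "TOTAL=$(wc -l < "$CHANGES" | tr -d ' ')"
rm -f "$CHANGES"
```

With this sample list, one source deletion and one translation addition are counted, while the `README.md` change matches no category and only contributes to the total.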

10 scripts/check/check.sh Normal file
@@ -0,0 +1,10 @@
#!/bin/bash
# Check the status of the scripts
set -e

################################################################################
# For development use only, for now
################################################################################

shellcheck -e SC2034 -x mock/stats.sh "$(dirname "$0")"/*.sh \
  && echo '[check] ShellCheck passed'

37 scripts/check/collect.sh Normal file
@@ -0,0 +1,37 @@
#!/bin/bash
# Collect PR file changes
set -e

################################################################################
# Reads: (none)
# Writes:
# - /tmp/changes # list of file changes
################################################################################


echo "[collect] Computing the fork point between the PR branch and the target branch..."

TARGET_BRANCH="${TRAVIS_BRANCH:-master}"
echo "[collect] Target branch set to: ${TARGET_BRANCH}"

MERGE_BASE='HEAD^'
[ "$TRAVIS_PULL_REQUEST" != 'false' ] \
  && MERGE_BASE="$(git merge-base "$TARGET_BRANCH" HEAD)"
echo "[collect] Found fork point: ${MERGE_BASE}"

echo "[collect] Change summary:"
git --no-pager show --summary "${MERGE_BASE}..HEAD"

{
  git --no-pager log --oneline "${MERGE_BASE}..HEAD" | grep -Eq '绕过检查' && { # '绕过检查' means "bypass check"
    touch /tmp/bypass
    echo "[collect] Marked as bypassing the checks"
  }
} || true

echo "[collect] Writing the list of file changes..."

git diff "$MERGE_BASE" HEAD --no-renames --name-status > /tmp/changes
echo "[collect] List of file changes written:"
cat /tmp/changes
{ [ -z "$(cat /tmp/changes)" ] && echo "(no changes)"; } || true

30 scripts/check/common.inc.sh Normal file
@@ -0,0 +1,30 @@
#!/bin/sh

################################################################################
# Shared constants and functions
################################################################################

# Category directories
export SRC_DIR='sources' # untranslated
export TSL_DIR='translated' # translated
export PUB_DIR='published' # published

# Matching patterns
export CATE_PATTERN='(news|talk|tech)' # category
export FILE_PATTERN='[0-9]{8} [a-zA-Z0-9_.,() -]*\.md' # file name

# Usage: get_operation_regex STATUS TYPE
#
# STATUS is one of:
# - A: added
# - M: modified
# - D: deleted
# TYPE is one of:
# - SRC: untranslated
# - TSL: translated
# - PUB: published
get_operation_regex() {
  STAT="$1"
  TYPE="$2"
  echo "^${STAT}\\s+\"?$(eval echo "\$${TYPE}_DIR")/"
}

86 scripts/check/identify.sh Normal file
@@ -0,0 +1,86 @@
#!/bin/bash
# Match PR rules
set -e

################################################################################
# Reads:
# - /tmp/stats
# Writes: (none)
################################################################################

# Load shared constants and functions
# shellcheck source=common.inc.sh
. "$(dirname "$0")/common.inc.sh"

echo "[identify] Loading statistics..."
# Load the statistics
# shellcheck source=mock/stats.sh
. /tmp/stats

# Define the PR rules

# Bypass check: skip the PR checks
rule_bypass_check() {
  [ -f /tmp/bypass ] && echo "Rule matched: bypass check"
}

# Source added: at least one source article is added
rule_source_added() {
  [ "$SRC_A" -ge 1 ] \
    && [ "$TOTAL" -eq "$SRC_A" ] && echo "Rule matched: ${SRC_A} source article(s) added"
}

# Translation requested: only one source article may be claimed
rule_translation_requested() {
  [ "$SRC_M" -eq 1 ] \
    && [ "$TOTAL" -eq 1 ] && echo "Rule matched: translation requested"
}

# Translation completed: only one translation may be submitted
rule_translation_completed() {
  [ "$SRC_D" -eq 1 ] && [ "$TSL_A" -eq 1 ] \
    && [ "$TOTAL" -eq 2 ] && echo "Rule matched: translation completed"
}

# Translation revised: only one translation may be proofread
rule_translation_revised() {
  [ "$TSL_M" -eq 1 ] \
    && [ "$TOTAL" -eq 1 ] && echo "Rule matched: translation revised"
}

# Translation published: several translations may be published at once
rule_translation_published() {
  [ "$TSL_D" -ge 1 ] && [ "$PUB_A" -ge 1 ] && [ "$TSL_D" -eq "$PUB_A" ] \
    && [ "$TOTAL" -eq "$((TSL_D + PUB_A))" ] \
    && echo "Rule matched: ${PUB_A} translation(s) published"
}

# Define common errors

# Unknown error
error_undefined() {
  echo "Unknown error: no rule matched; try operating on only one article"
}

# Multiple articles requested
error_translation_requested_multiple() {
  [ "$SRC_M" -gt 1 ] \
    && echo "Error matched: multiple articles requested; please request only one at a time"
}

# Run the checks and print the matching item
do_check() {
  rule_bypass_check \
    || rule_source_added \
    || rule_translation_requested \
    || rule_translation_completed \
    || rule_translation_revised \
    || rule_translation_published \
    || {
      error_translation_requested_multiple \
        || error_undefined
      exit 1
    }
}

do_check
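
To make the rule chain in scripts/check/identify.sh easier to follow, here is a minimal sketch that applies the same conditions to statistics passed as arguments instead of reading /tmp/stats. It is an illustration rather than code from the commit: `match_rule` and its argument order are hypothetical, the printed rule names are shortened labels, and the bypass rule is left out because it depends on a marker file.

```shell
#!/bin/sh
# Hypothetical helper: print the name of the first rule the statistics satisfy.
# Arguments: SRC_A SRC_M SRC_D TSL_A TSL_M TSL_D PUB_A TOTAL
match_rule() {
  SRC_A=$1 SRC_M=$2 SRC_D=$3 TSL_A=$4 TSL_M=$5 TSL_D=$6 PUB_A=$7 TOTAL=$8
  if [ "$SRC_A" -ge 1 ] && [ "$TOTAL" -eq "$SRC_A" ]; then
    echo source-added
  elif [ "$SRC_M" -eq 1 ] && [ "$TOTAL" -eq 1 ]; then
    echo translation-requested
  elif [ "$SRC_D" -eq 1 ] && [ "$TSL_A" -eq 1 ] && [ "$TOTAL" -eq 2 ]; then
    echo translation-completed
  elif [ "$TSL_M" -eq 1 ] && [ "$TOTAL" -eq 1 ]; then
    echo translation-revised
  elif [ "$TSL_D" -ge 1 ] && [ "$TSL_D" -eq "$PUB_A" ] \
      && [ "$TOTAL" -eq "$((TSL_D + PUB_A))" ]; then
    echo translation-published
  else
    # No rule applies, e.g. several sources modified in one PR.
    echo no-match
    return 1
  fi
}

# One source deleted and one translation added: a completed translation.
match_rule 0 0 1 1 0 0 0 2
# Three sources modified at once: rejected.
match_rule 0 3 0 0 0 0 0 3 || echo "rejected"
```

Every rule also compares its own counts against `TOTAL`, so a pull request that touches any file outside the expected pattern falls through to the error branch.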

13 scripts/check/mock/stats.sh Normal file
@@ -0,0 +1,13 @@
#!/bin/sh
# Mock statistics for ShellCheck
SRC_A=0
SRC_M=0
SRC_D=0
TSL_A=0
TSL_M=0
TSL_D=0
PUB_A=0
PUB_M=0
PUB_D=0
OTHER=0
TOTAL=0

122 sources/talk/20180916 The Rise and Demise of RSS.md Normal file
@@ -0,0 +1,122 @@
The Rise and Demise of RSS
======


There are two stories here. The first is a story about a vision of the web’s future that never quite came to fruition. The second is a story about how a collaborative effort to improve a popular standard devolved into one of the most contentious forks in the history of open-source software development.


In the late 1990s, in the go-go years between Netscape’s IPO and the Dot-com crash, everyone could see that the web was going to be an even bigger deal than it already was, even if they didn’t know exactly how it was going to get there. One theory was that the web was about to be revolutionized by syndication. The web, originally built to enable a simple transaction between two parties—a client fetching a document from a single host server—would be broken open by new standards that could be used to repackage and redistribute entire websites through a variety of channels. Kevin Werbach, writing for Release 1.0, a newsletter influential among investors in the 1990s, predicted that syndication “would evolve into the core model for the Internet economy, allowing businesses and individuals to retain control over their online personae while enjoying the benefits of massive scale and scope.” He invited his readers to imagine a future in which fencing aficionados, rather than going directly to an “online sporting goods site” or “fencing equipment retailer,” could buy a new épée directly through e-commerce widgets embedded into their favorite website about fencing. Just like in the television world, where big networks syndicate their shows to smaller local stations, syndication on the web would allow businesses and publications to reach consumers through a multitude of intermediary sites. This would mean, as a corollary, that consumers would gain significant control over where and how they interacted with any given business or publication on the web.


RSS was one of the standards that promised to deliver this syndicated future. To Werbach, RSS was “the leading example of a lightweight syndication protocol.” Another contemporaneous article called RSS the first protocol to realize the potential of XML. It was going to be a way for both users and content aggregators to create their own customized channels out of everything the web had to offer. And yet, two decades later, RSS [appears to be a dying technology][1], now used chiefly by podcasters and programmers with tech blogs. Moreover, among that latter group, RSS is perhaps used as much for its political symbolism as its actual utility. Though of course some people really do have RSS readers, stubbornly adding an RSS feed to your blog, even in 2018, is a reactionary statement. That little tangerine bubble has become a wistful symbol of defiance against a centralized web increasingly controlled by a handful of corporations, a web that hardly resembles the syndicated web of Werbach’s imagining.


The future once looked so bright for RSS. What happened? Was its downfall inevitable, or was it precipitated by the bitter infighting that thwarted the development of a single RSS standard?


### Muddied Water


RSS was invented twice. This meant it never had an obvious owner, a state of affairs that spawned endless debate and acrimony. But it also suggests that RSS was an important idea whose time had come.


In 1998, Netscape was struggling to envision a future for itself. Its flagship product, the Netscape Navigator web browser—once preferred by 80% of web users—was quickly losing ground to Internet Explorer. So Netscape decided to compete in a new arena. In May, a team was brought together to start work on what was known internally as “Project 60.” Two months later, Netscape announced “My Netscape,” a web portal that would fight it out with other portals like Yahoo, MSN, and Excite.


The following year, in March, Netscape announced an addition to the My Netscape portal called the “My Netscape Network.” My Netscape users could now customize their My Netscape page so that it contained “channels” featuring the most recent headlines from sites around the web. As long as your favorite website published a special file in a format dictated by Netscape, you could add that website to your My Netscape page, typically by clicking an “Add Channel” button that participating websites were supposed to add to their interfaces. A little box containing a list of linked headlines would then appear.


![A My Netscape Network Channel][2]


The special file that participating websites had to publish was an RSS file. In the My Netscape Network announcement, Netscape explained that RSS stood for “RDF Site Summary.” This was somewhat of a misnomer. RDF, or the Resource Description Framework, is basically a grammar for describing certain properties of arbitrary resources. (See [my article about the Semantic Web][3] if that sounds really exciting to you.) In 1999, a draft specification for RDF was being considered by the W3C. Though RSS was supposed to be based on RDF, the example RSS document Netscape actually released didn’t use any RDF tags at all, even if it declared the RDF XML namespace. In a document that accompanied the Netscape RSS specification, Dan Libby, one of the specification’s authors, explained that “in this release of MNN, Netscape has intentionally limited the complexity of the RSS format.” The specification was given the 0.90 version number, the idea being that subsequent versions would bring RSS more in line with the W3C’s XML specification and the evolving draft of the RDF specification.


RSS had been cooked up by Libby and another Netscape employee, Ramanathan Guha. Guha previously worked for Apple, where he came up with something called the Meta Content Framework. MCF was a format for representing metadata about anything from web pages to local files. Guha demonstrated its power by developing an application called [HotSauce][4] that visualized relationships between files as a network of nodes suspended in 3D space. After leaving Apple for Netscape, Guha worked with a Netscape consultant named Tim Bray to produce an XML-based version of MCF, which in turn became the foundation for the W3C’s RDF draft. It’s no surprise, then, that Guha and Libby were keen to incorporate RDF into RSS. But Libby later wrote that the original vision for an RDF-based RSS was pared back because of time constraints and the perception that RDF was “‘too complex’ for the ‘average user.’”


While Netscape was trying to win eyeballs in what became known as the “portal wars,” elsewhere on the web a new phenomenon known as “weblogging” was being pioneered. One of these pioneers was Dave Winer, CEO of a company called UserLand Software, which developed early content management systems that made blogging accessible to people without deep technical fluency. Winer ran his own blog, [Scripting News][5], which today is one of the oldest blogs on the internet. More than a year before Netscape announced My Netscape Network, on December 15th, 1997, Winer published a post announcing that the blog would now be available in XML as well as HTML.


Dave Winer’s XML format became known as the Scripting News format. It was supposedly similar to Microsoft’s Channel Definition Format (a “push technology” standard submitted to the W3C in March, 1997), but I haven’t been able to find a file in the original format to verify that claim. Like Netscape’s RSS, it structured the content of Winer’s blog so that it could be understood by other software applications. When Netscape released RSS 0.90, Winer and UserLand Software began to support both formats. But Winer believed that Netscape’s format was “woefully inadequate” and “missing the key thing web writers and readers need.” It could only represent a list of links, whereas the Scripting News format could represent a series of paragraphs, each containing one or more links.


In June, 1999, two months after Netscape’s My Netscape Network announcement, Winer introduced a new version of the Scripting News format, called ScriptingNews 2.0b1. Winer claimed that he decided to move ahead with his own format only after trying but failing to get anyone at Netscape to care about RSS 0.90’s deficiencies. The new version of the Scripting News format added several items to the `<header>` element that brought the Scripting News format to parity with RSS. But the two formats continued to differ in that the Scripting News format, which Winer nicknamed the “fat” syndication format, could include entire paragraphs and not just links.


Netscape got around to releasing RSS 0.91 the very next month. The updated specification was a major about-face. RSS no longer stood for “RDF Site Summary”; it now stood for “Rich Site Summary.” All the RDF—and there was almost none anyway—was stripped out. Many of the Scripting News tags were incorporated. In the text of the new specification, Libby explained:


> RDF references removed. RSS was originally conceived as a metadata format providing a summary of a website. Two things have become clear: the first is that providers want more of a syndication format than a metadata format. The structure of an RDF file is very precise and must conform to the RDF data model in order to be valid. This is not easily human-understandable and can make it difficult to create useful RDF files. The second is that few tools are available for RDF generation, validation and processing. For these reasons, we have decided to go with a standard XML approach.


Winer was enormously pleased with RSS 0.91, calling it “even better than I thought it would be.” UserLand Software adopted it as a replacement for the existing ScriptingNews 2.0b1 format. For a while, it seemed that RSS finally had a single authoritative specification.


### The Great Fork


A year later, the RSS 0.91 specification had become woefully inadequate. There were all sorts of things people were trying to do with RSS that the specification did not address. There were other parts of the specification that seemed unnecessarily constraining—each RSS channel could only contain a maximum of 15 items, for example.


By that point, RSS had been adopted by several more organizations. Other than Netscape, which seemed to have lost interest after RSS 0.91, the big players were Dave Winer’s UserLand Software; O’Reilly Net, which ran an RSS aggregator called Meerkat; and Moreover.com, which also ran an RSS aggregator focused on news. Via mailing list, representatives from these organizations and others regularly discussed how to improve on RSS 0.91. But there were deep disagreements about what those improvements should look like.


The mailing list in which most of the discussion occurred was called the Syndication mailing list. [An archive of the Syndication mailing list][6] is still available. It is an amazing historical resource. It provides a moment-by-moment account of how those deep disagreements eventually led to a political rupture of the RSS community.


On one side of the coming rupture was Winer. Winer was impatient to evolve RSS, but he wanted to change it only in relatively conservative ways. In June, 2000, he published his own RSS 0.91 specification on the UserLand website, meant to be a starting point for further development of RSS. It made no significant changes to the 0.91 specification published by Netscape. Winer claimed in a blog post that accompanied his specification that it was only a “cleanup” documenting how RSS was actually being used in the wild, which was needed because the Netscape specification was no longer being maintained. In the same post, he argued that RSS had succeeded so far because it was simple, and that by adding namespaces or RDF back to the format—some had suggested this be done in the Syndication mailing list—it “would become vastly more complex, and IMHO, at the content provider level, would buy us almost nothing for the added complexity.” In a message to the Syndication mailing list sent around the same time, Winer suggested that these issues were important enough that they might lead him to create a fork:


> I’m still pondering how to move RSS forward. I definitely want ICE-like stuff in RSS2, publish and subscribe is at the top of my list, but I am going to fight tooth and nail for simplicity. I love optional elements. I don’t want to go down the namespaces and schema road, or try to make it a dialect of RDF. I understand other people want to do this, and therefore I guess we’re going to get a fork. I have my own opinion about where the other fork will lead, but I’ll keep those to myself for the moment at least.


Arrayed against Winer were several other people, including Rael Dornfest of O’Reilly, Ian Davis (responsible for a search startup called Calaba), and a precocious, 14-year-old Aaron Swartz, who all thought that RSS needed namespaces in order to accommodate the many different things everyone wanted to do with it. On another mailing list hosted by O’Reilly, Davis proposed a namespace-based module system, writing that such a system would “make RSS as extensible as we like rather than packing in new features that over-complicate the spec.” The “namespace camp” believed that RSS would soon be used for much more than the syndication of blog posts, so namespaces, rather than being a complication, were the only way to keep RSS from becoming unmanageable as it supported more and more use cases.


At the root of this disagreement about namespaces was a deeper disagreement about what RSS was even for. Winer had invented his Scripting News format to syndicate the posts he wrote for his blog. Guha and Libby at Netscape had designed RSS and called it “RDF Site Summary” because in their minds it was a way of recreating a site in miniature within Netscape’s online portal. Davis, writing to the Syndication mailing list, explained his view that RSS was “originally conceived as a way of building mini sitemaps,” and that now he and others wanted to expand RSS “to encompass more types of information than simple news headlines and to cater for the new uses of RSS that have emerged over the last 12 months.” Winer wrote a prickly reply, stating that his Scripting News format was in fact the original RSS and that it had been meant for a different purpose. Given that the people most involved in the development of RSS disagreed about why RSS had even been created, a fork seems to have been inevitable.


The fork happened after Dornfest announced a proposed RSS 1.0 specification and formed the RSS-DEV Working Group—which would include Davis, Swartz, and several others but not Winer—to get it ready for publication. In the proposed specification, RSS once again stood for “RDF Site Summary,” because RDF had been added back in to represent metadata properties of certain RSS elements. The specification acknowledged Winer by name, giving him credit for popularizing RSS through his “evangelism.” But it also argued that just adding more elements to RSS without providing for extensibility with a module system—that is, what Winer was suggesting—“sacrifices scalability.” The specification went on to define a module system for RSS based on XML namespaces.


Winer was furious that the RSS-DEV Working Group had arrogated the “RSS 1.0” name for themselves. In another mailing list about decentralization, he described what the RSS-DEV Working Group had done as theft. Other members of the Syndication mailing list also felt that the RSS-DEV Working Group should not have used the name “RSS” without unanimous agreement from the community on how to move RSS forward. But the Working Group stuck with the name. Dan Brickley, another member of the RSS-DEV Working Group, defended this decision by arguing that “RSS 1.0 as proposed is solidly grounded in the original RSS vision, which itself had a long heritage going back to MCF (an RDF precursor) and related specs (CDF etc).” He essentially felt that the RSS 1.0 effort had a better claim to the RSS name than Winer did, since RDF had originally been a part of RSS. The RSS-DEV Working Group published a final version of their specification in December. That same month, Winer published his own improvement to RSS 0.91, which he called RSS 0.92, on UserLand’s website. RSS 0.92 made several small optional improvements to RSS, among which was the addition of the `<enclosure>` tag soon used by podcasters everywhere. RSS had officially forked.


It’s not clear to me why a better effort was not made to involve Winer in the RSS-DEV Working Group. He was a prominent contributor to the Syndication mailing list and obviously responsible for much of RSS’ popularity, as the members of the Working Group themselves acknowledged. But Tim O’Reilly, founder and CEO of O’Reilly, explained in a UserLand discussion group that Winer more or less refused to participate:


> A group of people involved in RSS got together to start thinking about its future evolution. Dave was part of the group. When the consensus of the group turned in a direction he didn’t like, Dave stopped participating, and characterized it as a plot by O’Reilly to take over RSS from him, despite the fact that Rael Dornfest of O’Reilly was only one of about a dozen authors of the proposed RSS 1.0 spec, and that many of those who were part of its development had at least as long a history with RSS as Dave had.


To this, Winer said:


> I met with Dale [Dougherty] two weeks before the announcement, and he didn’t say anything about it being called RSS 1.0. I spoke on the phone with Rael the Friday before it was announced, again he didn’t say that they were calling it RSS 1.0. The first I found out about it was when it was publicly announced.
>
> Let me ask you a straight question. If it turns out that the plan to call the new spec “RSS 1.0” was done in private, without any heads-up or consultation, or for a chance for the Syndication list members to agree or disagree, not just me, what are you going to do?
>
> UserLand did a lot of work to create and popularize and support RSS. We walked away from that, and let your guys have the name. That’s the top level. If I want to do any further work in Web syndication, I have to use a different name. Why and how did that happen Tim?


I have not been able to find a discussion in the Syndication mailing list about using the RSS 1.0 name prior to the announcement of the RSS 1.0 proposal.


RSS would fork again in 2003, when several developers frustrated with the bickering in the RSS community sought to create an entirely new format. These developers created Atom, a format that did away with RDF but embraced XML namespaces. Atom would eventually be specified by [a proposed IETF standard][7]. After the introduction of Atom, there were three competing versions of RSS: Winer’s RSS 0.92 (updated to RSS 2.0 in 2002 and renamed “Really Simple Syndication”), the RSS-DEV Working Group’s RSS 1.0, and Atom.


### Decline


The proliferation of competing RSS specifications may have hampered RSS in other ways that I’ll discuss shortly. But it did not stop RSS from becoming enormously popular during the 2000s. By 2004, the New York Times had started offering its headlines in RSS and had written an article explaining to the layperson what RSS was and how to use it. Google Reader, an RSS aggregator ultimately used by millions, was launched in 2005. By 2013, RSS seemed popular enough that the New York Times, in its obituary for Aaron Swartz, called the technology “ubiquitous.” For a while, before a third of the planet had signed up for Facebook, RSS was simply how many people stayed abreast of news on the internet.


The New York Times published Swartz’ obituary in January, 2013. By that point, though, RSS had actually turned a corner and was well on its way to becoming an obscure technology. Google Reader was shut down in July, 2013, ostensibly because user numbers had been falling “over the years.” This prompted several articles from various outlets declaring that RSS was dead. But people had been declaring that RSS was dead for years, even before Google Reader’s shuttering. Steve Gillmor, writing for TechCrunch in May, 2009, advised that “it’s time to get completely off RSS and switch to Twitter” because “RSS just doesn’t cut it anymore.” He pointed out that Twitter was basically a better RSS feed, since it could show you what people thought about an article in addition to the article itself. It allowed you to follow people and not just channels. Gillmor told his readers that it was time to let RSS recede into the background. He ended his article with a verse from Bob Dylan’s “Forever Young.”

|

||||

|

||||

Today, RSS is not dead. But neither is it anywhere near as popular as it once was. Lots of people have offered explanations for why RSS lost its broad appeal. Perhaps the most persuasive explanation is exactly the one offered by Gillmor in 2009. Social networks, just like RSS, provide a feed featuring all the latest news on the internet. Social networks took over from RSS because they were simply better feeds. They also provide more benefits to the companies that own them. Some people have accused Google, for example, of shutting down Google Reader in order to encourage people to use Google+. Google might have been able to monetize Google+ in a way that it could never have monetized Google Reader. Marco Arment, the creator of Instapaper, wrote on his blog in 2013:

|

||||

|

||||

> Google Reader is just the latest casualty of the war that Facebook started, seemingly accidentally: the battle to own everything. While Google did technically “own” Reader and could make some use of the huge amount of news and attention data flowing through it, it conflicted with their far more important Google+ strategy: they need everyone reading and sharing everything through Google+ so they can compete with Facebook for ad-targeting data, ad dollars, growth, and relevance.

|

||||

|

||||

So both users and technology companies realized that they got more out of using social networks than they did out of RSS.

|

||||

|

||||

Another theory is that RSS was always too geeky for regular people. Even the New York Times, which seems to have been eager to adopt RSS and promote it to its audience, complained in 2006 that RSS is a “not particularly user friendly” acronym coined by “computer geeks.” Before the RSS icon was designed in 2004, websites like the New York Times linked to their RSS feeds using little orange boxes labeled “XML,” which can only have been intimidating. The label was perfectly accurate though, because back then clicking the link would take a hapless user to a page full of XML. [This great tweet][8] captures the essence of this explanation for RSS’ demise. Regular people never felt comfortable using RSS; it hadn’t really been designed as a consumer-facing technology and involved too many hurdles; people jumped ship as soon as something better came along.

|

||||

|

||||

RSS might have been able to overcome some of these limitations if it had been further developed. Maybe RSS could have been extended somehow so that friends subscribed to the same channel could syndicate their thoughts about an article to each other. But whereas a company like Facebook was able to “move fast and break things,” the RSS developer community was stuck trying to achieve consensus. The Great RSS Fork only demonstrates how difficult it was to do that. So if we are asking ourselves why RSS is no longer popular, a good first-order explanation is that social networks supplanted it. If we ask ourselves why social networks were able to supplant it, then the answer may be that the people trying to make RSS succeed faced a problem much harder than, say, building Facebook. As Dornfest wrote to the Syndication mailing list at one point, “currently it’s the politics far more than the serialization that’s far from simple.”

|

||||

|

||||

So today we are left with centralized silos of information. In a way, we do have the syndicated internet that Kevin Werbach foresaw in 1999. After all, The Onion is a publication that relies on syndication through Facebook and Twitter the same way that Seinfeld relied on syndication to rake in millions after the end of its original run. But syndication on the web only happens through one of a very small number of channels, meaning that none of us “retain control over our online personae” the way that Werbach thought we would. One reason this happened is garden-variety corporate rapaciousness—RSS, an open format, didn’t give technology companies the control over data and eyeballs that they needed to sell ads, so they did not support it. But the more mundane reason is that centralized silos are just easier to design than common standards. Consensus is difficult to achieve and it takes time, but without consensus spurned developers will go off and create competing standards. The lesson here may be that if we want to see a better, more open web, we have to get better at not screwing each other over.

|

||||

|

||||

If you enjoyed this post, more like it come out every two weeks! Follow [@TwoBitHistory][9] on Twitter or subscribe to the [RSS feed][10] to make sure you know when a new post is out.

|

||||

|

||||

Previously on TwoBitHistory…

|

||||

|

||||

> New post: This week we're traveling back in time in our DeLorean to see what it was like learning to program on early home computers.<https://t.co/qDrwqgIuuy>

|

||||

>

|

||||

> — TwoBitHistory (@TwoBitHistory) [September 2, 2018][11]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://twobithistory.org/2018/09/16/the-rise-and-demise-of-rss.html

|

||||

|

||||

Author: [Two-Bit History][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

[a]: https://twobithistory.org

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://trends.google.com/trends/explore?date=all&geo=US&q=rss

|

||||

[2]: https://twobithistory.org/images/mnn-channel.gif

|

||||

[3]: https://twobithistory.org/2018/05/27/semantic-web.html

|

||||

[4]: http://web.archive.org/web/19970703020212/http://mcf.research.apple.com:80/hs/screen_shot.html

|

||||

[5]: http://scripting.com/

|

||||

[6]: https://groups.yahoo.com/neo/groups/syndication/info

|

||||

[7]: https://tools.ietf.org/html/rfc4287

|

||||

[8]: https://twitter.com/mgsiegler/status/311992206716203008

|

||||

[9]: https://twitter.com/TwoBitHistory

|

||||

[10]: https://twobithistory.org/feed.xml

|

||||

[11]: https://twitter.com/TwoBitHistory/status/1036295112375115778?ref_src=twsrc%5Etfw

|

||||

@ -0,0 +1,126 @@

|

||||

How Lisp Became God's Own Programming Language

|

||||

======

|

||||

When programmers discuss the relative merits of different programming languages, they often talk about them in prosaic terms as if they were so many tools in a tool belt—one might be more appropriate for systems programming, another might be more appropriate for gluing together other programs to accomplish some ad hoc task. This is as it should be. Languages have different strengths and claiming that a language is better than other languages without reference to a specific use case only invites an unproductive and vitriolic debate.

|

||||

|

||||

But there is one language that seems to inspire a peculiar universal reverence: Lisp. Keyboard crusaders that would otherwise pounce on anyone daring to suggest that some language is better than any other will concede that Lisp is on another level. Lisp transcends the utilitarian criteria used to judge other languages, because the median programmer has never used Lisp to build anything practical and probably never will, yet the reverence for Lisp runs so deep that Lisp is often ascribed mystical properties. Everyone’s favorite webcomic, xkcd, has depicted Lisp this way at least twice: In [one comic][1], a character reaches some sort of Lisp enlightenment, which appears to allow him to comprehend the fundamental structure of the universe. In [another comic][2], a robed, senescent programmer hands a stack of parentheses to his padawan, saying that the parentheses are “elegant weapons for a more civilized age,” suggesting that Lisp has all the occult power of the Force.

|

||||

|

||||

Another great example is Bob Kanefsky’s parody of a song called “God Lives on Terra.” His parody, written in the mid-1990s and called “Eternal Flame”, describes how God must have created the world using Lisp. The following is an excerpt, but the full set of lyrics can be found in the [GNU Humor Collection][3]:

|

||||

|

||||

> For God wrote in Lisp code

|

||||

> When he filled the leaves with green.

|

||||

> The fractal flowers and recursive roots:

|

||||

> The most lovely hack I’ve seen.

|

||||

> And when I ponder snowflakes,

|

||||

> never finding two the same,

|

||||

> I know God likes a language

|

||||

> with its own four-letter name.

|

||||

|

||||

I can only speak for myself, I suppose, but I think this “Lisp Is Arcane Magic” cultural meme is the most bizarre and fascinating thing ever. Lisp was concocted in the ivory tower as a tool for artificial intelligence research, so it was always going to be unfamiliar and maybe even a bit mysterious to the programming laity. But programmers now [urge each other to “try Lisp before you die”][4] as if it were some kind of mind-expanding psychedelic. They do this even though Lisp is now the second-oldest programming language in widespread use, younger only than Fortran, and even then by just one year. Imagine if your job were to promote some new programming language on behalf of the organization or team that created it. Wouldn’t it be great if you could convince everyone that your new language had divine powers? But how would you even do that? How does a programming language come to be known as a font of hidden knowledge?

|

||||

|

||||

How did Lisp get to be this way?

|

||||

|

||||

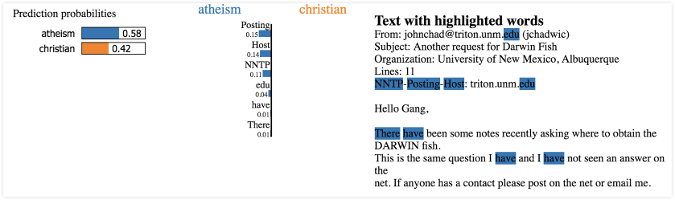

![Byte Magazine Cover, August, 1979.][5]

|

||||

The cover of Byte Magazine, August, 1979.

|

||||

|

||||

### Theory A: The Axiomatic Language

|

||||

|

||||

John McCarthy, Lisp’s creator, did not originally intend for Lisp to be an elegant distillation of the principles of computation. But, after one or two fortunate insights and a series of refinements, that’s what Lisp became. Paul Graham—we will talk about him some more later—has written that, with Lisp, McCarthy “did for programming something like what Euclid did for geometry.” People might see a deeper meaning in Lisp because McCarthy built Lisp out of parts so fundamental that it is hard to say whether he invented it or discovered it.

|

||||

|

||||

McCarthy began thinking about creating a language during the 1956 Dartmouth Summer Research Project on Artificial Intelligence. The Summer Research Project was in effect an ongoing, multi-week academic conference, the very first in the field of artificial intelligence. McCarthy, then an assistant professor of Mathematics at Dartmouth, had actually coined the term “artificial intelligence” when he proposed the event. About ten people attended the conference for its entire duration. Among them were Allen Newell and Herbert Simon, two researchers affiliated with the RAND Corporation and Carnegie Mellon who had just designed a language called IPL.

|

||||

|

||||

Newell and Simon had been trying to build a system capable of generating proofs in propositional calculus. They realized that it would be hard to do this while working at the level of the computer’s native instruction set, so they decided to create a language (or, as they called it, a “pseudo-code”) that would help them more naturally express the workings of their “Logic Theory Machine.” Their language, called IPL for “Information Processing Language”, was more of a high-level assembly dialect than a programming language in the sense we mean today. Newell and Simon, perhaps referring to Fortran, noted that other “pseudo-codes” then in development were “preoccupied” with representing equations in standard mathematical notation. Their language focused instead on representing sentences in propositional calculus as lists of symbolic expressions. Programs in IPL would basically leverage a series of assembly-language macros to manipulate and evaluate expressions within one or more of these lists.

|

||||

|

||||

McCarthy thought that having algebraic expressions in a language, Fortran-style, would be useful. So he didn’t like IPL very much. But he thought that symbolic lists were a good way to model problems in artificial intelligence, particularly problems involving deduction. This was the germ of McCarthy’s desire to create an algebraic list processing language, a language that would resemble Fortran but also be able to process symbolic lists like IPL.

|

||||

|

||||

Of course, Lisp today does not resemble Fortran. Over the next few years, McCarthy’s ideas about what an ideal list processing language should look like evolved. His ideas began to change in 1957, when he started writing routines for a chess-playing program in Fortran. The prolonged exposure to Fortran convinced McCarthy that there were several infelicities in its design, chief among them the awkward `IF` statement. McCarthy invented an alternative, the “true” conditional expression, which returns sub-expression A if the supplied test succeeds and sub-expression B if the supplied test fails and which also only evaluates the sub-expression that actually gets returned. During the summer of 1958, when McCarthy worked to design a program that could perform differentiation, he realized that his “true” conditional expression made writing recursive functions easier and more natural. The differentiation problem also prompted McCarthy to devise the maplist function, which takes another function as an argument and applies it to all the elements in a list. This was useful for differentiating sums of arbitrarily many terms.
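
To make these two ideas concrete, here is a minimal sketch in Python rather than in Lisp or Fortran. The names `maplist` and `diff` and the tuple encoding of expressions are assumptions made for illustration, not McCarthy's notation, and `maplist` simply follows the description in the paragraph above. Python's `A if test else B` evaluates only the branch it returns, which is the property that lets the recursive definition stop at numbers and variables.

```python
def maplist(items, fn):
    """Apply fn to every element of items and collect the results."""
    return [fn(item) for item in items]


def diff(expr, var):
    """Differentiate expr with respect to var.

    An expression is a number, a variable name (str), a sum
    ("+", term1, term2, ...) or a two-factor product ("*", f, g).
    This encoding is hypothetical and chosen only for the sketch.
    """
    return (
        # Constants differentiate to zero.
        0 if isinstance(expr, (int, float))
        # A variable differentiates to 1 if it is var, otherwise 0.
        else (1 if expr == var else 0) if isinstance(expr, str)
        # A sum of arbitrarily many terms: differentiate each term.
        else ("+",) + tuple(maplist(expr[1:], lambda term: diff(term, var)))
        if expr[0] == "+"
        # Otherwise assume a two-factor product and use the product rule.
        else ("+", ("*", diff(expr[1], var), expr[2]),
                   ("*", expr[1], diff(expr[2], var)))
    )
```

Calling `diff(("+", "x", 3, ("*", 2, "x")), "x")` returns an unsimplified derivative with one entry per term of the sum. If both branches of a conditional were always evaluated, `expr[0]` would fail on a bare number, so the recursion depends on the conditional skipping the branch it does not return.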

|

||||

|

||||

None of these things could be expressed in Fortran, so, in the fall of 1958, McCarthy set some students to work implementing Lisp. Since McCarthy was now an assistant professor at MIT, these were all MIT students. As McCarthy and his students translated his ideas into running code, they made changes that further simplified the language. The biggest change involved Lisp’s syntax. McCarthy had originally intended for the language to include something called “M-expressions,” which would be a layer of syntactic sugar that made Lisp’s syntax resemble Fortran’s. Though M-expressions could be translated to S-expressions (the basic lists enclosed by parentheses that Lisp is known for), S-expressions were really a low-level representation meant for the machine. The only problem was that McCarthy had been denoting M-expressions using square brackets, and the IBM 026 keypunch that McCarthy’s team used at MIT did not have any square bracket keys on its keyboard. So the Lisp team stuck with S-expressions, using them to represent not just lists of data but function applications too. McCarthy and his students also made a few other simplifications, including a switch to prefix notation and a memory model change that meant the language only had one real type.
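
The point that one list notation serves for both data and function applications can be illustrated with a small sketch. It is written in Python, with nested Python lists standing in for S-expressions. The `OPERATORS` table and the `evaluate` function are hypothetical names invented for this example and cover only three arithmetic operators.

```python
import operator
from functools import reduce

# Hypothetical operator table; a real Lisp defines far more than this.
OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(sexpr):
    """Evaluate a nested list written in prefix notation."""
    if not isinstance(sexpr, list):
        return sexpr  # an atom (here, a number) evaluates to itself
    name, *args = sexpr  # prefix notation: the operator comes first
    values = [evaluate(arg) for arg in args]
    return reduce(OPERATORS[name], values)


# The list form of the S-expression (+ 1 (* 2 3)).
program = ["+", 1, ["*", 2, 3]]
```

Here `program` is an ordinary three-element list that can be inspected or rebuilt like any other data, and `evaluate(program)` treats the same list as a function application.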

|

||||

|

||||

In 1960, McCarthy published his famous paper on Lisp called “Recursive Functions of Symbolic Expressions and Their Computation by Machine.” By that time, the language had been pared down to such a degree that McCarthy realized he had the makings of “an elegant mathematical system” and not just another programming language. He later wrote that the many simplifications that had been made to Lisp turned it “into a way of describing computable functions much neater than the Turing machines or the general recursive definitions used in recursive function theory.” In his paper, he therefore presented Lisp both as a working programming language and as a formalism for studying the behavior of recursive functions.

|

||||

|

||||

McCarthy explained Lisp to his readers by building it up out of only a very small collection of rules. Paul Graham later retraced McCarthy’s steps, using more readable language, in his essay [“The Roots of Lisp”][6]. Graham is able to explain Lisp using only seven primitive operators, two different notations for functions, and a half-dozen higher-level functions defined in terms of the primitive operators. That Lisp can be specified by such a small sequence of basic rules no doubt contributes to its mystique. Graham has called McCarthy’s paper an attempt to “axiomatize computation.” I think that is a great way to think about Lisp’s appeal. Whereas other languages have clearly artificial constructs denoted by reserved words like `while` or `typedef` or `public static void`, Lisp’s design almost seems entailed by the very logic of computing. This quality and Lisp’s original connection to a field as esoteric as “recursive function theory” should make it no surprise that Lisp has so much prestige today.
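
As a rough illustration of how little is needed, the sketch below models the operators `atom`, `eq`, `car`, `cdr`, and `cons` in Python and then defines `null` and `append` using nothing else. Several things here are assumptions made for the example: lists are encoded as Python tuples, symbols as strings, and the two remaining operators, `quote` and `cond`, are special forms that Python literals and the conditional expression stand in for. It is not a translation of Graham's or McCarthy's code.

```python
def atom(x):
    """True for a symbol (a str here) or the empty list."""
    return isinstance(x, str) or x == ()


def eq(x, y):
    """True when x and y are the same atom, or both the empty list."""
    return atom(x) and atom(y) and x == y


def car(x):
    """First element of a non-empty list."""
    return x[0]


def cdr(x):
    """Everything after the first element."""
    return x[1:]


def cons(x, y):
    """A new list with x placed in front of list y."""
    return (x,) + y


def null(x):
    """Higher-level function: is x the empty list?"""
    return eq(x, ())


def append(x, y):
    """Higher-level function: concatenate two lists."""
    # The conditional expression plays the role of cond.
    return y if null(x) else cons(car(x), append(cdr(x), y))
```

With these definitions, `append(("a", "b"), ("c",))` builds the three-element list one `cons` at a time, without any built-in notion of concatenation.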

|

||||

|

||||

### Theory B: Machine of the Future

|

||||

|

||||

Two decades after its creation, Lisp had become, according to the famous [Hacker’s Dictionary][7], the “mother tongue” of artificial intelligence research. Early on, Lisp spread quickly, probably because its regular syntax made implementing it on new machines relatively straightforward. Later, researchers would keep using it because of how well it handled symbolic expressions, important in an era when so much of artificial intelligence was symbolic. Lisp was used in seminal artificial intelligence projects like the [SHRDLU natural language program][8], the [Macsyma algebra system][9], and the [ACL2 logic system][10].

|

||||

|

||||

By the mid-1970s, though, artificial intelligence researchers were running out of computer power. The PDP-10, in particular—everyone’s favorite machine for artificial intelligence work—had an 18-bit address space that increasingly was insufficient for Lisp AI programs. Many AI programs were also supposed to be interactive, and making a demanding interactive program perform well on a time-sharing system was challenging. The solution, originally proposed by Peter Deutsch at MIT, was to engineer a computer specifically designed to run Lisp programs. These Lisp machines, as I described in [my last post on Chaosnet][11], would give each user a dedicated processor optimized for Lisp. They would also eventually come with development environments written entirely in Lisp for hardcore Lisp programmers. Lisp machines, devised in an awkward moment at the tail of the minicomputer era but before the full flowering of the microcomputer revolution, were high-performance personal computers for the programming elite.

|

||||

|

||||

For a while, it seemed as if Lisp machines would be the wave of the future. Several companies sprang into existence and raced to commercialize the technology. The most successful of these companies was called Symbolics, founded by veterans of the MIT AI Lab. Throughout the 1980s, Symbolics produced a line of computers known as the 3600 series, which were popular in the AI field and in industries requiring high-powered computing. The 3600 series computers featured large screens, bit-mapped graphics, a mouse interface, and [powerful graphics and animation software][12]. These were impressive machines that enabled impressive programs. For example, Bob Culley, who worked in robotics research and contacted me via Twitter, was able to implement and visualize a path-finding algorithm on a Symbolics 3650 in 1985. He explained to me that bit-mapped graphics and object-oriented programming (available on Lisp machines via [the Flavors extension][13]) were very new in the 1980s. Symbolics was the cutting edge.

|

||||

|

||||

![Bob Culley's path-finding program.][14] Bob Culley’s path-finding program.

|

||||

|

||||

As a result, Symbolics machines were outrageously expensive. The Symbolics 3600 cost $110,000 in 1983. So most people could only marvel at the power of Lisp machines and the wizardry of their Lisp-writing operators from afar. But marvel they did. Byte Magazine featured Lisp and Lisp machines several times from 1979 through to the end of the 1980s. In the August, 1979 issue, a special on Lisp, the magazine’s editor raved about the new machines being developed at MIT with “gobs of memory” and “an advanced operating system.” He thought they sounded so promising that they would make the two prior years—which saw the launch of the Apple II, the Commodore PET, and the TRS-80—look boring by comparison. A half decade later, in 1985, a Byte Magazine contributor described writing Lisp programs for the “sophisticated, superpowerful Symbolics 3670” and urged his audience to learn Lisp, claiming it was both “the language of choice for most people working in AI” and soon to be a general-purpose programming language as well.

|

||||

|

||||

I asked Paul McJones, who has done lots of Lisp [preservation work][15] for the Computer History Museum in Mountain View, about when people first began talking about Lisp as if it were a gift from higher-dimensional beings. He said that the inherent properties of the language no doubt had a lot to do with it, but he also said that the close association between Lisp and the powerful artificial intelligence applications of the 1960s and 1970s probably contributed too. When Lisp machines became available for purchase in the 1980s, a few more people outside of places like MIT and Stanford were exposed to Lisp’s power and the legend grew. Today, Lisp machines and Symbolics are little remembered, but they helped keep the mystique of Lisp alive through to the late 1980s.

|

||||

|

||||

### Theory C: Learn to Program

|

||||

|

||||

In 1985, MIT professors Harold Abelson and Gerald Sussman, along with Sussman’s wife, Julie Sussman, published a textbook called Structure and Interpretation of Computer Programs. The textbook introduced readers to programming using the language Scheme, a dialect of Lisp. It was used to teach MIT’s introductory programming class for two decades. My hunch is that SICP (as the title is commonly abbreviated) about doubled Lisp’s “mystique factor.” SICP took Lisp and showed how it could be used to illustrate deep, almost philosophical concepts in the art of computer programming. Those concepts were general enough that any language could have been used, but SICP’s authors chose Lisp. As a result, Lisp’s reputation was augmented by the notoriety of this bizarre and brilliant book, which has intrigued generations of programmers (and also become [a very strange meme][16]). Lisp had always been “McCarthy’s elegant formalism”; now it was also “that language that teaches you the hidden secrets of programming.”

|

||||

|

||||

It’s worth dwelling for a while on how weird SICP really is, because I think the book’s weirdness and Lisp’s weirdness get conflated today. The weirdness starts with the book’s cover. It depicts a wizard or alchemist approaching a table, prepared to perform some sort of sorcery. In one hand he holds a set of calipers or a compass, in the other he holds a globe inscribed with the words “eval” and “apply.” A woman opposite him gestures at the table; in the background, the Greek letter lambda floats in mid-air, radiating light.

|

||||

|

||||

![The cover art for SICP.][17] The cover art for SICP.

|

||||

|

||||

Honestly, what is going on here? Why does the table have animal feet? Why is the woman gesturing at the table? What is the significance of the inkwell? Are we supposed to conclude that the wizard has unlocked the hidden mysteries of the universe, and that those mysteries consist of the “eval/apply” loop and the Lambda Calculus? It would seem so. This image alone must have done an enormous amount to shape how people talk about Lisp today.

|

||||

|

||||

But the text of the book itself is often just as weird. SICP is unlike most other computer science textbooks that you have ever read. Its authors explain in the foreword to the book that the book is not merely about how to program in Lisp—it is instead about “three foci of phenomena: the human mind, collections of computer programs, and the computer.” Later, they elaborate, describing their conviction that programming shouldn’t be considered a discipline of computer science but instead should be considered a new notation for “procedural epistemology.” Programs are a new way of structuring thought that only incidentally get fed into computers. The first chapter of the book gives a brief tour of Lisp, but most of the book after that point is about much more abstract concepts. There is a discussion of different programming paradigms, a discussion of the nature of “time” and “identity” in object-oriented systems, and at one point a discussion of how synchronization problems may arise because of fundamental constraints on communication that play a role akin to the fixed speed of light in the theory of relativity. It’s heady stuff.

|

||||

|

||||

All this isn’t to say that the book is bad. It’s a wonderful book. It discusses important programming concepts at a higher level than anything else I have read, concepts that I had long wondered about but didn’t quite have the language to describe. It’s impressive that an introductory programming textbook can move so quickly to describing the fundamental shortfalls of object-oriented programming and the benefits of functional languages that minimize mutable state. It’s mind-blowing that this then turns into a discussion of how a stream paradigm, perhaps something like today’s [RxJS][18], can give you the best of both worlds. SICP distills the essence of high-level program design in a way reminiscent of McCarthy’s original Lisp paper. The first thing you want to do after reading it is get your programmer friends to read it; if they look it up, see the cover, but then don’t read it, all they take away is that some mysterious, fundamental “eval/apply” thing gives magicians special powers over tables with animal feet. I would be deeply impressed in their shoes too.

|

||||

|

||||

But maybe SICP’s most important contribution was to elevate Lisp from curious oddity to pedagogical must-have. Well before SICP, people told each other to learn Lisp as a way of getting better at programming. The 1979 Lisp issue of Byte Magazine is testament to that fact. The same editor that raved about MIT’s new Lisp machines also explained that the language was worth learning because it “represents a different point of view from which to analyze problems.” But SICP presented Lisp as more than just a foil for other languages; SICP used Lisp as an introductory language, implicitly making the argument that Lisp is the best language in which to grasp the fundamentals of computer programming. When programmers today tell each other to try Lisp before they die, they arguably do so in large part because of SICP. After all, the language [Brainfuck][19] presumably offers “a different point of view from which to analyze problems.” But people learn Lisp instead because they know that, for twenty years or so, the Lisp point of view was thought to be so useful that MIT taught Lisp to undergraduates before anything else.

|

||||

|

||||

### Lisp Comes Back

|

||||

|

||||

The same year that SICP was released, Bjarne Stroustrup published the first edition of The C++ Programming Language, which brought object-oriented programming to the masses. A few years later, the market for Lisp machines collapsed and the AI winter began. For the next decade and change, C++ and then Java would be the languages of the future and Lisp would be left out in the cold.

|

||||

|

||||

It is of course impossible to pinpoint when people started getting excited about Lisp again. But that may have happened after Paul Graham, Y Combinator co-founder and Hacker News creator, published a series of influential essays pushing Lisp as the best language for startups. In his essay [“Beating the Averages,”][20] for example, Graham argued that Lisp macros simply made Lisp more powerful than other languages. He claimed that using Lisp at his own startup, Viaweb, helped him develop features faster than his competitors were able to. [Some programmers at least][21] were persuaded. But the vast majority of programmers did not switch to Lisp.

|

||||

|

||||

What happened instead is that more and more Lisp-y features have been incorporated into everyone’s favorite programming languages. Python got list comprehensions. C# got LINQ. Ruby got… well, Ruby [is a Lisp][22]. As Graham noted even back in 2001, “the default language, embodied in a succession of popular languages, has gradually evolved toward Lisp.” Though other languages are gradually becoming like Lisp, Lisp itself somehow manages to retain its special reputation as that mysterious language that few people understand but everybody should learn. In 1980, on the occasion of Lisp’s 20th anniversary, McCarthy wrote that Lisp had survived as long as it had because it occupied “some kind of approximate local optimum in the space of programming languages.” That understates Lisp’s real influence. Lisp hasn’t survived for over half a century because programmers have begrudgingly conceded that it is the best tool for the job decade after decade; in fact, it has survived even though most programmers do not use it at all. Thanks to its origins and use in artificial intelligence research and perhaps also the legacy of SICP, Lisp continues to fascinate people. Until we can imagine God creating the world with some newer language, Lisp isn’t going anywhere.

|

||||

|

||||

If you enjoyed this post, more like it come out every two weeks! Follow [@TwoBitHistory][23] on Twitter or subscribe to the [RSS feed][24] to make sure you know when a new post is out.

|

||||

|

||||

Previously on TwoBitHistory…

|

||||

|

||||

> This week's post: A look at Chaosnet, the network that gave us the "CH" DNS class.<https://t.co/dC7xqPYzi5>

|

||||

>

|

||||

> — TwoBitHistory (@TwoBitHistory) [September 30, 2018][25]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://twobithistory.org/2018/10/14/lisp.html

|

||||

|

||||

Author: [Two-Bit History][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

[a]: https://twobithistory.org

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://xkcd.com/224/

|

||||

[2]: https://xkcd.com/297/

|

||||

[3]: https://www.gnu.org/fun/jokes/eternal-flame.en.html

|

||||

[4]: https://www.reddit.com/r/ProgrammerHumor/comments/5c14o6/xkcd_lisp/d9szjnc/

|

||||

[5]: https://twobithistory.org/images/byte_lisp.jpg

|

||||

[6]: http://languagelog.ldc.upenn.edu/myl/llog/jmc.pdf

|

||||

[7]: https://en.wikipedia.org/wiki/Jargon_File

|

||||

[8]: https://hci.stanford.edu/winograd/shrdlu/

|

||||

[9]: https://en.wikipedia.org/wiki/Macsyma

|

||||

[10]: https://en.wikipedia.org/wiki/ACL2

|

||||

[11]: https://twobithistory.org/2018/09/30/chaosnet.html

|

||||

[12]: https://youtu.be/gV5obrYaogU?t=201

|

||||

[13]: https://en.wikipedia.org/wiki/Flavors_(programming_language)

|

||||

[14]: https://twobithistory.org/images/symbolics.jpg

|

||||

[15]: http://www.softwarepreservation.org/projects/LISP/

|

||||

[16]: https://knowyourmeme.com/forums/meme-research/topics/47038-structure-and-interpretation-of-computer-programs-hugeass-image-dump-for-evidence

|

||||

[17]: https://twobithistory.org/images/sicp.jpg

|

||||

[18]: https://rxjs-dev.firebaseapp.com/

|

||||

[19]: https://en.wikipedia.org/wiki/Brainfuck

|

||||

[20]: http://www.paulgraham.com/avg.html

|

||||

[21]: https://web.archive.org/web/20061004035628/http://wiki.alu.org/Chris-Perkins

|

||||

[22]: http://www.randomhacks.net/2005/12/03/why-ruby-is-an-acceptable-lisp/

|

||||

[23]: https://twitter.com/TwoBitHistory

|

||||

[24]: https://twobithistory.org/feed.xml

|

||||

[25]: https://twitter.com/TwoBitHistory/status/1046437600658169856?ref_src=twsrc%5Etfw

|

||||

@ -1,3 +1,5 @@

|

||||

translating by belitex

|

||||

|

||||

What is an SRE and how does it relate to DevOps?

|

||||

======

|

||||

The SRE role is common in large enterprises, but smaller businesses need it, too.

|

||||

|

||||

@ -0,0 +1,213 @@

|

||||

What MMORPGs can teach us about leveling up a heroic developer team

|

||||

======

|

||||

The team-building skills that make winning gaming guilds also produce successful work teams.

|

||||

|

||||

|

||||

For the better part of a decade, I have been leading guilds in massively multiplayer role-playing games (MMORPGs). Currently, I lead a guild in [Guild Wars 2][1], and before that, I led progression raid teams in [World of Warcraft][2], while also maintaining a career as a software engineer. As I made the transition into software development, it became clear that the skills I gained in building successful raid groups translated well to building successful tech teams.

|

||||

|

||||

|

||||

![Guild Wars 2 guild members after an event.][4]

|

||||

|

||||

Guild Wars 2 guild members after an event.

|

||||

|

||||

### Identify your problem

|

||||

|

||||

The first step to building a successful team, whether in software or MMORPGs, is to recognize your problem. In video games, it's obvious: the monster. If you don't take it down, it will take you down. In tech, it's a product or service you want to deliver to solve your users' problems. In both situations, this is a problem you are unlikely to solve by yourself. You need a team.

|

||||

|

||||

In MMORPGs, the goal is to create a "progression" raid team: one that improves over time, tackles objectives faster and more smoothly together, and can therefore push its goals further and further. You will not reach the second objective in a raid without tackling the first.

|

||||

|

||||

In this article, I'll share how you can build, improve, and maintain your own progression software and/or systems teams. I'll cover assembling your team, leading the team, optimizing for success, continuously improving, and keeping morale high.

|

||||

|

||||

### Assemble your team

|

||||

|

||||

In MMORPGs, progression teams commonly have different levels of commitment, summed up into three tiers: hardcore, semi-hardcore, and casuals. These commitment levels translate to what players value in their raiding experience.

|

||||

|

||||

You may have heard of the concept of "cultural fit" vs. "value fit." One of the most important things in assembling your team is making sure everyone aligns with your concrete values and goals. Creating teams based on cultural fit is problematic because culture is hard to define. Matching new recruits based on their culture will also result in homogeneous groups.

|

||||

|

||||

Hardcore teams value dedication, mastery, and achievements. Semi-hardcore teams value efficiency, balance, and empathy. Casual teams value fun above all else. If you put a casual player in a hardcore raid group, the casual player is probably going to tell the hardcore players they're taking things too seriously, while the hardcore players will tell the casual player they aren't taking things seriously enough (then remove them promptly).

|

||||

|

||||

#### Values-driven team building

|

||||

|

||||

A mismatch in values results in a negative experience for everyone. You need to build your team on a shared foundation of what is important, and each member should align with your team's values and goals. What is important to your team? What do you want your team's driving values to be? If you cannot easily answer those questions, take a moment right away and define them with your team.

|

||||

|

||||

The values you define should influence which new members you recruit. In building raid teams, each potential member should be assessed not only on their skills but also on their values. One of my previous employers had a "value fit" interview that a person had to pass after their skills assessment to be considered for hiring. It doesn't matter if you're a "ninja" or a "rockstar" if you don't align with the company's values.

|

||||

|

||||

#### Diversify your team

|

||||

|

||||

When looking for new positions, I want a team that has a strong emphasis on delivering a quality product while understanding that work/life balance should be weighed more heavily on the life side ("life/work balance"). I steer away from companies with meager, two-week PTO policies, commitments over 40 hours, or rigid schedules. When interviews with companies show less emphasis on technical collaboration, I know there is a values mismatch.

|

||||

|

||||

While values are important to share, the same skills, experience, and roles are not. Ten tanks might be able to get a boss down, eventually, but it is certainly more effective to have diversity. You need people who are skilled and trained in their specific roles to work together, with everyone focusing on what they do best.

|

||||

|

||||

In MMORPGs, there are always considerably more people who want to play damage roles because they get all the glory. However, you're not going to down the boss without at least a tank and a healer. The tank and the healer mitigate the damage so that the damage classes can do what they do. We need to be respectful of the roles we each play and realize we're much better when we work together. There shouldn't be developers vs. operators when working together helps us deliver more effectively.

|

||||

|

||||

Diversity in roles is important, but so is diversity within roles. If you take 10 necromancers to a raid, you'll quickly find there are problems you can't solve with your current ability pool. You need to throw in some elementalists, thieves, and mesmers, too. It's the same with developers: if everyone comes from the same background and has the same abilities and experience, you're going to face unnecessary challenges.

|

||||

|

||||

It's better to take the inexperienced person who is willing to learn than the experienced person unwilling to take criticism. If a developer doesn't have hundreds of open source commits, it doesn't necessarily mean they are less skilled. Everyone has to learn somewhere. Senior developers and operators don't appear out of nowhere. Teams often only look for "experienced" people, spending more time with less manpower than if they had just trained an inexperienced recruit.

|

||||

|

||||


|

||||

|

||||