mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

1192c6a01c

@ -0,0 +1,136 @@

|

||||

在 Linux 中用 nmcli 命令绑定多块网卡

|

||||

================================================================================

|

||||

今天,我们来学习一下在 CentOS 7.x 中如何用 nmcli(Network Manager Command Line Interface:网络管理命令行接口)进行网卡绑定。

|

||||

|

||||

网卡(接口)绑定是将多块 **网卡** 逻辑地连接到一起从而允许故障转移或者提高吞吐率的方法。提高服务器网络可用性的一个方式是使用多个网卡。Linux 绑定驱动程序提供了一种将多个网卡聚合到一个逻辑的绑定接口的方法。这是个新的实现绑定的方法,并不影响 linux 内核中旧绑定驱动。

|

||||

|

||||

**网卡绑定为我们提供了两个主要的好处:**

|

||||

|

||||

1. **高带宽**

|

||||

1. **冗余/弹性**

|

||||

|

||||

现在让我们在 CentOS 7 上配置网卡绑定吧。我们需要决定选取哪些接口配置成一个组接口(Team interface)。

|

||||

|

||||

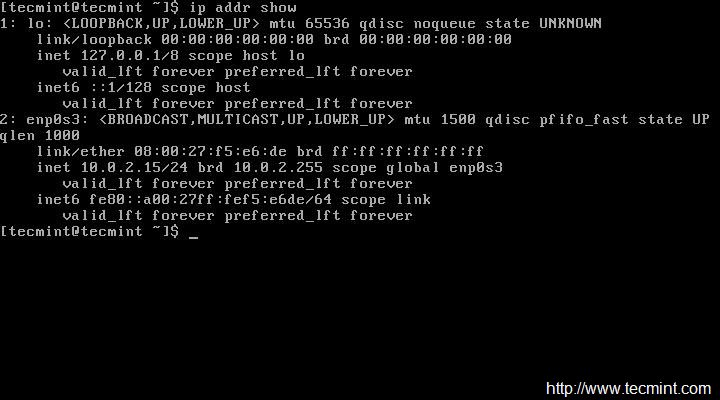

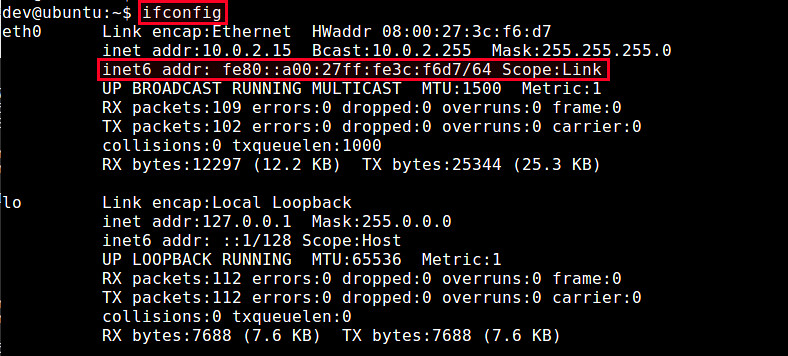

运行 **ip link** 命令查看系统中可用的接口。

|

||||

|

||||

$ ip link

|

||||

|

||||

|

||||

|

||||

这里我们使用 **eno16777736** 和 **eno33554960** 网卡在 “主动备份” 模式下创建一个组接口。(译者注:关于不同模式可以参考:<a href="http://support.huawei.com/ecommunity/bbs/10155553.html">多网卡的7种bond模式原理</a>)

|

||||

|

||||

按照下面的语法,用 **nmcli** 命令为网络组接口创建一个连接。

|

||||

|

||||

# nmcli con add type team con-name CNAME ifname INAME [config JSON]

|

||||

|

||||

**CNAME** 指代连接的名称,**INAME** 是接口名称,**JSON** (JavaScript Object Notation) 指定所使用的处理器(runner)。**JSON** 语法格式如下:

|

||||

|

||||

'{"runner":{"name":"METHOD"}}'

|

||||

|

||||

**METHOD** 是以下的其中一个:**broadcast、activebackup、roundrobin、loadbalance** 或者 **lacp**。

|

||||

|

||||

### 1. 创建组接口 ###

|

||||

|

||||

现在让我们来创建组接口。这是我们创建组接口所使用的命令。

|

||||

|

||||

# nmcli con add type team con-name team0 ifname team0 config '{"runner":{"name":"activebackup"}}'

|

||||

|

||||

|

||||

|

||||

运行 **# nmcli con show** 命令验证组接口配置。

|

||||

|

||||

# nmcli con show

|

||||

|

||||

|

||||

|

||||

### 2. 添加从设备 ###

|

||||

|

||||

现在让我们添加从设备到主设备 team0。这是添加从设备的语法:

|

||||

|

||||

# nmcli con add type team-slave con-name CNAME ifname INAME master TEAM

|

||||

|

||||

在这里我们添加 **eno16777736** 和 **eno33554960** 作为 **team0** 接口的从设备。

|

||||

|

||||

# nmcli con add type team-slave con-name team0-port1 ifname eno16777736 master team0

|

||||

|

||||

# nmcli con add type team-slave con-name team0-port2 ifname eno33554960 master team0

|

||||

|

||||

|

||||

|

||||

再次用命令 **#nmcli con show** 验证连接配置。现在我们可以看到从设备配置信息。

|

||||

|

||||

#nmcli con show

|

||||

|

||||

|

||||

|

||||

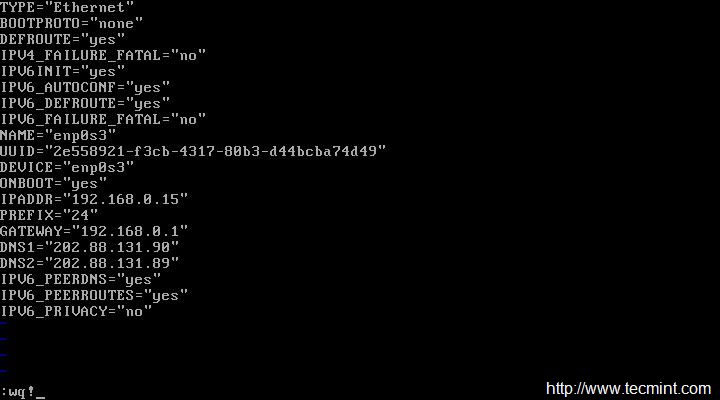

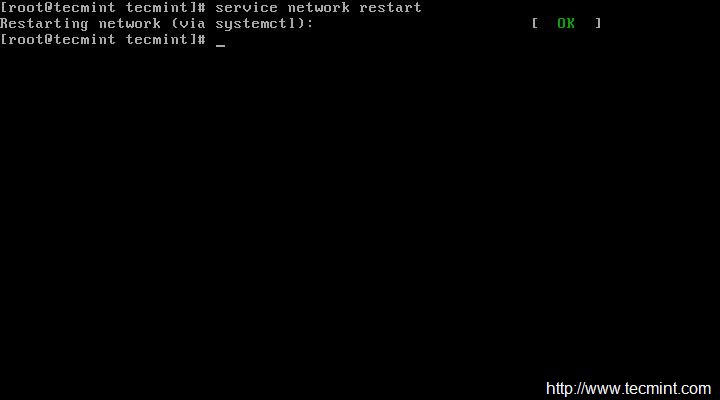

### 3. 分配 IP 地址 ###

|

||||

|

||||

上面的命令会在 **/etc/sysconfig/network-scripts/** 目录下创建需要的配置文件。

|

||||

|

||||

现在让我们为 team0 接口分配一个 IP 地址并启用这个连接。这是进行 IP 分配的命令。

|

||||

|

||||

# nmcli con mod team0 ipv4.addresses "192.168.1.24/24 192.168.1.1"

|

||||

# nmcli con mod team0 ipv4.method manual

|

||||

# nmcli con up team0

|

||||

|

||||

|

||||

|

||||

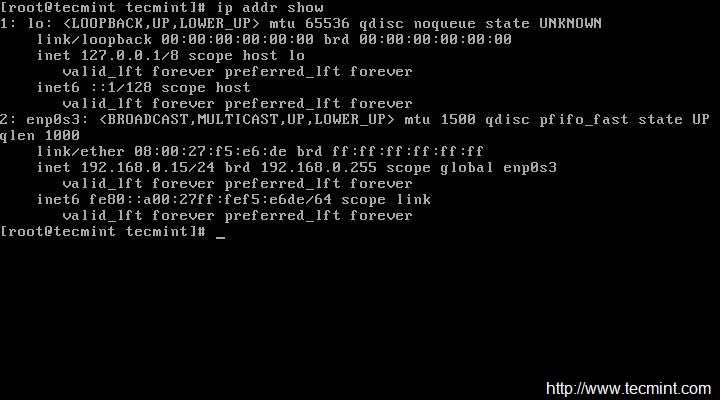

### 4. 验证绑定 ###

|

||||

|

||||

用 **#ip add show team0** 命令验证 IP 地址信息。

|

||||

|

||||

#ip add show team0

|

||||

|

||||

|

||||

|

||||

现在用 **teamdctl** 命令检查 **主动备份** 配置功能。

|

||||

|

||||

# teamdctl team0 state

|

||||

|

||||

|

||||

|

||||

现在让我们把激活的端口断开连接并再次检查状态来确认主动备份配置是否像希望的那样工作。

|

||||

|

||||

# nmcli dev dis eno33554960

|

||||

|

||||

|

||||

|

||||

断开激活端口后再次用命令 **#teamdctl team0 state** 检查状态。

|

||||

|

||||

# teamdctl team0 state

|

||||

|

||||

|

||||

|

||||

是的,它运行良好!!我们会使用下面的命令连接回到 team0 的断开的连接。

|

||||

|

||||

#nmcli dev con eno33554960

|

||||

|

||||

|

||||

|

||||

我们还有一个 **teamnl** 命令可以显示 **teamnl** 命令的一些选项。

|

||||

|

||||

用下面的命令检查在 team0 运行的端口。

|

||||

|

||||

# teamnl team0 ports

|

||||

|

||||

|

||||

|

||||

显示 **team0** 当前活动的端口。

|

||||

|

||||

# teamnl team0 getoption activeport

|

||||

|

||||

|

||||

|

||||

好了,我们已经成功地配置了网卡绑定 :-) ,如果有任何反馈,请告诉我们。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/linux-command/interface-nics-bonding-linux/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/arunp/

|

||||

@ -0,0 +1,236 @@

|

||||

搭建一个私有的Docker registry

|

||||

================================================================================

|

||||

|

||||

|

||||

[TL;DR] 这是系列的第二篇文章,这系列讲述了我的公司如何把基础服务从PaaS迁移到Docker上

|

||||

|

||||

- [第一篇文章][1]: 我谈到了接触Docker之前的经历;

|

||||

- [第三篇文章][2]: 我展示如何使创建镜像的过程自动化以及如何用Docker部署一个Rails应用。

|

||||

|

||||

----------

|

||||

|

||||

为什么需要搭建一个私有的registry呢?嗯,对于新手来说,Docker Hub(一个Docker公共仓库)只允许你拥有一个免费的私有版本库(repo)。其他的公司也开始提供类似服务,但是价格可不便宜。另外,如果你需要用Docker部署一个用于生产环境的应用,恐怕你不希望将这些镜像放在公开的Docker Hub上吧!

|

||||

|

||||

这篇文章提供了一个非常务实的方法来处理搭建私有Docker registry时出现的各种错综复杂的情况。我们将会使用一个运行于DigitalOcean(之后简称为DO)的非常小巧的512MB VPS 实例。并且我会假定你已经了解了Docker的基本概念,因为我必须集中精力在复杂的事情上!

|

||||

|

||||

###本地搭建###

|

||||

|

||||

首先你需要安装**boot2docker**以及docker CLI。如果你已经搭建好了基本的Docker环境,你可以直接跳过这一步。

|

||||

|

||||

从终端运行以下命令(我假设你使用OS X,使用 HomeBrew 来安装相关软件,你可以根据你的环境使用不同的包管理软件来安装):

|

||||

|

||||

brew install boot2docker docker

|

||||

|

||||

如果一切顺利(想要了解搭建docker环境的完整指南,请参阅 [http://boot2docker.io/][10]) ,你现在就能够通过如下命令启动一个 Docker 运行于其中的虚拟机:

|

||||

|

||||

boot2docker up

|

||||

|

||||

按照屏幕显示的说明,复制粘贴book2docker在终端输出的命令。如果你现在运行`docker ps`命令,终端将有以下显示。

|

||||

|

||||

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

|

||||

|

||||

好了,Docker已经准备就绪,这就够了,我们回过头去搭建registry。

|

||||

|

||||

###创建服务器###

|

||||

|

||||

登录进你的DO账号,选择一个预安装了Docker的镜像文件,创建一个新的Drople。(本文写成时选择的是 Image > Applications > Docker 1.4.1 on 14.04)

|

||||

|

||||

|

||||

|

||||

你将会以邮件的方式收到一个根用户凭证。登录进去,然后运行`docker ps`命令来查看系统状态。

|

||||

|

||||

### 搭建AWS S3 ###

|

||||

|

||||

我们现在将使用Amazo Simple Storage Service(S3)作为我们registry/repository的存储层。我们将需要创建一个桶(bucket)以及用户凭证(user credentials)来允许我们的docker容器访问它。

|

||||

|

||||

登录到我们的AWS账号(如果没有,就申请一个[http://aws.amazon.com/][5]),在控制台选择S3(Simpole Storage Service)。

|

||||

|

||||

|

||||

|

||||

点击 **Create Bucket**,为你的桶输入一个名字(把它记下来,我们一会需要用到它),然后点击**Create**。

|

||||

|

||||

|

||||

|

||||

OK!我们已经搭建好存储部分了。

|

||||

|

||||

### 设置AWS访问凭证###

|

||||

|

||||

我们现在将要创建一个新的用户。退回到AWS控制台然后选择IAM(Identity & Access Management)。

|

||||

|

||||

|

||||

|

||||

在dashboard的左边,点击Users。然后选择 **Create New Users**。

|

||||

|

||||

如图所示:

|

||||

|

||||

|

||||

|

||||

输入一个用户名(例如 docker-registry)然后点击Create。写下(或者下载csv文件)你的Access Key以及Secret Access Key。回到你的用户列表然后选择你刚刚创建的用户。

|

||||

|

||||

在Permission section下面,点击Attach User Policy。之后在下一屏,选择Custom Policy。

|

||||

|

||||

|

||||

|

||||

custom policy的内容如下:

|

||||

|

||||

{

|

||||

"Version": "2012-10-17",

|

||||

"Statement": [

|

||||

{

|

||||

"Sid": "SomeStatement",

|

||||

"Effect": "Allow",

|

||||

"Action": [

|

||||

"s3:*"

|

||||

],

|

||||

"Resource": [

|

||||

"arn:aws:s3:::docker-registry-bucket-name/*",

|

||||

"arn:aws:s3:::docker-registry-bucket-name"

|

||||

]

|

||||

}

|

||||

]

|

||||

}

|

||||

|

||||

这个配置将允许用户(也就是regitstry)来对桶上的内容进行操作(读/写)(确保使用你之前创建AWS S3时使用的桶名)。总结一下:当你想把你的Docker镜像从你的本机推送到仓库中时,服务器就会将他们上传到S3。

|

||||

|

||||

### 安装registry ###

|

||||

|

||||

现在回过头来看我们的DO服务器,SSH登录其上。我们将要[使用][11]一个[官方Docker registry镜像][6]。

|

||||

|

||||

输入如下命令,开启registry。

|

||||

|

||||

docker run \

|

||||

-e SETTINGS_FLAVOR=s3 \

|

||||

-e AWS_BUCKET=bucket-name \

|

||||

-e STORAGE_PATH=/registry \

|

||||

-e AWS_KEY=your_aws_key \

|

||||

-e AWS_SECRET=your_aws_secret \

|

||||

-e SEARCH_BACKEND=sqlalchemy \

|

||||

-p 5000:5000 \

|

||||

--name registry \

|

||||

-d \

|

||||

registry

|

||||

|

||||

Docker将会从Docker Hub上拉取所需的文件系统分层(fs layers)并启动守护容器(daemonised container)。

|

||||

|

||||

### 测试registry ###

|

||||

|

||||

如果上述操作奏效,你可以通过ping命令,或者查找它的内容来测试registry(虽然这个时候容器还是空的)。

|

||||

|

||||

我们的registry非常基础,而且没有提供任何“验明正身”的方式。因为添加身份验证可不是一件轻松事(至少我认为没有一种部署方法是简单的,像是为了证明你努力过似的),我觉得“查询/拉取/推送”仓库内容的最简单方法就是通过SSH通道的未加密连接(通过HTTP)。

|

||||

|

||||

打开SSH通道的操作非常简单:

|

||||

|

||||

ssh -N -L 5000:localhost:5000 root@your_registry.com

|

||||

|

||||

这条命令建立了一条从registry服务器(前面执行`docker run`命令的时候我们见过它)的5000号端口到本机的5000号端口之间的 SSH 管道连接。

|

||||

|

||||

如果你现在用浏览器访问 [http://localhost:5000/v1/_ping][7],将会看到下面这个非常简短的回复。

|

||||

|

||||

{}

|

||||

|

||||

这个意味着registry工作正常。你还可以通过登录 [http://localhost:5000/v1/search][8] 来查看registry内容,内容相似:

|

||||

|

||||

{

|

||||

"num_results": 2,

|

||||

"query": "",

|

||||

"results": [

|

||||

{

|

||||

"description": "",

|

||||

"name": "username/first-repo"

|

||||

},

|

||||

{

|

||||

"description": "",

|

||||

"name": "username/second-repo"

|

||||

}

|

||||

]

|

||||

}

|

||||

|

||||

### 创建一个镜像 ###

|

||||

|

||||

我们现在创建一个非常简单的Docker镜像,来检验我们新弄好的registry。在我们的本机上,用如下内容创建一个Dockerfile(这里只有一点代码,在下一篇文章里我将会展示给你如何将一个Rails应用绑定进Docker容器中。):

|

||||

|

||||

# ruby 2.2.0 的基础镜像

|

||||

FROM ruby:2.2.0

|

||||

|

||||

MAINTAINER Michelangelo Chasseur <michelangelo.chasseur@touchwa.re>

|

||||

|

||||

并创建它:

|

||||

|

||||

docker build -t localhost:5000/username/repo-name .

|

||||

|

||||

`localhost:5000`这个部分非常重要:Docker镜像名的最前面一个部分将告知`docker push`命令我们将要把我们的镜像推送到哪里。在我们这个例子当中,因为我们要通过SSH管道连接远程的私有registry,`localhost:5000`精确地指向了我们的registry。

|

||||

|

||||

如果一切顺利,当命令执行完成返回后,你可以输入`docker images`命令来列出新近创建的镜像。执行它看看会出现什么现象?

|

||||

|

||||

### 推送到仓库 ###

|

||||

|

||||

接下来是更好玩的部分。实现我所描述的东西着实花了我一点时间,所以如果你第一次读的话就耐心一点吧,跟着我一起操作。我知道接下来的东西会非常复杂(如果你不自动化这个过程就一定会这样),但是我保证到最后你一定都能明白。在下一篇文章里我将会使用到一大波shell脚本和Rake任务,通过它们实现自动化并且用简单的命令实现部署Rails应用。

|

||||

|

||||

你在终端上运行的docker命令实际上都是使用boot2docker虚拟机来运行容器及各种东西。所以当你执行像`docker push some_repo`这样的命令时,是boot2docker虚拟机在与registry交互,而不是我们自己的机器。

|

||||

|

||||

接下来是一个非常重要的点:为了将Docker镜像推送到远端的私有仓库,SSH管道需要在boot2docker虚拟机上配置好,而不是在你的本地机器上配置。

|

||||

|

||||

有许多种方法实现它。我给你展示最简短的一种(可能不是最容易理解的,但是能够帮助你实现自动化)

|

||||

|

||||

在这之前,我们需要对 SSH 做最后一点工作。

|

||||

|

||||

### 设置 SSH ###

|

||||

|

||||

让我们把boot2docker 的 SSH key添加到远端服务器的“已知主机”里面。我们可以使用ssh-copy-id工具完成,通过下面的命令就可以安装上它了:

|

||||

|

||||

brew install ssh-copy-id

|

||||

|

||||

然后运行:

|

||||

|

||||

ssh-copy-id -i /Users/username/.ssh/id_boot2docker root@your-registry.com

|

||||

|

||||

用你ssh key的真实路径代替`/Users/username/.ssh/id_boot2docker`。

|

||||

|

||||

这样做能够让我们免密码登录SSH。

|

||||

|

||||

现在我们来测试以下:

|

||||

|

||||

boot2docker ssh "ssh -o 'StrictHostKeyChecking no' -i /Users/michelangelo/.ssh/id_boot2docker -N -L 5000:localhost:5000 root@registry.touchwa.re &" &

|

||||

|

||||

分开阐述:

|

||||

|

||||

- `boot2docker ssh`允许你以参数的形式传递给boot2docker虚拟机一条执行的命令;

|

||||

- 最后面那个`&`表明这条命令将在后台执行;

|

||||

- `ssh -o 'StrictHostKeyChecking no' -i /Users/michelangelo/.ssh/id_boot2docker -N -L 5000:localhost:5000 root@registry.touchwa.re &`是boot2docker虚拟机实际运行的命令;

|

||||

- `-o 'StrictHostKeyChecking no'`——不提示安全问题;

|

||||

- `-i /Users/michelangelo/.ssh/id_boot2docker`指出虚拟机使用哪个SSH key来进行身份验证。(注意这里的key应该是你前面添加到远程仓库的那个)

|

||||

- 最后我们将打开一条端口5000映射到localhost:5000的SSH通道。

|

||||

|

||||

### 从其他服务器上拉取 ###

|

||||

|

||||

你现在将可以通过下面的简单命令将你的镜像推送到远端仓库:

|

||||

|

||||

docker push localhost:5000/username/repo_name

|

||||

|

||||

在下一篇[文章][9]中,我们将会了解到如何自动化处理这些事务,并且真正地容器化一个Rails应用。请继续收听!

|

||||

|

||||

如有错误,请不吝指出。祝你Docker之路顺利!

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://cocoahunter.com/2015/01/23/docker-2/

|

||||

|

||||

作者:[Michelangelo Chasseur][a]

|

||||

译者:[DongShuaike](https://github.com/DongShuaike)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://cocoahunter.com/author/michelangelo/

|

||||

[1]:https://linux.cn/article-5339-1.html

|

||||

[2]:http://cocoahunter.com/2015/01/23/docker-3/

|

||||

[3]:http://cocoahunter.com/2015/01/23/docker-2/#fn:1

|

||||

[4]:http://cocoahunter.com/2015/01/23/docker-2/#fn:2

|

||||

[5]:http://aws.amazon.com/

|

||||

[6]:https://registry.hub.docker.com/_/registry/

|

||||

[7]:http://localhost:5000/v1/_ping

|

||||

[8]:http://localhost:5000/v1/search

|

||||

[9]:http://cocoahunter.com/2015/01/23/docker-3/

|

||||

[10]:http://boot2docker.io/

|

||||

[11]:https://github.com/docker/docker-registry/

|

||||

@ -53,11 +53,11 @@ Budgie是为Linux发行版定制的旗舰桌面,也是一个定制工程。为

|

||||

|

||||

|

||||

|

||||

**注意点**

|

||||

**注意**

|

||||

|

||||

这是一个活跃的开发版本,一些主要的特点可能还不是特别的完善,如:网络管理器,为数不多的控制组件,无通知系统斌并且无法将app锁定到任务栏。

|

||||

这是一个活跃的开发版本,一些主要的功能可能还不是特别的完善,如:没有网络管理器,没有音量控制组件(可以使用键盘控制),无通知系统并且无法将app锁定到任务栏。

|

||||

|

||||

作为工作区你能够禁用滚动栏,通过设置一个默认的主题并且通过下面的命令退出当前的会话

|

||||

有一个临时解决方案可以禁用叠加滚动栏:设置另外一个默认主题,然后从终端退出当前会话:

|

||||

|

||||

$ gnome-session-quit

|

||||

|

||||

@ -65,7 +65,7 @@ Budgie是为Linux发行版定制的旗舰桌面,也是一个定制工程。为

|

||||

|

||||

### 登录Budgie会话 ###

|

||||

|

||||

安装完成之后,我们能在登录时选择机进入budgie桌面。

|

||||

安装完成之后,我们能在登录时选择进入budgie桌面。

|

||||

|

||||

|

||||

|

||||

@ -79,8 +79,7 @@ Budgie是为Linux发行版定制的旗舰桌面,也是一个定制工程。为

|

||||

|

||||

### 结论 ###

|

||||

|

||||

Hurray! We have successfully installed our Lightweight Budgie Desktop Environment in our Ubuntu 14.04 LTS "Trusty" box. As we know, Budgie Desktop is still underdevelopment which makes it a lot of stuffs missing. Though it’s based on Gnome’s GTK3, it’s not a fork. The desktop is written completely from scratch, and the design is elegant and well thought out. If you have any questions, comments, feedback please do write on the comment box below and let us know what stuffs needs to be added or improved. Thank You! Enjoy Budgie Desktop 0.8 :-)

|

||||

Budgie桌面当前正在开发过程中,因此有目前有很多功能的缺失。虽然它是基于Gnome,但不是完全的复制。Budgie是完全从零开始实现,它的设计是优雅的并且正在不断的完善。

|

||||

嗨,现在我们已经成功的在 Ubuntu 14.04 LTS 上安装了轻量级 Budgie 桌面环境。Budgie桌面当前正在开发过程中,因此有目前有很多功能的缺失。虽然它是基于Gnome 的 GTK3,但不是完全的复制。Budgie是完全从零开始实现,它的设计是优雅的并且正在不断的完善。如果你有任何问题、评论,请在下面的评论框发表。愿你喜欢 Budgie 桌面 0.8 。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -88,7 +87,7 @@ via: http://linoxide.com/ubuntu-how-to/install-lightweight-budgie-v8-desktop-ubu

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[johnhoow](https://github.com/johnhoow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -54,7 +54,7 @@ via: http://www.unixmen.com/install-mate-desktop-freebsd-10-1/

|

||||

|

||||

作者:[M.el Khamlichi][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,14 @@

|

||||

如何记住并在下一次登录时还原正在运行的应用

|

||||

如何在 Ubuntu 中再次登录时还原上次运行的应用

|

||||

================================================================================

|

||||

在你的 Ubuntu 里,你正运行着某些应用,但并不想停掉它们的进程,只想管理一下窗口,并打开那些工作需要的应用。接着,某些其他的事需要你转移注意力或你的机器电量低使得你必须马上关闭电脑。(幸运的是,)你可以让 Ubuntu 记住所有你正运行的应用并在你下一次登录时还原它们。

|

||||

在你的 Ubuntu 里,如果你需要处理一些工作,你并不需要关闭正运行着的那些应用,只需要管理一下窗口,并打开那些工作需要的应用就行。然而,如果你需要离开处理些别的事情或你的机器电量低使得你必须马上关闭电脑,这些程序可能就需要关闭终止了。不过幸运的是,你可以让 Ubuntu 记住所有你正运行的应用并在你下一次登录时还原它们。

|

||||

|

||||

现在,为了让我们的 Ubuntu 记住当前会话中正运行的应用并在我们下一次登录时还原它们,我们将会使用到 `dconf-editor`。这个工具代替了前一个 Ubuntu 版本里安装的 `gconf-editor`,但默认情况下并没有在现在这个 Ubuntu 版本(注:这里指的是 Ubuntu 14.04 LTS) 里安装。为了安装 `dconf-editor`, 你需要运行 `sudo apt-get install dconf-editor`命令:

|

||||

###自动保存会话

|

||||

|

||||

现在,为了让我们的 Ubuntu 记住当前会话中正运行的应用并在我们下一次登录时还原它们,我们将会使用到 `dconf-editor`。这个工具代替了前一个 Ubuntu 版本里安装的 `gconf-editor`,但默认情况下现在这个 Ubuntu 版本(注:这里指的是 Ubuntu 14.04 LTS) 并没有安装。为了安装 `dconf-editor`, 你需要运行 `sudo apt-get install dconf-editor`命令:

|

||||

|

||||

$ sudo apt-get install dconf-tools

|

||||

|

||||

一旦 `dconf-editor` 安装完毕,你就可以从应用菜单(注:这里指的是 Unity Dash)里打开它或者你可以通过直接在终端里或使用 `alt+f2` 运行下面的命令来启动它:

|

||||

一旦 `dconf-editor` 安装完毕,你就可以从应用菜单(注:这里指的是 Unity Dash)里打开它,或者你可以通过直接在终端里运行,或使用 `alt+f2` 运行下面的命令来启动它:

|

||||

|

||||

$ dconf-editor

|

||||

|

||||

@ -22,7 +24,7 @@

|

||||

|

||||

|

||||

|

||||

在你检查或对刚才的选项打钩之后,点击默认情况下位于窗口左上角的关闭按钮(X)来关闭 “Dconf Editor”。

|

||||

在你确认对刚才的选项打钩之后,点击默认情况下位于窗口左上角的关闭按钮(X)来关闭 “Dconf Editor”。

|

||||

|

||||

|

||||

|

||||

@ -30,6 +32,10 @@

|

||||

|

||||

欢呼吧,我们已经成功地配置了我们的 Ubuntu 14.04 LTS "Trusty" 来自动记住我们上一次会话中正在运行的应用。

|

||||

|

||||

除了关机后恢复应用之外,还可以通过休眠来达成类似的功能。

|

||||

|

||||

###休眠功能

|

||||

|

||||

现在,在这个教程里,我们也将学会 **如何在 Ubuntu 14.04 LTS 里开启休眠功能** :

|

||||

|

||||

在开始之前,在键盘上按 `Ctrl+Alt+T` 来开启终端。在它开启以后,运行:

|

||||

@ -38,15 +44,15 @@

|

||||

|

||||

在你的电脑关闭后,再重新开启它。这时,你开启的应用被重新打开了吗?如果休眠功能没有发挥作用,请检查你的交换分区大小,它至少要和你可用 RAM 大小相当。

|

||||

|

||||

你可以在系统监视器里查看你的交换分区大小,而系统监视器可以通过在应用菜单或在终端里运行下面的命令来开启:

|

||||

你可以在系统监视器里查看你的交换分区大小,系统监视器可以通过在应用菜单或在终端里运行下面的命令来开启:

|

||||

|

||||

$ gnome-system-monitor

|

||||

|

||||

### 在系统托盘里启用休眠功能: ###

|

||||

#### 在系统托盘里启用休眠功能: ####

|

||||

|

||||

提示模块是通过使用 logind 而不是使用 upower 来更新的。默认情况下,在 upower 和 logind 中,休眠都被禁用了。

|

||||

系统托盘里面的会话指示器现在使用 logind 而不是 upower 了。默认情况下,在 upower 和 logind 中,休眠菜单都被禁用了。

|

||||

|

||||

为了开启休眠功能,依次运行下面的命令来编辑配置文件:

|

||||

为了开启它的休眠菜单,依次运行下面的命令来编辑配置文件:

|

||||

|

||||

sudo -i

|

||||

|

||||

@ -70,26 +76,27 @@

|

||||

|

||||

重启你的电脑就可以了。

|

||||

|

||||

### 当你盖上笔记本的后盖时,让它休眠: ###

|

||||

#### 当你盖上笔记本的后盖时,让它休眠: ####

|

||||

|

||||

1.通过下面的命令编辑文件 “/etc/systemd/logind.conf” :

|

||||

1. 通过下面的命令编辑文件 “/etc/systemd/logind.conf” :

|

||||

|

||||

$ sudo nano /etc/systemd/logind.conf

|

||||

|

||||

$ sudo nano /etc/systemd/logind.conf

|

||||

|

||||

2. 将 **#HandleLidSwitch=suspend** 这一行改为 **HandleLidSwitch=hibernate** 并保存文件;

|

||||

2. 将 **#HandleLidSwitch=suspend** (挂起)这一行改为 **HandleLidSwitch=hibernate** (休眠)并保存文件;

|

||||

|

||||

3. 运行下面的命令或重启你的电脑来应用更改:

|

||||

|

||||

$ sudo restart systemd-logind

|

||||

$ sudo restart systemd-logind

|

||||

|

||||

就是这样。 成功了吗?现在我们设置了 dconf 并开启了休眠功能 :) 这样,无论你是关机还是直接合上笔记本盖子,你的 Ubuntu 将能够完全记住你开启的应用和窗口了。

|

||||

|

||||

就是这样。享受吧!现在我们有了 dconf 并开启了休眠功能 :) 你的 Ubuntu 将能够完全记住你开启的应用和窗口了。

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/ubuntu-how-to/remember-running-applications-ubuntu/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Moving to Docker

|

||||

走向 Docker

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -8,11 +8,11 @@ Moving to Docker

|

||||

|

||||

上个月,我一直在折腾开发环境。这是我个人故事和经验,关于尝试用Docker简化Rails应用的部署过程。

|

||||

|

||||

当我在2012年创建我的公司 – [Touchware][1]时,我还是一个独立开发者。很多事情很小,不复杂,不他们需要很多维护,他们也不需要不部署到很多机器上。经过过去一年的发展,我们成长了很多(我们现在是是拥有10个人团队)而且我们的服务端的程序和API无论在范围和规模方面都有增长。

|

||||

当我在2012年创建我的公司 – [Touchware][1]时,我还是一个独立开发者。很多事情很小,不复杂,他们不需要很多维护,他们也不需要部署到很多机器上。经过过去一年的发展,我们成长了很多(我们现在是是拥有10个人的团队)而且我们的服务端的程序和API无论在范围和规模方面都有增长。

|

||||

|

||||

### 第1步 - Heroku ###

|

||||

|

||||

我们还是个小公司,我们需要让事情运行地尽可能平稳。当我们寻找可行的解决方案时,我们打算坚持用那些可以帮助我们减轻对硬件依赖负担的工具。由于我们主要开发Rails应用,而Heroku对RoR,常用的数据库和缓存(Postgres/Mongo/Redis等)有很好的支持,最明智的选择就是用[Heroku][2] 。我们就是这样做的。

|

||||

我们还是个小公司,我们需要让事情运行地尽可能平稳。当我们寻找可行的解决方案时,我们打算坚持用那些可以帮助我们减轻对硬件依赖负担的工具。由于我们主要开发Rails应用,而Heroku对RoR、常用的数据库和缓存(Postgres/Mongo/Redis等)有很好的支持,最明智的选择就是用[Heroku][2] 。我们就是这样做的。

|

||||

|

||||

Heroku有很好的技术支持和文档,使得部署非常轻松。唯一的问题是,当你处于起步阶段,你需要很多开销。这不是最好的选择,真的。

|

||||

|

||||

@ -20,18 +20,18 @@ Heroku有很好的技术支持和文档,使得部署非常轻松。唯一的

|

||||

|

||||

为了尝试并降低成本,我们决定试试Dokku。[Dokku][3],引用GitHub上的一句话

|

||||

|

||||

> Docker powered mini-Heroku in around 100 lines of Bash

|

||||

> Docker 驱动的 mini-Heroku,只用了一百来行的 bash 脚本

|

||||

|

||||

我们启用的[DigitalOcean][4]上的很多台机器,都预装了Dokku。Dokku非常像Heroku,但是当你有复杂的项目需要调整配置参数或者是需要特殊的依赖时,它就不能胜任了。我们有一个应用,它需要对图片进行多次转换,我们无法安装一个适合版本的imagemagick到托管我们Rails应用的基于Dokku的Docker容器内。尽管我们还有很多应用运行在Dokku上,但我们还是不得不把一些迁移回Heroku。

|

||||

我们启用的[DigitalOcean][4]上的很多台机器,都预装了Dokku。Dokku非常像Heroku,但是当你有复杂的项目需要调整配置参数或者是需要特殊的依赖时,它就不能胜任了。我们有一个应用,它需要对图片进行多次转换,我们把我们Rails应用的托管到基于Dokku的Docker容器,但是无法安装一个适合版本的imagemagick到里面。尽管我们还有很多应用运行在Dokku上,但我们还是不得不把一些迁移回Heroku。

|

||||

|

||||

### 第3步 - Docker ###

|

||||

|

||||

几个月前,由于开发环境和生产环境的问题重新出现,我决定试试Docker。简单来说,Docker让开发者容器化应用,简化部署。由于一个Docker容器本质上已经包含项目运行所需要的所有依赖,只要它能在你的笔记本上运行地很好,你就能确保它将也能在任何一个别的远程服务器的生产环境上运行,包括Amazon的EC2和DigitalOcean上的VPS。

|

||||

几个月前,由于开发环境和生产环境的问题重新出现,我决定试试Docker。简单来说,Docker让开发者容器化应用、简化部署。由于一个Docker容器本质上已经包含项目运行所需要的所有依赖,只要它能在你的笔记本上运行地很好,你就能确保它将也能在任何一个别的远程服务器的生产环境上运行,包括Amazon的EC2和DigitalOcean上的VPS。

|

||||

|

||||

Docker IMHO特别有意思的原因是:

|

||||

就我个人的看法来说,Docker 特别有意思的原因是:

|

||||

|

||||

- 它促进了模块化和分离关注点:你只需要去考虑应用的逻辑部分(负载均衡:1个容器;数据库:1个容器;web服务器:1个容器);

|

||||

- 在部署的配置上非常灵活:容器可以被部署在大量的HW上,也可以容易地重新部署在不同的服务器或者提供商那;

|

||||

- 它促进了模块化和关注点分离:你只需要去考虑应用的逻辑部分(负载均衡:1个容器;数据库:1个容器;web服务器:1个容器);

|

||||

- 在部署的配置上非常灵活:容器可以被部署在各种硬件上,也可以容易地重新部署在不同的服务器和不同的提供商;

|

||||

- 它允许非常细粒度地优化应用的运行环境:你可以利用你的容器来创建镜像,所以你有很多选择来配置环境。

|

||||

|

||||

它也有一些缺点:

|

||||

@ -54,15 +54,15 @@ via: http://cocoahunter.com/2015/01/23/docker-1/

|

||||

|

||||

作者:[Michelangelo Chasseur][a]

|

||||

译者:[mtunique](https://github.com/mtunique)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://cocoahunter.com/author/michelangelo/

|

||||

[1]:http://www.touchwa.re/

|

||||

[2]:http://cocoahunter.com/2015/01/23/docker-1/www.heroku.com

|

||||

[2]:http://www.heroku.com

|

||||

[3]:https://github.com/progrium/dokku

|

||||

[4]:http://cocoahunter.com/2015/01/23/docker-1/www.digitalocean.com

|

||||

[4]:http://www.digitalocean.com

|

||||

[5]:http://www.docker.com/

|

||||

[6]:http://cocoahunter.com/2015/01/23/docker-2/

|

||||

[7]:http://cocoahunter.com/2015/01/23/docker-3/

|

||||

@ -78,4 +78,3 @@ via: http://cocoahunter.com/2015/01/23/docker-1/

|

||||

[17]:

|

||||

[18]:

|

||||

[19]:

|

||||

[20]:

|

||||

@ -1,39 +1,42 @@

|

||||

Linux Shell脚本 入门25问

|

||||

Linux Shell脚本面试25问

|

||||

================================================================================

|

||||

### Q:1 Shell脚本是什么、为什么它是必需的吗? ###

|

||||

### Q:1 Shell脚本是什么、它是必需的吗? ###

|

||||

|

||||

答:一个Shell脚本是一个文本文件,包含一个或多个命令。作为系统管理员,我们经常需要发出多个命令来完成一项任务,我们可以添加这些所有命令在一个文本文件(Shell脚本)来完成日常工作任务。

|

||||

答:一个Shell脚本是一个文本文件,包含一个或多个命令。作为系统管理员,我们经常需要使用多个命令来完成一项任务,我们可以添加这些所有命令在一个文本文件(Shell脚本)来完成这些日常工作任务。

|

||||

|

||||

### Q:2 什么是默认登录shell,如何改变指定用户的登录shell ###

|

||||

|

||||

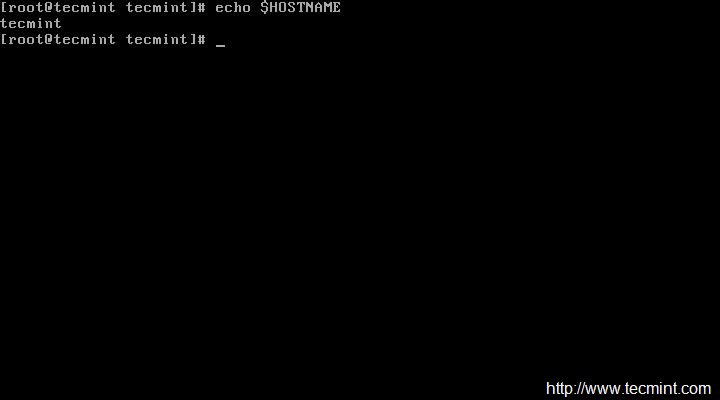

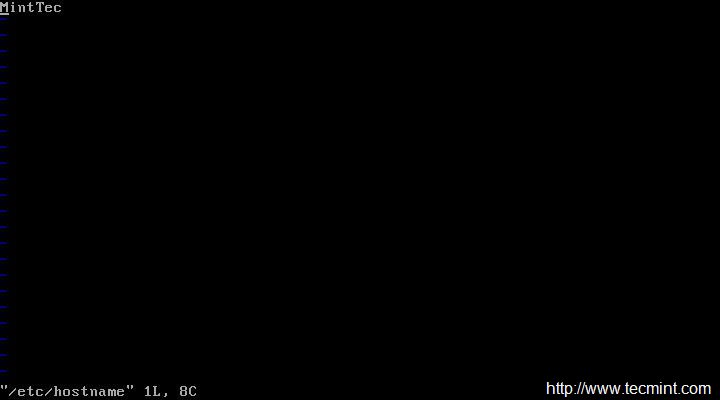

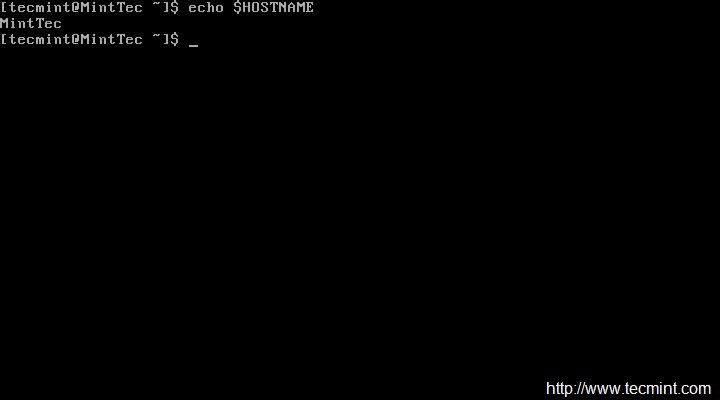

答:在Linux操作系统,“/ bin / bash”是默认登录shell,在用户创建时被分配的。使用chsh命令可以改变默认的shell。示例如下所示:

|

||||

答:在Linux操作系统,“/bin/bash”是默认登录shell,是在创建用户时分配的。使用chsh命令可以改变默认的shell。示例如下所示:

|

||||

|

||||

# chsh <username> -s <new_default_shell>

|

||||

# chsh <用户名> -s <新shell>

|

||||

# chsh linuxtechi -s /bin/sh

|

||||

|

||||

### Q:3 有什么不同的类型在shell脚本中使用? ###

|

||||

### Q:3 可以在shell脚本中使用哪些类型的变量? ###

|

||||

|

||||

答:在shell脚本,我们可以使用两个类型变量:

|

||||

答:在shell脚本,我们可以使用两种类型的变量:

|

||||

|

||||

- 系统定义变量

|

||||

- 用户定义变量

|

||||

|

||||

系统变量是由系统系统自己创建的。这些变量通常由大写字母组成,可以通过“**set**”命令查看。

|

||||

|

||||

系统变量是由系统系统自己创建的。这些变量由大写字母组成,可以通过“**set**”命令查看。

|

||||

用户变量由系统用户来生成和定义,变量的值可以通过命令“`echo $<变量名>`”查看。

|

||||

|

||||

用户变量由系统用户来生成,变量的值可以通过命令“`echo $<变量名>`”查看

|

||||

|

||||

### Q:4 如何同时重定向标准输出和错误输出到同一位置? ###

|

||||

### Q:4 如何将标准输出和错误输出同时重定向到同一位置? ###

|

||||

|

||||

答:这里有两个方法来实现:

|

||||

|

||||

方法1:2>&1 (# ls /usr/share/doc > out.txt 2>&1 )

|

||||

方法一:

|

||||

|

||||

方法二:&> (# ls /usr/share/doc &> out.txt )

|

||||

2>&1 (如# ls /usr/share/doc > out.txt 2>&1 )

|

||||

|

||||

### Q:5 shell脚本中“if”的语法 ? ###

|

||||

方法二:

|

||||

|

||||

答:基础语法:

|

||||

&> (如# ls /usr/share/doc &> out.txt )

|

||||

|

||||

### Q:5 shell脚本中“if”语法如何嵌套? ###

|

||||

|

||||

答:基础语法如下:

|

||||

|

||||

if [ 条件 ]

|

||||

then

|

||||

@ -72,9 +75,9 @@ Linux Shell脚本 入门25问

|

||||

|

||||

如果结束状态不是0,说明命令执行失败。

|

||||

|

||||

### Q:7 在shell脚本中如何比较两个数 ? ###

|

||||

### Q:7 在shell脚本中如何比较两个数字 ? ###

|

||||

|

||||

答:测试用例使用if-then来比较两个数,例子如下:

|

||||

答:在if-then中使用测试命令( -gt 等)来比较两个数字,例子如下:

|

||||

|

||||

#!/bin/bash

|

||||

x=10

|

||||

@ -89,11 +92,11 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:8 shell脚本中break命令的作用 ? ###

|

||||

|

||||

答:break命令一个简单的用途是退出执行中的循环。我们可以在while 和until循环中使用break命令跳出循环。

|

||||

答:break命令一个简单的用途是退出执行中的循环。我们可以在while和until循环中使用break命令跳出循环。

|

||||

|

||||

### Q:9 shell脚本中continue命令的作用 ? ###

|

||||

|

||||

答:continue命令不同于break命令,它只跳出当前循环的迭代,而不是整个循环。continue命令很多时候是很有用的,例如错误发生,但我们依然希望循环继续的时候。

|

||||

答:continue命令不同于break命令,它只跳出当前循环的迭代,而不是**整个**循环。continue命令很多时候是很有用的,例如错误发生,但我们依然希望继续执行大循环的时候。

|

||||

|

||||

### Q:10 告诉我shell脚本中Case语句的语法 ? ###

|

||||

|

||||

@ -116,14 +119,14 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:11 shell脚本中while循环语法 ? ###

|

||||

|

||||

答:如同for循环,while循环重复自己所有命令只要条件成立,不同于for循环。基础语法:

|

||||

答:如同for循环,while循环只要条件成立就重复它的命令块。不同于for循环,while循环会不断迭代,直到它的条件不为真。基础语法:

|

||||

|

||||

while [ 条件 ]

|

||||

do

|

||||

命令…

|

||||

done

|

||||

|

||||

### Q:12 如何使脚本成为可执行状态 ? ###

|

||||

### Q:12 如何使脚本可执行 ? ###

|

||||

|

||||

答:使用chmod命令来使脚本可执行。例子如下:

|

||||

|

||||

@ -131,11 +134,11 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:13 “#!/bin/bash”的作用 ? ###

|

||||

|

||||

答:#!/bin/bash是shell脚本的第一行,总所周知,#符号调用hash而!调用bang。它的意思是命令使用 /bin/bash来执行命令

|

||||

答:#!/bin/bash是shell脚本的第一行,称为释伴(shebang)行。这里#符号叫做hash,而! 叫做 bang。它的意思是命令通过 /bin/bash 来执行。

|

||||

|

||||

### Q:14 shell脚本中for循环语法 ? ###

|

||||

|

||||

答:for循环基础语法:

|

||||

答:for循环的基础语法:

|

||||

|

||||

for 变量 in 循环列表

|

||||

do

|

||||

@ -147,13 +150,13 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:15 如何调试shell脚本 ? ###

|

||||

|

||||

答:使用'-x'参数(sh -x myscript.sh)可以调试shell脚本。另一个种方法是使用‘-nv’参数( sh -nv myscript.sh)

|

||||

答:使用'-x'参数(sh -x myscript.sh)可以调试shell脚本。另一个种方法是使用‘-nv’参数( sh -nv myscript.sh)。

|

||||

|

||||

### Q:16 shell脚本如何比较字符串? ###

|

||||

|

||||

答:test命令可以用来比较字符串。Test命令比较字符串通过比较每一个字符来比较。

|

||||

答:test命令可以用来比较字符串。测试命令会通过比较字符串中的每一个字符来比较。

|

||||

|

||||

### Q:17 Bourne shell(bash) 中有哪些特别变量 ? ###

|

||||

### Q:17 Bourne shell(bash) 中有哪些特殊的变量 ? ###

|

||||

|

||||

答:下面的表列出了Bourne shell为命令行设置的特殊变量。

|

||||

|

||||

@ -175,7 +178,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">$0</p>

|

||||

</td>

|

||||

<td width="453" valign="top">

|

||||

<p align="left" class="western">来自命令行脚本的名字</p>

|

||||

<p align="left" class="western">命令行中的脚本名字</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -252,7 +255,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">-d 文件名</p>

|

||||

</td>

|

||||

<td width="453">

|

||||

<p align="left" class="western">返回true,如果文件存在并且是一个目录</p>

|

||||

<p align="left" class="western">如果文件存在并且是目录,返回true</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr valign="top">

|

||||

@ -260,7 +263,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">-e 文件名</p>

|

||||

</td>

|

||||

<td width="453">

|

||||

<p align="left" class="western">返回true,如果文件存在</p>

|

||||

<p align="left" class="western">如果文件存在,返回true</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr valign="top">

|

||||

@ -268,7 +271,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">-f 文件名</p>

|

||||

</td>

|

||||

<td width="453">

|

||||

<p align="left" class="western">返回true,如果文件存在并且是普通文件</p>

|

||||

<p align="left" class="western">如果文件存在并且是普通文件,返回true</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr valign="top">

|

||||

@ -276,7 +279,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">-r 文件名</p>

|

||||

</td>

|

||||

<td width="453">

|

||||

<p align="left" class="western">返回true,如果文件存在并拥有读权限</p>

|

||||

<p align="left" class="western">如果文件存在并可读,返回true</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr valign="top">

|

||||

@ -284,7 +287,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">-s 文件名</p>

|

||||

</td>

|

||||

<td width="453">

|

||||

<p align="left" class="western">返回true,如果文件存在并且不为空</p>

|

||||

<p align="left" class="western">如果文件存在并且不为空,返回true</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr valign="top">

|

||||

@ -292,7 +295,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">-w 文件名</p>

|

||||

</td>

|

||||

<td width="453">

|

||||

<p align="left" class="western">返回true,如果文件存在并拥有写权限</p>

|

||||

<p align="left" class="western">如果文件存在并可写,返回true</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr valign="top">

|

||||

@ -300,7 +303,7 @@ Linux Shell脚本 入门25问

|

||||

<p align="left" class="western">-x 文件名</p>

|

||||

</td>

|

||||

<td width="453">

|

||||

<p align="left" class="western">返回true,如果文件存在并拥有执行权限</p>

|

||||

<p align="left" class="western">如果文件存在并可执行,返回true</p>

|

||||

</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

@ -308,15 +311,15 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:19 在shell脚本中,如何写入注释 ? ###

|

||||

|

||||

答:注释可以用来描述一个脚本可以做什么和它是如何工作的。每一个注释以#开头。例子如下:

|

||||

答:注释可以用来描述一个脚本可以做什么和它是如何工作的。每一行注释以#开头。例子如下:

|

||||

|

||||

#!/bin/bash

|

||||

# This is a command

|

||||

echo “I am logged in as $USER”

|

||||

|

||||

### Q:20 如何得到来自终端的命令输入到shell脚本? ###

|

||||

### Q:20 如何让 shell 就脚本得到来自终端的输入? ###

|

||||

|

||||

答:read命令可以读取来自终端(使用键盘)的数据。read命令接入用户的输入并置于变量中。例子如下:

|

||||

答:read命令可以读取来自终端(使用键盘)的数据。read命令得到用户的输入并置于你给出的变量中。例子如下:

|

||||

|

||||

# vi /tmp/test.sh

|

||||

|

||||

@ -330,9 +333,9 @@ Linux Shell脚本 入门25问

|

||||

LinuxTechi

|

||||

My Name is LinuxTechi

|

||||

|

||||

### Q:21 如何取消设置或取消变量 ? ###

|

||||

### Q:21 如何取消变量或取消变量赋值 ? ###

|

||||

|

||||

答:“unset”命令用于去取消或取消设置一个变量。语法如下所示:

|

||||

答:“unset”命令用于取消变量或取消变量赋值。语法如下所示:

|

||||

|

||||

# unset <变量名>

|

||||

|

||||

@ -345,7 +348,7 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:23 do-while语句的基本格式 ? ###

|

||||

|

||||

答:do-while语句类似于while语句,但检查条件语句之前先执行命令。下面是用do-while语句的语法

|

||||

答:do-while语句类似于while语句,但检查条件语句之前先执行命令(LCTT 译注:意即至少执行一次。)。下面是用do-while语句的语法

|

||||

|

||||

do

|

||||

{

|

||||

@ -354,7 +357,7 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:24 在shell脚本如何定义函数呢 ? ###

|

||||

|

||||

答:函数是拥有名字的代码块。当我们定义代码块,我们就可以在我们的脚本调用名字,该块就会被执行。示例如下所示:

|

||||

答:函数是拥有名字的代码块。当我们定义代码块,我们就可以在我们的脚本调用函数名字,该块就会被执行。示例如下所示:

|

||||

|

||||

$ diskusage () { df -h ; }

|

||||

|

||||

@ -371,7 +374,7 @@ Linux Shell脚本 入门25问

|

||||

|

||||

### Q:25 如何在shell脚本中使用BC(bash计算器) ? ###

|

||||

|

||||

答:使用下列格式,在shell脚本中使用bc

|

||||

答:使用下列格式,在shell脚本中使用bc:

|

||||

|

||||

variable=`echo “options; expression” | bc`

|

||||

|

||||

@ -381,7 +384,7 @@ via: http://www.linuxtechi.com/linux-shell-scripting-interview-questions-answers

|

||||

|

||||

作者:[Pradeep Kumar][a]

|

||||

译者:[VicYu/Vic020](http://vicyu.net)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,24 +1,22 @@

|

||||

===>> boredivan翻译中 <<===

|

||||

怎样在CentOS 7.0上安装/配置VNC服务器

|

||||

怎样在CentOS 7.0上安装和配置VNC服务器

|

||||

================================================================================

|

||||

这是一个关于怎样在你的 CentOS 7 上安装配置 [VNC][1] 服务的教程。当然这个教程也适合 RHEL 7 。在这个教程里,我们将学习什么是VNC以及怎样在 CentOS 7 上安装配置 [VNC 服务器][1]。

|

||||

这是一个关于怎样在你的 CentOS 7 上安装配置 [VNC][1] 服务的教程。当然这个教程也适合 RHEL 7 。在这个教程里,我们将学习什么是 VNC 以及怎样在 CentOS 7 上安装配置 [VNC 服务器][1]。

|

||||

|

||||

我们都知道,作为一个系统管理员,大多数时间是通过网络管理服务器的。在管理服务器的过程中很少会用到图形界面,多数情况下我们只是用 SSH 来完成我们的管理任务。在这篇文章里,我们将配置 VNC 来提供一个连接我们 CentOS 7 服务器的方法。VNC 允许我们开启一个远程图形会话来连接我们的服务器,这样我们就可以通过网络远程访问服务器的图形界面了。

|

||||

|

||||

VNC 服务器是一个自由且开源的软件,它可以让用户可以远程访问服务器的桌面环境。另外连接 VNC 服务器需要使用 VNC viewer 这个客户端。

|

||||

VNC 服务器是一个自由开源软件,它可以让用户可以远程访问服务器的桌面环境。另外连接 VNC 服务器需要使用 VNC viewer 这个客户端。

|

||||

|

||||

** 一些 VNC 服务器的优点:**

|

||||

|

||||

远程的图形管理方式让工作变得简单方便。

|

||||

剪贴板可以在 CentOS 服务器主机和 VNC 客户端机器之间共享。

|

||||

CentOS 服务器上也可以安装图形工具,让管理能力变得更强大。

|

||||

只要安装了 VNC 客户端,任何操作系统都可以管理 CentOS 服务器了。

|

||||

比 ssh 图形和 RDP 连接更可靠。

|

||||

- 远程的图形管理方式让工作变得简单方便。

|

||||

- 剪贴板可以在 CentOS 服务器主机和 VNC 客户端机器之间共享。

|

||||

- CentOS 服务器上也可以安装图形工具,让管理能力变得更强大。

|

||||

- 只要安装了 VNC 客户端,通过任何操作系统都可以管理 CentOS 服务器了。

|

||||

- 比 ssh 图形转发和 RDP 连接更可靠。

|

||||

|

||||

那么,让我们开始安装 VNC 服务器之旅吧。我们需要按照下面的步骤一步一步来搭建一个有效的 VNC。

|

||||

那么,让我们开始安装 VNC 服务器之旅吧。我们需要按照下面的步骤一步一步来搭建一个可用的 VNC。

|

||||

|

||||

|

||||

首先,我们需要一个有效的桌面环境(X-Window),如果没有的话要先安装一个。

|

||||

首先,我们需要一个可用的桌面环境(X-Window),如果没有的话要先安装一个。

|

||||

|

||||

**注意:以下命令必须以 root 权限运行。要切换到 root ,请在终端下运行“sudo -s”,当然不包括双引号(“”)**

|

||||

|

||||

@ -34,7 +32,8 @@ VNC 服务器是一个自由且开源的软件,它可以让用户可以远程

|

||||

#yum install gnome-classic-session gnome-terminal nautilus-open-terminal control-center liberation-mono-fonts

|

||||

|

||||

|

||||

|

||||

|

||||

### 设置默认启动图形界面

|

||||

# unlink /etc/systemd/system/default.target

|

||||

# ln -sf /lib/systemd/system/graphical.target /etc/systemd/system/default.target

|

||||

|

||||

@ -56,13 +55,13 @@ VNC 服务器是一个自由且开源的软件,它可以让用户可以远程

|

||||

|

||||

### 3. 配置 VNC ###

|

||||

|

||||

然后,我们需要在 **/etc/systemd/system/** 目录里创建一个配置文件。我们可以从 **/lib/systemd/sytem/vncserver@.service** 拷贝一份配置文件范例过来。

|

||||

然后,我们需要在 `/etc/systemd/system/` 目录里创建一个配置文件。我们可以将 `/lib/systemd/sytem/vncserver@.service` 拷贝一份配置文件范例过来。

|

||||

|

||||

# cp /lib/systemd/system/vncserver@.service /etc/systemd/system/vncserver@:1.service

|

||||

|

||||

|

||||

|

||||

接着我们用自己最喜欢的编辑器(这儿我们用的 **nano** )打开 **/etc/systemd/system/vncserver@:1.service** ,找到下面这几行,用自己的用户名替换掉 <USER> 。举例来说,我的用户名是 linoxide 所以我用 linoxide 来替换掉 <USER> :

|

||||

接着我们用自己最喜欢的编辑器(这儿我们用的 **nano** )打开 `/etc/systemd/system/vncserver@:1.service` ,找到下面这几行,用自己的用户名替换掉 <USER> 。举例来说,我的用户名是 linoxide 所以我用 linoxide 来替换掉 <USER> :

|

||||

|

||||

ExecStart=/sbin/runuser -l <USER> -c "/usr/bin/vncserver %i"

|

||||

PIDFile=/home/<USER>/.vnc/%H%i.pid

|

||||

@ -83,8 +82,7 @@ VNC 服务器是一个自由且开源的软件,它可以让用户可以远程

|

||||

|

||||

# systemctl daemon-reload

|

||||

|

||||

Finally, we'll create VNC password for the user . To do so, first you'll need to be sure that you have sudo access to the user, here I will login to user "linoxide" then, execute the following. To login to linoxide we'll run "**su linoxide" without quotes** .

|

||||

最后还要设置一下用户的 VNC 密码。要设置某个用户的密码,必须要获得该用户的权限,这里我用 linoxide 的权限,执行“**su linoxide**”就可以了。

|

||||

最后还要设置一下用户的 VNC 密码。要设置某个用户的密码,必须要有能通过 sudo 切换到用户的权限,这里我用 linoxide 的权限,执行“`su linoxide`”就可以了。

|

||||

|

||||

# su linoxide

|

||||

$ sudo vncpasswd

|

||||

@ -112,7 +110,7 @@ Finally, we'll create VNC password for the user . To do so, first you'll need to

|

||||

|

||||

|

||||

|

||||

现在就可以用 IP 和端口号(例如 192.168.1.1:1 ,这里的端口不是服务器的端口,而是视 VNC 连接数的多少从1开始排序——译注)来连接 VNC 服务器了。

|

||||

现在就可以用 IP 和端口号(LCTT 译注:例如 192.168.1.1:1 ,这里的端口不是服务器的端口,而是视 VNC 连接数的多少从1开始排序)来连接 VNC 服务器了。

|

||||

|

||||

### 6. 用 VNC 客户端连接服务器 ###

|

||||

|

||||

@ -122,33 +120,33 @@ Finally, we'll create VNC password for the user . To do so, first you'll need to

|

||||

|

||||

你可以用像 [Tightvnc viewer][3] 和 [Realvnc viewer][4] 的客户端来连接到服务器。

|

||||

|

||||

要用其他用户和端口连接 VNC 服务器,请回到第3步,添加一个新的用户和端口。你需要创建 **vncserver@:2.service** 并替换配置文件里的用户名和之后步骤里响应的文件名、端口号。**请确保你登录 VNC 服务器用的是你之前配置 VNC 密码的时候使用的那个用户名**

|

||||

要用更多的用户连接,需要创建配置文件和端口,请回到第3步,添加一个新的用户和端口。你需要创建 `vncserver@:2.service` 并替换配置文件里的用户名和之后步骤里相应的文件名、端口号。**请确保你登录 VNC 服务器用的是你之前配置 VNC 密码的时候使用的那个用户名**。

|

||||

|

||||

VNC 服务本身使用的是5900端口。鉴于有不同的用户使用 VNC ,每个人的连接都会获得不同的端口。配置文件名里面的数字告诉 VNC 服务器把服务运行在5900的子端口上。在我们这个例子里,第一个 VNC 服务会运行在5901(5900 + 1)端口上,之后的依次增加,运行在5900 + x 号端口上。其中 x 是指之后用户的配置文件名 `vncserver@:x.service` 里面的 x 。

|

||||

|

||||

在建立连接之前,我们需要知道服务器的 IP 地址和端口。IP 地址是一台计算机在网络中的独特的识别号码。我的服务器的 IP 地址是96.126.120.92,VNC 用户端口是1。

|

||||

|

||||

VNC 服务本身使用的是5900端口。鉴于有不同的用户使用 VNC ,每个人的连接都会获得不同的端口。配置文件名里面的数字告诉 VNC 服务器把服务运行在5900的子端口上。在我们这个例子里,第一个 VNC 服务会运行在5901(5900 + 1)端口上,之后的依次增加,运行在5900 + x 号端口上。其中 x 是指之后用户的配置文件名 **vncserver@:x.service** 里面的 x 。

|

||||

|

||||

在建立连接之前,我们需要知道服务器的 IP 地址和端口。IP 地址是一台计算机在网络中的独特的识别号码。我的服务器的 IP 地址是96.126.120.92,VNC 用户端口是1。执行下面的命令可以获得服务器的公网 IP 地址。

|

||||

执行下面的命令可以获得服务器的公网 IP 地址(LCTT 译注:如果你的服务器放在内网或使用动态地址的话,可以这样获得其公网 IP 地址)。

|

||||

|

||||

# curl -s checkip.dyndns.org|sed -e 's/.*Current IP Address: //' -e 's/<.*$//'

|

||||

|

||||

### 总结 ###

|

||||

|

||||

好了,现在我们已经在运行 CentOS 7 / RHEL 7 (Red Hat Enterprises Linux)的服务器上安装配置好了 VNC 服务器。VNC 是自由及开源的软件中最简单的一种能实现远程控制服务器的一种工具,也是 Teamviewer Remote Access 的一款优秀的替代品。VNC 允许一个安装了 VNC 客户端的用户远程控制一台安装了 VNC 服务的服务器。下面还有一些经常使用的相关命令。好好玩!

|

||||

好了,现在我们已经在运行 CentOS 7 / RHEL 7 的服务器上安装配置好了 VNC 服务器。VNC 是自由开源软件中最简单的一种能实现远程控制服务器的工具,也是一款优秀的 Teamviewer Remote Access 替代品。VNC 允许一个安装了 VNC 客户端的用户远程控制一台安装了 VNC 服务的服务器。下面还有一些经常使用的相关命令。好好玩!

|

||||

|

||||

#### 其他命令: ####

|

||||

|

||||

- 关闭 VNC 服务。

|

||||

|

||||

# systemctl stop vncserver@:1.service

|

||||

# systemctl stop vncserver@:1.service

|

||||

|

||||

- 禁止 VNC 服务开机启动。

|

||||

|

||||

# systemctl disable vncserver@:1.service

|

||||

# systemctl disable vncserver@:1.service

|

||||

|

||||

- 关闭防火墙。

|

||||

|

||||

# systemctl stop firewalld.service

|

||||

# systemctl stop firewalld.service

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -156,7 +154,7 @@ via: http://linoxide.com/linux-how-to/install-configure-vnc-server-centos-7-0/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[boredivan](https://github.com/boredivan)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -5,27 +5,28 @@

|

||||

数百万个网站用着 WordPress ,这当然是有原因的。WordPress 是众多内容管理系统中对开发者最友好的,本质上说你可以用它做任何事情。不幸的是,每天都有些吓人的报告说某个主要的网站被黑了,或者某个重要的数据库被泄露了之类的,吓得人一愣一愣的。

|

||||

|

||||

如果你还没有安装 WordPress ,可以看下下面的文章。

|

||||

|

||||

在基于 Debian 的系统上:

|

||||

|

||||

- [How to install WordPress On Ubuntu][1]

|

||||

- [如何在 Ubuntu 上安装 WordPress][1]

|

||||

|

||||

在基于 RPM 的系统上:

|

||||

|

||||

- [How to install wordpress On CentOS][2]

|

||||

- [如何在 CentOS 上安装 WordPress][2]

|

||||

|

||||

我之前的文章 [How To Secure WordPress Website][3] 里面列出的**备忘录**为读者维护 WordPress 的安全提供了一点帮助。

|

||||

我之前的文章 [ 如何安全加固 WordPress 站点][3] 里面列出的**备忘录**为读者维护 WordPress 的安全提供了一点帮助。

|

||||

|

||||

在这篇文章里面,我将说明 **wpscan** 的安装过程,以及怎样使用 wpscan 来锁定任何已知的会让你的站点变得易受攻击的插件和主题。还有怎样安装和使用一款免费的网络探索和攻击的安全扫描软件 **nmap** 。最后展示的是使用 **nikto** 的步骤。

|

||||

在这篇文章里面,我将介绍 **wpscan** 的安装过程,以及怎样使用 wpscan 来定位那些已知的会让你的站点变得易受攻击的插件和主题。还有怎样安装和使用一款免费的网络探索和攻击的安全扫描软件 **nmap** 。最后展示的是使用 **nikto** 的步骤。

|

||||

|

||||

### 用 WPScan 测试 WordPress 中易受攻击的插件和主题 ###

|

||||

|

||||

**WPScan** 是一个 WordPress 黑盒安全扫描软件,用 Ruby 写成,它是专门用来寻找已知的 WordPress 的弱点的。它为安全专家和 WordPress 管理员提供了一条评估他们的 WordPress 站点的途径。它的基于开源代码,在 GPLv3 下发行。

|

||||

|

||||

### 下载和安装 WPScan ###

|

||||

#### 下载和安装 WPScan ####

|

||||

|

||||

在我们开始安装之前,很重要的一点是要注意 wpscan 不能在 Windows 下工作,所以你需要使用一台 Linux 或者 OS X 的机器来完成下面的事情。如果你只有 Windows 的系统,拿你可以下载一个 Virtualbox 然后在虚拟机里面安装任何你喜欢的 Linux 发行版本。

|

||||

|

||||

WPScan 的源代码被放在 Github 上,所以需要先安装 git。

|

||||

WPScan 的源代码放在 Github 上,所以需要先安装 git(LCTT 译注:其实你也可以直接从 Github 上下载打包的源代码,而不必非得装 git )。

|

||||

|

||||

sudo apt-get install git

|

||||

|

||||

@ -44,7 +45,7 @@ git 装好了,我们就要安装 wpscan 的依赖包了。

|

||||

|

||||

现在 wpscan 装好了,我们就可以用它来搜索我们 WordPress 站点潜在的易受攻击的文件。wpcan 最重要的方面是它能列出不仅是插件和主题,也能列出用户和缩略图的功能。WPScan 也可以用来暴力破解 WordPress —— 但这不是本文要讨论的内容。

|

||||

|

||||

#### 跟新 WPScan ####

|

||||

#### 更新 WPScan ####

|

||||

|

||||

ruby wpscan.rb --update

|

||||

|

||||

@ -95,7 +96,6 @@ git 装好了,我们就要安装 wpscan 的依赖包了。

|

||||

|

||||

列举主题和列举插件差不多,只要用"--enumerate t"就可以了。

|

||||

|

||||

|

||||

ruby wpscan.rb --url http(s)://www.host-name.com --enumerate t

|

||||

|

||||

或者只列出易受攻击的主题:

|

||||

@ -135,7 +135,7 @@ WPscan 也可以用来列举某个 WordPress 站点的用户和有效的登录

|

||||

|

||||

#### 列举 Timthumb 文件 ####

|

||||

|

||||

关于 WPscan ,我要说的最后一个功能是列举 timthub 相关的文件。近年来,timthumb 已经成为攻击者眼里的一个普通的目标,因为无数的漏洞被找出来并发到论坛上、邮件列表等等地方。用下面的命令可以通过 wpscan 找出易受攻击的 timthub 文件:

|

||||

关于 WPscan ,我要说的最后一个功能是列举 timthub (缩略图)相关的文件。近年来,timthumb 已经成为攻击者眼里的一个常见目标,因为无数的漏洞被找出来并发到论坛上、邮件列表等等地方。用下面的命令可以通过 wpscan 找出易受攻击的 timthub 文件:

|

||||

|

||||

ruby wpscan.rb --url http(s)://www.host-name.com --enumerate tt

|

||||

|

||||

@ -143,11 +143,10 @@ WPscan 也可以用来列举某个 WordPress 站点的用户和有效的登录

|

||||

|

||||

**Nmap** 是一个开源的用于网络探索和安全审查方面的工具。它可以迅速扫描巨大的网络,也可一单机使用。Nmap 用原始 IP 数据包通过不同寻常的方法判断网络里那些主机是正在工作的,那些主机上都提供了什么服务(应用名称和版本),是什么操作系统(以及版本),用的什么类型的防火墙,以及很多其他特征。

|

||||

|

||||

### 在 Debian 和 Ubuntu 上下载和安装 nmap ###

|

||||

#### 在 Debian 和 Ubuntu 上下载和安装 nmap ####

|

||||

|

||||

要在基于 Debian 和 Ubuntu 的操作系统上安装 nmap ,运行下面的命令:

|

||||

|

||||

|

||||

sudo apt-get install nmap

|

||||

|

||||

**输出样例**

|

||||

@ -168,7 +167,7 @@ WPscan 也可以用来列举某个 WordPress 站点的用户和有效的登录

|

||||

Processing triggers for man-db ...

|

||||

Setting up nmap (5.21-1.1ubuntu1) ...

|

||||

|

||||

#### 打个例子 ####

|

||||

#### 举个例子 ####

|

||||

|

||||

输出 nmap 的版本:

|

||||

|

||||

@ -182,7 +181,7 @@ WPscan 也可以用来列举某个 WordPress 站点的用户和有效的登录

|

||||

|

||||

Nmap version 5.21 ( http://nmap.org )

|

||||

|

||||

### 在 Centos 上下载和安装 nmap ###

|

||||

#### 在 Centos 上下载和安装 nmap ####

|

||||

|

||||

要在基于 RHEL 的 Linux 上面安装 nmap ,输入下面的命令:

|

||||

|

||||

@ -227,7 +226,7 @@ WPscan 也可以用来列举某个 WordPress 站点的用户和有效的登录

|

||||

|

||||

Complete!

|

||||

|

||||

#### 举个比方 ####

|

||||

#### 举个例子 ####

|

||||

|

||||

输出 nmap 版本号:

|

||||

|

||||

@ -239,7 +238,7 @@ WPscan 也可以用来列举某个 WordPress 站点的用户和有效的登录

|

||||

|

||||

#### 用 Nmap 扫描端口 ####

|

||||

|

||||

你可以用 nmap 来获得很多关于你的服务器的信息,它让你站在对你的网站不怀好意的人的角度看你自己的网站。

|

||||

你可以用 nmap 来获得很多关于你的服务器的信息,它可以让你站在对你的网站不怀好意的人的角度看你自己的网站。

|

||||

|

||||

因此,请仅用它测试你自己的服务器或者在行动之前通知服务器的所有者。

|

||||

|

||||

@ -277,7 +276,7 @@ nmap 的作者提供了一个测试服务器:

|

||||

|

||||

sudo nmap -p port_number remote_host

|

||||

|

||||

扫描一个网络,找出那些服务器在线,分别运行了什么服务

|

||||

扫描一个网络,找出哪些服务器在线,分别运行了什么服务。

|

||||

|

||||

这就是传说中的主机探索或者 ping 扫描:

|

||||

|

||||

@ -294,19 +293,19 @@ nmap 的作者提供了一个测试服务器:

|

||||

MAC Address: 00:11:32:11:15:FC (Synology Incorporated)

|

||||

Nmap done: 256 IP addresses (4 hosts up) scanned in 2.80 second

|

||||

|

||||

理解端口配置和如何发现你的服务器上的攻击的载体只是确保你的信息和你的 VPS 安全的第一步。

|

||||

理解端口配置和如何发现你的服务器上的攻击目标只是确保你的信息和你的 VPS 安全的第一步。

|

||||

|

||||

### 用 Nikto 扫描你网站的缺陷 ###

|

||||

|

||||

[Nikto][4] 网络扫描器是一个开源的 web 服务器的扫描软件,它可以用来扫描 web 服务器上的恶意的程序和文件。Nikto 也可一用来检查软件版本是否过期。Nikto 能进行简单而快速地扫描以发现服务器上危险的文件和程序。扫描结束后会给出一个日志文件。`

|

||||

[Nikto][4] 网络扫描器是一个开源的 web 服务器的扫描软件,它可以用来扫描 web 服务器上的恶意的程序和文件。Nikto 也可以用来检查软件版本是否过期。Nikto 能进行简单而快速地扫描以发现服务器上危险的文件和程序。扫描结束后会给出一个日志文件。`

|

||||

|

||||

### 在 Linux 服务器上下载和安装 Nikto ###

|

||||

#### 在 Linux 服务器上下载和安装 Nikto ####

|

||||

|

||||

Perl 在 Linux 上是预先安装好的,所以你只需要从[项目页面][5]下载 nikto ,解压到一个目录里面,然后开始测试。

|

||||

|

||||

wget https://cirt.net/nikto/nikto-2.1.4.tar.gz

|

||||

|

||||

你可以用某个归档管理工具或者用下面这个命令,同时使用 tar 和 gzip 。

|

||||

你可以用某个归档管理工具解包,或者如下同时使用 tar 和 gzip :

|

||||

|

||||

tar zxvf nikto-2.1.4.tar.gz

|

||||

cd nikto-2.1.4

|

||||

@ -369,7 +368,7 @@ Perl 在 Linux 上是预先安装好的,所以你只需要从[项目页面][5]

|

||||

|

||||

**输出样例**

|

||||

|

||||

会有十分冗长的输出,可能一开始会让人感到困惑。许多 Nikto 的警报会返回 OSVDB 序号。这是开源缺陷数据库([http://osvdb.org/][6])的意思。你可以在 OSVDB 上找出相关缺陷的深入说明。

|

||||

会有十分冗长的输出,可能一开始会让人感到困惑。许多 Nikto 的警报会返回 OSVDB 序号。这是由开源缺陷数据库([http://osvdb.org/][6])所指定。你可以在 OSVDB 上找出相关缺陷的深入说明。

|

||||

|

||||

$ nikto -h http://www.host-name.com

|

||||

- Nikto v2.1.4

|

||||

@ -402,7 +401,7 @@ Perl 在 Linux 上是预先安装好的,所以你只需要从[项目页面][5]

|

||||

|

||||

**Nikto** 是一个非常轻量级的通用工具。因为 Nikto 是用 Perl 写的,所以它可以在几乎任何服务器的操作系统上运行。

|

||||

|

||||

希望这篇文章能在你找你的 wordpress 站点的缺陷的时候给你一些提示。我之前的文章[怎样保护 WordPress 站点][7]记录了一个**清单**,可以让你保护你的 WordPress 站点的工作变得更简单。

|

||||

希望这篇文章能在你检查 wordpress 站点的缺陷的时候给你一些提示。我之前的文章[如何安全加固 WordPress 站点][7]记录了一个**清单**,可以让你保护你的 WordPress 站点的工作变得更简单。

|

||||

|

||||

有想说的,留下你的评论。

|

||||

|

||||

@ -412,7 +411,7 @@ via: http://www.unixmen.com/scan-check-wordpress-website-security-using-wpscan-n

|

||||

|

||||

作者:[anismaj][a]

|

||||

译者:[boredivan](https://github.com/boredivan)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,10 @@

|

||||

在Ubuntu14.10/Mint7上安装Gnome Flashback classical桌面

|

||||

在Ubuntu14.10/Mint7上安装Gnome Flashback 经典桌面

|

||||

================================================================================

|

||||

如果你不喜欢现在的Unity桌面,[Gnome Flashback][1]桌面环境是一个简单的并且很棒的选择,让你能找回曾经经典的桌面。

|

||||

|

||||

Gnome Flashback基于GTK3并提供与原先gnome桌面视觉上相似的界面。

|

||||

|

||||

gnome flashback的另一个改变是采用了源自mint和xface的MATE桌面,但无论mint还是xface都是基于gtk2的。

|

||||

Gnome Flashback的另一个改变是采用了源自mint和xface的MATE桌面,但无论mint还是xface都是基于GTK2的。

|

||||

|

||||

### 安装 Gnome Flashback ###

|

||||

|

||||

@ -12,7 +12,7 @@ gnome flashback的另一个改变是采用了源自mint和xface的MATE桌面,

|

||||

|

||||

$ sudo apt-get install gnome-session-flashback

|

||||

|

||||

然后注销到达登录界面,单击密码输入框右上角的徽标型按钮,即可选择桌面环境。可供选择的有Gnome Flashback (Metacity) 会话模式和Gnome Flashback (Compiz)会话模式。

|

||||

然后注销返回到登录界面,单击密码输入框右上角的徽标型按钮,即可选择桌面环境。可供选择的有Gnome Flashback (Metacity) 会话模式和Gnome Flashback (Compiz)会话模式。

|

||||

|

||||

Metacity更轻更快,而Compiz则能带给你更棒的桌面效果。下面是我使用gnome flashback桌面的截图。

|

||||

|

||||

@ -24,17 +24,17 @@ Metacity更轻更快,而Compiz则能带给你更棒的桌面效果。下面是

|

||||

|

||||

### 1. 安装 Gnome Tweak Tool ###

|

||||

|

||||

Gnome Tweak Tool能够帮助你定制比如字体、主题等,那些在Unity桌面的控制中心十分困难或是不可能完成的任务。

|

||||

Gnome Tweak Tool能够帮助你定制比如字体、主题等,这些在Unity桌面的控制中心是十分困难,几乎不可能完成的任务。

|

||||

|

||||

$ sudo apt-get install gnome-tweak-tool

|

||||

|

||||

启动按步骤 应用程序 > 系统工具 > 首选项 > Tweak Tool

|

||||

启动按步骤: 应用程序 > 系统工具 > 首选项 > Tweak Tool

|

||||

|

||||

### 2. 在面板上添加小应用 ###

|

||||

|

||||

默认的右键点击面板是没有效果的。你可以尝试在右键点击面板的同时按住键盘上的Alt+Super (win)键,这样定制面板的相关选项将会出现。

|

||||

默认的右键点击面板是没有效果的。你可以尝试在右键点击面板的同时按住键盘上的Alt+Super (win)键,这样就会出现定制面板的相关选项。

|

||||

|

||||

你可以修改或删除面板并在上面添加些小应用。在这个例子中我们移除了底部面板,并用Plank dock来代替它的位置。

|

||||

你可以修改或删除面板,并在上面添加些小应用。在这个例子中我们移除了底部面板,并用Plank dock来代替它的位置。

|

||||

|

||||

在顶部面板的中间添加一个显示时间的小应用。通过配置使它显示时间和天气。

|

||||

|

||||

@ -42,7 +42,7 @@ Gnome Tweak Tool能够帮助你定制比如字体、主题等,那些在Unity

|

||||

|

||||

### 3. 将窗口标题栏的按钮右置 ###

|

||||

|

||||

在ubuntu中,最小化、最大化和关闭按钮默认实在标题栏的左侧的。需要稍作手脚才能让他们乖乖回到右边去。

|

||||

在ubuntu中,最小化、最大化和关闭按钮默认是在标题栏左侧的。需要稍作手脚才能让他们乖乖回到右边去。

|

||||

|

||||

想让窗口的按钮到右边可以使用下面的命令,这是我在askubuntu上找到的。

|

||||

|

||||

@ -50,7 +50,7 @@ Gnome Tweak Tool能够帮助你定制比如字体、主题等,那些在Unity

|

||||

|

||||

### 4.安装 Plank dock ###

|

||||

|

||||

plank dock位于屏幕底部用于启动应用和切换打开的窗口。会在必要的时间隐藏自己,并在需要的时候出现。elementary OS使用的dock就是plank dock。

|

||||

plank dock位于屏幕底部,用于启动应用和切换打开的窗口。它会在必要的时间隐藏自己,并在需要的时候出现。elementary OS使用的dock就是plank dock。

|

||||

|

||||

运行以下命令安装:

|

||||

|

||||

@ -58,11 +58,11 @@ plank dock位于屏幕底部用于启动应用和切换打开的窗口。会在

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install plank -y

|

||||

|

||||

现在启动 应用程序 > 附件 > Plank。若想让它开机自动启动,找到 应用程序 > 系统工具 > 首选项 > 启动应用程序 并将“plank”的命令加到列表中。

|

||||

现在启动:应用程序 > 附件 > Plank。若想让它开机自动启动,找到 应用程序 > 系统工具 > 首选项 > 启动应用程序 并将“plank”的命令加到列表中。

|

||||

|

||||

### 5. 安装 Conky 系统监视器 ###

|

||||

|

||||

Conky非常酷,它用系统的中如CPU和内存使用率的统计值来装饰桌面。它不太占资源并且运行的大部分时间都不惹麻烦。

|

||||

Conky非常酷,它用系统的中如CPU和内存使用率的统计值来装饰桌面。它不太占资源,并且绝大部分情况下运行都不会有什么问题。

|

||||

|

||||

运行如下命令安装:

|

||||

|

||||

@ -70,7 +70,7 @@ Conky非常酷,它用系统的中如CPU和内存使用率的统计值来装饰

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install conky-manager

|

||||

|

||||

现在启动 应用程序 > 附件 > Conky Manager 选择你想在桌面上显示的部件。Conky Manager同样可以配置到启动项中。

|

||||

现在启动:应用程序 > 附件 > Conky Manager ,选择你想在桌面上显示的部件。Conky Manager同样可以配置到启动项中。

|

||||

|

||||

### 6. 安装CCSM ###

|

||||

|

||||

@ -80,10 +80,10 @@ Conky非常酷,它用系统的中如CPU和内存使用率的统计值来装饰

|

||||

|

||||

$ sudo apt-get install compizconfig-settings-manager

|

||||

|

||||

启动按步骤 应用程序 > 系统工具 > 首选项 > CompizConfig Settings Manager.

|

||||

启动按步骤: 应用程序 > 系统工具 > 首选项 > CompizConfig Settings Manager.

|

||||

|

||||

|

||||

>在虚拟机中经常会发生compiz会话中装饰窗口消失。可以通过启动Compiz设置,在打开"Copy to texture",注销后重新登录即可。

|

||||

> 在虚拟机中经常会发生compiz会话中装饰窗口消失。可以通过启动Compiz设置,并启用"Copy to texture"插件,注销后重新登录即可。

|

||||

|

||||

不过值得一提的是Compiz 会话会比Metacity慢。

|

||||

|

||||

@ -92,8 +92,8 @@ Conky非常酷,它用系统的中如CPU和内存使用率的统计值来装饰

|

||||

via: http://www.binarytides.com/install-gnome-flashback-ubuntu/

|

||||

|

||||

作者:[Silver Moon][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[martin2011qi](https://github.com/martin2011qi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,144 @@

|

||||

在 Linux 中以交互方式实时查看Apache web访问统计

|

||||

================================================================================

|

||||

|

||||

无论你是在网站托管业务,还是在自己的VPS上运行几个网站,你总会有需要显示访客统计信息,例如前几的访客、访问请求的文件(无论动态或者静态)、所用的带宽、客户端的浏览器,和访问的来源网站,等等。

|

||||

|

||||

[GoAccess][1] 是一款用于Apache或者Nginx的命令行日志分析器和交互式查看器。使用这款工具,你不仅可以浏览到之前提及的相关数据,还可以通过分析网站服务器日志来进一步挖掘数据 - 而且**这一切都是在一个终端窗口实时输出的**。由于今天的[大多数web服务器][2]都使用Debian的衍生版或者基于RedHat的发行版来作为底层操作系统,所以本文中我告诉你如何在Debian和CentOS中安装和使用GoAccess。

|

||||

|

||||

### 在Linux系统安装GoAccess ###

|

||||

|

||||

在Debian,Ubuntu及其衍生版本,运行以下命令来安装GoAccess:

|

||||

|

||||

# aptitude install goaccess

|

||||

|

||||

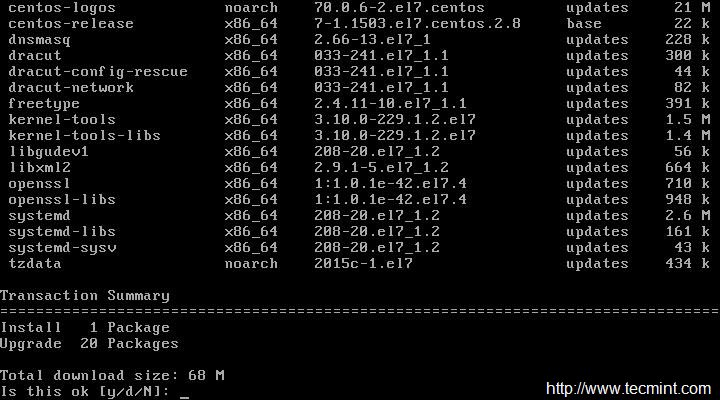

在CentOS中,你将需要使你的[EPEL 仓库][3]可用然后执行以下命令:

|

||||

|

||||

# yum install goaccess

|

||||

|

||||

在Fedora,同样使用yum命令:

|

||||

|

||||

# yum install goaccess

|

||||

|

||||

|

||||

如果你想从源码安装GoAccess来使用更多功能(例如 GeoIP 定位功能),需要在你的操作系统安装[必需的依赖包][4],然后按以下步骤进行:

|

||||

|

||||

# wget http://tar.goaccess.io/goaccess-0.8.5.tar.gz

|

||||

# tar -xzvf goaccess-0.8.5.tar.gz

|

||||

# cd goaccess-0.8.5/

|

||||

# ./configure --enable-geoip

|

||||

# make

|

||||

# make install

|

||||

|

||||

以上安装的版本是 0.8.5,但是你也可以在该软件的网站[下载页][5]确认是否是最新版本。

|

||||

|

||||

由于GoAccess不需要后续的配置,一旦安装你就可以马上使用。

|

||||

|

||||

### 运行 GoAccess ###

|

||||

|

||||

开始使用GoAccess,只需要对它指定你的Apache访问日志。

|

||||

|

||||

对于Debian及其衍生版本:

|

||||

|

||||

# goaccess -f /var/log/apache2/access.log

|

||||

|

||||

基于红帽的发行版:

|

||||

|

||||

# goaccess -f /var/log/httpd/access_log

|

||||

|

||||

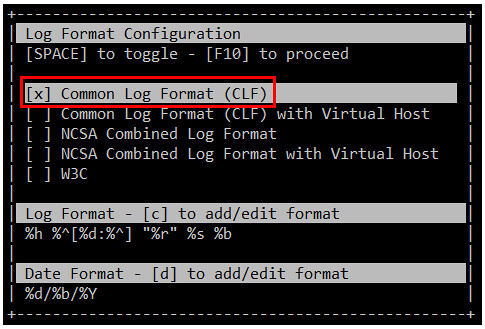

当你第一次启动GoAccess,你将会看到如下的屏幕中选择日期和日志格式。正如前面所述,你可以按空格键进行选择,并按F10确认。至于日期和日志格式,你可能需要参考[Apache 文档][6]来刷新你的记忆。

|

||||

|

||||

在这个例子中,选择常见日志格式(Common Log Format(CLF)):

|

||||

|

||||

|

||||

|

||||

然后按F10 确认。你将会从屏幕上看到统计数据。为了简洁起见,这里只显示了首部,也就是日志文件的摘要,如下图所示:

|

||||

|

||||

|

||||

|

||||

### 通过 GoAccess来浏览网站服务器统计数据 ###

|

||||

|

||||

你可以按向下的箭头滚动页面,你会发现以下区域,它们是按请求排序的。这里提及的目录顺序可能会根据你的发行版或者你所选的安装方式(从源和库)不同而不同:

|

||||

|

||||

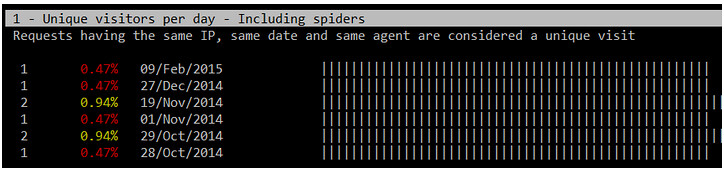

1. 每天唯一访客(来自同样IP、同一日期和同一浏览器的请求被认为是是唯一访问)

|

||||

|

||||

|

||||

|

||||

2. 请求的文件(网页URL)

|

||||

|

||||

|

||||

|

||||

3. 请求的静态文件(例如,.png文件,.js文件等等)

|

||||

|

||||

4. 来源的URLs(每一个URL请求的出处)

|

||||

|

||||

5. HTTP 404 未找到的响应代码

|

||||

|

||||

|

||||

|

||||

6. 操作系统

|

||||

|

||||

7. 浏览器

|

||||

|

||||

8. 主机地址(客户端IP地址)

|

||||

|

||||

|

||||

|

||||

9. HTTP 状态代码

|

||||

|

||||

|

||||

|

||||

10. 前几位的来源站点

|

||||

|

||||

11. 来自谷歌搜索引擎的前几位的关键字

|

||||

|

||||

如果你想要检查已经存档的日志,你可以通过管道将它们发送给GoAccess,如下:

|

||||

|

||||

在Debian及其衍生版本:

|

||||

|

||||

# zcat -f /var/log/apache2/access.log* | goaccess

|

||||

|

||||

在基于红帽的发行版:

|

||||

|

||||

# cat /var/log/httpd/access* | goaccess

|

||||

|

||||

如果你需要上述部分的详细报告(1至11项),直接按下其序号再按O(大写o),就可以显示出你需要的详细视图。下面的图像显示5-O的输出(先按5,再按O)

|

||||

|

||||

|

||||

|

||||

如果要显示GeoIP位置信息,打开主机部分的详细视图,如前面所述,你将会看到正在请求你的服务器的客户端IP地址所在的位置。

|

||||

|

||||

|

||||

|

||||

如果你的系统还不是很忙碌,以上提及的章节将不会显示大量的信息,但是这种情形可以通过在你网站服务器越来越多的请求发生改变。

|

||||

|

||||

### 保存用于离线分析的报告 ###

|

||||

|

||||

有时候你不想每次都实时去检查你的系统状态,可以保存一份在线的分析文件或打印出来。要生成一个HTML报告,只需要通过之前提到GoAccess命令,将输出来重定向到一个HTML文件即可。然后,用web浏览器来将这份报告打开即可。

|

||||

|

||||

# zcat -f /var/log/apache2/access.log* | goaccess > /var/www/webserverstats.html

|

||||

|

||||

一旦报告生成,你将需要点击展开的链接来显示每个类别详细的视图信息:

|

||||

|

||||

|

||||

|

||||

可以查看youtube视频:https://youtu.be/UVbLuaOpYdg 。

|

||||

|

||||

正如我们通过这篇文章讨论,GoAccess是一个非常有价值的工具,它能给系统管理员实时提供可视的HTTP 统计分析。虽然GoAccess的默认输出是标准输出,但是你也可以将他们保存到JSON,HTML或者CSV文件。这种转换可以让 GoAccess在监控和显示网站服务器的统计数据时更有用。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/interactive-apache-web-server-log-analyzer-linux.html

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[disylee](https://github.com/disylee)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/gabriel

|

||||

[1]:http://goaccess.io/

|

||||

[2]:http://w3techs.com/technologies/details/os-linux/all/all

|

||||

[3]:http://linux.cn/article-2324-1.html

|

||||

[4]:http://goaccess.io/download#dependencies

|

||||

[5]:http://goaccess.io/download

|

||||

[6]:http://httpd.apache.org/docs/2.4/logs.html

|

||||

@ -6,7 +6,7 @@ Linux 有问必答:如何在Linux中修复“fatal error: lame/lame.h: No such

|

||||

|

||||

fatal error: lame/lame.h: No such file or directory

|

||||

|

||||

[LAME][1]("LAME Ain't an MP3 Encoder")是一个流行的LPGL授权的MP3编码器。许多视频编码工具使用或者支持LAME。这其中有[FFmpeg][2]、 VLC、 [Audacity][3]、 K3b、 RipperX等。

|

||||

[LAME][1]("LAME Ain't an MP3 Encoder")是一个流行的LPGL授权的MP3编码器。许多视频编码工具使用或者支持LAME,如 [FFmpeg][2]、 VLC、 [Audacity][3]、 K3b、 RipperX等。

|

||||

|

||||

要修复这个编译错误,你需要安装LAME库和开发文件,按照下面的来。

|

||||

|

||||

@ -20,7 +20,7 @@ Debian和它的衍生版在基础库中已经提供了LAME库,因此可以用a

|

||||

|

||||

在基于RED HAT的版本中,LAME在RPM Fusion的免费仓库中就有,那么你需要先设置[RPM Fusion (免费)仓库][4]。

|

||||

|

||||

RPM Fusion设置完成后,如下安装LAME开发文件。

|

||||

RPM Fusion设置完成后,如下安装LAME开发包。

|

||||

|

||||

$ sudo yum --enablerepo=rpmfusion-free-updates install lame-devel

|

||||

|

||||

@ -42,7 +42,7 @@ RPM Fusion设置完成后,如下安装LAME开发文件。

|

||||

|

||||

$ ./configure --help

|

||||

|

||||

共享/静态LAME默认安装在 /usr/local/lib。要让共享库可以被其他程序使用,完成最后一步:

|

||||

共享/静态的LAME库默认安装在 /usr/local/lib。要让共享库可以被其他程序使用,完成最后一步:

|

||||

|

||||

用编辑器打开 /etc/ld.so.conf,加入下面这行。

|

||||

|

||||

@ -56,7 +56,6 @@ RPM Fusion设置完成后,如下安装LAME开发文件。

|

||||

|

||||

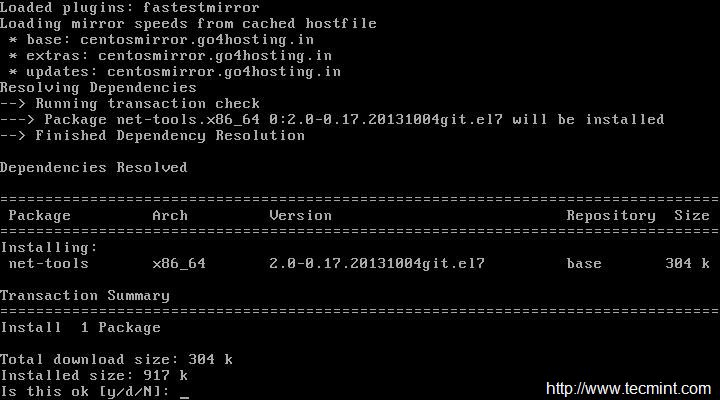

如果你的发行版(比如 CentOS 7)没有提供预编译的LAME库,或者你想要自定义LAME库,你需要从源码自己编译。下面是在基于Red Hat的系统中编译安装LAME库的方法。

|

||||

|

||||

|

||||

$ sudo yum install gcc git

|

||||

$ wget http://downloads.sourceforge.net/project/lame/lame/3.99/lame-3.99.5.tar.gz

|

||||

$ tar -xzf lame-3.99.5.tar.gz

|

||||

@ -87,7 +86,7 @@ via: http://ask.xmodulo.com/fatal-error-lame-no-such-file-or-directory.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

Linux有问必答 -- 如何在树莓派上安装USB网络摄像头

|

||||

Linux有问必答:如何在树莓派上安装USB网络摄像头

|

||||

================================================================================

|

||||

> **Question**: 我可以在树莓派上使用标准的USB网络摄像头么?我该如何检查USB网络摄像头与树莓派是否兼容?另外我该如何在树莓派上安装它?

|

||||

|

||||

如果你想在树莓上拍照或者录影,你可以安装[树莓派的摄像头板][1]。如果你不想要为摄像头模块花费额外的金钱,那有另外一个方法,就是你常见的[USB 摄像头][2]。你可能已经在PC上安装了。

|

||||

如果你想在树莓上拍照或者录影,你可以安装[树莓派的摄像头板][1]。如果你不想要为摄像头模块花费额外的金钱,那有另外一个方法,就是你常见的[USB 摄像头][2]。你可能已经在PC上安装过了。

|

||||

|

||||

本教程中,我会展示如何在树莓派上设置摄像头。我们假设你使用的系统是Raspbian。

|

||||

|

||||

在此之前,你最好检查一下你的摄像头是否在[这些][3]已知与树莓派兼容的摄像头之中。如果你的摄像头不在这个兼容列表中,不要丧气,仍然有可能你的摄像头被树莓派检测到。

|

||||

在此之前,你最好检查一下你的摄像头是否在[这些][3]已知与树莓派兼容的摄像头之中。如果你的摄像头不在这个兼容列表中,不要丧气,仍然有可能树莓派能检测到你的摄像头。

|

||||

|

||||

### 检查USB摄像头是否雨树莓派兼容 ###

|

||||

|

||||

@ -34,7 +34,7 @@ fswebcam安装完成后,在终端中运行下面的命令来抓去一张来自

|

||||

|

||||

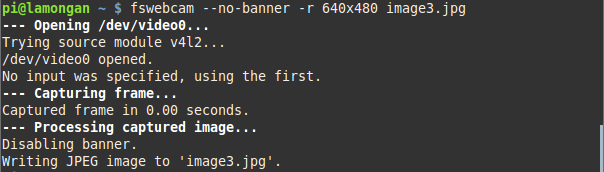

$ fswebcam --no-banner -r 640x480 image.jpg

|

||||

|

||||

这条命令可以抓取一张640x480分辨率的照片,并且用jpg格式保存。它不会在照片的地步留下任何标志.

|

||||

这条命令可以抓取一张640x480分辨率的照片,并且用jpg格式保存。它不会在照片的底部留下任何水印.

|

||||

|

||||

|

||||

|

||||

@ -52,7 +52,7 @@ via: http://ask.xmodulo.com/install-usb-webcam-raspberry-pi.html

|

||||

|

||||

作者:[Kristophorus Hadiono][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||