mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge branch 'master' of git@github.com:LCTT/TranslateProject.git

This commit is contained in:

commit

10d78d7662

@ -1,5 +1,4 @@

|

||||

|

||||

Linux 有问必答 - 如何在 Linux 中统计一个进程的线程数

|

||||

Linux 有问必答:如何在 Linux 中统计一个进程的线程数

|

||||

================================================================================

|

||||

> **问题**: 我正在运行一个程序,它在运行时会派生出多个线程。我想知道程序在运行时会有多少线程。在 Linux 中检查进程的线程数最简单的方法是什么?

|

||||

|

||||

@ -7,11 +6,11 @@ Linux 有问必答 - 如何在 Linux 中统计一个进程的线程数

|

||||

|

||||

### 方法一: /proc ###

|

||||

|

||||

proc 伪文件系统,它驻留在 /proc 目录,这是最简单的方法来查看任何活动进程的线程数。 /proc 目录以可读文本文件形式输出,提供现有进程和系统硬件相关的信息如 CPU, interrupts, memory, disk, 等等.

|

||||

proc 伪文件系统,它驻留在 /proc 目录,这是最简单的方法来查看任何活动进程的线程数。 /proc 目录以可读文本文件形式输出,提供现有进程和系统硬件相关的信息如 CPU、中断、内存、磁盘等等.

|

||||

|

||||

$ cat /proc/<pid>/status

|

||||

|

||||

上面的命令将显示进程 <pid> 的详细信息,包括过程状态(例如, sleeping, running),父进程 PID,UID,GID,使用的文件描述符的数量,以及上下文切换的数量。输出也包括**进程创建的总线程数**如下所示。

|

||||

上面的命令将显示进程 \<pid> 的详细信息,包括过程状态(例如, sleeping, running),父进程 PID,UID,GID,使用的文件描述符的数量,以及上下文切换的数量。输出也包括**进程创建的总线程数**如下所示。

|

||||

|

||||

Threads: <N>

|

||||

|

||||

@ -23,11 +22,11 @@ Linux 有问必答 - 如何在 Linux 中统计一个进程的线程数

|

||||

|

||||

输出表明该进程有28个线程。

|

||||

|

||||

或者,你可以在 /proc/<pid>/task 中简单的统计目录的数量,如下所示。

|

||||

或者,你可以在 /proc/<pid>/task 中简单的统计子目录的数量,如下所示。

|

||||

|

||||

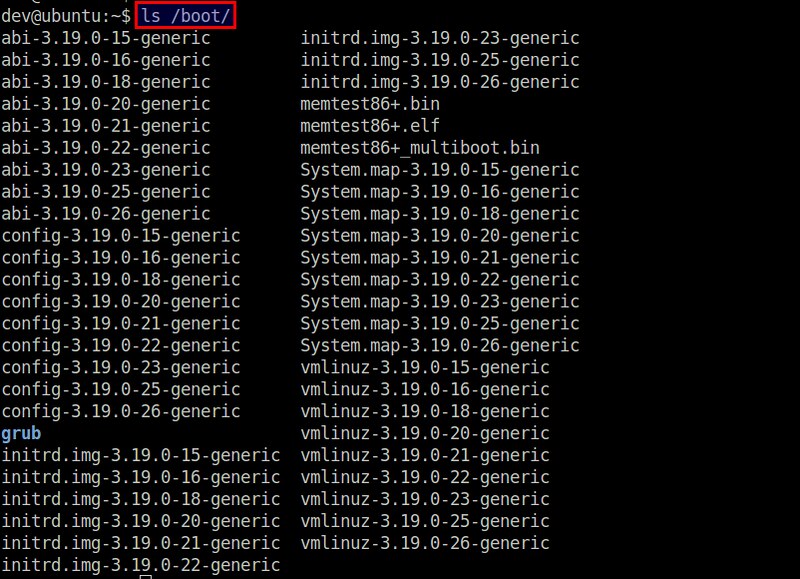

$ ls /proc/<pid>/task | wc

|

||||

|

||||

这是因为,对于一个进程中创建的每个线程,在 /proc/<pid>/task 中会创建一个相应的目录,命名为其线程 ID。由此在 /proc/<pid>/task 中目录的总数表示在进程中线程的数目。

|

||||

这是因为,对于一个进程中创建的每个线程,在 `/proc/<pid>/task` 中会创建一个相应的目录,命名为其线程 ID。由此在 `/proc/<pid>/task` 中目录的总数表示在进程中线程的数目。

|

||||

|

||||

### 方法二: ps ###

|

||||

|

||||

@ -35,7 +34,7 @@ Linux 有问必答 - 如何在 Linux 中统计一个进程的线程数

|

||||

|

||||

$ ps hH p <pid> | wc -l

|

||||

|

||||

如果你想监视一个进程的不同线程消耗的硬件资源(CPU & memory),请参阅[此教程][1]。(注:此文我们翻译过)

|

||||

如果你想监视一个进程的不同线程消耗的硬件资源(CPU & memory),请参阅[此教程][1]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -43,9 +42,9 @@ via: http://ask.xmodulo.com/number-of-threads-process-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://ask.xmodulo.com/author/nanni

|

||||

[1]:http://ask.xmodulo.com/view-threads-process-linux.html

|

||||

[1]:https://linux.cn/article-5633-1.html

|

||||

@ -1,14 +1,15 @@

|

||||

网络管理命令行工具基础,Nmcli

|

||||

Nmcli 网络管理命令行工具基础

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

### 介绍 ###

|

||||

|

||||

在本教程中,我们会在CentOS / RHEL 7中讨论网络管理工具,也叫**nmcli**。那些使用**ifconfig**的用户应该在CentOS 7中避免使用这个命令。

|

||||

在本教程中,我们会在CentOS / RHEL 7中讨论网络管理工具(NetworkManager command line tool),也叫**nmcli**。那些使用**ifconfig**的用户应该在CentOS 7中避免使用**ifconfig** 了。

|

||||

|

||||

让我们用nmcli工具配置一些网络设置。

|

||||

|

||||

### 要得到系统中所有接口的地址信息 ###

|

||||

#### 要得到系统中所有接口的地址信息 ####

|

||||

|

||||

[root@localhost ~]# ip addr show

|

||||

|

||||

@ -27,13 +28,13 @@

|

||||

inet6 fe80::20c:29ff:fe67:2f4c/64 scope link

|

||||

valid_lft forever preferred_lft forever

|

||||

|

||||

#### 检索与连接的接口相关的数据包统计 ####

|

||||

#### 检索与已连接的接口相关的数据包统计 ####

|

||||

|

||||

[root@localhost ~]# ip -s link show eno16777736

|

||||

|

||||

**示例输出:**

|

||||

|

||||

|

||||

|

||||

|

||||

#### 得到路由配置 ####

|

||||

|

||||

@ -50,11 +51,11 @@

|

||||

|

||||

输出像traceroute,但是更加完整。

|

||||

|

||||

|

||||

|

||||

|

||||

### nmcli 工具 ###

|

||||

|

||||

**Nmcli** 是一个非常丰富和灵活的命令行工具。nmcli使用的情况有:

|

||||

**nmcli** 是一个非常丰富和灵活的命令行工具。nmcli使用的情况有:

|

||||

|

||||

- **设备** – 正在使用的网络接口

|

||||

- **连接** – 一组配置设置,对于一个单一的设备可以有多个连接,可以在连接之间切换。

|

||||

@ -63,7 +64,7 @@

|

||||

|

||||

[root@localhost ~]# nmcli connection show

|

||||

|

||||

|

||||

|

||||

|

||||

#### 得到特定连接的详情 ####

|

||||

|

||||

@ -71,7 +72,7 @@

|

||||

|

||||

**示例输出:**

|

||||

|

||||

|

||||

|

||||

|

||||

#### 得到网络设备状态 ####

|

||||

|

||||

@ -89,7 +90,7 @@

|

||||

|

||||

这里,

|

||||

|

||||

- **Connection add** – 添加新的连接

|

||||

- **connection add** – 添加新的连接

|

||||

- **con-name** – 连接名

|

||||

- **type** – 设备类型

|

||||

- **ifname** – 接口名

|

||||

@ -100,7 +101,7 @@

|

||||

|

||||

Connection 'dhcp' (163a6822-cd50-4d23-bb42-8b774aeab9cb) successfully added.

|

||||

|

||||

#### 不同过dhcp分配IP,使用“static”添加地址 ####

|

||||

#### 不通过dhcp分配IP,使用“static”添加地址 ####

|

||||

|

||||

[root@localhost ~]# nmcli connection add con-name "static" ifname eno16777736 autoconnect no type ethernet ip4 192.168.1.240 gw4 192.168.1.1

|

||||

|

||||

@ -112,25 +113,23 @@

|

||||

|

||||

[root@localhost ~]# nmcli connection up eno1

|

||||

|

||||

Again Check, whether ip address is changed or not.

|

||||

再检查一遍,ip地址是否已经改变

|

||||

|

||||

[root@localhost ~]# ip addr show

|

||||

|

||||

|

||||

|

||||

|

||||

#### 添加DNS设置到静态连接中 ####

|

||||

|

||||

[root@localhost ~]# nmcli connection modify "static" ipv4.dns 202.131.124.4

|

||||

|

||||

#### 添加额外的DNS值 ####

|

||||

#### 添加更多的DNS ####

|

||||

|

||||

[root@localhost ~]# nmcli connection modify "static" +ipv4.dns 8.8.8.8

|

||||

[root@localhost ~]# nmcli connection modify "static" +ipv4.dns 8.8.8.8

|

||||

|

||||

**注意**:要使用额外的**+**符号,并且要是**+ipv4.dns**,而不是**ip4.dns**。

|

||||

|

||||

|

||||

添加一个额外的ip地址:

|

||||

####添加一个额外的ip地址####

|

||||

|

||||

[root@localhost ~]# nmcli connection modify "static" +ipv4.addresses 192.168.200.1/24

|

||||

|

||||

@ -138,11 +137,11 @@ Again Check, whether ip address is changed or not.

|

||||

|

||||

[root@localhost ~]# nmcli connection up eno1

|

||||

|

||||

|

||||

|

||||

|

||||

你会看见,设置生效了。

|

||||

|

||||

完结

|

||||

完结。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -150,6 +149,6 @@ via: http://www.unixmen.com/basics-networkmanager-command-line-tool-nmcli/

|

||||

|

||||

作者:Rajneesh Upadhyay

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

@ -18,9 +18,9 @@ Elementary OS 它自己本身借鉴了 OS X,也就不奇怪它的很多第三

|

||||

|

||||

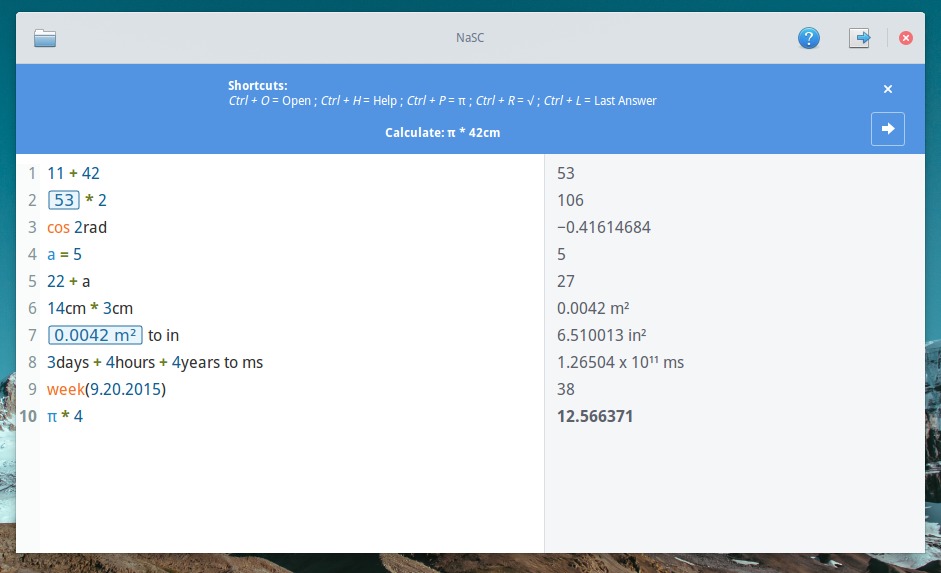

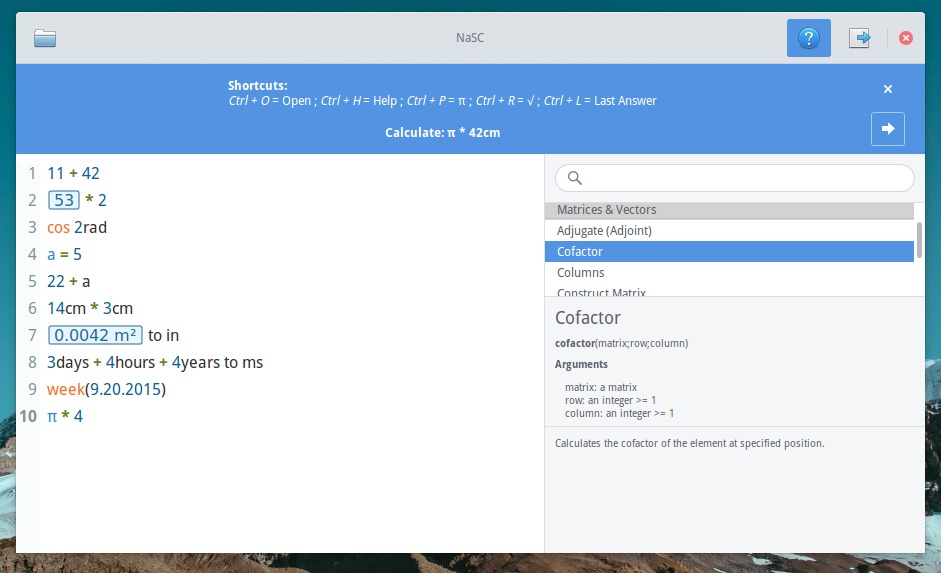

### 在 Ubuntu、Elementary OS 和 Mint 上安装 NaSC ###

|

||||

|

||||

安装 NaSC 有一个可用的 PPA。PPA 中说 ‘每日’,意味着所有构建(包括不稳定),但作为我的快速测试,并没什么影响。

|

||||

安装 NaSC 有一个可用的 PPA。PPA 是 ‘每日’,意味着每日构建(意即,不稳定),但作为我的快速测试,并没什么影响。

|

||||

|

||||

打卡一个终端并运行下面的命令:

|

||||

打开一个终端并运行下面的命令:

|

||||

|

||||

sudo apt-add-repository ppa:nasc-team/daily

|

||||

sudo apt-get update

|

||||

@ -35,7 +35,7 @@ Elementary OS 它自己本身借鉴了 OS X,也就不奇怪它的很多第三

|

||||

sudo apt-get remove nasc

|

||||

sudo apt-add-repository --remove ppa:nasc-team/daily

|

||||

|

||||

如果你试用了这个软件,要分享你的经验哦。除此之外,你也可以在第三方 Elementary OS 应用中体验[Vocal podcast app for Linux][3]。

|

||||

如果你试用了这个软件,要分享你的经验哦。除此之外,你也可以在第三方 Elementary OS 应用中体验 [Vocal podcast app for Linux][3]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -43,7 +43,7 @@ via: http://itsfoss.com/math-ubuntu-nasc/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[ictlyh](http://www.mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,14 @@

|

||||

Linux有问必答--如何删除Ubuntu上不再使用的老内核

|

||||

Linux有问必答:如何删除Ubuntu上不再使用的旧内核

|

||||

================================================================================

|

||||

> **提问**:过去我已经在我的Ubuntu上升级了几次内核。现在我想要删除这些旧的内核镜像来节省我的磁盘空间。如何用最简单的方法删除Ubuntu上先前版本的内核?

|

||||

|

||||

在Ubuntu上,有几个方法来升级内核。在Ubuntu桌面中,软件更新允许你每天检查并更新到最新的内核上。在Ubuntu服务器上,一个无人值守的包会自动更新内核最为一项最要的安全更新。然而,你可以手动用apt-get或者aptitude命令来更新。

|

||||

在Ubuntu上,有几个方法来升级内核。在Ubuntu桌面中,软件更新允许你每天检查并更新到最新的内核上。在Ubuntu服务器上,最为重要的安全更新项目之一就是 unattended-upgrades 软件包会自动更新内核。然而,你也可以手动用apt-get或者aptitude命令来更新。

|

||||

|

||||

随着时间的流逝,持续的内核更新会在系统中积聚大量的不再使用的内核,浪费你的磁盘空间。每个内核镜像和其相关联的模块/头文件会占用200-400MB的磁盘空间,因此由不再使用的内核而浪费的磁盘空间会快速地增加。

|

||||

|

||||

|

||||

|

||||

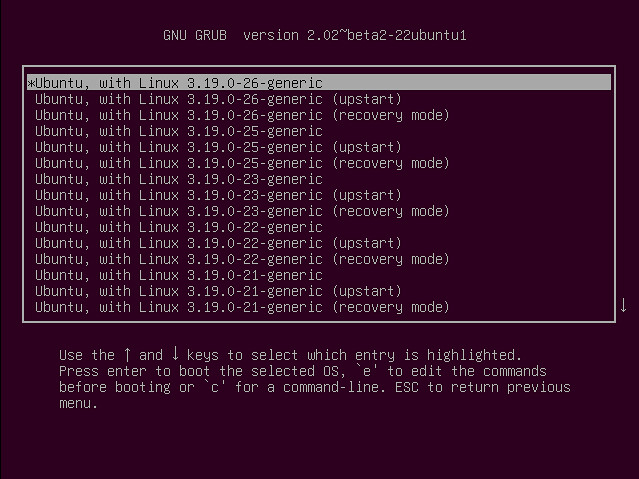

GRUB管理器为每个旧内核都维护了一个GRUB入口,防止你想要进入它们。

|

||||

GRUB管理器为每个旧内核都维护了一个GRUB入口,以备你想要使用它们。

|

||||

|

||||

|

||||

|

||||

@ -18,7 +18,7 @@ GRUB管理器为每个旧内核都维护了一个GRUB入口,防止你想要进

|

||||

|

||||

在删除旧内核之前,记住最好留有2个最近的内核(最新的和上一个版本),以防主要的版本出错。现在就让我们看看如何在Ubuntu上清理旧内核。

|

||||

|

||||

在Ubuntu内核镜像包哈了以下的包。

|

||||

在Ubuntu内核镜像包含了以下的包。

|

||||

|

||||

- **linux-image-<VERSION-NUMBER>**: 内核镜像

|

||||

- **linux-image-extra-<VERSION-NUMBER>**: 额外的内核模块

|

||||

@ -36,7 +36,6 @@ GRUB管理器为每个旧内核都维护了一个GRUB入口,防止你想要进

|

||||

|

||||

上面的命令会删除内核镜像和它相关联的内核模块和头文件。

|

||||

|

||||

updated to remove the corresponding GRUB entry from GRUB menu.

|

||||

注意如果你还没有升级内核那么删除旧内核会自动触发安装新内核。这样在删除旧内核之后,GRUB配置会自动升级来移除GRUB菜单中相关GRUB入口。

|

||||

|

||||

如果你有很多没用的内核,你可以用shell表达式来一次性地删除多个内核。注意这个括号表达式只在bash或者兼容的shell中才有效。

|

||||

@ -52,7 +51,7 @@ updated to remove the corresponding GRUB entry from GRUB menu.

|

||||

|

||||

$ sudo update-grub2

|

||||

|

||||

现在就重启来验证GRUB菜单已经正确清理了。

|

||||

现在就重启来验证GRUB菜单是否已经正确清理了。

|

||||

|

||||

|

||||

|

||||

@ -62,7 +61,7 @@ via: http://ask.xmodulo.com/remove-kernel-images-ubuntu.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,152 @@

|

||||

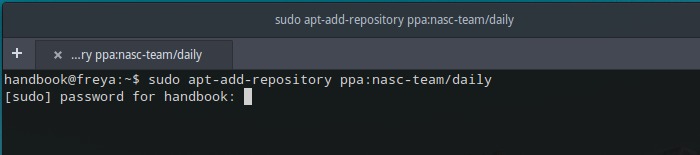

RHCSA 系列(五): RHEL7 中的进程管理:开机,关机

|

||||

================================================================================

|

||||

我们将概括和简要地复习从你按开机按钮来打开你的 RHEL 7 服务器到呈现出命令行界面的登录屏幕之间所发生的所有事情,以此来作为这篇文章的开始。

|

||||

|

||||

|

||||

|

||||

*Linux 开机过程*

|

||||

|

||||

**请注意:**

|

||||

|

||||

1. 相同的基本原则也可以应用到其他的 Linux 发行版本中,但可能需要较小的更改,并且

|

||||

2. 下面的描述并不是旨在给出开机过程的一个详尽的解释,而只是介绍一些基础的东西

|

||||

|

||||

### Linux 开机过程 ###

|

||||

|

||||

1. 初始化 POST(加电自检)并执行硬件检查;

|

||||

|

||||

2. 当 POST 完成后,系统的控制权将移交给启动管理器的第一阶段(first stage),它存储在一个硬盘的引导扇区(对于使用 BIOS 和 MBR 的旧式的系统而言)或存储在一个专门的 (U)EFI 分区上。

|

||||

|

||||

3. 启动管理器的第一阶段完成后,接着进入启动管理器的第二阶段(second stage),通常大多数使用的是 GRUB(GRand Unified Boot Loader 的简称),它驻留在 `/boot` 中,然后开始加载内核和驻留在 RAM 中的初始化文件系统(被称为 initramfs,它包含执行必要操作所需要的程序和二进制文件,以此来最终挂载真实的根文件系统)。

|

||||

|

||||

4. 接着展示了闪屏(splash)过后,呈现在我们眼前的是类似下图的画面,它允许我们选择一个操作系统和内核来启动:

|

||||

|

||||

|

||||

|

||||

*启动菜单屏幕*

|

||||

|

||||

5. 内核会对接入到系统的硬件进行设置,当根文件系统被挂载后,接着便启动 PID 为 1 的进程,这个进程将开始初始化其他的进程并最终呈现给我们一个登录提示符界面。

|

||||

|

||||

注意:假如我们想在启动后查看这些信息,我们可以使用 [dmesg 命令][1],并使用这个系列里的上一篇文章中介绍过的工具(注:即 grep)来过滤它的输出。

|

||||

|

||||

|

||||

|

||||

*登录屏幕和进程的 PID*

|

||||

|

||||

在上面的例子中,我们使用了大家熟知的 `ps` 命令来显示在系统启动过程中的一系列当前进程的信息,它们的父进程(或者换句话说,就是那个开启这些进程的进程)为 systemd(大多数现代的 Linux 发行版本已经切换到的系统和服务管理器):

|

||||

|

||||

# ps -o ppid,pid,uname,comm --ppid=1

|

||||

|

||||

记住 `-o`(为 -format 的简写)选项允许你以一个自定义的格式来显示 ps 的输出,以此来满足你的需求;这个自定义格式使用 `man ps` 里 STANDARD FORMAT SPECIFIERS 一节中的特定关键词。

|

||||

|

||||

另一个你想自定义 ps 的输出而不是使用其默认输出的情形是:当你需要找到引起 CPU 或内存消耗过多的那些进程,并按照下列方式来对它们进行排序时:

|

||||

|

||||

# ps aux --sort=+pcpu # 以 %CPU 来排序(增序)

|

||||

# ps aux --sort=-pcpu # 以 %CPU 来排序(降序)

|

||||

# ps aux --sort=+pmem # 以 %MEM 来排序(增序)

|

||||

# ps aux --sort=-pmem # 以 %MEM 来排序(降序)

|

||||

# ps aux --sort=+pcpu,-pmem # 结合 %CPU (增序) 和 %MEM (降序)来排列

|

||||

|

||||

|

||||

|

||||

*自定义 ps 命令的输出*

|

||||

|

||||

### systemd 的一个介绍 ###

|

||||

|

||||

在 Linux 世界中,很少有能比在主流的 Linux 发行版本中采用 systemd 引起更多的争论的决定。systemd 的倡导者根据以下事实来表明其主要的优势:

|

||||

|

||||

1. 在系统启动期间,systemd 允许并发地启动更多的进程(相比于先前的 SysVinit,SysVinit 似乎总是表现得更慢,因为它一个接一个地启动进程,检查一个进程是否依赖于另一个进程,然后等待守护进程启动才可以启动的更多的服务),并且

|

||||

2. 在一个运行着的系统中,它用作一个动态的资源管理器。这样在启动期间,当一个服务被需要时,才启动它(以此来避免消耗系统资源)而不是在没有一个合理的原因的情况下启动额外的服务。

|

||||

3. 向后兼容 sysvinit 的脚本。

|

||||

|

||||

另外请阅读: ['init' 和 'systemd' 背后的故事][2]

|

||||

|

||||

systemd 由 systemctl 工具控制,假如你了解 SysVinit,你将会对以下的内容感到熟悉:

|

||||

|

||||

- service 工具,在旧一点的系统中,它被用来管理 SysVinit 脚本,以及

|

||||

- chkconfig 工具,为系统服务升级和查询运行级别信息

|

||||

- shutdown 你一定使用过几次来重启或关闭一个运行的系统。

|

||||

|

||||

下面的表格展示了使用传统的工具和 systemctl 之间的相似之处:

|

||||

|

||||

|

||||

| 旧式工具 | Systemctl 等价命令 | 描述 |

|

||||

|-------------|----------------------|-------------|

|

||||

| service name start | systemctl start name | 启动 name (这里 name 是一个服务) |

|

||||

| service name stop | systemctl stop name | 停止 name |

|

||||

| service name condrestart | systemctl try-restart name | 重启 name (如果它已经运行了) |

|

||||

| service name restart | systemctl restart name | 重启 name |

|

||||

| service name reload | systemctl reload name | 重载 name 的配置 |

|

||||

| service name status | systemctl status name | 显示 name 的当前状态 |

|

||||

| service - status-all | systemctl | 显示当前所有服务的状态 |

|

||||

| chkconfig name on | systemctl enable name | 通过一个特定的单元文件,让 name 可以在系统启动时运行(这个文件是一个符号链接)。启用或禁用一个启动时的进程,实际上是增加或移除一个到 /etc/systemd/system 目录中的符号链接。 |

|

||||

| chkconfig name off | systemctl disable name | 通过一个特定的单元文件,让 name 可以在系统启动时禁止运行(这个文件是一个符号链接)。 |

|

||||

| chkconfig -list name | systemctl is-enabled name | 确定 name (一个特定的服务)当前是否启用。|

|

||||

| chkconfig - list | systemctl - type=service | 显示所有的服务及其是否启用或禁用。 |

|

||||

| shutdown -h now | systemctl poweroff | 关机 |

|

||||

| shutdown -r now | systemctl reboot | 重启系统 |

|

||||

|

||||

systemd 也引进了单元(unit)(它可能是一个服务,一个挂载点,一个设备或者一个网络套接字)和目标(target)(它们定义了 systemd 如何去管理和同时开启几个相关的进程,可以认为它们与在基于 SysVinit 的系统中的运行级别等价,尽管事实上它们并不等价)的概念。

|

||||

|

||||

### 总结归纳 ###

|

||||

|

||||

其他与进程管理相关,但并不仅限于下面所列的功能的任务有:

|

||||

|

||||

**1. 在考虑到系统资源的使用上,调整一个进程的执行优先级:**

|

||||

|

||||

这是通过 `renice` 工具来完成的,它可以改变一个或多个正在运行着的进程的调度优先级。简单来说,调度优先级是一个允许内核(当前只支持 >= 2.6 的版本)根据某个给定进程被分配的执行优先级(即友善度(niceness),从 -20 到 19)来为其分配系统资源的功能。

|

||||

|

||||

`renice` 的基本语法如下:

|

||||

|

||||

# renice [-n] priority [-gpu] identifier

|

||||

|

||||

在上面的通用命令中,第一个参数是将要使用的优先级数值,而另一个参数可以是进程 ID(这是默认的设定),进程组 ID,用户 ID 或者用户名。一个常规的用户(即除 root 以外的用户)只可以更改他或她所拥有的进程的调度优先级,并且只能增加友善度的层次(这意味着占用更少的系统资源)。

|

||||

|

||||

|

||||

|

||||

*进程调度优先级*

|

||||

|

||||

**2. 按照需要杀死一个进程(或终止其正常执行):**

|

||||

|

||||

更精确地说,杀死一个进程指的是通过 [kill 或 pkill][3] 命令给该进程发送一个信号,让它优雅地(SIGTERM=15)或立即(SIGKILL=9)结束它的执行。

|

||||

|

||||

这两个工具的不同之处在于前一个被用来终止一个特定的进程或一个进程组,而后一个则允许你通过进程的名称和其他属性,执行相同的动作。

|

||||

|

||||

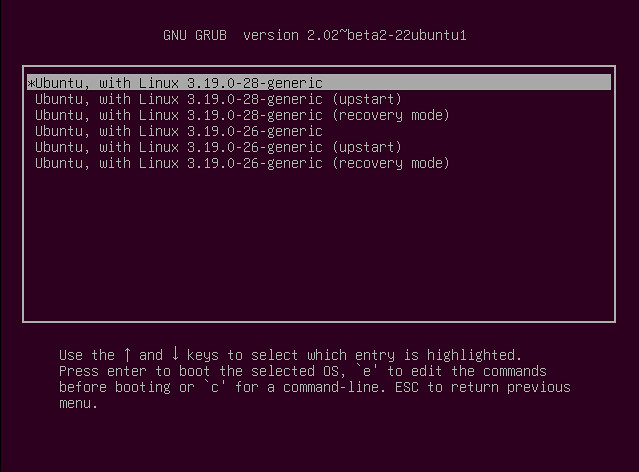

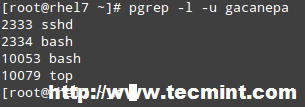

另外, pkill 与 pgrep 相捆绑,pgrep 提供将受符合的进程的 PID 给 pkill 来使用。例如,在运行下面的命令之前:

|

||||

|

||||

# pkill -u gacanepa

|

||||

|

||||

查看一眼由 gacanepa 所拥有的 PID 或许会带来点帮助:

|

||||

|

||||

# pgrep -l -u gacanepa

|

||||

|

||||

|

||||

|

||||

*找到用户拥有的 PID*

|

||||

|

||||

默认情况下,kill 和 pkiill 都发送 SIGTERM 信号给进程,如我们上面提到的那样,这个信号可以被忽略(即该进程可能会终止其自身的执行,也可能不终止),所以当你因一个合理的理由要真正地停止一个运行着的进程,则你将需要在命令行中带上特定的 SIGKILL 信号:

|

||||

|

||||

# kill -9 identifier # 杀死一个进程或一个进程组

|

||||

# kill -s SIGNAL identifier # 同上

|

||||

# pkill -s SIGNAL identifier # 通过名称或其他属性来杀死一个进程

|

||||

|

||||

### 结论 ###

|

||||

|

||||

在这篇文章中,我们解释了在 RHEL 7 系统中,有关开机启动过程的基本知识,并分析了一些可用的工具来帮助你通过使用一般的程序和 systemd 特有的命令来管理进程。

|

||||

|

||||

请注意,这个列表并不旨在涵盖有关这个话题的所有花哨的工具,请随意使用下面的评论栏来添加你自已钟爱的工具和命令。同时欢迎你的提问和其他的评论。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/rhcsa-exam-boot-process-and-process-management/

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/gacanepa/

|

||||

[1]:https://linux.cn/article-3587-1.html

|

||||

[2]:http://www.tecmint.com/systemd-replaces-init-in-linux/

|

||||

[3]:https://linux.cn/article-2116-1.html

|

||||

@ -1,63 +0,0 @@

|

||||

Is Linux Right For You?

|

||||

================================================================================

|

||||

> Not everyone should opt for Linux -- for many users, remaining with Windows or OSX is the better choice.

|

||||

|

||||

I enjoy using Linux on the desktop. Not because of software politics or because I despise other operating systems. I simply like Linux because it just works.

|

||||

|

||||

It's been my experience that not everyone is cut out for the Linux lifestyle. In this article, I'll help you run through the pros and cons of making the switch to Linux so you can determine if switching is right for you.

|

||||

|

||||

### When to make the switch ###

|

||||

|

||||

Switching to Linux makes sense when there is a decisive reason to do so. The same can be said about moving from Windows to OS X or vice versa. In order to have success with switching, you must be able to identify your reason for jumping ship in the first place.

|

||||

|

||||

For some people, the reason for switching is frustration with their current platform. Maybe the latest upgrade left them with a lousy experience and they're ready to chart new horizons. In other instances, perhaps it's simply a matter of curiosity. Whatever the motivation, you must have a good reason for switching operating systems. If you're pushing yourself in this direction without a good reason, then no one wins.

|

||||

|

||||

However, there are exceptions to every rule. And if you're really interested in trying Linux on the desktop, then maybe coming to terms with a workable compromise is the way to go.

|

||||

|

||||

### Starting off slow ###

|

||||

|

||||

After trying Linux for the first time, I've seen people blast their Windows installation to bits because they had a good experience with Ubuntu on a flash drive for 20 minutes. Folks, this isn't a test. Instead I'd suggest the following:

|

||||

|

||||

- Run the [Linux distro in a virtual machine][1] for a week. This means you are committing to running that distro for all browser work, email and other tasks you might otherwise do on that machine.

|

||||

- If running a VM for a week is too resource intensive, try doing the same with a USB drive running Linux that offers [some persistent storage][2]. This will allow you to leave your main OS alone and intact. At the same time, you'll still be able to "live inside" of your Linux distribution for a week.

|

||||

- If you find that everything is successful after a week of running Linux, the next step is to examine how many times you booted into Windows that week. If only occasionally, then the next step is to look into [dual-booting Windows][3] and Linux. For those of you that only found themselves using their Linux distro, it might be worth considering making the switch full time.

|

||||

- Before you hose your Windows partition completely, it might make more sense to purchase a second hard drive to install Linux onto instead. This allows you to dual-boot, but to do so with ample hard drive space. It also makes Windows available to you if something should come up.

|

||||

|

||||

### What do you gain adopting Linux? ###

|

||||

|

||||

So what does one gain by switching to Linux? Generally it comes down to personal freedom for most people. With Linux, if something isn't to your liking, you're free to change it. Using Linux also saves users oodles of money in avoiding hardware upgrades and unnecessary software expenses. Additionally, you're not burdened with tracking down lost license keys for software. And if you dislike the direction a particular distribution is headed, you can switch to another distribution with minimal hassle.

|

||||

|

||||

The sheer volume of desktop choice on the Linux desktop is staggering. This level of choice might even seem overwhelming to the newcomer. But if you find a distro base (Debian, Fedora, Arch, etc) that you like, the hard work is already done. All you need to do now is find a variation of the distro and the desktop environment you prefer.

|

||||

|

||||

Now one of the most common complaints I hear is that there isn't much in the way of software for Linux. However, this isn't accurate at all. While other operating systems may have more of it, today's Linux desktop has applications to do just about anything you can think of. Video editing (home and pro-level), photography, office management, remote access, music (listening and creation), plus much, much more.

|

||||

|

||||

### What you lose adopting Linux? ###

|

||||

|

||||

As much as I enjoy using Linux, my wife's home office relies on OS X. She's perfectly content using Linux for some tasks, however she relies on OS X for specific software not available for Linux. This is a common problem that many people face when first looking at making the switch. You must decide whether or not you're going to be losing out on critical software if you make the switch.

|

||||

|

||||

Sometimes the issue is because the software has content locked down with it. In other cases, it's a workflow and functionality that was found with the legacy applications and not with the software available for Linux. I myself have never experienced this type of challenge, but I know those who have. Many of the software titles available for Linux are also available for other operating systems. So if there is a concern about such things, I encourage you to try out comparable apps on your native OS first.

|

||||

|

||||

Another thing you might lose by switching to Linux is the luxury of local support when you need it. People scoff at this, but I know of countless instances where a newcomer to Linux was dismayed to find their only recourse for solving Linux challenges was from strangers on the Web. This is especially problematic if their only PC is the one having issues. Windows and OS X users are spoiled in that there are endless support techs in cities all over the world that support their platform(s).

|

||||

|

||||

### How to proceed from here ###

|

||||

|

||||

Perhaps the single biggest piece of advice to remember is always have a fallback plan. Remember, once you wipe that copy of Windows 10 from your hard drive, you may find yourself spending money to get it reinstalled. This is especially true for those of you who upgrade from other Windows releases. Accepting this, persistent flash drives with Linux or dual-booting Windows and Linux is always a preferable way forward for newcomers. Odds are that you may be just fine and take to Linux like a fish to water. But having that fallback plan in place just means you'll sleep better at night.

|

||||

|

||||

If instead you've been relying on a dual-boot installation for weeks and feel ready to take the plunge, then by all means do it. Wipe your drive and start off with a clean installation of your favorite Linux distribution. I've been a full time Linux enthusiast for years and I can tell you for certain, it's a great feeling. How long? Let's just say my first Linux experience was with early Red Hat. I finally installed a dedicated installation on my laptop by 2003.

|

||||

|

||||

Existing Linux enthusiasts, where did you first get started? Was your switch an exciting one or was it filled with angst? Hit the Comments and share your experiences.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.datamation.com/open-source/is-linux-right-for-you.html

|

||||

|

||||

作者:[Matt Hartley][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.datamation.com/author/Matt-Hartley-3080.html

|

||||

[1]:http://www.psychocats.net/ubuntu/virtualbox

|

||||

[2]:http://www.howtogeek.com/howto/14912/create-a-persistent-bootable-ubuntu-usb-flash-drive/

|

||||

[3]:http://www.linuxandubuntu.com/home/dual-boot-ubuntu-15-04-14-10-and-windows-10-8-1-8-step-by-step-tutorial-with-screenshots

|

||||

@ -1,41 +0,0 @@

|

||||

Ubuntu Linux-Based Open Source OS Runs 42 Percent of Dell PCs in China

|

||||

================================================================================

|

||||

> Dell says that 42 percent of the PCs it sells in the Chinese market run Kylin, an open source operating system based on Ubuntu Linux that Canonical helped to create.

|

||||

|

||||

Open source fans, rejoice: The Year of the Linux Desktop has arrived. Or something close to it is on the horizon in China, at least, where [Dell][1] has reported that more than 40 percent of the PCs it sells run a variant of [Ubuntu Linux][2] that [Canonical][3] helped develop.

|

||||

|

||||

Specifically, Dell said that 42 percent of computers in China run NeoKylin, an operating system that originated as an effort in China to build a home-grown alternative to [Microsoft][4] (MSFT) Windows. Also known simply Kylin, the OS has been based on Ubuntu since 2013, when Canonical began collaborating with the Chinese government to create an Ubuntu variant tailored for the Chinese market.

|

||||

|

||||

Earlier versions of Kylin, which has been around since 2001, were based on other operating systems, including FreeBSD, an open source Unix-like operating system that is distinct from Linux.

|

||||

|

||||

Ubuntu Kylin looks and feels a lot like modern versions of Ubuntu proper. It sports the [Unity][5] interface and runs the standard suite of open source apps, as well as specialized ones such as Youker Assistant, a graphical front end that helps users manage basic computing tasks. Kylin's default theme makes it look just a little more like Windows than stock Ubuntu, however.

|

||||

|

||||

Given the relative stagnation of the market for desktop Linux PCs in most of the world, Dell's announcement is striking. And in light of China's [hostility][6] toward modern editions of Windows, the news does not bode well for Microsoft's prospects in the Chinese market.

|

||||

|

||||

Dell's comment on Linux PC sales in China—which appeared in the form of a statement by an executive to the Wall Street Journal—comes on the heels of the company's [announcement][7] of $125 million of new investment in China.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://thevarguy.com/open-source-application-software-companies/091515/ubuntu-linux-based-open-source-os-runs-42-percent-dell-pc

|

||||

|

||||

作者:[Christopher Tozzi][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://thevarguy.com/author/christopher-tozzi

|

||||

[1]:http://dell.com/

|

||||

[2]:http://ubuntu.com/

|

||||

[3]:http://canonical.com/

|

||||

[4]:http://microsoft.com/

|

||||

[5]:http://unity.ubuntu.com/

|

||||

[6]:http://www.wsj.com/articles/windows-8-faces-new-criticism-in-china-1401882772

|

||||

[7]:http://thevarguy.com/business-technology-solution-sales/091415/dell-125-million-directed-china-jobs-new-business-and-innovation

|

||||

@ -0,0 +1,30 @@

|

||||

Italy's Ministry of Defense to Drop Microsoft Office in Favor of LibreOffice

|

||||

================================================================================

|

||||

>**LibreItalia's Italo Vignoli [reports][1] that the Italian Ministry of Defense is about to migrate to the LibreOffice open-source software for productivity and adopt the Open Document Format (ODF), while moving away from proprietary software products.**

|

||||

|

||||

The movement comes in the form of a [collaboration][1] between Italy's Ministry of Defense and the LibreItalia Association. Sonia Montegiove, President of the LibreItalia Association, and Ruggiero Di Biase, Rear Admiral and General Executive Manager of Automated Information Systems of the Ministry of Defense in Italy signed an agreement for a collaboration to adopt the LibreOffice office suite in all of the Ministry's offices.

|

||||

|

||||

While the LibreItalia non-profit organization promises to help the Italian Ministry of Defense with trainers for their offices across the country, the Ministry will start the implementation of the LibreOffice software on October 2015 with online training courses for their staff. The entire transition process is expected to be completed by the end of year 2016\. An Italian law lets officials find open source software alternatives to well-known commercial software.

|

||||

|

||||

"Under the agreement, the Italian Ministry of Defense will develop educational content for a series of online training courses on LibreOffice, which will be released to the community under Creative Commons, while the partners, LibreItalia, will manage voluntarily the communication and training of trainers in the Ministry," says Italo Vignoli, Honorary President of LibreItalia.

|

||||

|

||||

### The Ministry of Defense will adopt the Open Document Format (ODF)

|

||||

|

||||

The initiative will allow the Italian Ministry of Defense to be independent from proprietary software applications, which are aimed at individual productivity, and adopt open source document format standards like Open Document Format (ODF), which is used by default in the LibreOffice office suite. The project follows similar movements already made by governments of other European countries, including United Kingdom, France, Spain, Germany, and Holland.

|

||||

|

||||

It would appear that numerous other public institutions all over Italy are using open source alternatives, including the Italian Region Emilia Romagna, Galliera Hospital in Genoa, Macerata, Cremona, Trento and Bolzano, Perugia, the municipalities of Bologna, ASL 5 of Veneto, Piacenza and Reggio Emilia, and many others. AGID (Agency for Digital Italy) welcomes this project and hopes that other public institutions will do the same.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/italy-s-ministry-of-defense-to-drop-microsoft-office-in-favor-of-libreoffice-491850.shtml

|

||||

|

||||

作者:[Marius Nestor][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/marius-nestor

|

||||

[1]:http://www.libreitalia.it/accordo-di-collaborazione-tra-associazione-libreitalia-onlus-e-difesa-per-ladozione-del-prodotto-libreoffice-quale-pacchetto-di-produttivita-open-source-per-loffice-automation/

|

||||

[2]:http://www.libreitalia.it/chi-siamo/

|

||||

@ -1,84 +0,0 @@

|

||||

ictlyh Translating

|

||||

Best command line tools for linux performance monitoring

|

||||

================================================================================

|

||||

Sometimes a system can be slow and many reasons can be the root cause. To identify the process that is consuming memory, disk I/O or processor capacity you need to use tools to see what is happening in an operation system.

|

||||

|

||||

There are many tools to monitor a GNU/Linux server. In this article, I am providing 7 monitoring tools and i hope it will help you.

|

||||

|

||||

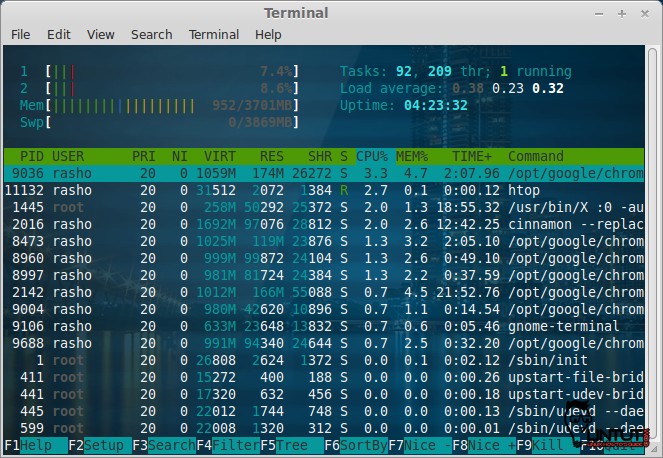

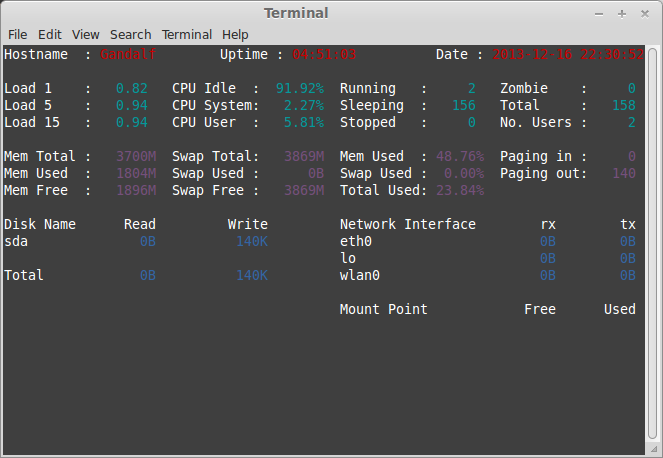

###Htop

|

||||

Htop is an alternative of top command but it provides interactive system-monitor process-viewer and more user friendly output than top.

|

||||

|

||||

htop also provides a better way to navigate to any process using keyboard Up/Down keys as well as we can also operate it using mouse.

|

||||

|

||||

For Check our previous post:[How to install and use htop on RHEL/Centos and Fedora linux][1]

|

||||

|

||||

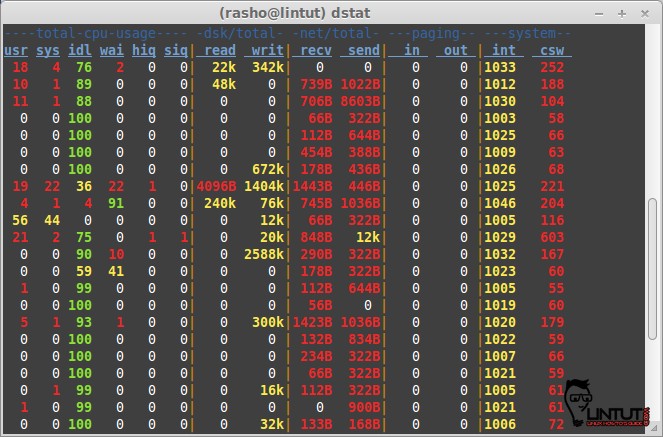

###dstat

|

||||

Dstat is a versatile replacement for vmstat, iostat, netstat and ifstat. Dstat overcomes some of their limitations and adds some extra features, more counters and flexibility. Dstat is handy for monitoring systems during performance tuning tests, benchmarks or troubleshooting.

|

||||

|

||||

Dstat allows you to view all of your system resources in real-time, you can eg. compare disk utilization in combination with interrupts from your IDE controller, or compare the network bandwidth numbers directly with the disk throughput (in the same interval).

|

||||

Dstat gives you detailed selective information in columns and clearly indicates in what magnitude and unit the output is displayed. Less confusion, less mistakes. And most importantly, it makes it very easy to write plugins to collect your own counters and extend in ways you never expected.

|

||||

|

||||

Dstat’s output by default is designed for being interpreted by humans in real-time, however you can export details to CSV output to a file to be imported later into Gnumeric or Excel to generate graphs.

|

||||

Check our previous post:[How to install and use dstat on RHEL/CentOS,Fedora and Debian/Ubuntu based distribution][2]

|

||||

|

||||

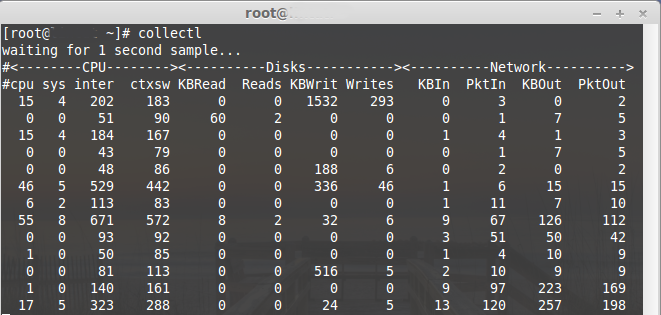

###Collectl

|

||||

Collectl is a light-weight performance monitoring tool capable of reporting interactively as well as logging to disk. It reports statistics on cpu, disk, infiniband, lustre, memory, network, nfs, process, quadrics, slabs and more in easy to read format.

|

||||

In this article i will show you how to install and sample usage Collectl on Debian/Ubuntu and RHEL/Centos and Fedora linux.

|

||||

|

||||

Check our previous post:[Collectl-Monitoring system resources][3]

|

||||

|

||||

|

||||

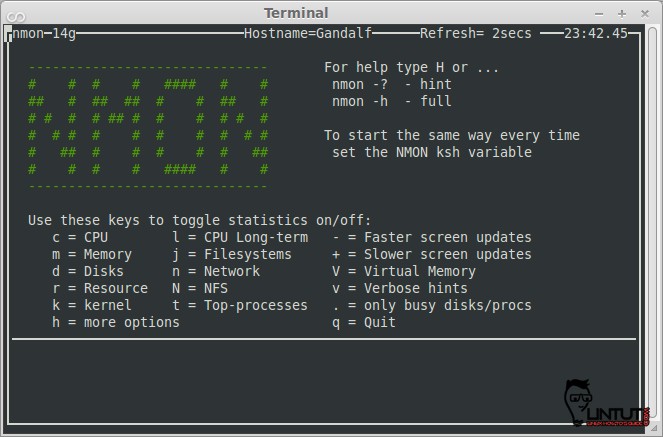

###Nmon

|

||||

nmon is a beutiful tool to monitor linux system performance. It works on Linux, IBM AIX Unix, Power,x86, amd64 and ARM based system such as Raspberry Pi. The nmon command displays and recordslocal system information. The command can run either in interactive or recording mode.

|

||||

|

||||

Check our previous post: [Nmon – linux monitoring tools][4]

|

||||

|

||||

###Saidar

|

||||

Saidar is a curses-based application to display system statistics. It use the libstatgrab library, which provides cross platform access to statistics about the system on which it’s run. Reported statistics includeCPU, load, processes, memory, swap, network input and output and disks activities along with their free space.

|

||||

|

||||

Check our previous post:[Saidar – system monitoring tool][5]

|

||||

|

||||

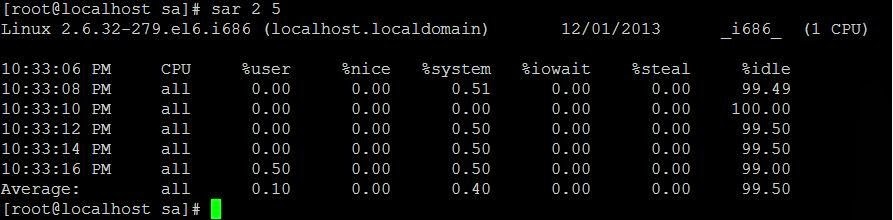

###Sar

|

||||

The sar utility, which is part of the systat package, can be used to review history performance data on your server. System resource utilization can be seen for given time frames to help troubleshoot performance issues, or to optimize performance.

|

||||

|

||||

Check our previous post:[Using Sar To Monitor System Performance][6]

|

||||

|

||||

|

||||

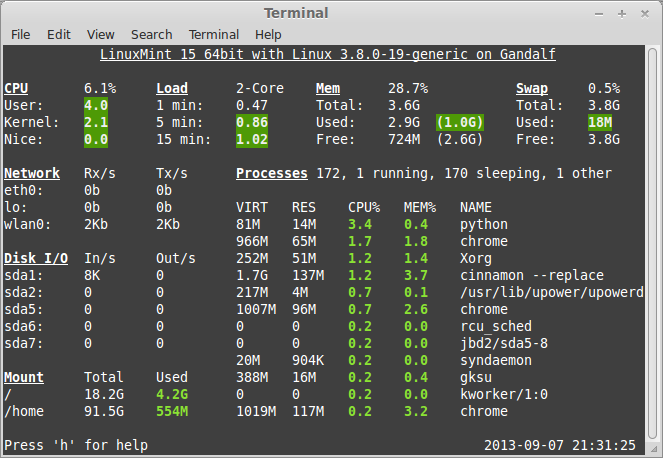

###Glances

|

||||

Glances is a cross-platform curses-based command line monitoring tool writen in Python which use the psutil library to grab informations from the system. Glance monitoring CPU, Load Average, Memory, Network Interfaces, Disk I/O, Processesand File System spaces utilization.

|

||||

|

||||

Glances can adapt dynamically the displayed information depending on the terminal siwrize. It can also work in a client/server mode for remote monitoring.

|

||||

|

||||

Check our previous post: [Glances – Real Time System Monitoring Tool for Linux][7]

|

||||

|

||||

|

||||

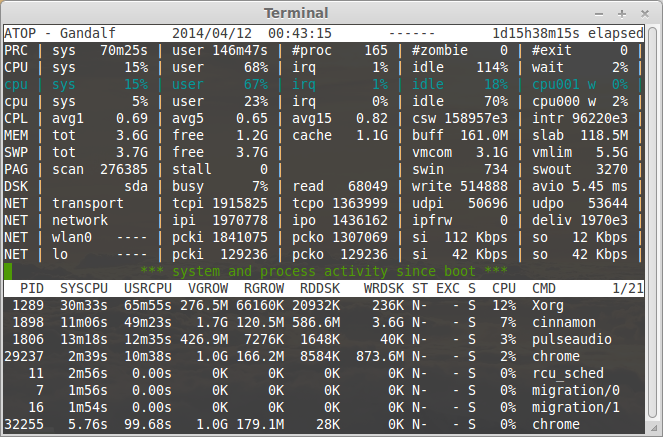

###Atop

|

||||

[Atop](http://www.atoptool.nl/) is an interactive monitor to view the load on a Linux system. It shows the occupation of the most critical hardware resources on system level, i.e. cpu, memory, disk and network. It also shows which processes are responsible for the indicated load with respect to cpu- and memory load on process level. Disk load is shown if per process “storage accounting” is active in the kernel or if the kernel patch ‘cnt’ has been installed. Network load is only shown per process if the kernel patch ‘cnt’ has been installed.

|

||||

|

||||

For more about Atop check next post:[Atop - monitor system resources in linux][8]

|

||||

So, if you come across any other similar tool then let us know in the comment box below.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://lintut.com/best-command-line-tools-for-linux-performance-monitring/

|

||||

|

||||

作者:[rasho][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:

|

||||

[1]:http://lintut.com/install-htop-in-rhel-centos-fedora-linux/

|

||||

[2]:http://lintut.com/dstat-linux-monitoring-tools/

|

||||

[3]:http://lintut.com/collectl-monitoring-system-resources/

|

||||

[4]:http://lintut.com/nmon-linux-monitoring-tools/

|

||||

[5]:http://lintut.com/saidar-system-monitoring-tool/

|

||||

[6]:http://lintut.com/using-sar-to-monitor-system-performance/

|

||||

[7]:http://lintut.com/glances-an-eye-on-your-system/

|

||||

[8]:http://lintut.com/atop-linux-system-resource-monitor/

|

||||

@ -1,3 +1,4 @@

|

||||

ictlyh Translating

|

||||

5 Useful Commands to Manage File Types and System Time in Linux – Part 3

|

||||

================================================================================

|

||||

Adapting to using the command line or terminal can be very hard for beginners who want to learn Linux. Because the terminal gives more control over a Linux system than GUIs programs, one has to get a used to running commands on the terminal. Therefore to memorize different commands in Linux, you should use the terminal on a daily basis to understand how commands are used with different options and arguments.

|

||||

|

||||

@ -1,105 +0,0 @@

|

||||

|

||||

translating by ezio

|

||||

|

||||

How to Setup Node JS v4.0.0 on Ubuntu 14.04 / 15.04

|

||||

================================================================================

|

||||

Hi everyone, Node.JS Version 4.0.0 has been out, the popular server-side JavaScript platform has combines the Node.js and io.js code bases. This release represents the combined efforts encapsulated in both the Node.js project and the io.js project that are now combined in a single codebase. The most important change is this Node.js is ships with version 4.5 of Google's V8 JavaScript engine, which is the same version that ships with the current Chrome browser. So, being able to more closely track V8’s releases means Node.js runs JavaScript faster, more securely, and with the ability to use many desirable ES6 language features.

|

||||

|

||||

|

||||

|

||||

Node.js 4.0.0 aims to provide an easy update path for current users of io.js and node as there are no major API changes. Let’s see how you can easily get it installed and setup on Ubuntu server by following this simple article.

|

||||

|

||||

### Basic System Setup ###

|

||||

|

||||

Node works perfectly on Linux, Macintosh, and Solaris operating systems and among the Linux operating systems it has the best results using Ubuntu OS. That's why we are to setup it Ubuntu 15.04 while the same steps can be followed using Ubuntu 14.04.

|

||||

|

||||

#### 1) System Resources ####

|

||||

|

||||

The basic system resources for Node depend upon the size of your infrastructure requirements. So, here in this tutorial we will setup Node with 1 GB RAM, 1 GHz Processor and 10 GB of available disk space with minimal installation packages installed on the server that is no web or database server packages are installed.

|

||||

|

||||

#### 2) System Update ####

|

||||

|

||||

It always been recommended to keep your system upto date with latest patches and updates, so before we move to the installation on Node, let's login to your server with super user privileges and run update command.

|

||||

|

||||

# apt-get update

|

||||

|

||||

#### 3) Installing Dependencies ####

|

||||

|

||||

Node JS only requires some basic system and software utilities to be present on your server, for its successful installation like 'make' 'gcc' and 'wget'. Let's run the below command to get them installed if they are not already present.

|

||||

|

||||

# apt-get install python gcc make g++ wget

|

||||

|

||||

### Download Latest Node JS v4.0.0 ###

|

||||

|

||||

Let's download the latest Node JS version 4.0.0 by following this link of [Node JS Download Page][1].

|

||||

|

||||

|

||||

|

||||

We will copy the link location of its latest package and download it using 'wget' command as shown.

|

||||

|

||||

# wget https://nodejs.org/download/rc/v4.0.0-rc.1/node-v4.0.0-rc.1.tar.gz

|

||||

|

||||

Once download completes, unpack using 'tar' command as shown.

|

||||

|

||||

# tar -zxvf node-v4.0.0-rc.1.tar.gz

|

||||

|

||||

|

||||

|

||||

### Installing Node JS v4.0.0 ###

|

||||

|

||||

Now we have to start the installation of Node JS from its downloaded source code. So, change your directory and configure the source code by running its configuration script before compiling it on your ubuntu server.

|

||||

|

||||

root@ubuntu-15:~/node-v4.0.0-rc.1# ./configure

|

||||

|

||||

|

||||

|

||||

Now run the 'make install' command to compile the Node JS installation package as shown.

|

||||

|

||||

root@ubuntu-15:~/node-v4.0.0-rc.1# make install

|

||||

|

||||

The make command will take a couple of minutes while compiling its binaries so after executinf above command, wait for a while and keep calm.

|

||||

|

||||

### Testing Node JS Installation ###

|

||||

|

||||

Once the compilation process is complete, we will test it if every thing went fine. Let's run the following command to confirm the installed version of Node JS.

|

||||

|

||||

root@ubuntu-15:~# node -v

|

||||

v4.0.0-pre

|

||||

|

||||

By executing 'node' without any arguments from the command-line you will be dropped into the REPL (Read-Eval-Print-Loop) that has simplistic emacs line-editing where you can interactively run JavaScript and see the results.

|

||||

|

||||

|

||||

|

||||

### Writing Test Program ###

|

||||

|

||||

We can also try out a very simple console program to test the successful installation and proper working of Node JS. To do so we will create a file named "test.js" and write the following code into it and save the changes made in the file as shown.

|

||||

|

||||

root@ubuntu-15:~# vim test.js

|

||||

var util = require("util");

|

||||

console.log("Hello! This is a Node Test Program");

|

||||

:wq!

|

||||

|

||||

Now in order to run the above program, from the command prompt run the below command.

|

||||

|

||||

root@ubuntu-15:~# node test.js

|

||||

|

||||

|

||||

|

||||

So, upon successful installation we will get the output as shown in the screen, where as in the above program it loads the "util" class into a variable "util" and then uses the "util" object to perform the console tasks. While the console.log is a command similar to the cout in C++.

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

That’s it. Hope this gives you a good idea of Node.js going with Node.js on Ubuntu. If you are new to developing applications with Node.js. After all we can say that we can expect significant performance gains with Node JS Version 4.0.0.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/ubuntu-how-to/setup-node-js-4-0-ubuntu-14-04-15-04/

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/kashifs/

|

||||

[1]:https://nodejs.org/download/rc/v4.0.0-rc.1/

|

||||

@ -1,78 +0,0 @@

|

||||

translating by ezio

|

||||

|

||||

Linux FAQs with Answers--How to check weather forecasts from the command line on Linux

|

||||

================================================================================

|

||||

> **Question**: I often check local weather forecasts on the Linux desktop. However, is there an easy way to access weather forecast information in the terminal environment, where I don't have access to desktop widgets or web browser?

|

||||

|

||||

For Linux desktop users, there are many ways to access weather forecasts, e.g., using standalone weather apps, desktop widgets, or panel applets. If your work environment is terminal-based, there are also several ways to access weather forecasts from the command line.

|

||||

|

||||

Among them is [wego][1], **a cute little weather app for the terminal**. Using an ncurses-based fancy interface, this command-line app allows you to see current weather conditions and forecasts at a glance. It retrieves the weather forecasts for the next 5 days via a weather forecast API.

|

||||

|

||||

### Install Wego on Linux ###

|

||||

|

||||

Installation of wego is pretty simple. wego is written in Go language, thus the first step is to [install Go language][2]. After installing Go, proceed to install wego as follows.

|

||||

|

||||

$ go get github.com/schachmat/wego

|

||||

|

||||

The wego tool will be installed under $GOPATH/bin. So add $GOPATH/bin to your $PATH variable.

|

||||

|

||||

$ echo 'export PATH="$PATH:$GOPATH/bin"' >> ~/.bashrc

|

||||

$ source ~/.bashrc

|

||||

|

||||

Now go ahead and invoke wego from the command line.

|

||||

|

||||

$ wego

|

||||

|

||||

The first time you run wego, it will generate a config file (~/.wegorc), where you need to specify a weather API key.

|

||||

|

||||

You can obtain a free API key from [worldweatheronline.com][3]. Free sign-up is quick and easy. You only need a valid email address.

|

||||

|

||||

|

||||

|

||||

Your .wegorc will look like the following.

|

||||

|

||||

|

||||

|

||||

Other than API key, you can specify in ~/.wegorc your preferred location, use of metric/imperial units, and language.

|

||||

|

||||

Note that the weather API is rate-limited; 5 queries per second, and 250 queries per day.

|

||||

|

||||

When you invoke wego command again, you will see the latest weather forecast (of your preferred location), shown as follows.

|

||||

|

||||

|

||||

|

||||

The displayed weather information includes: (1) temperature, (2) wind direction and speed, (3) viewing distance, and (4) precipitation amount and probability.

|

||||

|

||||

By default, it will show 3-day weather forecast. To change this behavior, you can supply the number of days (upto five) as an argument. For example, to see 5-day forecast:

|

||||

|

||||

$ wego 5

|

||||

|

||||

If you want to check the weather of any other location, you can specify the city name.

|

||||

|

||||

$ wego Seattle

|

||||

|

||||

### Troubleshooting ###

|

||||

|

||||

1. You encounter the following error while running wego.

|

||||

|

||||

user: Current not implemented on linux/amd64

|

||||

|

||||

This error can happen when you run wego on a platform which is not supported by the native Go compiler gc (e.g., Fedora). In that case, you can compile the program using gccgo, a compiler-frontend for Go language. This can be done as follows.

|

||||

|

||||

$ sudo yum install gcc-go

|

||||

$ go get -compiler=gccgo github.com/schachmat/wego

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/weather-forecasts-command-line-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://ask.xmodulo.com/author/nanni

|

||||

[1]:https://github.com/schachmat/wego

|

||||

[2]:http://ask.xmodulo.com/install-go-language-linux.html

|

||||

[3]:https://developer.worldweatheronline.com/auth/register

|

||||

@ -1,48 +0,0 @@

|

||||

Enable Automatic System Updates In Ubuntu

|

||||

================================================================================

|

||||

Before seeing **how to enable automatic system updates in Ubuntu**, first let’s see why should we do it in the first place.

|

||||

|

||||

By default Ubuntu checks for updates daily. When there are security updates, it shows immediately but for other updates (i.e. regular software updates) it pop ups once a week. So, if you have been using Ubuntu for a while, this may be a familiar sight for you:

|

||||

|

||||

|

||||

|

||||

Now if you are a normal desktop user, you don’t really care about what kind of updates are these. And this is not entirely a bad thing. You trust Ubuntu to provide you good updates, right? So, you just select ‘Install Now’ most of the time, don’t you?

|

||||

|

||||

And all you do is to click on Install Now, why not enable the automatic system updates? Enabling automatic system updates means all the latest updates will be automatically downloaded and installed without requiring any actions from you. Isn’t it convenient?

|

||||

|

||||

### Enable automatic updates in Ubuntu ###

|

||||

|

||||

I am using Ubuntu 15.04 in this tutorial but the steps are the same for Ubuntu 14.04 as well.

|

||||

|

||||

Go to Unity Dash and look for Software & Updates:

|

||||

|

||||

|

||||

|

||||

This will open the Software sources settings for you. Click on Updates tab here:

|

||||

|

||||

|

||||

|

||||

In here, you’ll see the default settings which is daily check for updates and immediate notification for security updates.

|

||||

|

||||

|

||||

|

||||

All you need to do is to change the action which reads “When there are” to “Download and install automatically”. This will download all the available updates and install them automatically.

|

||||

|

||||

|

||||

|

||||

That’s it. Close it and you have automatic updates enabled in Ubuntu. In fact this tutorial is pretty similar to [changing update notification frequency in Ubuntu][1].

|

||||

|

||||

Do you use automatic updates installation or you prefer to install them manually?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/automatic-system-updates-ubuntu/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://itsfoss.com/author/abhishek/

|

||||

[1]:http://itsfoss.com/ubuntu-notify-updates-frequently/

|

||||

@ -1,82 +0,0 @@

|

||||

translation by strugglingyouth

|

||||

Linux FAQs with Answers--How to find out which CPU core a process is running on

|

||||

================================================================================

|

||||

> Question: I have a Linux process running on my multi-core processor system. How can I find out which CPU core the process is running on?

|

||||

|

||||

When you run performance-critical HPC applications or network-heavy workload on [multi-core NUMA processors][1], CPU/memory affinity is one important factor to consider to maximize their performance. Scheduling closely related processes on the same NUMA node can reduce slow remote memory access. On processors like Intel's Sandy Bridge processor which has an integrated PCIe controller, you want to schedule network I/O workload on the same NUMA node as the NIC card to exploit PCI-to-CPU affinity.

|

||||

|

||||

As part of performance tuning or troubleshooting, you may want to know on which CPU core (or NUMA node) a particular process is currently scheduled.

|

||||

|

||||

Here are several ways to **find out which CPU core is a given Linux process or a thread is scheduled on**.

|

||||

|

||||

### Method One ###

|

||||

|

||||

If a process is explicitly pinned to a particular CPU core using commands like [taskset][2], you can find out the pinned CPU using the following taskset command:

|

||||

|

||||

$ taskset -c -p <pid>

|

||||

|

||||

For example, if the process you are interested in has PID 5357:

|

||||

|

||||

$ taskset -c -p 5357

|

||||

|

||||

----------

|

||||

|

||||

pid 5357's current affinity list: 5

|

||||

|

||||

The output says the process is pinned to CPU core 5.

|

||||

|

||||

However, if you haven't explicitly pinned the process to any CPU core, you will get something like the following as the affinity list.

|

||||

|

||||

pid 5357's current affinity list: 0-11

|

||||

|

||||

The output indicates that the process can potentially be scheduled on any CPU core from 0 to 11. So in this case, taskset is not useful in identifying which CPU core the process is currently assigned to, and you should use other methods as described below.

|

||||

|

||||

### Method Two ###

|

||||

|

||||

The ps command can tell you the CPU ID each process/thread is currently assigned to (under "PSR" column).

|

||||

|

||||

$ ps -o pid,psr,comm -p <pid>

|

||||

|

||||

----------

|

||||

|

||||

PID PSR COMMAND

|

||||

5357 10 prog

|

||||

|

||||

The output says the process with PID 5357 (named "prog") is currently running on CPU core 10. If the process is not pinned, the PSR column can keep changing over time depending on where the kernel scheduler assigns the process.

|

||||

|

||||

### Method Three ###

|

||||

|

||||

The top command can also show the CPU assigned to a given process. First, launch top command with "p" option. Then press 'f' key, and add "Last used CPU" column to the display. The currently used CPU core will appear under "P" (or "PSR") column.

|

||||

|

||||

$ top -p 5357

|

||||

|

||||

|

||||

|

||||

Compared to ps command, the advantage of using top command is that you can continuously monitor how the assigned CPU changes over time.

|

||||

|

||||

### Method Four ###

|

||||

|

||||

Yet another method to check the currently used CPU of a process/thread is to use [htop command][3].

|

||||

|

||||

Launch htop from the command line. Press <F2> key, go to "Columns", and add PROCESSOR under "Available Columns".

|

||||

|

||||

The currently used CPU ID of each process will appear under "CPU" column.

|

||||

|

||||

|

||||

|

||||

Note that all previous commands taskset, ps and top assign CPU core IDs 0, 1, 2, ..., N-1. However, htop assigns CPU core IDs starting from 1 (upto N).

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/cpu-core-process-is-running.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://ask.xmodulo.com/author/nanni

|

||||

[1]:http://xmodulo.com/identify-cpu-processor-architecture-linux.html

|

||||

[2]:http://xmodulo.com/run-program-process-specific-cpu-cores-linux.html

|

||||

[3]:http://ask.xmodulo.com/install-htop-centos-rhel.html

|

||||

@ -0,0 +1,202 @@

|

||||

A Repository with 44 Years of Unix Evolution

|

||||

================================================================================

|

||||

### Abstract ###

|

||||

|

||||

The evolution of the Unix operating system is made available as a version-control repository, covering the period from its inception in 1972 as a five thousand line kernel, to 2015 as a widely-used 26 million line system. The repository contains 659 thousand commits and 2306 merges. The repository employs the commonly used Git system for its storage, and is hosted on the popular GitHub archive. It has been created by synthesizing with custom software 24 snapshots of systems developed at Bell Labs, Berkeley University, and the 386BSD team, two legacy repositories, and the modern repository of the open source FreeBSD system. In total, 850 individual contributors are identified, the early ones through primary research. The data set can be used for empirical research in software engineering, information systems, and software archaeology.

|

||||

|

||||

### 1 Introduction ###

|

||||

|

||||

The Unix operating system stands out as a major engineering breakthrough due to its exemplary design, its numerous technical contributions, its development model, and its widespread use. The design of the Unix programming environment has been characterized as one offering unusual simplicity, power, and elegance [[1][1]]. On the technical side, features that can be directly attributed to Unix or were popularized by it include [[2][2]]: the portable implementation of the kernel in a high level language; a hierarchical file system; compatible file, device, networking, and inter-process I/O; the pipes and filters architecture; virtual file systems; and the shell as a user-selectable regular process. A large community contributed software to Unix from its early days [[3][3]], [[4][4],pp. 65-72]. This community grew immensely over time and worked using what are now termed open source software development methods [[5][5],pp. 440-442]. Unix and its intellectual descendants have also helped the spread of the C and C++ programming languages, parser and lexical analyzer generators (*yacc, lex*), document preparation tools (*troff, eqn, tbl*), scripting languages (*awk, sed, Perl*), TCP/IP networking, and configuration management systems (*SCCS, RCS, Subversion, Git*), while also forming a large part of the modern internet infrastructure and the web.

|

||||

|

||||

Luckily, important Unix material of historical importance has survived and is nowadays openly available. Although Unix was initially distributed with relatively restrictive licenses, the most significant parts of its early development have been released by one of its right-holders (Caldera International) under a liberal license. Combining these parts with software that was developed or released as open source software by the University of California, Berkeley and the FreeBSD Project provides coverage of the system's development over a period ranging from June 20th 1972 until today.

|

||||

|

||||

Curating and processing available snapshots as well as old and modern configuration management repositories allows the reconstruction of a new synthetic Git repository that combines under a single roof most of the available data. This repository documents in a digital form the detailed evolution of an important digital artefact over a period of 44 years. The following sections describe the repository's structure and contents (Section [II][6]), the way it was created (Section [III][7]), and how it can be used (Section [IV][8]).

|

||||

|

||||

### 2 Data Overview ###

|

||||

|

||||

The 1GB Unix history Git repository is made available for cloning on [GitHub][9].[1][10] Currently[2][11] the repository contains 659 thousand commits and 2306 merges from about 850 contributors. The contributors include 23 from the Bell Labs staff, 158 from Berkeley's Computer Systems Research Group (CSRG), and 660 from the FreeBSD Project.

|

||||

|

||||

The repository starts its life at a tag identified as *Epoch*, which contains only licensing information and its modern README file. Various tag and branch names identify points of significance.

|

||||

|

||||

- *Research-VX* tags correspond to six research editions that came out of Bell Labs. These start with *Research-V1* (4768 lines of PDP-11 assembly) and end with *Research-V7* (1820 mostly C files, 324kLOC).

|

||||

- *Bell-32V* is the port of the 7th Edition Unix to the DEC/VAX architecture.

|

||||

- *BSD-X* tags correspond to 15 snapshots released from Berkeley.

|

||||

- *386BSD-X* tags correspond to two open source versions of the system, with the Intel 386 architecture kernel code mainly written by Lynne and William Jolitz.

|

||||

- *FreeBSD-release/X* tags and branches mark 116 releases coming from the FreeBSD project.

|

||||

|

||||

In addition, branches with a *-Snapshot-Development* suffix denote commits that have been synthesized from a time-ordered sequence of a snapshot's files, while tags with a *-VCS-Development* suffix mark the point along an imported version control history branch where a particular release occurred.

|

||||

|

||||

The repository's history includes commits from the earliest days of the system's development, such as the following.

|

||||

|

||||

commit c9f643f59434f14f774d61ee3856972b8c3905b1

|

||||

Author: Dennis Ritchie <research!dmr>

|

||||

Date: Mon Dec 2 18:18:02 1974 -0500

|

||||

Research V5 development

|

||||

Work on file usr/sys/dmr/kl.c

|

||||

|

||||

Merges between releases that happened along the system's evolution, such as the development of BSD 3 from BSD 2 and Unix 32/V, are also correctly represented in the Git repository as graph nodes with two parents.

|

||||

|

||||

More importantly, the repository is constructed in a way that allows *git blame*, which annotates source code lines with the version, date, and author associated with their first appearance, to produce the expected code provenance results. For example, checking out the *BSD-4* tag, and running git blame on the kernel's *pipe.c* file will show lines written by Ken Thompson in 1974, 1975, and 1979, and by Bill Joy in 1980. This allows the automatic (though computationally expensive) detection of the code's provenance at any point of time.

|

||||

|

||||

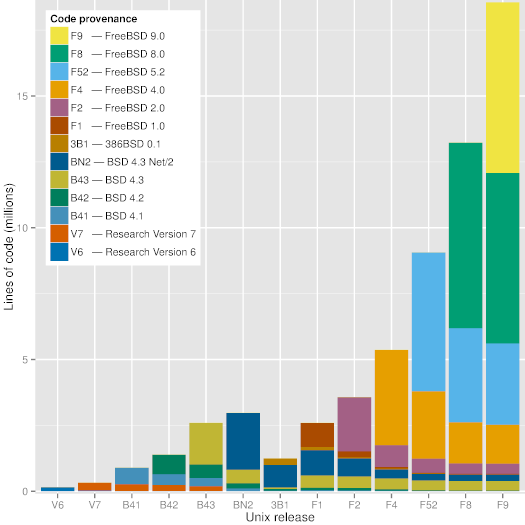

|

||||

|

||||

Figure 1: Code provenance across significant Unix releases.

|

||||

|

||||

As can be seen in Figure [1][12], a modern version of Unix (FreeBSD 9) still contains visible chunks of code from BSD 4.3, BSD 4.3 Net/2, and FreeBSD 2.0. Interestingly, the Figure shows that code developed during the frantic dash to create an open source operating system out of the code released by Berkeley (386BSD and FreeBSD 1.0) does not seem to have survived. The oldest code in FreeBSD 9 appears to be an 18-line sequence in the C library file timezone.c, which can also be found in the 7th Edition Unix file with the same name and a time stamp of January 10th, 1979 - 36 years ago.

|

||||

|

||||

### 3 Data Collection and Processing ###

|

||||

|

||||

The goal of the project is to consolidate data concerning the evolution of Unix in a form that helps the study of the system's evolution, by entering them into a modern revision repository. This involves collecting the data, curating them, and synthesizing them into a single Git repository.

|

||||

|

||||

|

||||

|

||||

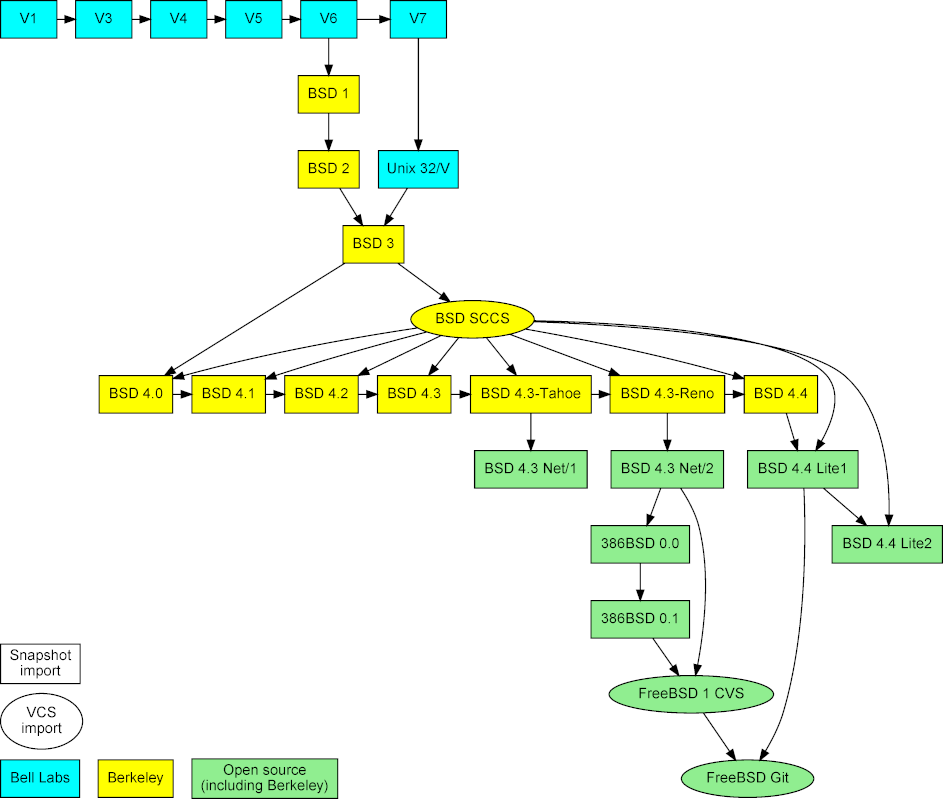

Figure 2: Imported Unix snapshots, repositories, and their mergers.

|

||||

|

||||

The project is based on three types of data (see Figure [2][13]). First, snapshots of early released versions, which were obtained from the [Unix Heritage Society archive][14],[3][15] the [CD-ROM images][16] containing the full source archives of CSRG,[4][17] the [OldLinux site][18],[5][19] and the [FreeBSD archive][20].[6][21] Second, past and current repositories, namely the CSRG SCCS [[6][22]] repository, the FreeBSD 1 CVS repository, and the [Git mirror of modern FreeBSD development][23].[7][24] The first two were obtained from the same sources as the corresponding snapshots.

|

||||

|

||||