mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

Merge remote-tracking branch 'upstream/master' into patch-2

This commit is contained in:

commit

0ee8bb305c

@ -0,0 +1,81 @@

|

||||

An introduction to Ansible Operators in Kubernetes

|

||||

======

|

||||

The new Operator SDK makes it easy to create a Kubernetes controller to deploy and manage a service or application in a cluster.

|

||||

|

||||

|

||||

For years, Ansible has been a go-to choice for infrastructure automation. As Kubernetes adoption has skyrocketed, Ansible has continued to shine in the emerging container orchestration ecosystem.

|

||||

|

||||

Ansible fits naturally into a Kubernetes workflow, using YAML to describe the desired state of the world. Multiple projects, including the [Automation Broker][1], are adapting Ansible for use behind specific APIs. This article will focus on a new technique, created through a joint effort by the Ansible core team and the developers of Automation Broker, that uses Ansible to create Operators with minimal effort.

|

||||

|

||||

### What is an Operator?

|

||||

|

||||

An [Operator][2] is a Kubernetes controller that deploys and manages a service or application in a cluster. It automates human operation knowledge and best practices to keep services running and healthy. Input is received in the form of a custom resource. Let's walk through that using a Memcached Operator as an example.

|

||||

|

||||

The [Memcached Operator][3] can be deployed as a service running in a cluster, and it includes a custom resource definition (CRD) for a resource called Memcached. The end user creates an instance of that custom resource to describe how the Memcached Deployment should look. The following example requests a Deployment with three Pods.

|

||||

|

||||

```

|

||||

apiVersion: "cache.example.com/v1alpha1"

|

||||

kind: "Memcached"

|

||||

metadata:

|

||||

name: "example-memcached"

|

||||

spec:

|

||||

size: 3

|

||||

```

|

||||

|

||||

The Operator's job is called reconciliation—continuously ensuring that what is specified in the "spec" matches the real state of the world. This sample Operator delegates Pod management to a Deployment controller. So while it does not directly create or delete Pods, if you change the size, the Operator's reconciliation loop ensures that the new value is applied to the Deployment resource it created.

|

||||

|

||||

A mature Operator can deploy, upgrade, back up, repair, scale, and reconfigure an application that it manages. As you can see, not only does an Operator provide a simple way to deploy arbitrary services using only native Kubernetes APIs; it enables full day-two (post-deployment, such as updates, backups, etc.) management, limited only by what you can code.

|

||||

|

||||

### Creating an Operator

|

||||

|

||||

The [Operator SDK][4] makes it easy to get started. It lays down the skeleton of a new Operator with many of the complex pieces already handled. You can focus on defining your custom resources and coding the reconciliation logic in Go. The SDK saves you a lot of time and ongoing maintenance burden, but you will still end up owning a substantial software project.

|

||||

|

||||

Ansible was recently introduced to the Operator SDK as an even simpler way to make an Operator, with no coding required. To create an Operator, you merely:

|

||||

|

||||

* Create a CRD in the form of YAML

|

||||

* Define what reconciliation should do by creating an Ansible role or playbook

|

||||

|

||||

|

||||

|

||||

It's YAML all the way down—a familiar experience for Kubernetes users.

|

||||

|

||||

### How does it work?

|

||||

|

||||

There is a preexisting Ansible Operator base container image that includes Ansible, [ansible-runner][5], and the Operator's executable service. The SDK helps to build a layer on top that adds one or more CRDs and associates each with an Ansible role or playbook.

|

||||

|

||||

When it's running, the Operator uses a Kubernetes feature to "watch" for changes to any resource of the type defined. Upon receiving such a notification, it reconciles the resource that changed. The Operator runs the corresponding role or playbook, and information about the resource is passed to Ansible as [extra-vars][6].

|

||||

|

||||

### Using Ansible with Kubernetes

|

||||

|

||||

Following several iterations, the Ansible community has produced a remarkably easy-to-use module for working with Kubernetes. Especially if you have any experience with a Kubernetes module prior to Ansible 2.6, you owe it to yourself to have a look at the [k8s module][7]. Creating, retrieving, and updating resources is a natural experience that will feel familiar to any Kubernetes user. It makes creating an Operator that much easier.

|

||||

|

||||

### Give it a try

|

||||

|

||||

If you need to build a Kubernetes Operator, doing so with Ansible could save time and complexity. To learn more, head over to the Operator SDK documentation and work through the [Getting Started Guide][8] for Ansible-based Operators. Then join us on the [Operator Framework mailing list][9] and let us know what you think.

|

||||

|

||||

Michael Hrivnak will present [Automating Multi-Service Deployments on Kubernetes][10] at [LISA18][11], October 29-31 in Nashville, Tennessee, USA.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/10/ansible-operators-kubernetes

|

||||

|

||||

作者:[Michael Hrivnak][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/mhrivnak

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/article/18/2/automated-provisioning-kubernetes

|

||||

[2]: https://coreos.com/operators/

|

||||

[3]: https://github.com/operator-framework/operator-sdk-samples/tree/master/memcached-operator

|

||||

[4]: https://github.com/operator-framework/operator-sdk/

|

||||

[5]: https://github.com/ansible/ansible-runner

|

||||

[6]: https://docs.ansible.com/ansible/latest/user_guide/playbooks_variables.html#passing-variables-on-the-command-line

|

||||

[7]: https://docs.ansible.com/ansible/2.6/modules/k8s_module.html

|

||||

[8]: https://github.com/operator-framework/operator-sdk/blob/master/doc/ansible/user-guide.md

|

||||

[9]: https://groups.google.com/forum/#!forum/operator-framework

|

||||

[10]: https://www.usenix.org/conference/lisa18/presentation/hrivnak

|

||||

[11]: https://www.usenix.org/conference/lisa18

|

||||

@ -0,0 +1,87 @@

|

||||

piwheels: Speedy Python package installation for the Raspberry Pi

|

||||

======

|

||||

https://opensource.com/article/18/10/piwheels-python-raspberrypi

|

||||

|

||||

|

||||

|

||||

One of the great things about the Python programming language is [PyPI][1], the Python Package Index, where third-party libraries are hosted, available for anyone to install and gain access to pre-existing functionality without starting from scratch. These libraries are handy utilities, written by members of the community, that aren't found within the Python standard library. But they work in much the same way—you import them into your code and have access to functions and classes you didn't write yourself.

|

||||

|

||||

### The cross-platform problem

|

||||

|

||||

Many of the 150,000+ libraries hosted on PyPI are written in Python, but that's not the only option—you can write Python libraries in C, C++, or anything with Python bindings. The usual benefit of writing a library in C or C++ is speed. The NumPy project is a good example: NumPy provides highly powerful mathematical functionality for dealing with matrix operations. It is highly optimized code that allows users to write in Python but have access to speedy mathematics operations.

|

||||

|

||||

The problem comes when trying to distribute libraries for others to use cross-platform. The standard is to create built distributions called Python wheels. While pure Python libraries are automatically compatible cross-platform, those implemented in C/C++ must be built separately for each operating system, Python version, and system architecture. So, if a library wanted to support Windows, MacOS, and Linux, for both 32-bit and 64-bit computers, and for Python 2.7, 3.4, 3.5, and 3.6, that would require 24 different versions! Some packages do this, but others rely on users building the package from the source code, which can take a long time and can often be complex.

|

||||

|

||||

### Raspberry Pi and Arm

|

||||

|

||||

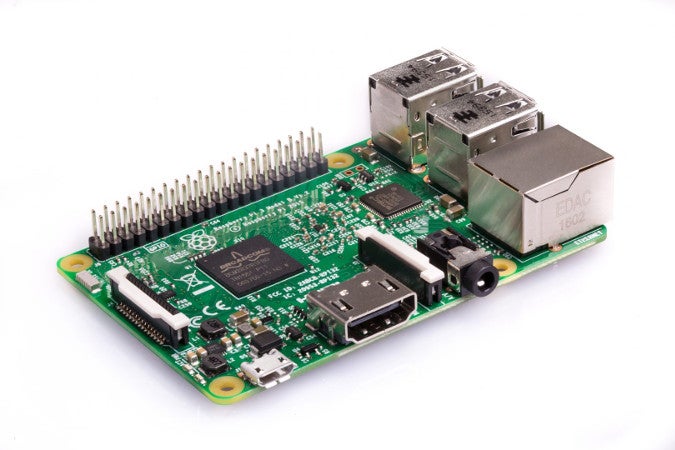

While the Raspberry Pi runs Linux, it's not the same architecture as your regular PC—it's Arm, rather than Intel. That means the Linux wheels don't work, and Raspberry Pi users had to build from source—until the piwheels project came to fruition last year. [Piwheels][2] is an open source project that aims to build Raspberry Pi platform wheels for every package on PyPI.

|

||||

|

||||

|

||||

|

||||

Packages are natively compiled on Raspberry Pi 3 hardware and hosted in a data center provided by UK-based [Mythic Beasts][3], which provides cloud Pis as part of its hosting service. The piwheels website hosts the wheels in a [pip][4]-compatible web server configuration so Raspberry Pi users can use them easily. Raspbian Stretch even comes preconfigured to use piwheels.org as an additional index to PyPI by default.

|

||||

|

||||

### The piwheels stack

|

||||

|

||||

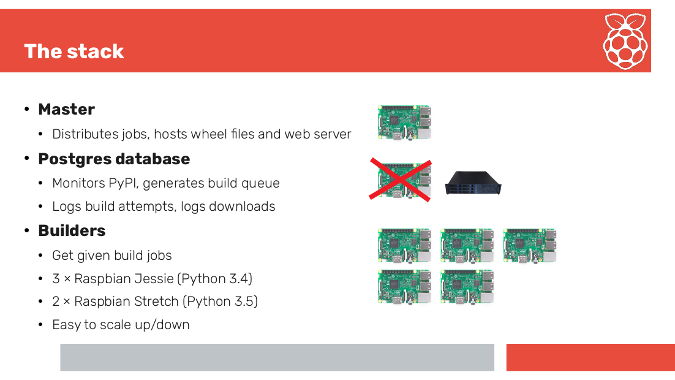

The piwheels project runs (almost) entirely on Raspberry Pi hardware:

|

||||

|

||||

* **Master**

|

||||

* A Raspberry Pi web server hosts the wheel files and distributes jobs to the builder Pis.

|

||||

* **Database server**

|

||||

* All package information is stored in a [Postgres database][5].

|

||||

* The master logs build attempts and downloads.

|

||||

* **Builders**

|

||||

* Builder Pis are given build jobs to attempt, and they communicate with the database.

|

||||

* The backlog of packages on PyPI was completed using around 20 Raspberry Pis.

|

||||

* A smaller number of Pis is required to keep up with new releases. Currently, there are three with Raspbian Jessie (Python 3.4) and two with Raspbian Stretch (Python 3.5).

|

||||

|

||||

|

||||

|

||||

The database server was originally a Raspberry Pi but was moved to another server when the database got too large.

|

||||

|

||||

|

||||

|

||||

### Time saved

|

||||

|

||||

Around 500,000 packages are downloaded from piwheels.org every month.

|

||||

|

||||

Every time a package is built by piwheels or downloaded by a user, its status information (including build duration) is recorded in a database. Therefore, it's possible to calculate how much time has been saved with pre-compiled packages.

|

||||

|

||||

In the 10 months that the service has been running, over 25 years of build time has been saved.

|

||||

|

||||

### Great for projects

|

||||

|

||||

Raspberry Pi project tutorials requiring Python libraries often include warnings like "this step takes a few hours"—but that's no longer true, thanks to piwheels. Piwheels makes it easy for makers and developers to dive straight into their project and not get bogged down waiting for software to install. Amazing libraries are just a **pip install** away; no need to wait for compilation.

|

||||

|

||||

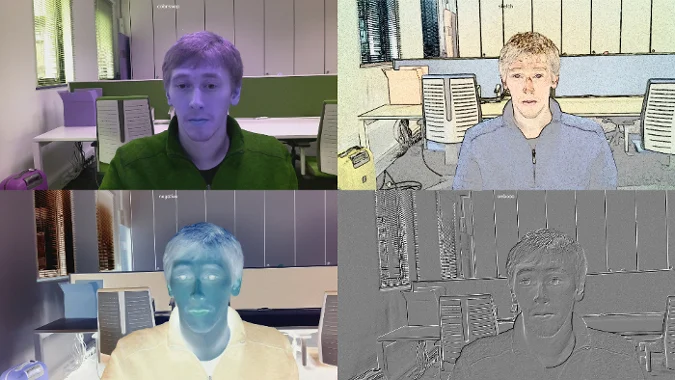

Piwheels has wheels for NumPy, SciPy, OpenCV, Keras, and even [Tensorflow][6], Google's machine learning framework. These libraries are great for [home projects][7], including image and facial recognition with the [camera module][8]. For inspiration, take a look at the Raspberry Pi category on [PyImageSearch][9] (which is one of my [favorite Raspberry Pi blogs][10]) to follow.

|

||||

|

||||

|

||||

|

||||

Read more about piwheels on the project's [blog][11] and the [Raspberry Pi blog][12], see the [source code on GitHub][13], and check out the [piwheels website][2]. If you want to contribute to the project, check the [missing packages tag][14] and see if you can successfully build one of them.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/10/piwheels-python-raspberrypi

|

||||

|

||||

作者:[Ben Nuttall][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/bennuttall

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://pypi.org/

|

||||

[2]: https://www.piwheels.org/

|

||||

[3]: https://www.mythic-beasts.com/order/rpi

|

||||

[4]: https://en.wikipedia.org/wiki/Pip_(package_manager)

|

||||

[5]: https://opensource.com/article/17/10/set-postgres-database-your-raspberry-pi

|

||||

[6]: https://www.tensorflow.org/

|

||||

[7]: https://opensource.com/article/17/4/5-projects-raspberry-pi-home

|

||||

[8]: https://opensource.com/life/15/6/raspberry-pi-camera-projects

|

||||

[9]: https://www.pyimagesearch.com/category/raspberry-pi/

|

||||

[10]: https://opensource.com/article/18/8/top-10-raspberry-pi-blogs-follow

|

||||

[11]: https://blog.piwheels.org/

|

||||

[12]: https://www.raspberrypi.org/blog/piwheels/

|

||||

[13]: https://github.com/bennuttall/piwheels

|

||||

[14]: https://github.com/bennuttall/piwheels/issues?q=is%3Aissue+is%3Aopen+label%3A%22missing+package%22

|

||||

@ -0,0 +1,327 @@

|

||||

Automating upstream releases with release-bot

|

||||

======

|

||||

All you need to do is file an issue into your upstream repository and release-bot takes care of the rest.

|

||||

|

||||

|

||||

If you own or maintain a GitHub repo and have ever pushed a package from it into [PyPI][1] and/or [Fedora][2], you know it requires some additional work using the Fedora infrastructure.

|

||||

|

||||

Good news: We have developed a tool called [release-bot][3] that automates the process. All you need to do is file an issue into your upstream repository and release-bot takes care of the rest. But let’s not get ahead of ourselves. First, let’s look at what needs to be set up for this automation to happen. I’ve chosen the **meta-test-family** upstream repository as an example.

|

||||

|

||||

### Configuration files for release-bot

|

||||

|

||||

There are two configuration files for release-bot: **conf.yaml** and **release-conf.yaml**.

|

||||

|

||||

#### conf.yaml

|

||||

|

||||

**conf.yaml** must be accessible during bot initialization; it specifies how to access the GitHub repository. To show that, I have created a new git repository named **mtf-release-bot** , which contains **conf.yaml** and the other secret files.

|

||||

|

||||

```

|

||||

repository_name: name

|

||||

repository_owner: owner

|

||||

# https://help.github.com/articles/creating-a-personal-access-token-for-the-command-line/

|

||||

github_token: xxxxxxxxxxxxxxxxxxxxxxxxx

|

||||

# time in seconds during checks for new releases

|

||||

refresh_interval: 180

|

||||

```

|

||||

|

||||

For the meta-test-family case, the configuration file looks like this:

|

||||

|

||||

```

|

||||

repository_name: meta-test-family

|

||||

repository_owner: fedora-modularity

|

||||

github_token: xxxxxxxxxxxxxxxxxxxxx

|

||||

refresh_interval: 180

|

||||

```

|

||||

|

||||

#### release-conf.yaml

|

||||

|

||||

**release-conf.yaml** must be stored [in the repository itself][4]; it specifies how to do GitHub/PyPI/Fedora releases.

|

||||

|

||||

```

|

||||

# list of major python versions that bot will build separate wheels for

|

||||

python_versions:

|

||||

- 2

|

||||

- 3

|

||||

# optional:

|

||||

changelog:

|

||||

- Example changelog entry

|

||||

- Another changelog entry

|

||||

# this is info for the authorship of the changelog

|

||||

# if this is not set, person who merged the release PR will be used as an author

|

||||

author_name: John Doe

|

||||

author_email: johndoe@example.com

|

||||

# whether to release on fedora. False by default

|

||||

fedora: false

|

||||

# list of fedora branches bot should release on. Master is always implied

|

||||

fedora_branches:

|

||||

- f27

|

||||

```

|

||||

|

||||

For the meta-test-family case, the configuration file looks like this:

|

||||

|

||||

```

|

||||

python_versions:

|

||||

- 2

|

||||

fedora: true

|

||||

fedora_branches:

|

||||

- f29

|

||||

- f28

|

||||

trigger_on_issue: true

|

||||

```

|

||||

|

||||

#### PyPI configuration file

|

||||

|

||||

The file **.pypirc** , stored in your **mtf-release-bot** private repository, is needed for uploading the new package version into PyPI:

|

||||

|

||||

```

|

||||

[pypi]

|

||||

username = phracek

|

||||

password = xxxxxxxx

|

||||

```

|

||||

|

||||

Private SSH key, **id_rsa** , that you configured in [FAS][5].

|

||||

|

||||

The final structure of the git repository, with **conf.yaml** and the others, looks like this:

|

||||

|

||||

```

|

||||

$ ls -la

|

||||

total 24

|

||||

drwxrwxr-x 3 phracek phracek 4096 Sep 24 12:38 .

|

||||

drwxrwxr-x. 20 phracek phracek 4096 Sep 24 12:37 ..

|

||||

-rw-rw-r-- 1 phracek phracek 199 Sep 24 12:26 conf.yaml

|

||||

drwxrwxr-x 8 phracek phracek 4096 Sep 24 12:38 .git

|

||||

-rw-rw-r-- 1 phracek phracek 3243 Sep 24 12:38 id_rsa

|

||||

-rw------- 1 phracek phracek 78 Sep 24 12:28 .pypirc

|

||||

```

|

||||

|

||||

### Requirements

|

||||

|

||||

**requirements.txt** with both versions of pip. You must also set up your PyPI login details in **$HOME/.pypirc** , as described in the `-k/–keytab`. Also, **fedpkg** requires that you have an SSH key in your keyring that you uploaded to FAS.

|

||||

|

||||

### How to deploy release-bot

|

||||

|

||||

Releasing to PyPI requires the [wheel package][6] for both Python 2 and Python 3, so installwith both versions of pip. You must also set up your PyPI login details in, as described in the [PyPI documentation][7] . If you are releasing to Fedora, you must have an active [Kerberos][8] ticket while the bot runs, or specify the path to the Kerberos keytab file with. Also,requires that you have an SSH key in your keyring that you uploaded to FAS.

|

||||

|

||||

There are two ways to use release-bot: as a Docker image or as an OpenShift template.

|

||||

|

||||

#### Docker image

|

||||

|

||||

Let’s build the image using the `s2i` command:

|

||||

|

||||

```

|

||||

$ s2i build $CONFIGURATION_REPOSITORY_URL usercont/release-bot app-name

|

||||

```

|

||||

|

||||

where `$CONFIGURATION_REPOSITORY_URL` is a reference to the GitHub repository, like _https:// <GIT_LAB_PATH>/mtf-release-conf._

|

||||

|

||||

Let’s look at Docker images:

|

||||

|

||||

```

|

||||

$ docker images

|

||||

REPOSITORY TAG IMAGE ID CREATED SIZE

|

||||

mtf-release-bot latest 08897871e65e 6 minutes ago 705 MB

|

||||

docker.io/usercont/release-bot latest 5b34aa670639 9 days ago 705 MB

|

||||

```

|

||||

|

||||

Now let’s try to run the **mtf-release-bot** image with this command:

|

||||

|

||||

```

|

||||

$ docker run mtf-release-bot

|

||||

---> Setting up ssh key...

|

||||

Agent pid 12

|

||||

Identity added: ./.ssh/id_rsa (./.ssh/id_rsa)

|

||||

12:21:18.982 configuration.py DEBUG Loaded configuration for fedora-modularity/meta-test-family

|

||||

12:21:18.982 releasebot.py INFO release-bot v0.4.1 reporting for duty!

|

||||

12:21:18.982 github.py DEBUG Fetching release-conf.yaml

|

||||

12:21:37.611 releasebot.py DEBUG No merged release PR found

|

||||

12:21:38.282 releasebot.py INFO Found new release issue with version: 0.8.5

|

||||

12:21:42.565 releasebot.py DEBUG No more open issues found

|

||||

12:21:43.190 releasebot.py INFO Making a new PR for release of version 0.8.5 based on an issue.

|

||||

12:21:46.709 utils.py DEBUG ['git', 'clone', 'https://github.com/fedora-modularity/meta-test-family.git', '.']

|

||||

|

||||

12:21:47.401 github.py DEBUG {"message":"Branch not found","documentation_url":"https://developer.github.com/v3/repos/branches/#get-branch"}

|

||||

12:21:47.994 utils.py DEBUG ['git', 'config', 'user.email', 'the.conu.bot@gmail.com']

|

||||

|

||||

12:21:47.996 utils.py DEBUG ['git', 'config', 'user.name', 'Release bot']

|

||||

|

||||

12:21:48.009 utils.py DEBUG ['git', 'checkout', '-b', '0.8.5-release']

|

||||

|

||||

12:21:48.014 utils.py ERROR No version files found. Aborting version update.

|

||||

12:21:48.014 utils.py WARNING No CHANGELOG.md present in repository

|

||||

[Errno 2] No such file or directory: '/tmp/tmpmbvb05jq/CHANGELOG.md'

|

||||

12:21:48.020 utils.py DEBUG ['git', 'commit', '--allow-empty', '-m', '0.8.5 release']

|

||||

[0.8.5-release 7ee62c6] 0.8.5 release

|

||||

|

||||

12:21:51.342 utils.py DEBUG ['git', 'push', 'origin', '0.8.5-release']

|

||||

|

||||

12:21:51.905 github.py DEBUG No open PR's found

|

||||

12:21:51.905 github.py DEBUG Attempting a PR for 0.8.5-release branch

|

||||

12:21:53.215 github.py INFO Created PR: https://github.com/fedora-modularity/meta-test-family/pull/243

|

||||

12:21:53.216 releasebot.py INFO I just made a PR request for a release version 0.8.5

|

||||

12:21:54.154 github.py DEBUG Comment added to PR: I just made a PR request for a release version 0.8.5

|

||||

Here's a [link to the PR](https://github.com/fedora-modularity/meta-test-family/pull/243)

|

||||

12:21:54.154 github.py DEBUG Attempting to close issue #242

|

||||

12:21:54.992 github.py DEBUG Closed issue #242

|

||||

```

|

||||

|

||||

As you can see, release-bot automatically closed the following issue, requesting a new upstream release of the meta-test-family: [https://github.com/fedora-modularity/meta-test-family/issues/243][9].

|

||||

|

||||

In addition, release-bot created a new PR with changelog. You can update the PR—for example, squash changelog—and once you merge it, it will automatically release to GitHub, and PyPI and Fedora will start.

|

||||

|

||||

You now have a working solution to easily release upstream versions of your package into PyPi and Fedora.

|

||||

|

||||

#### OpenShift template

|

||||

|

||||

Another option to deliver automated releases using release-bot is to deploy it in OpenShift.

|

||||

|

||||

The OpenShift template looks as follows:

|

||||

|

||||

```

|

||||

kind: Template

|

||||

apiVersion: v1

|

||||

metadata:

|

||||

name: release-bot

|

||||

annotations:

|

||||

description: S2I Relase-bot image builder

|

||||

tags: release-bot s2i

|

||||

iconClass: icon-python

|

||||

labels:

|

||||

template: release-bot

|

||||

role: releasebot_application_builder

|

||||

objects:

|

||||

- kind : ImageStream

|

||||

apiVersion : v1

|

||||

metadata :

|

||||

name : ${APP_NAME}

|

||||

labels :

|

||||

appid : release-bot-${APP_NAME}

|

||||

- kind : ImageStream

|

||||

apiVersion : v1

|

||||

metadata :

|

||||

name : ${APP_NAME}-s2i

|

||||

labels :

|

||||

appid : release-bot-${APP_NAME}

|

||||

spec :

|

||||

tags :

|

||||

- name : latest

|

||||

from :

|

||||

kind : DockerImage

|

||||

name : usercont/release-bot:latest

|

||||

#importPolicy:

|

||||

# scheduled: true

|

||||

- kind : BuildConfig

|

||||

apiVersion : v1

|

||||

metadata :

|

||||

name : ${APP_NAME}

|

||||

labels :

|

||||

appid : release-bot-${APP_NAME}

|

||||

spec :

|

||||

triggers :

|

||||

- type : ConfigChange

|

||||

- type : ImageChange

|

||||

source :

|

||||

type : Git

|

||||

git :

|

||||

uri : ${CONFIGURATION_REPOSITORY}

|

||||

contextDir : ${CONFIGURATION_REPOSITORY}

|

||||

sourceSecret :

|

||||

name : release-bot-secret

|

||||

strategy :

|

||||

type : Source

|

||||

sourceStrategy :

|

||||

from :

|

||||

kind : ImageStreamTag

|

||||

name : ${APP_NAME}-s2i:latest

|

||||

output :

|

||||

to :

|

||||

kind : ImageStreamTag

|

||||

name : ${APP_NAME}:latest

|

||||

- kind : DeploymentConfig

|

||||

apiVersion : v1

|

||||

metadata :

|

||||

name: ${APP_NAME}

|

||||

labels :

|

||||

appid : release-bot-${APP_NAME}

|

||||

spec :

|

||||

strategy :

|

||||

type : Rolling

|

||||

triggers :

|

||||

- type : ConfigChange

|

||||

- type : ImageChange

|

||||

imageChangeParams :

|

||||

automatic : true

|

||||

containerNames :

|

||||

- ${APP_NAME}

|

||||

from :

|

||||

kind : ImageStreamTag

|

||||

name : ${APP_NAME}:latest

|

||||

replicas : 1

|

||||

selector :

|

||||

deploymentconfig : ${APP_NAME}

|

||||

template :

|

||||

metadata :

|

||||

labels :

|

||||

appid: release-bot-${APP_NAME}

|

||||

deploymentconfig : ${APP_NAME}

|

||||

spec :

|

||||

containers :

|

||||

- name : ${APP_NAME}

|

||||

image : ${APP_NAME}:latest

|

||||

resources:

|

||||

requests:

|

||||

memory: "64Mi"

|

||||

cpu: "50m"

|

||||

limits:

|

||||

memory: "128Mi"

|

||||

cpu: "100m"

|

||||

|

||||

parameters :

|

||||

- name : APP_NAME

|

||||

description : Name of application

|

||||

value :

|

||||

required : true

|

||||

- name : CONFIGURATION_REPOSITORY

|

||||

description : Git repository with configuration

|

||||

value :

|

||||

required : true

|

||||

```

|

||||

|

||||

The easiest way to deploy the **mtf-release-bot** repository with secret files into OpenShift is to use the following two commands:

|

||||

|

||||

```

|

||||

$ curl -sLO https://github.com/user-cont/release-bot/raw/master/openshift-template.yml

|

||||

```

|

||||

|

||||

In your OpenShift instance, deploy the template by running the following command:

|

||||

|

||||

```

|

||||

oc process -p APP_NAME="mtf-release-bot" -p CONFIGURATION_REPOSITORY="git@<git_lab_path>/mtf-release-conf.git" -f openshift-template.yml | oc apply

|

||||

```

|

||||

|

||||

### Summary

|

||||

|

||||

See the [example pull request][10] in the meta-test-family upstream repository, where you'll find information about what release-bot released. Once you get to this point, you can see that release-bot is able to push new upstream versions into GitHub, PyPI, and Fedora without heavy user intervention. It automates all the steps so you don’t need to manually upload and build new upstream versions of your package.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/10/upstream-releases-pypi-fedora-release-bot

|

||||

|

||||

作者:[Petr Stone Hracek][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/phracek

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://pypi.org/

|

||||

[2]: https://getfedora.org/

|

||||

[3]: https://github.com/user-cont/release-bot

|

||||

[4]: https://github.com/fedora-modularity/meta-test-family

|

||||

[5]: https://admin.fedoraproject.org/accounts/

|

||||

[6]: https://pypi.org/project/wheel/

|

||||

[7]: https://packaging.python.org/tutorials/distributing-packages/#create-an-account

|

||||

[8]: https://web.mit.edu/kerberos/

|

||||

[9]: https://github.com/fedora-modularity/meta-test-family/issues/238

|

||||

[10]: https://github.com/fedora-modularity/meta-test-family/pull/243

|

||||

@ -0,0 +1,80 @@

|

||||

Browsing the web with Min, a minimalist open source web browser

|

||||

======

|

||||

Not every web browser needs to carry every single feature. Min puts a minimalist spin on the everyday web browser.

|

||||

|

||||

|

||||

Does the world need another web browser? Even though the days of having a multiplicity of browsers to choose from are long gone, there still are folks out there developing new applications that help us use the web.

|

||||

|

||||

One of those new-fangled browsers is [Min][1]. As its name suggests (well, suggests to me, anyway), Min is a minimalist browser. That doesn't mean it's deficient in any significant way, and its open source, Apache 2.0 license piques my interest.

|

||||

|

||||

But is Min worth a look? Let's find out.

|

||||

|

||||

### Getting going

|

||||

|

||||

Min is one of many applications written using a development framework called [Electron][2]. (It's the same framework that brought us the [Atom text editor][3].) You can [get installers][4] for Linux, MacOS, and Windows. You can also grab the [source code from GitHub][5] and compile it if you're inclined.

|

||||

|

||||

I run Manjaro Linux, and there isn't an installer for that distro. Luckily, I was able to install Min from Manjaro's package manager.

|

||||

|

||||

Once that was done, I fired up Min by pressing Alt+F2, typing **min** in the run-application box, and pressing Enter, and I was ready to go.

|

||||

|

||||

|

||||

|

||||

Min is billed as a smarter, faster web browser. It definitely is fast—at the risk of drawing the ire of denizens of certain places on the web, I'll say that it starts faster than Firefox and Chrome on the laptops with which I tried it.

|

||||

|

||||

Browsing with Min is like browsing with Firefox or Chrome. Type a URL in the address bar, press Enter, and away you go.

|

||||

|

||||

### Min's features

|

||||

|

||||

While Min doesn't pack everything you'd find in browsers like Firefox or Chrome, it doesn't do too badly.

|

||||

|

||||

Like any other browser these days, Min supports multiple tabs. It also has a feature called Tasks, which lets you group your open tabs.

|

||||

|

||||

Min's default search engine is [DuckDuckGo][6]. I really like that touch because DuckDuckGo is one of my search engines of choice. If DuckDuckGo isn't your thing, you can set another search engine as the default in Min's preferences.

|

||||

|

||||

Instead of using tools like AdBlock to filter out content you don't want, Min has a built-in ad blocker. It uses the [EasyList filters][7], which were created for AdBlock. You can block scripts and images, and Min also has a built-in tracking blocker.

|

||||

|

||||

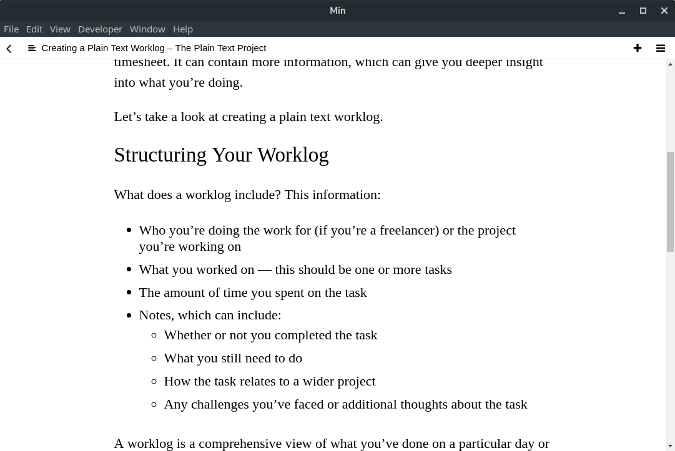

Like Firefox, Min has a reading mode called Reading List. Flipping the Reading List switch (well, clicking the icon in the address bar) removes most of the cruft from a page so you can focus on the words you're reading. Pages stay in the Reading List for 30 days.

|

||||

|

||||

|

||||

|

||||

Speaking of focus, Min also has a Focus Mode that hides and prevents you from opening other tabs. So, if you're working in a web application, you'll need to click a few times if you feel like procrastinating.

|

||||

|

||||

Of course, Min has a number of keyboard shortcuts that can make using it a lot faster. You can find a reference for those shortcuts [on GitHub][8]. You can also change a number of them in Min's preferences.

|

||||

|

||||

I was pleasantly surprised to find Min can play videos on YouTube, Vimeo, Dailymotion, and similar sites. I also played sample tracks at music retailer 7Digital. I didn't try playing music on popular sites like Spotify or Last.fm (because I don't have accounts with them).

|

||||

|

||||

|

||||

|

||||

### What's not there

|

||||

|

||||

The features that Min doesn't pack are as noticeable as the ones it does. There doesn't seem to be a way to bookmark sites. You either have to rely on Min's search history to find your favorite links, or you'll have to rely on a bookmarking service.

|

||||

|

||||

On top of that, Min doesn't support plugins. That's not a deal breaker for me—not having plugins is undoubtedly one of the reasons the browser starts and runs so quickly. I know a number of people who are … well, I wouldn't go so far to say junkies, but they really like their plugins. Min wouldn't cut it for them.

|

||||

|

||||

### Final thoughts

|

||||

|

||||

Min isn't a bad browser. It's light and fast enough to appeal to the minimalists out there. That said, it lacks features that hardcore web browser users clamor for.

|

||||

|

||||

If you want a zippy browser that isn't weighed down by all the features of so-called modern web browsers, I suggest giving Min a serious look.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/10/min-web-browser

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/scottnesbitt

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://minbrowser.github.io/min/

|

||||

[2]: http://electron.atom.io/apps/

|

||||

[3]: https://opensource.com/article/17/5/atom-text-editor-packages-writers

|

||||

[4]: https://github.com/minbrowser/min/releases/

|

||||

[5]: https://github.com/minbrowser/min

|

||||

[6]: http://duckduckgo.com

|

||||

[7]: https://easylist.to/

|

||||

[8]: https://github.com/minbrowser/min/wiki

|

||||

@ -0,0 +1,226 @@

|

||||

Chrony – An Alternative NTP Client And Server For Unix-like Systems

|

||||

======

|

||||

|

||||

|

||||

|

||||

In this tutorial, we will be discussing how to install and configure **Chrony** , an alternative NTP client and server for Unix-like systems. Chrony can synchronise the system clock faster with better time accuracy and it can be particularly useful for the systems which are not online all the time. Chrony is free, open source and supports GNU/Linux and BSD variants such as FreeBSD, NetBSD, macOS, and Solaris.

|

||||

|

||||

### Installing Chrony

|

||||

|

||||

Chrony is available in the default repositories of most Linux distributions. If you’re on Arch Linux, run the following command to install it:

|

||||

|

||||

```

|

||||

$ sudo pacman -S chrony

|

||||

```

|

||||

|

||||

On Debian, Ubuntu, Linux Mint:

|

||||

|

||||

```

|

||||

$ sudo apt-get install chrony

|

||||

```

|

||||

|

||||

On Fedora:

|

||||

|

||||

```

|

||||

$ sudo dnf install chrony

|

||||

```

|

||||

|

||||

Once installed, start **chronyd.service** daemon if it is not started already:

|

||||

|

||||

```

|

||||

$ sudo systemctl start chronyd.service

|

||||

```

|

||||

|

||||

Make it to start automatically on every reboot using command:

|

||||

|

||||

```

|

||||

$ sudo systemctl enable chronyd.service

|

||||

```

|

||||

|

||||

To verify if the Chronyd.service has been started, run:

|

||||

|

||||

```

|

||||

$ sudo systemctl status chronyd.service

|

||||

```

|

||||

|

||||

If everything is OK, you will see an output something like below.

|

||||

|

||||

```

|

||||

● chrony.service - chrony, an NTP client/server

|

||||

Loaded: loaded (/lib/systemd/system/chrony.service; enabled; vendor preset: ena

|

||||

Active: active (running) since Wed 2018-10-17 10:34:53 UTC; 3min 15s ago

|

||||

Docs: man:chronyd(8)

|

||||

man:chronyc(1)

|

||||

man:chrony.conf(5)

|

||||

Main PID: 2482 (chronyd)

|

||||

Tasks: 1 (limit: 2320)

|

||||

CGroup: /system.slice/chrony.service

|

||||

└─2482 /usr/sbin/chronyd

|

||||

|

||||

Oct 17 10:34:53 ubuntuserver systemd[1]: Starting chrony, an NTP client/server...

|

||||

Oct 17 10:34:53 ubuntuserver chronyd[2482]: chronyd version 3.2 starting (+CMDMON

|

||||

Oct 17 10:34:53 ubuntuserver chronyd[2482]: Initial frequency -268.088 ppm

|

||||

Oct 17 10:34:53 ubuntuserver systemd[1]: Started chrony, an NTP client/server.

|

||||

Oct 17 10:35:03 ubuntuserver chronyd[2482]: Selected source 85.25.84.166

|

||||

Oct 17 10:35:03 ubuntuserver chronyd[2482]: Source 85.25.84.166 replaced with 2403

|

||||

Oct 17 10:35:03 ubuntuserver chronyd[2482]: Selected source 91.189.89.199

|

||||

Oct 17 10:35:06 ubuntuserver chronyd[2482]: Selected source 106.10.186.200

|

||||

```

|

||||

|

||||

As you can see, Chrony service is started and working!

|

||||

|

||||

### Configure Chrony

|

||||

|

||||

The NTP clients needs to know which NTP servers it should contact to get the current time. We can specify the NTP servers in the **server** or **pool** directive in the NTP configuration file. Usually, the default configuration file is **/etc/chrony/chrony.conf** or **/etc/chrony.conf** depending upon the Linux distribution version. For better reliability, it is recommended to specify at least three servers.

|

||||

|

||||

The following lines are just an example taken from my Ubuntu 18.04 LTS server.

|

||||

|

||||

```

|

||||

[...]

|

||||

# About using servers from the NTP Pool Project in general see (LP: #104525).

|

||||

# Approved by Ubuntu Technical Board on 2011-02-08.

|

||||

# See http://www.pool.ntp.org/join.html for more information.

|

||||

pool ntp.ubuntu.com iburst maxsources 4

|

||||

pool 0.ubuntu.pool.ntp.org iburst maxsources 1

|

||||

pool 1.ubuntu.pool.ntp.org iburst maxsources 1

|

||||

pool 2.ubuntu.pool.ntp.org iburst maxsources 2

|

||||

[...]

|

||||

```

|

||||

|

||||

As you see in the above output, [**NTP Pool Project**][1] has been set as the default time server. For those wondering, NTP pool project is the cluster of time servers that provides NTP service for tens of millions clients across the world. It is the default time server for Ubuntu and most of the other major Linux distributions.

|

||||

|

||||

Here,

|

||||

|

||||

* the **iburst** option is used to speed up the initial synchronisation.

|

||||

* the **maxsources** refers the maximum number of NTP sources.

|

||||

|

||||

|

||||

|

||||

Please make sure that the NTP servers you have chosen are well synchronised, stable and close to your location to improve the accuracy of the time with NTP sources.

|

||||

|

||||

### Manage Chronyd from command line

|

||||

|

||||

Chrony has a command line utility named **chronyc** to control and monitor the **chrony** daemon (chronyd).

|

||||

|

||||

To check if **chrony** is synchronized, we can use the **tracking** command as shown below.

|

||||

|

||||

```

|

||||

$ chronyc tracking

|

||||

Reference ID : 6A0ABAC8 (t1.time.sg3.yahoo.com)

|

||||

Stratum : 3

|

||||

Ref time (UTC) : Wed Oct 17 11:48:51 2018

|

||||

System time : 0.000984587 seconds slow of NTP time

|

||||

Last offset : -0.000912981 seconds

|

||||

RMS offset : 0.007983995 seconds

|

||||

Frequency : 23.704 ppm slow

|

||||

Residual freq : +0.006 ppm

|

||||

Skew : 1.734 ppm

|

||||

Root delay : 0.089718960 seconds

|

||||

Root dispersion : 0.008760406 seconds

|

||||

Update interval : 515.1 seconds

|

||||

Leap status : Normal

|

||||

```

|

||||

|

||||

We can verify the current time sources that chrony uses with command:

|

||||

|

||||

```

|

||||

$ chronyc sources

|

||||

210 Number of sources = 8

|

||||

MS Name/IP address Stratum Poll Reach LastRx Last sample

|

||||

===============================================================================

|

||||

^- chilipepper.canonical.com 2 10 377 296 +102ms[ +104ms] +/- 279ms

|

||||

^- golem.canonical.com 2 10 377 302 +105ms[ +107ms] +/- 290ms

|

||||

^+ pugot.canonical.com 2 10 377 297 +36ms[ +38ms] +/- 238ms

|

||||

^- alphyn.canonical.com 2 10 377 279 -43ms[ -42ms] +/- 238ms

|

||||

^- dadns.cdnetworks.co.kr 2 10 377 1070 +40ms[ +42ms] +/- 314ms

|

||||

^* t1.time.sg3.yahoo.com 2 10 377 169 -13ms[ -11ms] +/- 80ms

|

||||

^+ sin1.m-d.net 2 10 275 567 -9633us[-7826us] +/- 115ms

|

||||

^- ns2.pulsation.fr 2 10 377 311 -75ms[ -73ms] +/- 250ms

|

||||

```

|

||||

|

||||

Chronyc utility can find the statistics of each sources, such as drift rate and offset estimation process, using **sourcestats** command.

|

||||

|

||||

```

|

||||

$ chronyc sourcestats

|

||||

210 Number of sources = 8

|

||||

Name/IP Address NP NR Span Frequency Freq Skew Offset Std Dev

|

||||

==============================================================================

|

||||

chilipepper.canonical.com 32 16 89m +6.293 14.345 +30ms 24ms

|

||||

golem.canonical.com 32 17 89m +0.312 18.887 +20ms 33ms

|

||||

pugot.canonical.com 32 18 89m +0.281 11.237 +3307us 23ms

|

||||

alphyn.canonical.com 31 20 88m -4.087 8.910 -58ms 17ms

|

||||

dadns.cdnetworks.co.kr 29 16 76m -1.094 9.895 -83ms 14ms

|

||||

t1.time.sg3.yahoo.com 32 16 91m +0.153 1.952 +2835us 4044us

|

||||

sin1.m-d.net 29 13 83m +0.049 6.060 -8466us 9940us

|

||||

ns2.pulsation.fr 32 17 88m +0.784 9.834 -62ms 22ms

|

||||

```

|

||||

|

||||

If your system is not connected to Internet, you need to notify Chrony that the system is not connected to the Internet. To do so, run:

|

||||

|

||||

```

|

||||

$ sudo chronyc offline

|

||||

[sudo] password for sk:

|

||||

200 OK

|

||||

```

|

||||

|

||||

To verify the status of your NTP sources, simply run:

|

||||

|

||||

```

|

||||

$ chronyc activity

|

||||

200 OK

|

||||

0 sources online

|

||||

8 sources offline

|

||||

0 sources doing burst (return to online)

|

||||

0 sources doing burst (return to offline)

|

||||

0 sources with unknown address

|

||||

```

|

||||

|

||||

As you see, all my NTP sources are down at the moment.

|

||||

|

||||

Once you’re connected to the Internet, just notify Chrony that your system is back online using command:

|

||||

|

||||

```

|

||||

$ sudo chronyc online

|

||||

200 OK

|

||||

```

|

||||

|

||||

To view the status of NTP source(s), run:

|

||||

|

||||

```

|

||||

$ chronyc activity

|

||||

200 OK

|

||||

8 sources online

|

||||

0 sources offline

|

||||

0 sources doing burst (return to online)

|

||||

0 sources doing burst (return to offline)

|

||||

0 sources with unknown address

|

||||

```

|

||||

|

||||

For more detailed explanation of all options and parameters, refer the man pages.

|

||||

|

||||

```

|

||||

$ man chronyc

|

||||

|

||||

$ man chronyd

|

||||

```

|

||||

|

||||

And, that’s all for now. Hope this was useful. In the subsequent tutorials, we will see how to setup a local NTP server using Chrony and configure the clients to use it to synchronise time.

|

||||

|

||||

Stay tuned!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/chrony-an-alternative-ntp-client-and-server-for-unix-like-systems/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/sk/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.ntppool.org/en/

|

||||

Loading…

Reference in New Issue

Block a user