mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

0de21ea021

210

published/20190809 Copying files in Linux.md

Normal file

@ -0,0 +1,210 @@

[#]: collector: (lujun9972)
[#]: translator: (tomjlw)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11259-1.html)
[#]: subject: (Copying files in Linux)
[#]: via: (https://opensource.com/article/19/8/copying-files-linux)
[#]: author: (Seth Kenlon https://opensource.com/users/seth)


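Copying files in Linux
======

> Learn the several ways to copy files on Linux and what each one is good for.

Copying documents in an office once required dedicated staff and machines. Today, copying is a task computer users perform without a second thought. Copying data on a computer is so trivial that it can happen without your noticing, for example when you drag a file onto an external drive.

Because digital objects are so easy to duplicate, most modern computer users never consider the other options available for copying their work. Nonetheless, there are several different ways to copy files on Linux, and each has its own strengths depending on what you need to do.

Here are a number of ways to copy files on Linux, BSD, and Mac.
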

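### Copying in the GUI

As with most operating systems, you can manage files entirely in the GUI if you want to.

#### Drag and drop

The most obvious way to copy a file is probably the way you are already used to copying files on a computer: drag and drop. On most Linux desktops, dragging and dropping from one local folder to another local folder *moves* the file by default. You can change this behavior to a copy by holding down the `Ctrl` key after you start dragging the file.

Your cursor may show an indicator, such as a plus sign, to show that you are in copy mode.

![Copying a file][2]

Note that if the file lives on a remote system, whether that is a web server or another computer on your own network accessed through a file-sharing protocol, the default action is often to copy rather than move the file.

#### Right-click

If you find dragging files around your desktop imprecise or clumsy, or it takes your hands off the keyboard for too long, you can usually copy a file from the right-click menu. This depends on the file manager you use, but in general the context menu that a right-click produces contains the common actions.

The context menu's "Copy" action stores the [file path][3] (where the file exists on your system) in your clipboard so you can then *paste* the file somewhere else: (LCTT translator's note: this description and the one below are imprecise. What is copied is not the file path as a "string" but an object/pointer representing the file itself.)

![Copying a file from the context menu][4]

In this case, you are not copying the file's contents to your clipboard. Instead, you are copying the [file path][3]. When you paste, your file manager looks at the path on the clipboard and runs a copy command, copying the file at that path to the location you are pasting into.
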

### Copying on the command line

While the GUI is a generally familiar way to copy files, copying in a terminal can be more efficient.

#### cp

The obvious terminal-based equivalent to copying and pasting a file on the desktop is the `cp` command. This command copies files and directories and is relatively straightforward. It uses the familiar *source* and *target* syntax (strictly in that order), so copying a file called `example.txt` into your `Documents` directory looks like this:

```
$ cp example.txt ~/Documents
```

Just as when you drag and drop a file onto a folder, this action does not replace `Documents` with `example.txt`. Instead, `cp` detects that `Documents` is a folder and places a copy of `example.txt` inside it.

You can also conveniently rename the file as you copy it:

```
$ cp example.txt ~/Documents/example_copy.txt
```

Importantly, this lets you make a copy of a file in the same directory as the original, as long as the copy gets a different name:

```
$ cp example.txt example.txt
cp: 'example.txt' and 'example.txt' are the same file
$ cp example.txt example_copy.txt
```

To copy a directory, you must use the `-r` option (short for `--recursive`). With this option, `cp` runs on the directory `notes` and then on every file inside it. Without `-r`, `cp` does not treat a directory as something it can copy:

```
$ cp notes/ notes-backup
cp: -r not specified; omitting directory 'notes/'
$ cp -r notes/ notes-backup
```

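To try the `cp` forms above without touching real files, the following sketch runs them in a throwaway directory. The directory and file names (`Documents`, `notes`, `list.txt`) are placeholders chosen for illustration, not anything `cp` requires.

```shell
#!/bin/sh
# Sketch: exercise the cp forms discussed above in a scratch directory.
# All names below are illustrative placeholders.
set -eu
work=$(mktemp -d)
cd "$work"

mkdir Documents notes
echo "hello" > example.txt
echo "todo" > notes/list.txt

cp example.txt Documents                   # copy into an existing directory
cp example.txt Documents/example_copy.txt  # copy and rename in one step
cp example.txt example_copy.txt            # duplicate next to the original
cp -r notes notes-backup                   # -r is required for directories

ls Documents notes-backup
```

Working inside a directory created by `mktemp -d` keeps the experiment away from your real files; remove the directory afterwards if you do not want to keep it.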

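#### cat

The `cat` command is one of the most misunderstood commands, but only because it shows off the extreme flexibility of a [POSIX][5] system. Among everything else `cat` can do (including its intended purpose of concatenating files), it can also copy. For instance, with `cat` you can [create two copies from one file][6] with a single command. You cannot do that with `cp`.
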
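One common way to get two copies from a single command is to pipe `cat` through `tee`. This is a sketch under that assumption; the linked article may use a different technique, and the file names here are made up.

```shell
#!/bin/sh
# Sketch: make two copies of one file in a single pipeline with cat and tee.
# File names are illustrative placeholders.
set -eu
work=$(mktemp -d)
cd "$work"
printf 'some data\n' > original.txt

# tee writes its input to copy1.txt and also passes it along on stdout,
# which the shell redirects into copy2.txt.
cat original.txt | tee copy1.txt > copy2.txt

cmp original.txt copy1.txt && cmp original.txt copy2.txt && echo "both copies match"
```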

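The significance of using `cat` to copy a file lies in how the system interprets the action. When you use `cp` to copy a file, the file's attributes are copied along with it, which means the copy has the same permissions as the original:

```
$ ls -l -G -g
-rw-r--r--. 1 57368 Jul 25 23:57 foo.jpg
$ cp foo.jpg bar.jpg
$ ls -l -G -g
-rw-r--r--. 1 57368 Jul 29 13:37 bar.jpg
-rw-r--r--. 1 57368 Jul 25 23:57 foo.jpg
```

Using `cat` to read the contents of a file into another file, however, makes the system create a new file. The permissions of such new files depend on your default umask setting. To learn more about umask, read Alex Juarez's article covering [umask][7] and permissions in general.

Run `umask` to see your current setting:

```
$ umask
0002
```

This setting means that new files created here are granted `664` (`rw-rw-r--`) permissions: the leading digits of the `umask` value mask nothing (and the execute bit is not set by default when a file is created), while the final digit masks the write permission for other users.
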
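The arithmetic behind that result can be checked directly. The sketch below assumes the usual default creation mode of `666` for regular files and a umask of `0002`; it computes the expected mode and then creates a file under that umask to compare. The file name is a placeholder.

```shell
#!/bin/sh
# Sketch: how a umask of 0002 turns the default file mode 666 into 664.
set -eu
work=$(mktemp -d)
cd "$work"

# Bits set in the umask are cleared from the default mode: 0666 & ~0002.
expected=$(printf '%o' $(( 0666 & ~0002 )))
echo "expected mode: $expected"

umask 0002
: > newfile                            # create an empty file under that umask
perms=$(ls -l newfile | cut -c1-10)    # first ten characters: type + permissions
echo "actual permissions: $perms"
```

Reading the permission string from `ls -l` rather than from `stat` is a deliberate choice here, because `stat` takes different options on Linux and on BSD or Mac.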

When you use `cat` to copy, you are not really copying the file. You use `cat` to read the file's contents and then redirect the output into a new file:

```
$ cat foo.jpg > baz.jpg
$ ls -l -G -g
-rw-r--r--. 1 57368 Jul 29 13:37 bar.jpg
-rw-rw-r--. 1 57368 Jul 29 13:42 baz.jpg
-rw-r--r--. 1 57368 Jul 25 23:57 foo.jpg
```

As you can see, `cat` created a brand-new file with the system's default umask applied.

In the end, when all you want is a copy of a file, these details do not matter. But when you want to copy a file and have the copy take on the default permissions, `cat` does it all in one command.

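The difference is easier to see when the original has unusual permissions. In this sketch the file names and the `600` mode are assumptions picked to make the contrast visible, and the umask is set explicitly to `0022` so the outcome does not depend on your own settings.

```shell
#!/bin/sh
# Sketch: compare the permissions produced by cp and by cat with redirection.
# File names and modes are illustrative.
set -eu
work=$(mktemp -d)
cd "$work"
umask 0022

echo "secret" > foo.txt
chmod 600 foo.txt          # restrictive mode on the original

cp foo.txt bar.txt         # cp starts from the source file's mode bits
cat foo.txt > baz.txt      # the shell creates baz.txt: 666 filtered by the umask

for f in foo.txt bar.txt baz.txt; do
    printf '%s %s\n' "$(ls -l "$f" | cut -c1-10)" "$f"
done
```

Here `bar.txt` should keep the owner-only permissions of the original, while `baz.txt` ends up with whatever the umask allows for a newly created file.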

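#### rsync

Famous for its ability to synchronize source and target files, the `rsync` command is a versatile tool for copying files. At its simplest, `rsync` can be used much like the `cp` command:

```
$ rsync example.txt example_copy.txt
$ ls
example.txt example_copy.txt
```

The command's real power lies in its ability to *not* copy when copying is unnecessary. If you use `rsync` to copy a file into a directory and an up-to-date copy of that file already exists there, `rsync` does not bother performing the copy. Locally, that makes little difference, but if you are copying huge amounts of data to a remote server, this feature makes a world of difference.
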
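A small experiment can show this skipping behavior. The sketch assumes `rsync` is installed and uses placeholder directory names; the `-i` (`--itemize-changes`) option asks `rsync` to list what it transfers, so a second run with nothing changed should normally report nothing.

```shell
#!/bin/sh
# Sketch: run rsync twice and look at what the second run reports.
# Directory names are illustrative; requires rsync to be installed.
set -eu
command -v rsync >/dev/null 2>&1 || { echo "rsync is not installed"; exit 0; }
work=$(mktemp -d)
cd "$work"
mkdir src dst
echo "one" > src/foo.txt

rsync -a src/ dst/               # first run copies foo.txt
second=$(rsync -ai src/ dst/)    # -i itemizes whatever this run transfers
echo "second run reported: '${second}'"
```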

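What does make a difference even locally is the command's ability to tell apart files that share the same name but contain different data. If you have ever found yourself faced with two copies of what was meant to be the same directory, `rsync` can synchronize them into one directory that contains the latest changes from each. This setup is common in industries that have not yet discovered the power of version control, and in backup solutions where there is one source of truth to copy from.

You can emulate this situation intentionally by creating two folders, one called `example` and the other `example_dupe`:

```
$ mkdir example example_dupe
```

Create a file in the first folder:

```
$ echo "one" > example/foo.txt
```

Use `rsync` to synchronize the two directories. The most common options for this operation are `-a` (for "archive," which ensures that symbolic links and other special files are preserved) and `-v` (for "verbose," which gives you feedback on the command's progress):

```
$ rsync -av example/ example_dupe/
```

The directories now contain the same information:

```
$ cat example/foo.txt
one
$ cat example_dupe/foo.txt
one
```

If the file you are treating as the source changes, the target is updated the next time you run `rsync`:

```
$ echo "two" >> example/foo.txt
$ rsync -av example/ example_dupe/
$ cat example_dupe/foo.txt
one
two
```

Keep in mind that the `rsync` command is meant to copy data, not to act as a version control system. For instance, if a file in the target somehow gets ahead of the file in the source, that file is still overwritten, because `rsync` compares the files for divergence and assumes that the target is always meant to mirror the source:

```
$ echo "You will never see this note again" > example_dupe/foo.txt
$ rsync -av example/ example_dupe/
$ cat example_dupe/foo.txt
one
two
```

If there is no change, no copying happens.

The `rsync` command has many options that `cp` lacks, such as the ability to set target permissions, exclude files, delete outdated files that do not appear in both directories, and much more. Use `rsync` as a powerful replacement for `cp` or as a useful supplement to it.
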
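A few of those options can be sketched together. The example assumes `rsync` is installed and uses made-up file names; it previews a sync with `--dry-run` (`-n`), skips files matching a pattern with `--exclude`, removes files that exist only in the target with `--delete`, and finally uses `--update` (`-u`) to leave a newer target file alone.

```shell
#!/bin/sh
# Sketch: rsync options that cp does not have. Names are illustrative.
set -eu
command -v rsync >/dev/null 2>&1 || { echo "rsync is not installed"; exit 0; }
work=$(mktemp -d)
cd "$work"
mkdir src dst
echo "keep"  > src/keep.txt
echo "temp"  > src/scratch.tmp
echo "stale" > dst/old.txt        # exists only in the target

# Preview: -n reports what would change without changing anything.
rsync -avn --delete --exclude='*.tmp' src/ dst/

# Real run: skip *.tmp files and delete target files missing from src.
rsync -a --delete --exclude='*.tmp' src/ dst/
ls dst

# --update (-u) skips files that are newer in the target.
touch -t 202001010000 src/keep.txt      # make the source copy look old
echo "edited in dst" > dst/keep.txt     # target copy is now newer
rsync -au --exclude='*.tmp' src/ dst/
cat dst/keep.txt
```

Running with `-n` first is a cheap safeguard, since `--delete` removes files from the target without asking.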

### Many ways to copy

There are many ways to achieve essentially the same outcome on a POSIX system, so open source's reputation for flexibility is well earned. Have I missed a useful way to copy data? Share your copy hacks in the comments.

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/8/copying-files-linux

Author: [Seth Kenlon][a]
Topic selection: [lujun9972][b]
Translator: [tomjlw](https://github.com/tomjlw)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]: https://opensource.com/users/seth
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/documents_papers_file_storage_work.png?itok=YlXpAqAJ (Filing papers and documents)
[2]: https://opensource.com/sites/default/files/uploads/copy-nautilus.jpg (Copying a file.)
[3]: https://opensource.com/article/19/7/understanding-file-paths-and-how-use-them
[4]: https://opensource.com/sites/default/files/uploads/copy-files-menu.jpg (Copying a file from the context menu.)
[5]: https://linux.cn/article-11222-1.html
[6]: https://opensource.com/article/19/2/getting-started-cat-command
[7]: https://opensource.com/article/19/7/linux-permissions-101


@ -1,8 +1,8 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11258-1.html)
[#]: subject: (How To Change Linux Console Font Type And Size)
[#]: via: (https://www.ostechnix.com/how-to-change-linux-console-font-type-and-size/)
[#]: author: (sk https://www.ostechnix.com/author/sk/)


@ -10,7 +10,9 @@

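How To Change Linux Console Font Type And Size
======

If you have a graphical desktop environment, it is easy to change the text font and size. How do you do that on a headless Ubuntu server that has no graphical environment? No worries! This guide explains how to change the Linux console font and size. It is useful for anyone who dislikes the default font type or size, or who simply prefers a different font.

If you have a graphical desktop environment, it is easy to change the text font and size. But how do you do that on a headless Ubuntu server that has no graphical environment? No worries! This guide explains how to change the Linux console font and size. It is useful for anyone who dislikes the default font type or size, or who simply prefers a different font.

### Change Linux console font type and size
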

@ -18,13 +20,13 @@

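![][2]

Ubuntu Linux console

*Ubuntu Linux console*

As far as I know, we can [**list the installed fonts**][3], but there is no option to change the Linux console font type or size the way you can in a desktop terminal emulator.

As far as I know, we can [list the installed fonts][3], but there is no way to change the Linux console font type or size the way you can in a desktop terminal emulator.

But that doesn't mean we can't change it. We can still change the console font.

If you are using Debian, Ubuntu, or another DEB-based system, you can use the **"console-setup"** configuration file for **setupcon**, which configures the console font and keyboard layout. The console-setup configuration file is located at **/etc/default/console-setup**.

If you are using Debian, Ubuntu, or another DEB-based system, you can use the `console-setup` configuration file for `setupcon`, which configures the console font and keyboard layout. The console-setup configuration file is located at `/etc/default/console-setup`.

Now, run the following command to set the font for your Linux console.
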

@ -36,25 +38,25 @@ $ sudo dpkg-reconfigure console-setup

![][4]

Choose the encoding to set on the Ubuntu console

*Choose the encoding to set on the Ubuntu console*

Next, choose a supported character set from the list. By default, it was the last option on my system, **Guess optimal character set**. Just leave the default and press the Enter key.

Next, choose a supported character set from the list. By default, it is the last option, which on my system is **Guess optimal character set**. Just leave the default and press the Enter key.

![][5]

Choose the character set in Ubuntu

*Choose the character set in Ubuntu*

Next, choose the font for your console and press the Enter key. Here, I am choosing "TerminusBold".

![][6]

Choose the font for the Linux console

*Choose the font for the Linux console*

Here, we choose the desired font size for the Linux console.

![][7]

Choose the font size for the Linux console

*Choose the font size for the Linux console*

After a few seconds, the selected font and size are applied to your Linux console.


@ -66,9 +68,9 @@ $ sudo dpkg-reconfigure console-setup

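![][9]

As you can see, the text is bigger and better, and the font type differs from the default.

As you can see, the text is bigger and better, and the font type also differs from the default.

You can also edit **/etc/default/console-setup** directly and set the font type and size as you wish. As the following example shows, my Linux console font type is "Terminus Bold" and the font size is 32.

You can also edit `/etc/default/console-setup` directly and set the font type and size as you wish. As the following example shows, my Linux console font type is "Terminus Bold" and the font size is 32.

```
ACTIVE_CONSOLES="/dev/tty[1-6]"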

@ -78,8 +80,7 @@ FONTFACE="TerminusBold"

FONTSIZE="16x32"
```

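Editing the file by hand works, but the same change can be scripted. The sketch below is only an illustration: `set_font` is a hypothetical helper, and it operates on a scratch copy with made-up starting values rather than on the real `/etc/default/console-setup`.

```shell
#!/bin/sh
# Sketch: set FONTFACE and FONTSIZE in a console-setup style file.
# set_font is a hypothetical helper; the file here is a scratch copy.
set -eu
conf=$(mktemp)
cat > "$conf" <<'EOF'
ACTIVE_CONSOLES="/dev/tty[1-6]"
FONTFACE="Fixed"
FONTSIZE="8x16"
EOF

set_font() {   # usage: set_font FILE FACE SIZE
    sed -e "s|^FONTFACE=.*|FONTFACE=\"$2\"|" \
        -e "s|^FONTSIZE=.*|FONTSIZE=\"$3\"|" "$1" > "$1.new"
    mv "$1.new" "$1"
}

set_font "$conf" TerminusBold 16x32
cat "$conf"
```

On a real Debian or Ubuntu system you would presumably point such a helper at `/etc/default/console-setup` as root and then run `sudo setupcon` to apply the result; treat that as an assumption to verify on your own system.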

##### Display console fonts

### Appendix: Display console fonts

To display your console font, simply type:


@ -91,11 +92,11 @@ $ showconsolefont

|

||||

|

||||

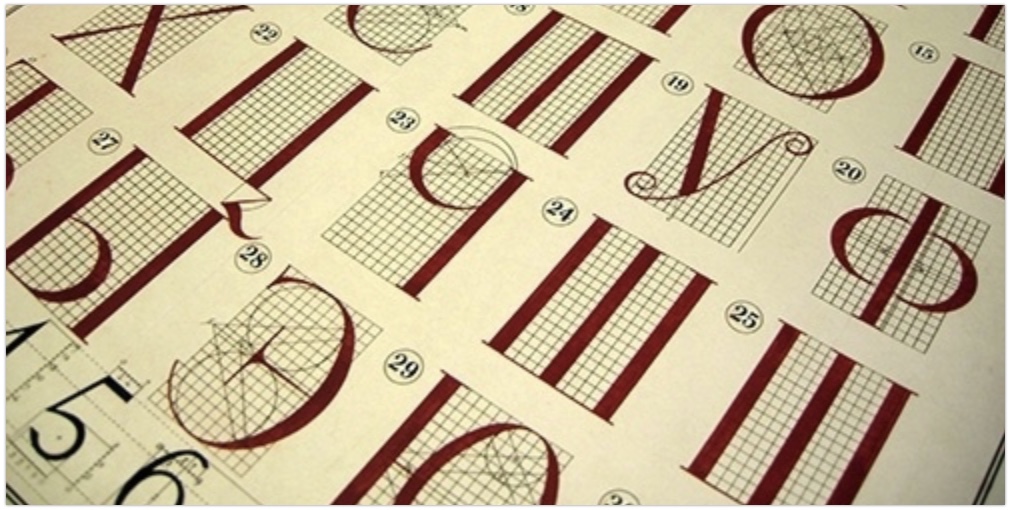

![][11]

|

||||

|

||||

显示控制台字体

|

||||

*显示控制台字体*

|

||||

|

||||

如果你的 Linux 发行版没有 “console-setup”,你可以从[**这里**][12]获取它。

|

||||

如果你的 Linux 发行版没有 `console-setup`,你可以从[这里][12]获取它。

|

||||

|

||||

在使用 **Systemd** 的 Linux 发行版上,你可以通过编辑 **“/etc/vconsole.conf”** 来更改控制台字体。

|

||||

在使用 Systemd 的 Linux 发行版上,你可以通过编辑 `/etc/vconsole.conf` 来更改控制台字体。

|

||||

|

||||

以下是德语键盘的示例配置。

|

||||

|

||||

@ -115,7 +116,7 @@ via: https://www.ostechnix.com/how-to-change-linux-console-font-type-and-size/

Author: [sk][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


@ -0,0 +1,85 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Serverless on Kubernetes, diverse automation, and more industry trends)
[#]: via: (https://opensource.com/article/19/8/serverless-kubernetes-and-more)
[#]: author: (Tim Hildred https://opensource.com/users/thildred)


Serverless on Kubernetes, diverse automation, and more industry trends

|

||||

======

|

||||

A weekly look at open source community and industry trends.

|

||||

![Person standing in front of a giant computer screen with numbers, data][1]

|

||||

|

||||

As part of my role as a senior product marketing manager at an enterprise software company with an open source development model, I publish a regular update about open source community, market, and industry trends for product marketers, managers, and other influencers. Here are five articles from that update that they and I liked best.

|

||||

|

||||

## [10 tips for creating robust serverless components][2]

|

||||

|

||||

> There are some repeated patterns that we have seen after creating 20+ serverless components. We recommend that you browse through the [available component repos on GitHub][3] and check which one is close to what you’re building. Just open up the repo and check the code and see how everything fits together.

|

||||

>

|

||||

> All component code is open source, and we are striving to keep it clean, simple and easy to follow. After you look around you’ll be able to understand how our core API works, how we interact with external APIs, and how we are reusing other components.

|

||||

|

||||

**The impact**: Serverless Inc is striving to take probably the most hyped architecture early on in the hype cycle and make it usable and practical today. For serverless to truly go mainstream, producing something useful has to be as easy for a developer as "Hello world!," and these components are a step in that direction.

|

||||

|

||||

## [Kubernetes workloads in the serverless era: Architecture, platforms, and trends][4]

|

||||

|

||||

> There are many fascinating elements of the Kubernetes architecture: the containers providing common packaging, runtime and resource isolation model within its foundation; the simple control loop mechanism that monitors the actual state of components and reconciles this with the desired state; the custom resource definitions. But the true enabler for extending Kubernetes to support diverse workloads is the concept of the pod.

|

||||

>

|

||||

> A pod provides two sets of guarantees. The deployment guarantee ensures that the containers of a pod are always placed on the same node. This behavior has some useful properties such as allowing containers to communicate synchronously or asynchronously over localhost, over inter-process communication ([IPC][5]), or using the local file system.

|

||||

|

||||

**The impact**: If developer adoption of serverless architectures is largely driven by how easily they can be productive working that way, business adoption will be driven by the ability to place this trend in the operational and business context. IT decision-makers need to see a holistic picture of how serverless adds value alongside their existing investments, and operators and architects need to envision how they'll keep it all up and running.

|

||||

|

||||

## [How developers can survive the Last Mile with CodeReady Workspaces][6]

|

||||

|

||||

> Inside each cloud provider, a host of tools can address CI/CD, testing, monitoring, backing up and recovery problems. Outside of those providers, the cloud native community has been hard at work cranking out new tooling from [Prometheus][7], [Knative][8], [Envoy][9] and [Fluentd][10], to [Kubernetes][11] itself and the expanding ecosystem of Kubernetes Operators.

|

||||

>

|

||||

> Within all of those projects, cloud-based services and desktop utilities is one major gap, however: the last mile of software development is the IDE. And despite the wealth of development projects inside the community and Cloud Native Computing Foundation, it is indeed the Eclipse Foundation, as mentioned above, that has taken on this problem with a focus on the new cloud development landscape.

|

||||

|

||||

**The impact**: Increasingly complex development workflows and deployment patterns call for increasingly intelligent IDEs. While I'm sure it is possible to push a button and redeploy your microservices to a Kubernetes cluster from emacs (or vi, relax), Eclipse Che (and CodeReady Workspaces) are being built from the ground up with these types of cloud-native workflows in mind.

|

||||

|

||||

## [Automate security in increasingly complex hybrid environments][12]

|

||||

|

||||

> According to the [Information Security Forum][13]’s [Global Security Threat Outlook for 2019][14], one of the biggest IT trends to watch this year is the increasing sophistication of cybercrime and ransomware. And even as the volume of ransomware attacks is dropping, cybercriminals are finding new, more potent ways to be disruptive. An [article in TechRepublic][15] points to cryptojacking malware, which enables someone to hijack another's hardware without permission to mine cryptocurrency, as a growing threat for enterprise networks.

|

||||

>

|

||||

> To more effectively mitigate these risks, organizations could invest in automation as a component of their security plans. That’s because it takes time to investigate and resolve issues, in addition to applying controlled remediations across bare metal, virtualized systems, and cloud environments -- both private and public -- all while documenting changes.

|

||||

|

||||

**The impact**: This one is really about our ability to trust that the network service providers that we rely upon to keep our phones and smart TVs full of stutter-free streaming HD content have what they need to protect the infrastructure that makes it all possible. I for one am rooting for you!

|

||||

|

||||

## [AnsibleFest 2019 session catalog][16]

|

||||

|

||||

> 85 Ansible automation sessions over 3 days in Atlanta, Georgia

|

||||

|

||||

**The impact**: What struck me is the range of things that can be automated with Ansible. Windows? Check. Multicloud? Check. Security? Check. The real question after those three days are over will be: Is there anything in IT that can't be automated with Ansible? Seriously, I'm asking, let me know.

|

||||

|

||||

_I hope you enjoyed this list of what stood out to me from last week and come back next Monday for more open source community, market, and industry trends._

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/8/serverless-kubernetes-and-more

|

||||

|

||||

Author: [Tim Hildred][a]
Topic selection: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]: https://opensource.com/users/thildred

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/data_metrics_analytics_desktop_laptop.png?itok=9QXd7AUr (Person standing in front of a giant computer screen with numbers, data)

|

||||

[2]: https://serverless.com/blog/10-tips-creating-robust-serverless-components/

|

||||

[3]: https://github.com/serverless-components/

|

||||

[4]: https://www.infoq.com/articles/kubernetes-workloads-serverless-era/

|

||||

[5]: https://opensource.com/article/19/4/interprocess-communication-linux-networking

|

||||

[6]: https://thenewstack.io/how-developers-can-survive-the-last-mile-with-codeready-workspaces/

|

||||

[7]: https://prometheus.io/

|

||||

[8]: https://knative.dev/

|

||||

[9]: https://www.envoyproxy.io/

|

||||

[10]: https://www.fluentd.org/

|

||||

[11]: https://kubernetes.io/

|

||||

[12]: https://www.redhat.com/en/blog/automate-security-increasingly-complex-hybrid-environments

|

||||

[13]: https://www.securityforum.org/

|

||||

[14]: https://www.prnewswire.com/news-releases/information-security-forum-forecasts-2019-global-security-threat-outlook-300757408.html

|

||||

[15]: https://www.techrepublic.com/article/top-4-security-threats-businesses-should-expect-in-2019/

|

||||

[16]: https://agenda.fest.ansible.com/sessions

|

||||

@ -0,0 +1,64 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Breakthroughs bring a quantum Internet closer)
[#]: via: (https://www.networkworld.com/article/3432509/breakthroughs-bring-a-quantum-internet-closer.html)
[#]: author: (Patrick Nelson https://www.networkworld.com/author/Patrick-Nelson/)


Breakthroughs bring a quantum Internet closer

|

||||

======

|

||||

Universities around the world are making discoveries that advance technologies needed to underpin quantum computing.

|

||||

![Getty Images][1]

|

||||

|

||||

Breakthroughs in the manipulation of light are making it more likely that we will, in due course, be seeing a significantly faster and more secure Internet. Adoption of optical circuits in chips, for example, to be driven by [quantum technologies][2] could be just around the corner.

|

||||

|

||||

Physicists at the Technical University of Munich (TUM) have just announced a dramatic leap forward in the methods used to accurately place light sources in atom-thin layers. That fine positioning has been one block in the movement towards quantum chips.

|

||||

|

||||

[See who's creating quantum computers][3]

|

||||

|

||||

“Previous circuits on chips rely on electrons as the information carriers,” [the school explains in a press release][4]. However, by using light instead, it's possible to send data at the faster speed of light, gain power-efficiencies and take advantage of quantum entanglement, where the data is positioned in multiple states in the circuit, all at the same time.

|

||||

|

||||

Roughly, quantum entanglement is highly secure because eavesdropping attempts can be spotted immediately anywhere along a circuit, thanks to the always-intertwined parts, and the keys can be shut down automatically at the same time, cutting off the eavesdropper's visibility.

|

||||

|

||||

The school says its light-source-positioning technique, using a three-atom-thick layer of the semiconductor molybdenum disulfide (MoS2) as the initial material and then irradiating it with a helium ion beam, controls the positioning of the light source better, in a chip, than has been achieved before.

|

||||

|

||||

They say that the precision now opens the door to quantum sensor chips for smartphones, and also “new encryption technologies for data transmission.” Any smartphone sensor also has applications in IoT.

|

||||

|

||||

The TUM quantum-electronics breakthrough is just one announced in the last few weeks. Scientists at Osaka University say they've figured out a way to translate information encoded in a laser beam into the spin state of an electron in a quantum dot. They explain, [in their release][5], that they solve an issue where entangled states can be extremely fragile, in other words, petering out and not lasting for the required length of transmission. Roughly, they explain that their invention allows electron spins in distant, terminus computers to interact better with the quantum-data-carrying light signals.

|

||||

|

||||

“The achievement represents a major step towards a ‘quantum internet,’” the university says.

|

||||

|

||||

“There are those who think all computers, and other electronics, will eventually be run on light and forms of photons, and that we will see a shift to all-light,” [I wrote earlier this year][6].

|

||||

|

||||

That movement is not slowing. Unrelated to the aforementioned quantum-based light developments, we’re also seeing a light-based thrust that can be used in regular electronics too.

|

||||

|

||||

Engineers may soon be designing with small photon diodes (not traditional LEDs, which are also diodes) that would allow light to flow in one direction only, [says Stanford University in a press release][7]. They are using materials science and have figured out a way to trap light in nano-sized silicon. A diode is basically a valve that stops an electrical circuit from running in reverse. Light-based diodes that enforce direction haven't been available in small footprints, such as would be needed in smartphone-sized form factors or IoT sensing, for example.

|

||||

|

||||

“One grand vision is to have an all-optical computer where electricity is replaced completely by light and photons drive all information processing,” Mark Lawrence of Stanford says. “The increased speed and bandwidth of light would enable faster solutions.”

|

||||

|

||||

Join the Network World communities on [Facebook][8] and [LinkedIn][9] to comment on topics that are top of mind.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3432509/breakthroughs-bring-a-quantum-internet-closer.html

|

||||

|

||||

Author: [Patrick Nelson][a]
Topic selection: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]: https://www.networkworld.com/author/Patrick-Nelson/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.idgesg.net/images/article/2018/08/3_nodes-and-wires_servers_hardware-100769198-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3275367/what-s-quantum-computing-and-why-enterprises-need-to-care.html

|

||||

[3]: https://www.networkworld.com/article/3275385/who-s-developing-quantum-computers.html

|

||||

[4]: https://www.tum.de/nc/en/about-tum/news/press-releases/details/35627/

|

||||

[5]: https://resou.osaka-u.ac.jp/en/research/2019/20190717_1

|

||||

[6]: https://www.networkworld.com/article/3338081/light-based-computers-to-be-5000-times-faster.html

|

||||

[7]: https://news.stanford.edu/2019/07/24/developing-technologies-run-light/

|

||||

[8]: https://www.facebook.com/NetworkWorld/

|

||||

[9]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,88 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (The cloud isn't killing open source software)
[#]: via: (https://opensource.com/article/19/8/open-source-licensing)
[#]: author: (Peter Zaitsev https://opensource.com/users/peter-zaitsev)


The cloud isn't killing open source software

|

||||

======

|

||||

How the cloud motivates open source businesses to evolve quickly.

|

||||

![Globe up in the clouds][1]

|

||||

|

||||

Over the last few months, I participated in two keynote panels where people asked questions about open source licensing:

|

||||

|

||||

* Do we need to redefine what open source means in the age of the cloud?

|

||||

* Are cloud vendors abusing open source?

|

||||

* Will open source, as we know it, survive?

|

||||

|

||||

|

||||

|

||||

Last year was the most eventful in my memory for the usually very conservative open source licensing space:

|

||||

|

||||

* [Elastic][2] and [Confluent][3] introduced their own licenses for a portion of their stack.

|

||||

* [Redis Labs][4] changed its license for some extensions by adding "Commons Clause," then changed the entire license a few months later.

|

||||

* [MongoDB][5] famously proposed a new license called Server-Side Public License (SSPL) to the Open Source Initiative (OSI) for approval, only to [retract][6] the proposal before the OSI had an opportunity to reach a decision. Many in the open source community regarded SSPL as failing to meet the standards of open source licenses. As a result, MongoDB is under a license that can be described as "[source-available][7]" but not open source, given that it has not been approved by the OSI.

|

||||

|

||||

|

||||

|

||||

### Competition in the cloud

|

||||

|

||||

The most common reason given for software vendors making these changes is "foul play" by cloud vendors. The argument is that cloud vendors unfairly offer open source software "as a service," capturing large portions of the revenue, while the original software vendor continues to carry most of the development costs. Market rumors claim Amazon Web Services (AWS) makes more revenue from MySQL than Oracle, which owns the product.

|

||||

|

||||

So, who is claiming foul play is destroying the open source ecosystem? Typically, the loudest voices are venture-funded open source software companies. These companies require a very high growth rate to justify their hefty valuation, so it makes sense that they would prefer not to worry about additional competition.

|

||||

|

||||

But I reject this argument. If you have an open source license for your software, you need to accept the benefits and drawbacks that go along with it. Besides, you are likely to have a much faster and larger adoption rate partly because other businesses, large and small, can make money from your software. You need to accept and even expect competition from these businesses.

|

||||

|

||||

In simple terms, there will be a larger cake, but you will only get a slice of it. If you want a bigger slice of that cake, you can choose a proprietary license for all or some of your software (the latter is often called "open core"). Or, you can choose more or less permissive open source licensing. Choosing the right mix and adapting it as time goes by is critical for the success of businesses that produce open source software.

|

||||

|

||||

### Open source communities

|

||||

|

||||

But what about software users and the open source communities that surround these projects? These groups generally love to see their software available from cloud vendors, for example, database-as-a-service (DBaaS), as it makes the software much easier to access and gives users more choices than ever. This can have a very positive impact on the community. For example, the adoption of PostgreSQL, which was not easy to use, was dramatically boosted by its availability on Heroku and then as DBaaS on major cloud vendors.

|

||||

|

||||

Another criticism leveled at cloud vendors is that they do not support open source communities. This is partly due to their reluctance to share software code. They do, however, contribute significantly to the community by pushing the boundaries of usability, and more and more, we see examples of cloud vendors contributing code. AWS, which gets most of the criticism, has multiple [open source projects][8] and contributes to other projects. Amazon [contributed Encryption in Transit to Redis][9] and recently released [Open Distro for Elasticsearch][10], which provides open source equivalents for many features not available in the open source version of the Elastic platform.

|

||||

|

||||

### Open source now and in the future

|

||||

|

||||

So, while open source companies impacted by cloud vendors continue to argue that such competition can kill their business—and consequently kill open source projects—this argument is misguided. Competition is not new. Weaker companies that fail to adjust to these new business realities may fail. Other companies will thrive or be acquired by stronger players. This process generally leads to better products and more choice.

|

||||

|

||||

This is especially true for open source software, which, unlike proprietary software, cannot be wiped out by a company's failure. Once released, open source code is _always_ open (you can only change the license for new releases), so everyone can exercise the right to fork and continue development if there is demand.

|

||||

|

||||

So, I believe open source software is working exactly as intended.

|

||||

|

||||

Some businesses attempt to balance open and proprietary software licenses and are now changing to restrictive licenses. Time will tell whether this will protect them or result in their users seeking a more open alternative.

|

||||

|

||||

But, what about "source-available" licenses? This is a new category and another option for software vendors and users. However, it can be confusing. The source-available category is not well defined. Some people even refer to this software as open source, as you can browse the source code on GitHub. When source-available code is mixed in with truly open source components in the same product, it can be problematic. If issues arise, they could damage the reputation of the open source software and even expose the user to potential litigation. I hope that standardized source-available licenses will be developed and adopted by software vendors, as was the case with open source licenses.

|

||||

|

||||

At [Percona][11], we find ourselves in a unique position. We have spent years using the freedom of open source to develop better versions of existing software, with enhanced features, at no cost to our users. Percona Server for MySQL is as open as MySQL Community Edition but has many of the enhanced features available in MySQL Enterprise as well as additional benefits. This also applies to Percona Server for MongoDB. So, we compete with MongoDB and Oracle, while also being thankful for the amazing engineering work they are doing.

|

||||

|

||||

We also compete with DBaaS on other cloud vendors. DBaaS is a great choice for smaller companies that aren't worried about vendor lock-in. It offers superb value without huge costs and is a great choice for some customers. This rivalry is sometimes unpleasant, but it is ultimately fair, and the competition pushes us to be a better company.

|

||||

|

||||

In summary, there is no need to panic! The cloud is not going to kill open source software, but it should motivate open source software businesses to adjust and evolve their operations. It is clear that agility will be key, and businesses that can take advantage of new developments and adapt to changing market conditions will be more successful. The final result is likely to be more open software and also more non-open source software, all operating under a variety of licenses.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/8/open-source-licensing

|

||||

|

||||

Author: [Peter Zaitsev][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/peter-zaitsev

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/cloud-globe.png?itok=_drXt4Tn (Globe up in the clouds)

|

||||

[2]: https://www.elastic.co/guide/en/elastic-stack-overview/current/license-management.html

|

||||

[3]: https://www.confluent.io/blog/license-changes-confluent-platform

|

||||

[4]: https://redislabs.com/blog/redis-labs-modules-license-changes/

|

||||

[5]: https://www.mongodb.com/licensing/server-side-public-license

|

||||

[6]: http://lists.opensource.org/pipermail/license-review_lists.opensource.org/2019-March/003989.html

|

||||

[7]: https://en.wikipedia.org/wiki/Source-available_software

|

||||

[8]: https://aws.amazon.com/opensource/

|

||||

[9]: https://aws.amazon.com/blogs/opensource/open-sourcing-encryption-in-transit-redis/

|

||||

[10]: https://aws.amazon.com/blogs/opensource/keeping-open-source-open-open-distro-for-elasticsearch/

|

||||

[11]: https://www.percona.com/

|

||||

@ -1,222 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (tomjlw)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Copying files in Linux)

|

||||

[#]: via: (https://opensource.com/article/19/8/copying-files-linux)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

Copying files in Linux

|

||||

======

|

||||

Learn multiple ways to copy files on Linux, and the advantages of each.

|

||||

![Filing papers and documents][1]

|

||||

|

||||

Copying documents used to require a dedicated staff member in offices, and then a dedicated machine. Today, copying is a task computer users do without a second thought. Copying data on a computer is so trivial that copies are made without you realizing it, such as when dragging a file to an external drive.

|

||||

|

||||

The concept that digital entities are trivial to reproduce is pervasive, so most modern computerists don’t think about the options available for duplicating their work. And yet, there are several different ways to copy a file on Linux. Each method has nuanced features that might benefit you, depending on what you need to get done.

|

||||

|

||||

Here are a number of ways to copy files on Linux, BSD, and Mac.

|

||||

|

||||

### Copying in the GUI

|

||||

|

||||

As with most operating systems, you can do all of your file management in the GUI, if that's the way you prefer to work.

|

||||

|

||||

#### Drag and drop

|

||||

|

||||

The most obvious way to copy a file is the way you’re probably used to copying files on computers: drag and drop. On most Linux desktops, dragging and dropping from one local folder to another local folder _moves_ a file by default. You can change this behavior to a copy operation by holding down the **Ctrl** key after you start dragging the file.

|

||||

|

||||

Your cursor may show an indicator, such as a plus sign, to show that you are in copy mode:

|

||||

|

||||

![Copying a file.][2]

|

||||

|

||||

Note that if the file exists on a remote system, whether it’s a web server or another computer on your own network that you access through a file-sharing protocol, the default action is often to copy, not move, the file.

|

||||

|

||||

#### Right-click

|

||||

|

||||

If you find dragging and dropping files around your desktop imprecise or clumsy, or doing so takes your hands away from your keyboard too much, you can usually copy a file using the right-click menu. This possibility depends on the file manager you use, but generally, a right-click produces a contextual menu containing common actions.

|

||||

|

||||

The contextual menu copy action stores the [file path][3] (where the file exists on your system) in your clipboard so you can then _paste_ the file somewhere else:

|

||||

|

||||

![Copying a file from the context menu.][4]

|

||||

|

||||

In this case, you’re not actually copying the file’s contents to your clipboard. Instead, you're copying the [file path][3]. When you paste, your file manager looks at the path in your clipboard and then runs a copy command, copying the file located at that path to the path you are pasting into.

|

||||

|

||||

### Copying on the command line

|

||||

|

||||

While the GUI is a generally familiar way to copy files, copying in a terminal can be more efficient.

|

||||

|

||||

#### cp

|

||||

|

||||

The obvious terminal-based equivalent to copying and pasting a file on the desktop is the **cp** command. This command copies files and directories and is relatively straightforward. It uses the familiar _source_ and _target_ (strictly in that order) syntax, so to copy a file called **example.txt** into your **Documents** directory:

|

||||

|

||||

|

||||

```
$ cp example.txt ~/Documents
```

|

||||

|

||||

Just like when you drag and drop a file onto a folder, this action doesn’t replace **Documents** with **example.txt**. Instead, **cp** detects that **Documents** is a folder, and places a copy of **example.txt** into it.

|

||||

|

||||

You can also, conveniently (and efficiently), rename the file as you copy it:

|

||||

|

||||

|

||||

```
$ cp example.txt ~/Documents/example_copy.txt
```

|

||||

|

||||

That fact is important because it allows you to make a copy of a file in the same directory as the original:

|

||||

|

||||

|

||||

```
$ cp example.txt example.txt
cp: 'example.txt' and 'example.txt' are the same file.
$ cp example.txt example_copy.txt
```

|

||||

|

||||

To copy a directory, you must use the **-r** option, which stands for --**recursive**. This option runs **cp** on the directory _inode_, and then on all files within the directory. Without the **-r** option, **cp** doesn’t even recognize a directory as an object that can be copied:

|

||||

|

||||

|

||||

```
$ cp notes/ notes-backup
cp: -r not specified; omitting directory 'notes/'
$ cp -r notes/ notes-backup
```
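These **cp** behaviors are easy to try out safely in a throwaway directory. The following is only a minimal sketch: the scratch directory and the names `docs`, `notes`, and `todo.txt` are made up for illustration.

```shell
# Work in a throwaway directory so nothing real is touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Hypothetical sample data.
echo "hello" > example.txt
mkdir docs notes
echo "buy milk" > notes/todo.txt

cp example.txt docs                    # target is a directory: the copy lands inside it
cp example.txt docs/example_copy.txt   # target is a file name: copy and rename in one step
cp -r notes notes-backup               # -r is required to copy a directory

ls docs notes-backup
```

Because everything happens under a directory created by `mktemp -d`, you can delete it afterward without worrying about your real files.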

|

||||

|

||||

#### cat

|

||||

|

||||

The **cat** command is one of the most misunderstood commands, but only because it exemplifies the extreme flexibility of a [POSIX][5] system. Among everything else **cat** does (including its intended purpose of con_cat_enating files), it can also copy. For instance, with **cat** you can [create two copies from one file][6] with just a single command. You can’t do that with **cp**.
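One way to get two copies from a single command line is to pipe **cat** into **tee**, which writes its input to a file and also passes it along on standard output. This is an assumption about the approach (the linked article may do it differently), and the file names are hypothetical:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
echo "original data" > example.txt   # hypothetical sample file

# tee writes the stream to copy1.txt; the redirect sends the same stream to copy2.txt.
cat example.txt | tee copy1.txt > copy2.txt

cmp example.txt copy1.txt && cmp example.txt copy2.txt && echo "both copies match"
```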

|

||||

|

||||

The significance of using **cat** to copy a file is the way the system interprets the action. When you use **cp** to copy a file, the new file takes its permission bits from the original (other attributes, such as timestamps, are preserved only if you ask for them with **-p**). That means that the file permissions of the duplicate are the same as the original:

|

||||

|

||||

|

||||

```
$ ls -l -G -g
-rw-r--r--. 1 57368 Jul 25 23:57 foo.jpg
$ cp foo.jpg bar.jpg
$ ls -l -G -g
-rw-r--r--. 1 57368 Jul 29 13:37 bar.jpg
-rw-r--r--. 1 57368 Jul 25 23:57 foo.jpg
```

|

||||

|

||||

Using **cat** to read the contents of a file into another file, however, invokes a system call to create a new file. These new files are subject to your default **umask** settings. To learn more about `umask`, read Alex Juarez’s article covering [umask][7] and permissions in general.

|

||||

|

||||

Run **umask** to get the current settings:

|

||||

|

||||

|

||||

```
$ umask
0002
```

|

||||

|

||||

This setting means that new files created in this location are granted **664** (**rw-rw-r--**) permission because nothing is masked by the first digits of the **umask** setting (and the executable bit is not a default bit for file creation), and the write permission is blocked by the final digit.
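You can check the arithmetic behind that result in the shell. This sketch assumes the usual requested mode of 666 for newly created regular files and simply clears whichever bits the umask sets:

```shell
base=0666   # mode normally requested for new regular files (no execute bits)
mask=0002   # the umask value shown above

# Clear every bit that is set in the mask, then print the result in octal.
mode=$(printf '%o' $(( $base & ~$mask )))
echo "$mode"   # prints 664
```

Swap in your own **umask** value for `mask` to see what permissions your new files get.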

|

||||

|

||||

When you copy with **cat**, you don’t actually copy the file. You use **cat** to read the contents of the file, and then redirect the output into a new file:

|

||||

|

||||

|

||||

```
$ cat foo.jpg > baz.jpg
$ ls -l -G -g
-rw-r--r--. 1 57368 Jul 29 13:37 bar.jpg
-rw-rw-r--. 1 57368 Jul 29 13:42 baz.jpg
-rw-r--r--. 1 57368 Jul 25 23:57 foo.jpg
```

|

||||

|

||||

As you can see, **cat** created a brand new file with the system’s default umask applied.
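The difference is easier to see when the original file has restrictive permissions. Here is a small sketch in a scratch directory; the file names are hypothetical, and the umask is pinned to 022 only so that the result is predictable:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
umask 022                      # fixed umask so the outcome is predictable

echo "secret" > private.txt    # hypothetical file
chmod 600 private.txt          # owner read/write only

cp private.txt via_cp.txt      # cp reuses the source file's permission bits
cat private.txt > via_cat.txt  # the shell creates a new file: 666 minus the umask

# Keep only the ten permission characters from the long listing.
perm_cp=$(ls -l via_cp.txt | cut -c1-10)
perm_cat=$(ls -l via_cat.txt | cut -c1-10)
echo "cp:  $perm_cp"    # -rw-------
echo "cat: $perm_cat"   # -rw-r--r--
```

Note that the **cat** copy is readable by other users even though the original was not, which may or may not be what you want.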

|

||||

|

||||

In the end, when all you want to do is copy a file, the technicalities often don’t matter. But sometimes you want to copy a file and end up with a default set of permissions, and with **cat** you can do it all in one command.

|

||||

|

||||

#### rsync

|

||||

|

||||

The **rsync** command is a versatile tool for copying files, with the notable ability to synchronize your source and destination. At its simplest, **rsync** can be used similarly to the **cp** command:

|

||||

|

||||

|

||||

```
$ rsync example.txt example_copy.txt
$ ls
example.txt example_copy.txt
```

|

||||

|

||||

The command’s true power lies in its ability to _not_ copy when it’s not necessary. If you use **rsync** to copy a file into a directory, but that file already exists in that directory, then **rsync** doesn’t bother performing the copy operation. Locally, that fact doesn’t necessarily mean much, but if you’re copying gigabytes of data to a remote server, this feature makes a world of difference.
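By default, **rsync** decides whether a file needs copying with a quick check of its size and modification time. A rough way to watch this happen is to run the same sync twice and ask for an itemized list of changes with **-i** (**\--itemize-changes**) on the second run. The directory and file names below are hypothetical, and the sketch does nothing if **rsync** is not installed:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir src dst
echo "payload" > src/data.txt   # hypothetical file

if command -v rsync >/dev/null 2>&1; then
    rsync -a src/ dst/                 # first run: data.txt is transferred
    second=$(rsync -ai src/ dst/)      # second run: collect the itemized change list
    echo "changes on second run: ${second:-none}"
else
    echo "rsync is not installed"
fi
```

On the second run there should normally be nothing to report, because the destination already matches the source.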

|

||||

|

||||

What does make a difference even locally, though, is the command’s ability to differentiate files that share the same name but which contain different data. If you’ve ever found yourself faced with two copies of what is meant to be the same directory, then **rsync** can synchronize them into one directory containing the latest changes from each. This setup is a pretty common occurrence in industries that haven’t yet discovered the magic of version control, and for backup solutions in which there is one source of truth to propagate.

|

||||

|

||||

You can emulate this situation intentionally by creating two folders, one called **example** and the other **example_dupe**:

|

||||

|

||||

|

||||

```
$ mkdir example example_dupe
```

|

||||

|

||||

Create a file in the first folder:

|

||||

|

||||

|

||||

```
$ echo "one" > example/foo.txt
```

|

||||

|

||||

Use **rsync** to synchronize the two directories. The most common options for this operation are **-a** (for _archive_, which ensures symlinks and other special files are preserved) and **-v** (for _verbose_, providing feedback to you on the command’s progress):

|

||||

|

||||

|

||||

```
$ rsync -av example/ example_dupe/
```

|

||||

|

||||

The directories now contain the same information:

|

||||

|

||||

|

||||

```
$ cat example/foo.txt
one
$ cat example_dupe/foo.txt
one
```

|

||||

|

||||

If the file you are treating as the source diverges, then the target is updated to match:

|

||||

|

||||

|

||||

```
$ echo "two" >> example/foo.txt
$ rsync -av example/ example_dupe/
$ cat example_dupe/foo.txt
one
two
```

|

||||

|

||||

Keep in mind that the **rsync** command is meant to copy data, not to act as a version control system. For instance, if a file in the destination somehow gets ahead of a file in the source, that file is still overwritten because **rsync** compares files for divergence and assumes that the destination is always meant to mirror the source:

|

||||

|

||||

|

||||

```
$ echo "You will never see this note again" > example_dupe/foo.txt
$ rsync -av example/ example_dupe/
$ cat example_dupe/foo.txt
one
two
```
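If overwriting newer files in the destination is not what you want, the **\--update** (**-u**) option tells **rsync** to skip any file whose destination copy has a newer modification time than the source. A sketch, using hypothetical directory names and `touch -t` to force an old timestamp onto the source file:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir src dst
echo "old source text" > src/foo.txt
touch -t 202001010000 src/foo.txt     # pretend the source was last edited in 2020
echo "newer note" > dst/foo.txt       # destination copy modified just now

if command -v rsync >/dev/null 2>&1; then
    rsync -a --update src/ dst/       # -u: leave files that are newer on the receiver
fi
cat dst/foo.txt                       # prints "newer note"
```

This is still not version control: **rsync** only compares timestamps here, it does not merge anything.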

|

||||

|

||||

If there is no change, then no copy occurs.

|

||||

|

||||

The **rsync** command has many options not available in **cp**, such as the ability to set target permissions, exclude files, delete outdated files that don’t appear in both directories, and much more. Use **rsync** as a powerful replacement for **cp**, or just as a useful supplement.
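As a taste of those extra options, this sketch combines **\--exclude** and **\--delete**, previewing the result first with **-n** (**\--dry-run**). The directory layout and file names are invented for the example, and the block does nothing if **rsync** is not installed:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir src dst
echo "keep"    > src/report.txt
echo "scratch" > src/cache.tmp
echo "stale"   > dst/obsolete.txt     # exists only in the destination

if command -v rsync >/dev/null 2>&1; then
    # Preview first: -n (--dry-run) lists what would happen without changing anything.
    rsync -avn --delete --exclude='*.tmp' src/ dst/
    # Then run it for real.
    rsync -a --delete --exclude='*.tmp' src/ dst/
    ls dst                            # only report.txt remains
fi
```

Running with **-n** before any **\--delete** sync is a cheap habit that can save you from removing files you meant to keep.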

|

||||

|

||||

### Many ways to copy

|

||||

|

||||

There are many ways to achieve essentially the same outcome on a POSIX system, so it seems that open source’s reputation for flexibility is well earned. Have I missed a useful way to copy data? Share your copy hacks in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/8/copying-files-linux

|

||||

|

||||

Author: [Seth Kenlon][a]
Topic selection: [lujun9972][b]
Translator: [tomjlw](https://github.com/tomjlw)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/documents_papers_file_storage_work.png?itok=YlXpAqAJ (Filing papers and documents)

|

||||

[2]: https://opensource.com/sites/default/files/uploads/copy-nautilus.jpg (Copying a file.)

|

||||

[3]: https://opensource.com/article/19/7/understanding-file-paths-and-how-use-them

|

||||

[4]: https://opensource.com/sites/default/files/uploads/copy-files-menu.jpg (Copying a file from the context menu.)

|

||||

[5]: https://opensource.com/article/19/7/what-posix-richard-stallman-explains

|

||||

[6]: https://opensource.com/article/19/2/getting-started-cat-command

|

||||

[7]: https://opensource.com/article/19/7/linux-permissions-101

|

||||

@ -1,105 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (How to Reinstall Ubuntu in Dual Boot or Single Boot Mode)

|

||||

[#]: via: (https://itsfoss.com/reinstall-ubuntu/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

How to Reinstall Ubuntu in Dual Boot or Single Boot Mode

|

||||

======

|

||||

|

||||

If you have messed up your Ubuntu system and, after trying numerous ways to fix it, you finally give up, you can take the easy way out: reinstall Ubuntu.

|

||||

|

||||

We have all been in a situation where reinstalling Linux seems a better idea than trying to troubleshoot and fix the issue for good. Troubleshooting a Linux system teaches you a lot, but you cannot always afford to spend more time fixing a broken system.

|

||||

|

||||

As far as I know, Ubuntu has no Windows-like recovery drive system. So the question arises: how do you reinstall Ubuntu? Let me show you how.

|

||||

|

||||

Warning!

|

||||

|

||||

Playing with disk partitions is always a risky task. I strongly recommend making a backup of your data on an external disk.

|

||||

|

||||

### How to reinstall Ubuntu Linux

|

||||

|

||||

![][1]

|

||||

|

||||

Here are the steps to follow for reinstalling Ubuntu.

|

||||

|

||||

#### Step 1: Create a live USB

|

||||

|

||||

First, download Ubuntu from its website. You can download [whichever Ubuntu version][2] you want to use.

|

||||

|

||||

[Download Ubuntu][3]

|

||||

|

||||

Once you have got the ISO image, it’s time to create a live USB from it. If your Ubuntu system is still accessible, you can create a live disk using the startup disk creator tool provided by Ubuntu.

|

||||

|

||||

If you cannot access your Ubuntu system, you’ll have to use another system. You can refer to this article to learn [how to create live USB of Ubuntu in Windows][4].

|

||||

|

||||

#### Step 2: Reinstall Ubuntu

|

||||

|

||||

Once you have the live USB of Ubuntu, plug it in and reboot your system. At boot time, press the F2, F10, or F12 key to go into the BIOS settings and make sure that the Boot from Removable Devices/USB option is at the top of the boot order. Save and exit the BIOS. This will allow you to boot into the live USB.

|

||||

|

||||

Once you are in the live USB, choose to install Ubuntu. You’ll get the usual option for choosing your language and keyboard layout. You’ll also get the option to download updates etc.

|

||||

|

||||

![Go ahead with regular installation option][5]

|

||||

|

||||

The important step comes now. You should see an “Installation Type” screen. What you see on your screen here depends heavily on how Ubuntu sees the disk partitioning and installed operating systems on your system.

|

||||

|

||||

Suggested read: [How to Update Ubuntu Linux [Beginner's Tip]][6]

|

||||

|

||||

Be very careful when reading the options and their details at this step. Pay attention to what each option says. The screen options may look different on different systems.

|

||||

|

||||

![Reinstall Ubuntu option in dual boot mode][7]

|

||||

|

||||

In my case, it finds that I have Ubuntu 18.04.2 and Windows installed on my system and it gives me a few options.

|

||||

|

||||

The first option here is to erase Ubuntu 18.04.2 and reinstall it. It tells me that it will delete my personal data but it says nothing about deleting all the operating systems (i.e. Windows).

|

||||

|

||||

If you are super lucky or in single boot mode, you may see a “Reinstall Ubuntu” option. This option keeps your existing data and even tries to keep the installed software. If you see this option, you should go for it.

|

||||

|

||||

Attention for Dual Boot System

|

||||

|

||||

If you are dual booting Ubuntu and Windows and, during the reinstall, the Ubuntu installer doesn’t see Windows, you must choose the “Something else” option and install Ubuntu from there. I have described the [process of reinstalling Linux in dual boot in this tutorial][8].

|

||||

|

||||

For me, there was no option to reinstall and keep the data, so I went for the “Erase Ubuntu and reinstall” option. This will install Ubuntu afresh even if it is in dual boot mode with Windows.

|

||||

|

||||

Reinstalling is the reason I recommend using separate partitions for root and home. With that, you can keep the data in your home partition safe even if you reinstall Linux. I have already demonstrated it in this video:

|

||||

|

||||

Once you have chosen the reinstall Ubuntu option, the rest of the process is just clicking next. Select your location and when asked, create your user account.

|

||||

|

||||

![Just go on with the installation options][9]

|

||||

|

||||

Once the procedure finishes, you’ll have your Ubuntu reinstalled afresh.

|

||||

|

||||

In this tutorial, I have assumed that you know the basics because you already had Ubuntu installed before. If you need clarification at any step, please feel free to ask in the comment section.

|

||||

|

||||

Suggested read: [How To Fix No Wireless Network In Ubuntu][10]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/reinstall-ubuntu/

|

||||

|

||||

Author: [Abhishek Prakash][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/08/Reinstall-Ubuntu.png?resize=800%2C450&ssl=1

|

||||

[2]: https://itsfoss.com/which-ubuntu-install/

|

||||

[3]: https://ubuntu.com/download/desktop

|

||||

[4]: https://itsfoss.com/create-live-usb-of-ubuntu-in-windows/

|

||||

[5]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/08/reinstall-ubuntu-1.jpg?resize=800%2C473&ssl=1

|

||||

[6]: https://itsfoss.com/update-ubuntu/

|

||||

[7]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/08/reinstall-ubuntu-dual-boot.jpg?ssl=1

|

||||

[8]: https://itsfoss.com/replace-linux-from-dual-boot/

|

||||

[9]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/08/reinstall-ubuntu-3.jpg?ssl=1

|

||||

[10]: https://itsfoss.com/fix-no-wireless-network-ubuntu/

|

||||

353

sources/tech/20190819 An introduction to bpftrace for Linux.md

Normal file

353

sources/tech/20190819 An introduction to bpftrace for Linux.md

Normal file

@ -0,0 +1,353 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (An introduction to bpftrace for Linux)

|

||||

[#]: via: (https://opensource.com/article/19/8/introduction-bpftrace)

|

||||

[#]: author: (Brendan Gregg https://opensource.com/users/brendang)

|

||||

|

||||

An introduction to bpftrace for Linux

|

||||

======

|

||||

New Linux tracer analyzes production performance problems and troubleshoots software.

|

||||

![Linux keys on the keyboard for a desktop computer][1]

|

||||

|

||||

Bpftrace is a new open source tracer for Linux for analyzing production performance problems and troubleshooting software. Its users and contributors include Netflix, Facebook, Red Hat, Shopify, and others, and it was created by [Alastair Robertson][2], a talented UK-based developer who has won various coding competitions.

|

||||

|

||||

Linux already has many performance tools, but they are often counter-based and have limited visibility. For example, [iostat(1)][3] or a monitoring agent may tell you your average disk latency, but not the distribution of this latency. Distributions can reveal multiple modes or outliers, either of which may be the real cause of your performance problems. [Bpftrace][4] is suited for this kind of analysis: decomposing metrics into distributions or per-event logs and creating new metrics for visibility into blind spots.

|

||||

|

||||

You can use bpftrace via one-liners or scripts, and it ships with many prewritten tools. Here is an example that traces the distribution of read latency for PID 30153 and shows it as a power-of-two histogram:

|

||||

|

||||

|

||||

```
# bpftrace -e 'kprobe:vfs_read /pid == 30153/ { @start[tid] = nsecs; }
kretprobe:vfs_read /@start[tid]/ { @ns = hist(nsecs - @start[tid]); delete(@start[tid]); }'
Attaching 2 probes...
^C

@ns:
[256, 512)         10900 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@                      |
[512, 1k)          18291 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@|
[1k, 2k)            4998 |@@@@@@@@@@@@@@                                      |
[2k, 4k)              57 |                                                    |
[4k, 8k)             117 |                                                    |
[8k, 16k)             48 |                                                    |
[16k, 32k)           109 |                                                    |
[32k, 64k)             3 |                                                    |
```

|

||||

|

||||

This example instruments one event out of thousands available. If you have some weird performance problem, there's probably some bpftrace one-liner that can shed light on it. For large environments, this ability can help you save millions. For smaller environments, it can be of more use in helping to eliminate latency outliers.

|

||||

|

||||

I [previously][5] wrote about bpftrace vs. other tracers, including [BCC][6] (BPF Compiler Collection). BCC is great for canned complex tools and agents. Bpftrace is best for short scripts and ad hoc investigations. In this article, I'll summarize the bpftrace language, variable types, probes, and tools.

|

||||

|

||||

Bpftrace uses BPF (Berkeley Packet Filter), an in-kernel execution engine that processes a virtual instruction set. BPF has been extended (aka eBPF) in recent years to provide a safe way to extend kernel functionality. It has also become a hot topic in systems engineering, with at least 24 talks on BPF at the last [Linux Plumbers Conference][7]. BPF is in the Linux kernel, and bpftrace is the best way to get started using BPF for observability.

|

||||

|

||||

See the bpftrace [INSTALL][8] guide for how to install it, and get the latest version; [0.9.2][9] was just released. For Kubernetes clusters, there is also [kubectl-trace][10] for running it.

|

||||

|

||||

### Syntax

|

||||

|

||||

|

||||

```
probe[,probe,...] /filter/ { action }
```

|

||||

|

||||

The probe specifies what events to instrument. The filter is optional and can filter down the events based on a boolean expression, and the action is the mini-program that runs.

|

||||

|

||||

Here's hello world:

|

||||

|

||||

|

||||

```
# bpftrace -e 'BEGIN { printf("Hello eBPF!\n"); }'
```

|

||||

|

||||

The probe is **BEGIN**, a special probe that runs at the beginning of the program (like awk). There's no filter. The action is a **printf()** statement.

|

||||

|

||||

Now a real example:

|

||||

|

||||

|

||||

```
# bpftrace -e 'kretprobe:sys_read /pid == 181/ { @bytes = hist(retval); }'
```

|

||||

|

||||

This uses a **kretprobe** to instrument the return of the **sys_read()** kernel function. If the PID is 181, the special map variable **@bytes** is populated by the log2 histogram function **hist()**, which is fed the return value **retval** of **sys_read()**. This produces a histogram of the returned read size for PID 181. Is your app doing lots of one-byte reads? Maybe that can be optimized.

|

||||

|

||||

### Probe types

|

||||

|

||||

These are libraries of related probes. The currently supported types are (more will be added):

|

||||

|

||||

Type | Description
---|---
**tracepoint** | Kernel static instrumentation points
**usdt** | User-level statically defined tracing
**kprobe** | Kernel dynamic function instrumentation
**kretprobe** | Kernel dynamic function return instrumentation
**uprobe** | User-level dynamic function instrumentation
**uretprobe** | User-level dynamic function return instrumentation
**software** | Kernel software-based events
**hardware** | Hardware counter-based instrumentation
**watchpoint** | Memory watchpoint events (in development)
**profile** | Timed sampling across all CPUs
**interval** | Timed reporting (from one CPU)
**BEGIN** | Start of bpftrace
**END** | End of bpftrace

|

||||

|

||||

Dynamic instrumentation (aka dynamic tracing) is the superpower that lets you trace any software function in a running binary without restarting it. This lets you get to the bottom of just about any problem. However, the functions it exposes are not considered a stable API, as they can change from one software version to another. Hence static instrumentation, where event points are hard-coded and become a stable API. When you write bpftrace programs, try to use the static types first, before the dynamic ones, so your programs are more stable.

|

||||

|

||||

### Variable types

|

||||

|

||||

Variable | Description
---|---
**@name** | global
**@name[key]** | hash
**@name[tid]** | thread-local
**$name** | scratch

|

||||

|

||||

Variables with an **@** prefix use BPF maps, which can behave like associative arrays. They can be populated in one of two ways:

|

||||

|

||||

  * Variable assignment: **@name = x;**
  * Function assignment: **@name = hist(x);**

|

||||

|

||||

|

||||

|

||||

Various map-populating functions are built in to provide quick ways to summarize data.

|

||||

|

||||

### Built-in variables and functions

|

||||

|

||||

Here are some of the built-in variables and functions, but there are many more.

|

||||

|

||||

**Built-in variables:**

|

||||

|

||||

Variable | Description
---|---
**pid** | Process ID
**comm** | Process or command name
**nsecs** | Current time in nanoseconds
**kstack** | Kernel stack trace
**ustack** | User-level stack trace
**arg0...argN** | Function arguments
**args** | Tracepoint arguments
**retval** | Function return value
**name** | Full probe name

|

||||

|

||||

**Built-in functions:**

|

||||

|

||||

Function | Description

|

||||

---|---

|

||||

**printf("...")** | Print formatted string

|

||||

**time("...")** | Print formatted time

|

||||

**system("...")** | Run shell command

|

||||

**@ = count()** | Count events

|

||||