Mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-01-28 23:20:10 +08:00

Commit 0ae98883d2

@ -14,7 +14,7 @@

### 1. Operating Systems

An operating system is just a very complicated program. Its job is to organize the other programs on a computer, including sharing out the computer's time, memory, hardware, and other resources. Some big families of desktop operating systems you may have heard of are GNU/Linux, Mac OS X, and Microsoft Windows. Other devices, such as phones, also need operating systems, for example Android, iOS, and Windows Phone. [^1]

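Because the operating system interacts with the hardware of the computer system, it must have knowledge specific to that hardware. To let operating systems work on many kinds of computers, the concept of **drivers** was invented. A driver is a small piece of code that can be added to (and removed from) an operating system so that it can interact with a particular piece of hardware. In this course we won't cover how to create removable drivers; instead, we will focus on one specific piece of hardware: the Raspberry Pi.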

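@ -26,7 +26,7 @@

This course is written almost entirely in assembly code. Assembly code is very close to the lowest level of the computer. A computer really works using a device called the processor, which can perform simple tasks such as addition, together with a set of chips called RAM, which can store numbers. When the computer is powered on, the processor executes a sequence of instructions given by the programmer, which causes numbers in memory to change and causes interaction with connected hardware. Assembly code is just a human-readable translation of those machine commands.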

|

||||

|

||||

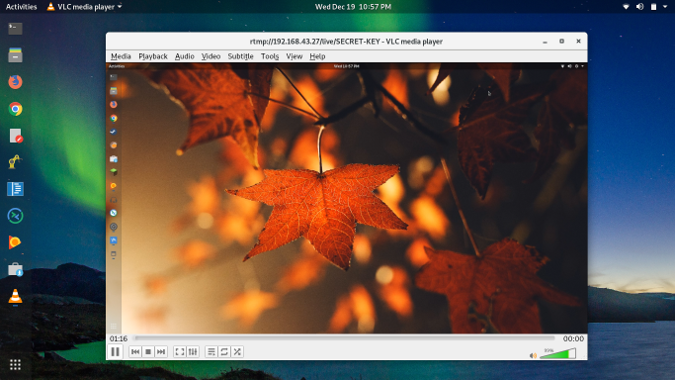

常规的编程就是,程序员使用编程语言,比如 C++、Java、C#、Basic 等等来写代码,然后一个叫编译器的程序将程序员写的代码转换成汇编代码,然后进一步转换为[二进制代码](https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/introduction.html#note2)。二进制代码才是计算机真正能够理解的东西,但它是人类无法读取的东西。汇编代码比二进制代码好一点,至少它的命令是人类可读的,但它仍然让人很沮丧。请记住,你用汇编代码写的每个命令都是处理器可以直接认识的,因此这些命令设计的很简单,因为物理电路必须能够处理每个命令。

|

||||

常规的编程就是,程序员使用编程语言,比如 C++、Java、C#、Basic 等等来写代码,然后一个叫编译器的程序将程序员写的代码转换成汇编代码,然后进一步转换为二进制代码。[^2] 二进制代码才是计算机真正能够理解的东西,但它是人类无法读取的东西。汇编代码比二进制代码好一点,至少它的命令是人类可读的,但它仍然让人很沮丧。请记住,你用汇编代码写的每个命令都是处理器可以直接认识的,因此这些命令设计的很简单,因为物理电路必须能够处理每个命令。

![Compiler process][1]

@ -34,6 +34,9 @@

You are now ready to move on to the first lesson, [Lesson 1: OK01][2].

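[^1]: For a more complete list of operating systems, see [List of operating systems - Wikipedia](http://en.wikipedia.org/wiki/List_of_operating_systems)

[^2]: Of course, I have simplified this explanation of normal programming; in reality it depends heavily on the language and the machine. If you are interested, see [Compiler - Wikipedia](http://en.wikipedia.org/wiki/Compiler)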

--------------------------------------------------------------------------------

via: https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/introduction.html

@ -0,0 +1,205 @@

|

||||

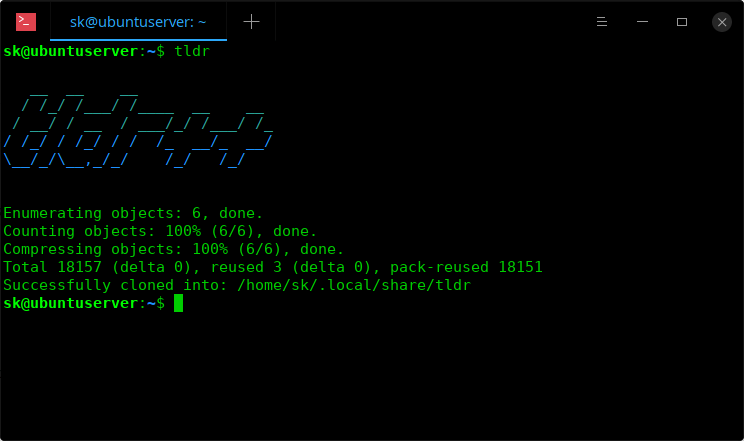

使用 Ansible 来管理你的工作站:配置自动化

|

||||

======

|

||||

> 学习如何使 Ansible 自动对一系列台式机和笔记本应用配置。

|

||||

|

||||

|

||||

|

||||

Ansible 是一个令人惊讶的自动化的配置管理工具。其主要应用在服务器和云部署上,但在工作站上的应用(无论是台式机还是笔记本)却鲜少得到关注,这就是本系列所要关注的。

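In the [first part][1] of this series, I showed you the basic usage of the `ansible-pull` command, and we created a playbook that installs a handful of packages. On its own that isn't very useful, but it lays the groundwork for further automation.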

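In this article we will close the loop, and by the end we will have a complete, working solution for automatically configuring workstations. Now we will set up our Ansible configuration so that future changes are automatically applied to our workstations. At this point I assume you have already worked through [part one][1]; if not, do that first and come back to this article when you're done. You should already have a GitHub repository containing the code from the first article. We will build directly on what we created there.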

First, because we are going to do more than just install packages, we need to do some reorganizing. Right now we have a playbook named `local.yml` with the following content:

```
- hosts: localhost
  become: true
  tasks:
    - name: Install packages
      apt: name={{item}}
      with_items:
        - htop
        - mc
        - tmux
```

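If we only wanted to perform one task, the configuration above would be enough. But as we keep adding to our configuration, this file will become quite large and cluttered. It is better to split our plays into separate files by type of configuration. To do that, we will create what I call a taskbook, which is very much like a playbook but with more streamlined content. Let's create a directory for taskbooks in our Git repository: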

```
mkdir tasks
```

The code in the `local.yml` playbook makes a good starting point for a taskbook that installs packages. Let's move this file into the `tasks` directory we just created and rename it:

```
mv local.yml tasks/packages.yml
```

Now we will edit `packages.yml` and slim it down considerably. In fact, we can strip out everything except the individual task itself. Let's make `packages.yml` look like this:

```
- name: Install packages
  apt: name={{item}}
  with_items:
    - htop
    - mc
    - tmux
```

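As you can see, it uses the same syntax, but we have removed everything the task doesn't need. Now we have a taskbook dedicated to installing packages. However, we still need a file named `local.yml`, because `ansible-pull` still looks for that file when it runs. So we will create a brand-new file with the following content in the root of our repository (not in the `tasks` directory):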

```
- hosts: localhost
  become: true
  pre_tasks:
    - name: update repositories
      apt: update_cache=yes
      changed_when: False

  tasks:
    # import_tasks replaces the older "include" keyword, which recent ansible-core releases no longer support
    - import_tasks: tasks/packages.yml
```
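If you would rather script the reorganization above, the Bash sketch below recreates the same layout (`tasks/packages.yml` plus a root-level `local.yml`) under a directory you pass in. The function name `scaffold_repo` is made up for this illustration, and it assumes you run it from (or point it at) the root of your Git clone and that your Ansible version accepts `import_tasks`.

```bash
#!/usr/bin/env bash
# Sketch: recreate the taskbook layout described above.
# Usage: scaffold_repo [repo_root]   (defaults to the current directory)
scaffold_repo() {
  local root="${1:-.}"
  mkdir -p "$root/tasks" || return 1

  # Taskbook: only the task itself, without a play header.
  cat > "$root/tasks/packages.yml" <<'EOF'
- name: Install packages
  apt: name={{item}}
  with_items:
    - htop
    - mc
    - tmux
EOF

  # Index playbook that ansible-pull looks for in the repository root.
  cat > "$root/local.yml" <<'EOF'
- hosts: localhost
  become: true
  pre_tasks:
    - name: update repositories
      apt: update_cache=yes
      changed_when: False

  tasks:
    - import_tasks: tasks/packages.yml
EOF
}
```

Running `scaffold_repo .` in your clone overwrites both files, so review the result with `git diff` before committing.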

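This new `local.yml` acts as an index that imports our taskbooks. I have added a few things to this file that you haven't seen in this series yet. First, near the top of the file, I added `pre_tasks`, which lets Ansible run a task before all other tasks. In this case, we are telling Ansible to update our distribution's repository index, which the following line does: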

```
apt: update_cache=yes
```

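The `apt` module is normally used to install packages, but we can also use it to update the repository index. The point is that every play then works against an up-to-date index whenever Ansible runs, which ensures we don't run into problems installing a package from a stale index. Note that the `apt` module only works on Debian, Ubuntu, and their derivatives. If you run a different distribution, use the module specific to your distribution instead of `apt`; check the Ansible documentation if you need a different module.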
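To see which package module fits a given machine, you can look at the `ID` and `ID_LIKE` fields of its `/etc/os-release` file. The Bash sketch below maps them to a module name. Both the function name and the mapping are assumptions covering only a few common families; check the Ansible module index for your distribution. It falls back to Ansible's generic `package` module.

```bash
#!/usr/bin/env bash
# Sketch: suggest which Ansible package module fits a distribution,
# based on the ID and ID_LIKE fields of an os-release file.
# Usage: pkg_module_for [os_release_file]   (defaults to /etc/os-release)
pkg_module_for() {
  local file="${1:-/etc/os-release}"
  local ids name
  # Read the fields in a subshell so the file's variables don't leak out;
  # unset first so stale values from the caller can't be picked up.
  ids=$(unset ID ID_LIKE; . "$file" && printf '%s %s' "${ID:-}" "${ID_LIKE:-}") || return 1

  # ID_LIKE may list several parent distributions separated by spaces.
  for name in $ids; do
    case "$name" in
      debian|ubuntu)        echo apt;    return 0 ;;
      fedora|rhel|centos)   echo dnf;    return 0 ;;
      arch)                 echo pacman; return 0 ;;
      suse|opensuse*|sles)  echo zypper; return 0 ;;
    esac
  done
  echo package   # fall back to Ansible's generic package module
}
```

On an Ubuntu machine, `pkg_module_for` prints `apt`. On a Rocky Linux machine it prints `dnf`, because `ID_LIKE` lists `rhel`.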

The following line also needs some explanation:

```
changed_when: False
```

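This line in a task stops Ansible from reporting that the play changed something, even when it actually caused a change on the system. Here we don't care whether the repository index contains new data; it almost always will, because repositories change constantly. Changes to the `apt` index are a normal part of the process, so we don't need to hear about them. If we removed this line, the summary at the end of every run would report a change even when only the index was updated. It is better to ignore this kind of change.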

Next comes the regular tasks section, where we import the taskbook we created. Each time we add another taskbook, we add another line here:

```
tasks:
  - import_tasks: tasks/packages.yml
```

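If you run `ansible-pull` now, it should do essentially the same thing as in the previous article. The difference is that we have improved our organization and can extend it more efficiently. To save you a trip back to the previous article, here is the `ansible-pull` syntax for reference: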

```
sudo ansible-pull -U https://github.com/<github_user>/ansible.git
```

As you may recall, the `ansible-pull` command pulls down a Git repository and applies the configuration it contains.

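Now that our foundation is in place, we can extend our Ansible setup and add features. Specifically, we will add configuration that automatically deploys the changes we make to our workstations. To support this, we first need to create a dedicated account to apply our Ansible configuration. This isn't strictly necessary; we could keep running Ansible under our own user. But a separate user lets it run in the background as a system process that doesn't require our involvement.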

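We could create this user the usual way, but since we are using Ansible, we should avoid manual changes. Instead, we will create a taskbook to handle user creation. For now this taskbook will create just one user, but you can add more plays to it to create additional users. I am naming this user `ansible`, but you can name it whatever you like (if you do, make sure to update every occurrence). Let's create a taskbook named `users.yml` in the `tasks` directory and put the following code in it: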

```
- name: create ansible user
  user: name=ansible uid=900
```

Next, we need to edit `local.yml` to add the new taskbook, like this:

```
- hosts: localhost
  become: true
  pre_tasks:
    - name: update repositories
      apt: update_cache=yes
      changed_when: False

  tasks:
    - import_tasks: tasks/users.yml
    - import_tasks: tasks/packages.yml
```

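Now, when we run `ansible-pull`, a user named `ansible` will be created on the system. Note that I explicitly set the user ID to 900 with the `uid` option. This isn't required, but I recommend choosing the UID explicitly. UIDs below 1000 typically don't show up on the login screen, which is great, because we have no reason to log in to the desktop as the `ansible` user. The number 900 is arbitrary; any unused value below 1000 will do. You can check whether UID 900 is already in use on your system with the following command: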

```
# Prints the passwd entry for UID 900 if it exists; no output means the UID is free
getent passwd 900
```

That said, you shouldn't run into problems with this UID. In every distribution I have used so far, I have never seen it taken by default.
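If 900 does turn out to be taken, the Bash sketch below finds the first unused UID in a range. It reads the third (UID) field of a passwd-format file. The function name and the default 900 to 999 range are illustrative assumptions. On systems that also pull users from LDAP or similar sources, `getent passwd` output is a more complete input than `/etc/passwd` alone.

```bash
#!/usr/bin/env bash
# Sketch: print the first UID in [start, end] not present in a passwd-format file.
# Usage: first_free_uid [start] [end] [passwd_file]
first_free_uid() {
  local start="${1:-900}" end="${2:-999}" file="${3:-/etc/passwd}"
  local uid
  for (( uid = start; uid <= end; uid++ )); do
    # awk exits 0 if some entry's third field (the UID) equals $uid.
    if ! awk -F: -v u="$uid" '$3 == u { found = 1 } END { exit !found }' "$file"; then
      echo "$uid"
      return 0
    fi
  done
  return 1   # no free UID in the range
}
```

Whatever value it prints can then go into the `uid=` option of the user task.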

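We now have an account named `ansible` that we will use for the automated configuration. Next, we can create the actual cron job that automates things. Rather than putting it in the `users.yml` file we just created, we should give it a file of its own. Create a taskbook named `cron.yml` in the `tasks` directory with the following code: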

```
- name: install cron job (ansible-pull)
  cron: user="ansible" name="ansible provision" minute="*/10" job="/usr/bin/ansible-pull -o -U https://github.com/<github_user>/ansible.git > /dev/null"
```

The syntax of the `cron` module is almost self-explanatory. With this play, we create a cron job that runs as the `ansible` user. The job runs every 10 minutes, and this is the command it executes:

```
/usr/bin/ansible-pull -o -U https://github.com/<github_user>/ansible.git > /dev/null
```
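The `minute="*/10"` setting uses cron's step syntax. `*/10` matches every tenth value of the minute field's 0 to 59 range, starting from 0. The small Bash helper below is hypothetical and exists only to illustrate that syntax. It lists the minutes of each hour that a `*/N` minute field matches.

```bash
#!/usr/bin/env bash
# Sketch: list the minutes (0-59) matched by a cron minute field of the form "*/N".
cron_step_minutes() {
  local step="$1" m
  local out=()
  (( step > 0 )) || return 1
  for (( m = 0; m < 60; m += step )); do
    out+=("$m")
  done
  echo "${out[*]}"
}

cron_step_minutes 10   # prints: 0 10 20 30 40 50
```

So the job above fires six times an hour, on the hour and at every tenth minute after it.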

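Likewise, we can add any other cron jobs we want deployed to all of our workstations to this file; we just add another play for each new job. However, adding a cron taskbook isn't enough on its own: we also need to add it to `local.yml` so that it gets called. Add the following line to the end: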

```
- import_tasks: tasks/cron.yml
```

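Now, when `ansible-pull` runs, it sets up a new cron job that runs as the `ansible` user every ten minutes. However, running an Ansible job every ten minutes is not a great idea in itself, because it consumes a lot of CPU. Running every ten minutes is pointless unless something has actually changed in the Git repository.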

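Fortunately, we have already taken care of this. Notice the `-o` option I added to the `ansible-pull` command in the cron job, which we haven't used before. It tells Ansible to run only if the repository has changed since the last time `ansible-pull` was invoked. If nothing has changed, it does nothing. This way you don't waste CPU for no reason. Of course, pulling the repository uses some CPU, but far less than applying the entire configuration again. When `ansible-pull` does run, it goes through every task in the playbook and taskbooks, but at least it won't run without a reason.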

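Although we have added all the configuration needed to automate `ansible-pull`, it won't work properly yet. The `ansible-pull` command needs `sudo` privileges so that it can run system-level commands. However, the `ansible` user we created isn't set up to run commands with `sudo`, so the job will fail when cron triggers it. Normally we could grant the `ansible` user this permission manually with the `visudo` command. But we should do it the Ansible way, and this is also a chance to show you how the `copy` module works. The `copy` module lets you copy a file from the repository to any location on the filesystem. In this case, we will copy a `sudo` configuration file into `/etc/sudoers.d/` so that the `ansible` user can perform tasks with administrative privileges.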

Open `users.yml` and add the following play to the end of the file:

```
- name: copy sudoers_ansible
  copy: src=files/sudoers_ansible dest=/etc/sudoers.d/ansible owner=root group=root mode=0440
```

As you can see, the `copy` module copies a file from our repository to another location. Here, we take a file named `sudoers_ansible` (which we will create next) and copy it to `/etc/sudoers.d/ansible`, owned by `root`.

Next, we need to create the file to be copied. In the root of your repository, create a directory named `files`:

```
mkdir files
```

Then, inside the `files` directory we just created, create a file named `sudoers_ansible` with the following content:

```
ansible ALL=(ALL) NOPASSWD: ALL
```

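Creating a file in the `/etc/sudoers.d` directory, as we are doing here, lets us configure `sudo` for a specific user. Here we are giving the `ansible` user full control through `sudo` without a password prompt. This lets `ansible-pull` run as a background task without anyone having to run it manually.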
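If you prefer to create `files/sudoers_ansible` from the shell, the Bash sketch below writes the one-line rule and gives it the same `0440` mode that the `copy` task applies. The function name is made up for this example, and it assumes you run it from the repository root. A mistake in a sudoers file can lock you out of `sudo`, so it is worth checking the syntax with `visudo -cf files/sudoers_ansible` before committing. Ansible's `copy` module also has a `validate` parameter for running such a check on the target machine.

```bash
#!/usr/bin/env bash
# Sketch: create the sudoers drop-in that the copy task deploys.
# Usage: write_sudoers_file [dir] [user]   (defaults: files, ansible)
write_sudoers_file() {
  local dir="${1:-files}" user="${2:-ansible}"
  local target="$dir/sudoers_$user"
  mkdir -p "$dir" || return 1
  # Remove any previous copy first: it is read-only after the chmod below.
  rm -f "$target"
  # Full passwordless sudo for the provisioning user, as in the article.
  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$user" > "$target" || return 1
  # Same mode the copy task sets: read-only for owner and group.
  chmod 0440 "$target"
}
```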

Now you can pull in the latest changes by running `ansible-pull` again:

```
sudo ansible-pull -U https://github.com/<github_user>/ansible.git
```

From now on, the `ansible-pull` cron job will run in the background every ten minutes and check your repository for changes. If it finds any, it will run your playbook and apply your taskbooks.

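So now we have a complete, working solution. When you set up a new laptop or desktop, you run the `ansible-pull` command manually, but only the first time. After that, the `ansible` user takes over subsequent runs in the background. When you want to change something on your machines, you simply pull down your Git repository, make the change, and push it back to the repository. Then, the next time the cron job runs on each machine, it pulls down the change and applies it. You now only have to make a change once, and all of your workstations follow along. This approach is a bit unconventional: normally you would have an inventory file listing your machines and the roles each one belongs to. Still, the `ansible-pull` approach described in this article is a very effective way to manage workstation configuration.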

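I have updated the code from this article in my [GitHub repository][2], so feel free to browse it and compare it against your syntax. I also moved the code from the previous article into its own directory.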

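In [part three][3], we will wrap up the series by using Ansible to configure GNOME desktop settings. I will show you how to set your wallpaper and lock-screen background, apply a desktop theme, and more.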

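In the meantime, it's time for some homework. Most of us have configuration files for the various applications we use, whether for Bash, Vim, or whatever other tools you rely on. Try using the Ansible repository we have been working with to copy these configuration files to your machines automatically. In this article I have shown you how to copy files, so give it a try and see whether you can apply what you have learned.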

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/3/manage-your-workstation-configuration-ansible-part-2

Author: [Jay LaCroix][a]

Selected by: [lujun9972](https://github.com/lujun9972)

Translator: [FelixYFZ](https://github.com/FelixYFZ)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://opensource.com/users/jlacroix
[1]:https://linux.cn/article-10434-1.html
[2]:https://github.com/jlacroix82/ansible_article.git
[3]:https://opensource.com/article/18/5/manage-your-workstation-ansible-part-3

@ -1,20 +1,22 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: subject: (Turn an old Linux desktop into a home media center)
[#]: via: (https://opensource.com/article/18/11/old-linux-desktop-new-home-media-center)
[#]: author: ([Alan Formy-Duval](https://opensource.com/users/alanfdoss))
[#]: url: (https://linux.cn/article-10446-1.html)

|

||||

|

||||

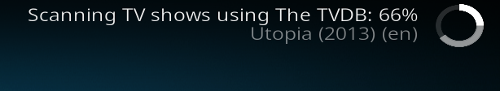

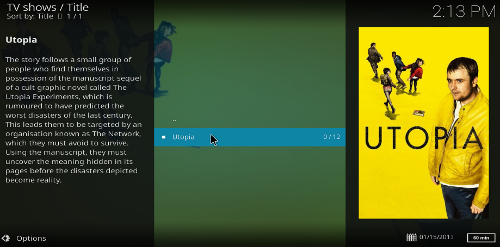

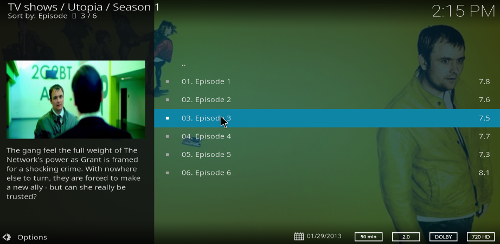

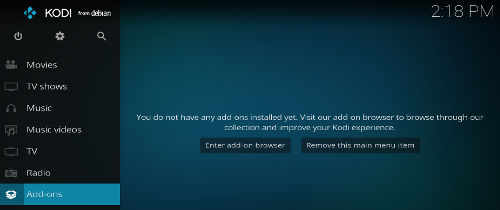

将旧的 Linux 桌面变成家庭媒体中心

|

||||

将旧的 Linux 台式机变成家庭媒体中心

|

||||

======

> Repurpose an outdated computer to browse the internet and watch videos on your big-screen TV.

My first attempt at building an "entertainment PC" was in the late 1990s, using a plain old desktop computer with a Trident ProVidia 9685 PCI graphics card. I used a so-called "TV-out" card, which had an extra output that connected to a standard television jack. The on-screen result didn't look very good, and there was no audio output. It was also ugly: an S-Video cable ran across the living room floor to my 19-inch Sony Trinitron CRT TV.

I got the same disappointing results on Linux and Windows 98. After struggling with systems that never looked right, I gave up for a few years. Thankfully, today's HDMI offers much better performance and standardized resolutions, which makes an inexpensive home media center a reality.

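My new media center entertainment PC is actually my old Ubuntu Linux desktop, which I recently replaced with a faster computer. It had become too slow for work, but its 3.4GHz AMD Phenom II X4 965 processor and 8GB of RAM are good enough for general browsing and video streaming.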

@ -30,37 +32,37 @@

### Audio

The Nvidia GeForce GTX audio device shows up in the GNOME Control Center's sound settings as the GK107 HDMI Audio Controller, so a single HDMI cable carries both audio and video. There is no need to run an audio cable to the output jack of the onboard sound card.

![Sound settings screenshot][2]

*The HDMI audio controller in GNOME's sound settings.*

### Keyboard and mouse

I have a Logitech wireless keyboard and mouse. When I installed them, I plugged in both external USB receivers. They worked, but I often had signal-response problems. Then I discovered that one of them was labeled a Unifying Receiver, which means it can handle multiple Logitech input devices on its own. Logitech doesn't provide software for configuring Unifying Receivers on Linux, but fortunately the open source program [Solaar][3] can do it. Using a single receiver solved my input performance problems.

![Solaar][5]

*The Solaar Unifying Receiver interface.*

### Video

At first it was hard to read text on my 47-inch flat-panel TV, so I enabled "Large Text" under Universal Access. I downloaded some wallpapers that match the TV's 1920x1080 resolution, and they look fantastic!

### Final touches

I needed to balance the computer's cooling needs against my desire for undisturbed entertainment. Since this is a standard ATX mini-tower, I made sure the fans spun fast enough and carefully configured the temperature settings in the BIOS to keep noise down. I also placed the computer behind my entertainment console to muffle fan noise further, while keeping the power button within reach.

The result is a simple machine that isn't noisy and uses only two cables: AC power and HDMI. It should be able to run any mainstream or specialized media center Linux distribution. I don't expect to play high-end games on it, since that would probably require more processing power.

![Showing Ubuntu Linux About page onscreen][7]

*The Ubuntu Linux About page.*

![YouTube on the big screen][9]

*Testing a YouTube video on the big screen.*

|

||||

|

||||

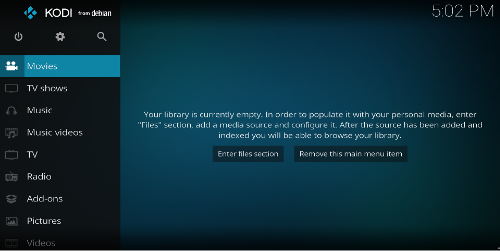

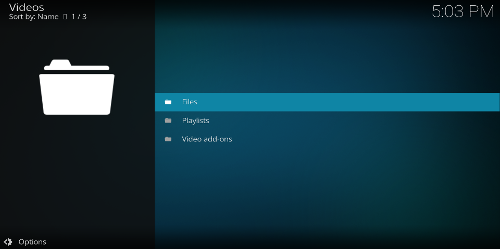

我还没安装像 [Kodi][10] 这样专门的媒体中心发行版。截至目前,它运行的是 Ubuntu Linux 18.04.1 LTS,而且很稳定。

@ -73,7 +75,7 @@ via: https://opensource.com/article/18/11/old-linux-desktop-new-home-media-cente

Author: [Alan Formy-Duval][a]

Selected by: [lujun9972][b]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

@ -84,4 +86,4 @@ via: https://opensource.com/article/18/11/old-linux-desktop-new-home-media-cente

[5]: https://opensource.com/sites/default/files/uploads/solaar_interface.png (Solaar)
[7]: https://opensource.com/sites/default/files/uploads/finalresult1.png (Showing Ubuntu Linux About page onscreen)
[9]: https://opensource.com/sites/default/files/uploads/finalresult2.png (YouTube on the big screen)
[10]: https://kodi.tv/

@ -1,24 +1,26 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10447-1.html)
[#]: subject: (Powers of two, powers of Linux: 2048 at the command line)
[#]: via: (https://opensource.com/article/18/12/linux-toy-2048)
[#]: author: (Jason Baker https://opensource.com/users/jason-baker)

Powers of two, powers of Linux: 2048 at the command line
======

> Looking for a terminal-based game to pass the time? Check out 2048-cli.

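Hello and welcome to today's installment of the Linux command-line toys calendar. Every day we bring a different toy to your terminal: it might be a game or any simple diversion that helps you have some fun.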

Chances are some of you have already seen various toys from our calendar, but we hope everyone finds at least one new thing.

|

||||

|

||||

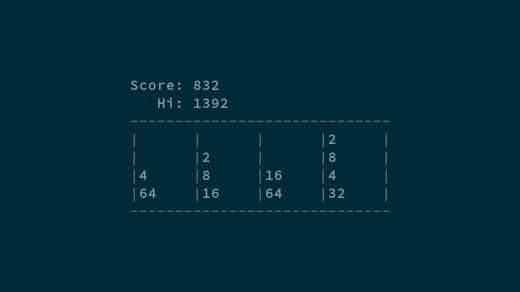

今天的玩具是我最喜欢的休闲游戏之一 [2048][2] (它本身就是另外一个克隆的克隆)的[命令行版本][1]。

|

||||

今天的玩具是我最喜欢的休闲游戏之一 [2048][2] (它本身就是另外一个克隆品的克隆)的[命令行版本][1]。

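To play, you slide the tiles up, down, left, and right, combining pairs of matching numbers to increase their values until you get a tile with the number 2048. The most appealing part (and the challenge) is that you can't move just one tile; you have to move every tile on the screen. (LCTT translator's note: Has anyone ever landed on a 404 page on our Linux China website? It's a 2048 game. Often, when I mistakenly open a page that doesn't exist, instead of fixing the problem as I should, I get hooked on the game...)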

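It's simple, fun, and easy to lose hours in. This 2048 clone, [2048-cli][1], was written in C by Marc Tiehuis and is open source under the MIT license. You can find the source code on [GitHub][1], along with installation instructions for your platform. Since it is packaged for Fedora, installing it was as simple as: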

@ -41,7 +43,7 @@ via: https://opensource.com/article/18/12/linux-toy-2048

Author: [Jason Baker][a]

Selected by: [lujun9972][b]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

@ -49,4 +51,4 @@ via: https://opensource.com/article/18/12/linux-toy-2048

[b]: https://github.com/lujun9972
[1]: https://github.com/tiehuis/2048-cli
[2]: https://github.com/gabrielecirulli/2048
[3]: https://opensource.com/article/18/12/linux-toy-tetris

published/20181211 Winterize your Bash prompt in Linux.md

@ -0,0 +1,86 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10450-1.html)
[#]: subject: (Winterize your Bash prompt in Linux)
[#]: via: (https://opensource.com/article/18/12/linux-toy-bash-prompt)
[#]: author: (Jason Baker https://opensource.com/users/jason-baker)

Winterize your Bash prompt in Linux
======

> Your Linux terminal probably supports Unicode, so why not take advantage of that and add a seasonal icon to your prompt?

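Welcome back to another installment of the Linux command-line toys calendar. If this is your first visit to the series, you might be asking yourself what a command-line toy even is. We're pretty loose about that: it's any fun diversion at the terminal, with bonus points for anything holiday-themed.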

Maybe you have seen some of these before, maybe you haven't. Either way, we hope you have fun.

|

||||

|

||||

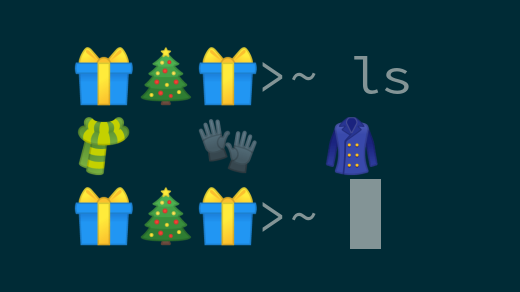

今天的玩具非常简单:它是你的 Bash 提示符。你的 Bash 提示符?是的!我们还有几个星期的假期可以盯着它看,在北半球冬天还会再多几周,所以为什么不玩玩它。

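Your Bash prompt might currently be a simple dollar sign (`$`), or more likely something a little longer. If you're not sure what makes up your Bash prompt, you can find it in the `$PS1` environment variable. To see it, type: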

```
echo "$PS1"
```

For me, this returns:

```
[\u@\h \W]\$
```

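The `\u`, `\h`, and `\W` are special characters for the username, the hostname, and the working directory, respectively. There are others you can use as well. For help building your Bash prompt, you can use [EzPrompt][1], an online generator for `PS1` configurations that offers many options, including date and time, Git status, and more.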
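To experiment with these escape sequences without touching your live prompt, you can use Bash 4.4 or later. It can expand any string the way it expands a prompt, through the `${var@P}` parameter transformation. The helper name `preview_prompt` is made up for this sketch.

```bash
#!/usr/bin/env bash
# Sketch: show how Bash would render a PS1-style string (needs Bash 4.4+).
preview_prompt() {
  local p="$1"
  # @P applies prompt expansion: \u, \h, \W, \$ and friends.
  printf '%s\n' "${p@P}"
}

preview_prompt '[\u@\h \W]\$ '
preview_prompt '\u is cold: '
```

Once a format looks right, you can assign it to `PS1` as shown below.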

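You may have other variables that make up your Bash prompt as well. For me, `$PS2` (the secondary prompt Bash shows when a command continues onto another line) contains the closing bracket of my command prompt. See [this article][2] for more information.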

To change your prompt, simply set the environment variable in your terminal, like this:

```
$ PS1='\u is cold: '
jehb is cold:
```

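To make it permanent, add the same code to `/etc/bashrc` with your favorite text editor (or to `~/.bashrc` if you only want to change it for your own account).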

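So what does this have to do with winterizing? Well, chances are you have a reasonably modern machine and your terminal supports Unicode, so you aren't limited to the standard ASCII character set. You can use any emoji that is part of the Unicode specification, including a snowflake ❄, a snowman ☃, or a pair of skis 🎿. You have plenty of wintry emoji to choose from: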

```
🎄 Christmas tree
🧥 Coat
🦌 Deer
🧤 Gloves
🤶 Mrs. Claus
🎅 Santa Claus
🧣 Scarf
🎿 Skis
🏂 Snowboarder
❄ Snowflake
☃ Snowman
⛄ Snowman without snow
🎁 Wrapped gift
```

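Pick your favorite and enjoy some winter cheer. Fun fact: modern filesystems also support Unicode characters in file names, which means you could technically name your next program `❄❄❄❄❄.py`. That said, please don't.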
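To make a wintry prompt stick, the Bash sketch below appends a `PS1` line with a snowflake to a startup file. It only appends if that exact line isn't already there, so running it twice doesn't duplicate it. The function name and prompt format are just examples. The target file is a parameter: `~/.bashrc` for your own account, or `/etc/bashrc` as mentioned above, which requires root.

```bash
#!/usr/bin/env bash
# Sketch: add a snowflake prompt to a Bash startup file, only once.
# Usage: add_winter_prompt FILE   (for example ~/.bashrc)
add_winter_prompt() {
  local rc="$1" line
  # Read the line literally (-r keeps the backslashes of \u, \h, \W, \$).
  IFS= read -r line <<'EOF'
PS1='❄ \u@\h \W\$ '
EOF
  touch "$rc" || return 1
  # -x: whole-line match, -F: fixed string, so an existing line is detected.
  grep -qxF -- "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
}
```

After running `add_winter_prompt ~/.bashrc`, open a new terminal (or run `source ~/.bashrc`) to see the result.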

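Do you have a favorite command-line toy that you'd like me to profile? The calendar for this series is mostly filled out, but I have a few spots left. Let me know in the comments and I'll check it out. If there's room, I'll try to include it. If not, but I get some good submissions, I'll give them an honorable mention at the end.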

Check out yesterday's toy, [Playing Snake in the Linux terminal][3], and be sure to come back tomorrow!

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/12/linux-toy-bash-prompt

Author: [Jason Baker][a]

Selected by: [lujun9972][b]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/jason-baker
[b]: https://github.com/lujun9972
[1]: http://ezprompt.net/
[2]: https://access.redhat.com/solutions/505983
[3]: https://opensource.com/article/18/12/linux-toy-snake

@ -0,0 +1,66 @@

[#]: collector: (lujun9972)
[#]: translator: (bestony)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10448-1.html)
[#]: subject: (5 resolutions for open source project maintainers)
[#]: via: (https://opensource.com/article/18/12/resolutions-open-source-project-maintainers)
[#]: author: (Ben Cotton https://opensource.com/users/bcotton)

5 resolutions for open source project maintainers
======

> No matter how you put it, good communication is a must for an active open source community.

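I'm not usually one for big New Year's resolutions. I have no problem with self-improvement, of course, but I tend to anchor that to other parts of the calendar. Even so, there is something about taking down this year's free calendar and replacing it with the next one that inspires some introspection.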

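In 2017, I resolved never to share an article on social media that I hadn't read. I have kept to that, and I think it has made me a better citizen of the internet. For 2019, I'm considering resolutions that will make me a better open source software maintainer.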

Here are the resolutions I'll try to stick to on the projects where I am a maintainer or co-maintainer:

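### 1. Include a code of conduct

Jono Bacon listed "not enforcing the code of conduct" in his article "[7 mistakes you're probably making][1]." Of course, to enforce a code of conduct, you first need to have one. I plan to default to the [Contributor Covenant][2], but you can use whatever you like. As with licenses, it's best to use one someone else has already written rather than writing your own. The important thing is to find something that defines how you want your community to behave, whatever that looks like. Once it is written down and enforced, people can decide for themselves whether this is the kind of community they want to be part of.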

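### 2. Make the license clear and specific

You know what really stinks? Unclear licenses. "This software is licensed under the GPL," with no further text, doesn't tell me enough. Which version of the [GPL][3]? Can I use it? For non-code parts of a project, "licensed under a Creative Commons license" is even worse. I love the [Creative Commons licenses][4], but there are several of them, with different rights and obligations. So I will make it very clear which variant and version of a license applies to my projects. I will include the full text of the license in the repository and a concise notice in the other files.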
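One widely used form for such a concise notice is an SPDX license identifier comment, such as `SPDX-License-Identifier: GPL-3.0-or-later`, which names both the license and its version unambiguously. The Bash sketch below, with a made-up function name, lists files that lack such a line so you can spot gaps before a release. It only checks the first five lines of each file, and the default file patterns are assumptions you would adapt to your project.

```bash
#!/usr/bin/env bash
# Sketch: report files under DIR that lack an SPDX-License-Identifier line.
# Usage: missing_spdx DIR [glob ...]   (default globs: *.c *.h *.py *.sh)
missing_spdx() {
  local dir="$1"; shift
  local globs=("$@")
  (( ${#globs[@]} )) || globs=('*.c' '*.h' '*.py' '*.sh')

  local expr=() g f
  for g in "${globs[@]}"; do
    expr+=(-o -name "$g")
  done
  # Drop the leading -o so find sees: ( -name A -o -name B ... )
  find "$dir" -type f \( "${expr[@]:1}" \) -print0 |
    while IFS= read -r -d '' f; do
      # License headers normally sit in the first few lines of a file.
      head -n 5 "$f" | grep -q 'SPDX-License-Identifier:' || printf '%s\n' "$f"
    done
}
```

Running `missing_spdx .` at the root of a repository prints nothing once every matching file carries an identifier.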

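A related point is to use an [OSI][5]-approved license. It's possible to come up with a new license that says exactly what you want it to say, but good luck if you ever need to enforce it. Will it hold up? Will the people using your project understand it?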

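### 3. Triage bug reports and questions quickly

Few things in technology are as scarce as open source maintainers' time. Even on small projects, it can be hard to find time to answer every question and fix every bug. But that doesn't mean I can't at least respond, and it doesn't have to be a multi-paragraph reply. Even just labeling a GitHub issue shows that I have seen it. Maybe I'll get to it right away, maybe a year from now. But it's important for the community to see that, yes, someone is still here.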

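### 4. Don't push new features or bug fixes without accompanying documentation

Although my open source contributions over the years have revolved around documentation, my own projects don't reflect how much I value it. There are few commits I could push that don't need some form of documentation. New features should obviously be documented when they are committed, or even before. But even bug fixes should get an entry in the release notes. If nothing else, a push is also a good opportunity to improve the documentation.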

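### 5. Make it clear when you're abandoning a project

I'm really bad at saying "no" to things. I told the editors I would write one or two articles for [Opensource.com][6], and now I have almost 60. Oops. But at some point, things that once interested me stop interesting me. Maybe the project is no longer needed because its functionality was absorbed into a larger project; maybe I just got tired of it. But it's unfair to the community (and potentially dangerous, as the recent [event-stream malware injection][7] showed) to leave a project in limbo. Maintainers have the right to walk away at any time, but they should make it clear when they do.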

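Whether you're an open source maintainer or contributor, if you know of other resolutions project maintainers should make, please share them in the comments!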

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/12/resolutions-open-source-project-maintainers

Author: [Ben Cotton][a]

Selected by: [lujun9972][b]

Translator: [bestony](https://github.com/bestony)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/bcotton
[b]: https://github.com/lujun9972
[1]: https://opensource.com/article/17/8/mistakes-open-source-avoid
[2]: https://www.contributor-covenant.org/
[3]: https://opensource.org/licenses/gpl-license
[4]: https://creativecommons.org/share-your-work/licensing-types-examples/
[5]: https://opensource.org/
[6]: http://Opensource.com
[7]: https://arstechnica.com/information-technology/2018/11/hacker-backdoors-widely-used-open-source-software-to-steal-bitcoin/

@ -1,111 +0,0 @@

How to organize your knowledge like a software engineer
==========

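Software development and technology in general are fields that evolve very quickly, so continuous learning is essential. Spend a few minutes browsing the internet, on Twitter, Medium, RSS feeds, Hacker News, and other specialized sites and communities, and you'll find a huge amount of useful information in the form of articles, case studies, tutorials, code snippets, new applications, and more.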

Saving and organizing all that information can be a daunting task. In this post, I will present some of the tools I use to organize it.

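One point I consider very important in knowledge management is avoiding lock-in to a particular platform. All the tools I use allow exporting data in standard formats such as Markdown and HTML.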

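Note that my workflow is not perfect, and I'm always looking for new tools and ways to optimize it. Everyone is different, so what works for me might not work for you.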

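### Knowledge base with NotionHQ

For me, the fundamental piece of knowledge management is having some kind of personal knowledge base or wiki: a place where you can save links, bookmarks, notes, and so on in an organized way.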

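I use [NotionHQ][7] for this. I use it to keep notes on all kinds of topics, including resource lists such as great libraries or tutorials grouped by programming language, bookmarks of interesting blog posts and tutorials, and much more, related not only to software development but also to my personal life.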

What I really like about NotionHQ is how simple it is to create new content. You can write it in Markdown and organize it as a tree.

Here is the top-level page of my "Development" workspace:

![Top-level page of the "Development" workspace][8]

NotionHQ has some other great features, such as integrated spreadsheets/databases and task boards.

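If you want to use NotionHQ seriously, you will need to subscribe to the paid Personal plan, because the free plan has some limits. I find it worth the price. NotionHQ lets you export an entire workspace as Markdown files. The export has some important problems, such as losing the page hierarchy, but hopefully the Notion team can improve that.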

As a free alternative, I would probably use [VuePress][9] or [GitBook][10] to host my own knowledge base.

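### Save interesting articles with Pocket

[Pocket][11] is one of my favorite apps! With Pocket, you can build a reading list of articles from around the internet. Whenever I see an article that looks interesting, I save it to Pocket with its Chrome extension. Later I read it, and if I find it useful enough, I use Pocket's "Archive" feature to keep the article permanently and clean up my Pocket inbox.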

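I try to keep the reading list small and archive information I have already processed. Pocket lets you tag articles, which makes it easier to search for articles on a particular topic later.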

You can also keep a copy of an article on Pocket's servers in case the original site disappears, but that requires a Pocket Premium subscription.

Pocket also has a "Discover" feature that recommends similar articles based on the ones you have saved. It's a great way to find new things to read.

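### Snippet management with SnippetStore

From GitHub to Stack Overflow answers to blog posts, you often come across good code snippets you want to save for later. It might be a nice algorithm implementation, a useful script, or an example of how to do something in a particular language.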

I tried many apps, from simple GitHub Gists to [Boostnote][12], until I discovered [SnippetStore][13].

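SnippetStore is an open source snippet management app. What sets SnippetStore apart from others is its simplicity. You can organize snippets by language or tag, and a snippet can contain multiple files. It's not perfect, but it does the job. Boostnote, for example, has more features, but I prefer SnippetStore's simpler way of organizing content.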

For abbreviations and snippets I use daily, I prefer my editor/IDE's snippet feature, since it's more convenient. I use SnippetStore more as a reference library of coding examples.

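[Cacher][14] is also an interesting option, since it integrates with many editors, has a command-line tool, and uses GitHub Gists as its backend, but at $6/month for its Pro plan, I find it a bit too expensive.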

### Managing cheat sheets with DevHints

[Devhints][15] is a collection of cheat sheets created by Rico Sta. Cruz. It is open source and generated with Jekyll, one of the most popular static site generators.

The cheat sheets are written in Markdown with some extra formatting support, such as columns.

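I really like the look of its interface, and unlike the PDF or image cheat sheets you can find on sites like [Cheatography][16], Markdown makes it very easy to add new content, keep it up to date, and keep it under version control.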

Because it is open source, I created my own fork, removed some cheat sheets I didn't need, and added a few more.

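I use cheat sheets as a reference for how to use certain libraries or programming languages, or to remember certain commands. A single cheat-sheet page is very handy, for example, for listing all the basic syntax of a particular programming language.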

I'm still experimenting with this tool, but so far it is working well.

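### Diigo

[Diigo][17] lets you annotate and highlight parts of websites. I use it to annotate important information when researching something new, or to save particular passages from articles, Stack Overflow answers, or inspiring quotes from Twitter! ;)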

* * *

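That's it. Some of these tools may overlap in functionality, but as I said at the beginning, this is an ever-evolving workflow, because I'm always experimenting and looking for ways to improve and be more productive.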

What about you? How do you organize your knowledge? Feel free to comment below.

Thank you for reading.

------------------------------------------------------------------------

About the author: Bruno Paz, web engineer specializing in #PHP and the @Symfony framework. Passionate about new technologies. Loves sports; @FCPorto fan!

--------------------------------------------------------------------------------

via: https://dev.to/brpaz/how-do-i-organize-my-knowledge-as-a-software-engineer-4387

Author: [Bruno Paz][a]

Selected by: [oska874](https://github.com/oska874)

Translator: [wxy](https://github.com/wxy)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:http://brunopaz.net/
[1]:https://dev.to/brpaz
[2]:http://twitter.com/brunopaz88
[3]:http://github.com/brpaz
[4]:https://dev.to/t/knowledge
[5]:https://dev.to/t/learning
[6]:https://dev.to/t/development
[7]:https://www.notion.so/
[8]:https://res.cloudinary.com/practicaldev/image/fetch/s--uMbaRUtu--/c_limit%2Cf_auto%2Cfl_progressive%2Cq_auto%2Cw_880/http://i.imgur.com/kRnuvMV.png
[9]:https://vuepress.vuejs.org/
[10]:https://www.gitbook.com/?t=1
[11]:https://getpocket.com/
[12]:https://boostnote.io/
[13]:https://github.com/ZeroX-DG/SnippetStore
[14]:https://www.cacher.io/
[15]:https://devhints.io/
[16]:https://cheatography.com/
[17]:https://www.diigo.com/index

sources/talk/20190108 Hacking math education with Python.md

@ -0,0 +1,84 @@

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Hacking math education with Python)
[#]: via: (https://opensource.com/article/19/1/hacking-math)
[#]: author: (Don Watkins https://opensource.com/users/don-watkins)

Hacking math education with Python
======

Teacher, programmer, and author Peter Farrell explains why teaching math with Python works better than the traditional approach.

|

||||

|

||||

|

||||

Mathematics instruction has a bad reputation, especially with people (like me) who've had trouble with the traditional approach, which emphasizes rote memorization and theory that seems far removed from students' real world.

|

||||

|

||||

While teaching a student who was baffled by his math lessons, [Peter Farrell][1], a Python developer and mathematics teacher, decided to try using Python to teach the boy the math concepts he was having trouble learning.

|

||||

|

||||

Peter was inspired by the work of [Seymour Papert][2], the father of the Logo programming language, which lives on in Python's [Turtle module][3]. The Turtle metaphor hooked Peter on Python and using it to teach math, much like [I was drawn to Python][4].

|

||||

|

||||

Peter shares his approach in his new book, [Math Adventures with Python][5]: An Illustrated Guide to Exploring Math with Code. And, I recently interviewed him to learn more about it.

|

||||

|

||||

**Don Watkins:** What is your background?

|

||||

|

||||

**Peter Farrell:** I was a math teacher for eight years, and I tutored math for 10 years after that. When I was a teacher, I read Papert's [Mindstorms][6] and was inspired to introduce all my math classes to Logo and Turtles.

|

||||

|

||||

**DW:** Why did you start using Python?

|

||||

|

||||

**PF:** I was working with a homeschooled boy on a very dry, textbook-driven math curriculum, which at the time seemed like a curse to me. But I found ways to sneak in the Logo Turtles, and he was a programming fan, so he liked that. Once we got into functions and real programming, he asked if we could continue in Python. I didn't know any Python but it didn't seem that different from Logo, so I agreed. And I never looked back!

|

||||

|

||||

I was also looking for a 3D graphics package I could use to model a solar system and lead students through making planets move and get pulled by the force of attraction between the bodies, according to Newton's formula. Many graphics packages required programming in C or something hard, but I found an excellent package called Visual Python that was very easy to use. I used [VPython][7] for years after that.
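
Peter's solar-system model used VPython, which we do not reproduce here. As a rough sketch of the underlying math only, here is one time step of Newton's law of gravitation in plain Python. It assumes a single planet orbiting a sun fixed at the origin and made-up units where the gravitational constant `G` is 1; the function name `gravity_step` is our own.

```python
import math

G = 1.0  # gravitational constant in made-up simulation units (an assumption)


def gravity_step(pos, vel, sun_mass, dt):
    """Advance a planet by one time step around a sun fixed at the origin.

    Newton's law of gravitation gives an acceleration of G * M / r**2,
    pointing toward the sun. Velocity is updated first and then position
    (semi-implicit Euler), which, unlike plain Euler, does not make the
    orbit steadily spiral outward.
    """
    x, y = pos
    vx, vy = vel
    r = math.hypot(x, y)                 # distance from the sun
    a = G * sun_mass / r**2              # size of the acceleration
    ax, ay = -a * x / r, -a * y / r      # pointing back toward the origin
    vx, vy = vx + ax * dt, vy + ay * dt
    x, y = x + vx * dt, y + vy * dt
    return (x, y), (vx, vy)


# Start at distance 1 with the circular-orbit speed sqrt(G * M / r) = 1
pos, vel = (1.0, 0.0), (0.0, 1.0)
for _ in range(1000):
    pos, vel = gravity_step(pos, vel, sun_mass=1.0, dt=0.001)
print(math.hypot(*pos))  # stays close to 1.0: the planet keeps circling
```

Adding more planets means summing the pull from every other body, which is exactly the kind of repetitive calculation a loop handles well.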

|

||||

|

||||

So, I was introduced to Python in the context of working with a student on math. For some time after that, he was my programming tutor while I was his math tutor!

|

||||

|

||||

**DW:** What got you interested in math?

|

||||

|

||||

**PF:** I learned it the old-fashioned way: by hand, on paper and blackboards. I was good at manipulating symbols, so algebra was never a problem, and I liked drawing and graphing, so geometry and trig could be fun, too. I did some programming in BASIC and Fortran in college, but it never inspired me. Later on, programming inspired me greatly! I'm still tickled by the way programming makes easy work of the laborious stuff you have to do in math class, freeing you up for the more fun work of exploring, graphing, tweaking, and discovering.

|

||||

|

||||

**DW:** What inspired you to consider your Python approach to math?

|

||||

|

||||

**PF:** When I was teaching the homeschooled student, I was amazed at what we could do by writing a simple function and then calling it a bunch of times with different values using a loop. That would take a half an hour by hand, but the computer spit it out instantly! Then we could look for patterns (which is what a math student should be doing), express the pattern as a function, and extend it further.
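
As a small illustration of that workflow (our own example, not one taken from Peter's book), a loop can tabulate a function for many inputs so that a pattern stands out. The hypothetical `sum_of_odds` adds up the first n odd numbers, and the table suggests the results are perfect squares:

```python
def sum_of_odds(n):
    """Add up the first n odd numbers: 1 + 3 + 5 + ..."""
    return sum(2 * k + 1 for k in range(n))


# Step 1: call the function many times in a loop and look at the results
for n in range(1, 8):
    print(n, sum_of_odds(n))   # 1 1, 2 4, 3 9, 4 16, ... the squares appear


# Step 2: express the pattern we spotted as its own function
def square(n):
    return n * n


# Step 3: extend it far beyond what we would ever check by hand
assert all(sum_of_odds(n) == square(n) for n in range(1, 1000))
print("pattern holds for n = 1..999")
```

A check like this is not a proof, but it is the kind of evidence that makes students curious enough to go looking for one.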

|

||||

|

||||

**DW:** How does your approach to teaching help students—especially those who struggle with math? How does it make math more relevant?

|

||||

|

||||

**PF:** Students, especially high-schoolers, question the need to be doing all this calculating, graphing, and solving by hand in the 21st century, and I don't disagree with them. Learning to use Excel, for example, to crunch numbers should be seen as a basic necessity to work in an office. Learning to code, in any language, is becoming a very valuable skill to companies. So, there's a real-world appeal to me.

|

||||

|

||||

But the idea of making art with code can revolutionize math class. Just putting a shape on a screen requires math: the position (x-y coordinates), the dimensions, and even the color are all numbers. If you want something to move or change, you'll need to use variables, and not the "guess what x equals" kind of variable. You'll vary the position using a variable or, more efficiently, using a vector. [This turns] math topics like vectors and matrices into helpful tools you can use, rather than required information you'll never use.
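
To make that concrete, here is a minimal sketch in plain Python. The book itself uses Processing's Python mode, which we do not reproduce here, and the `Vec` class and `rotate` function are our own names for illustration. A shape's position is a vector that changes each frame by a velocity vector, and a 2×2 rotation matrix turns a point around the origin:

```python
import math
from dataclasses import dataclass


@dataclass
class Vec:
    """A 2D vector, e.g. a shape's x-y position on screen."""
    x: float
    y: float

    def __add__(self, other):
        return Vec(self.x + other.x, self.y + other.y)


def rotate(v, angle):
    """Multiply v by the rotation matrix [[cos a, -sin a], [sin a, cos a]]."""
    c, s = math.cos(angle), math.sin(angle)
    return Vec(c * v.x - s * v.y, s * v.x + c * v.y)


position = Vec(100, 50)   # where the shape is drawn
velocity = Vec(3, -2)     # how far it moves each frame

for _ in range(10):
    position = position + velocity   # one vector addition per frame

print(position)                        # Vec(x=130, y=30)
print(rotate(Vec(1, 0), math.pi / 2))  # approximately Vec(x=0, y=1)
```

In an animation loop, the `position + velocity` line would run once per frame, which is where the vector stops being abstract and starts visibly moving something.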

|

||||

|

||||

Students who struggle with math might just be turned off to "school math," which is heavy on memorization and following rules and light on creativity and real applications. They might find they're actually good at math, just not the way it was taught in school. I've had parents see the cool graphics their kids have created with code and say, "I never knew that's what sines and cosines were used for!"
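
One common place sines and cosines show up in code-generated graphics (our own illustration, not a quote from the book): the corners of a regular polygon lie on a circle, and `cos` and `sin` turn each angle into x-y coordinates. The function name `polygon_points` is hypothetical:

```python
import math


def polygon_points(cx, cy, radius, sides):
    """Return the corners of a regular polygon centered at (cx, cy)."""
    points = []
    for i in range(sides):
        angle = 2 * math.pi * i / sides       # evenly spaced angles
        x = cx + radius * math.cos(angle)     # cosine gives the x offset
        y = cy + radius * math.sin(angle)     # sine gives the y offset
        points.append((x, y))
    return points


# A hexagon of radius 50 centered at (200, 200); with many sides,
# the same function approximates a circle
for x, y in polygon_points(200, 200, 50, 6):
    print(round(x, 1), round(y, 1))
```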

|

||||

|

||||

**DW:** How do you see your approach to math and programming encouraging STEM in schools?

|

||||

|

||||

**PF:** I love the idea of combining previously separated topics into an idea like STEM or STEAM! Unfortunately for us math folks, the "M" is very often neglected. I see lots of fun projects being done in STEM labs, even by very young children, and they're obviously getting an education in technology, engineering, and science. But I see precious little math material in the projects. STEM/[mechatronics][8] teacher extraordinaire Ken Hawthorn and I are creating projects to try to remedy that.

|

||||

|

||||

Hopefully, my book helps encourage students, girls and boys, to get creative with technology, real and virtual. There are a lot of beautiful graphics in the book, which I hope will inspire people to go through the coding adventure and make them. All the software I use ([Python Processing][9]) is available for free and can be easily installed, or is already installed, on the Raspberry Pi. Entry into the STEM world should not be cost-prohibitive to schools or individuals.

|

||||

|

||||

**DW:** What would you like to share with other math teachers?

|

||||

|

||||

**PF:** If the math establishment is really serious about teaching students the standards they have agreed upon, like numerical reasoning, logic, analysis, modeling, geometry, interpreting data, and so on, they're going to have to admit that coding can help with every single one of those goals. My approach was born, as I said before, from just trying to enrich a dry, traditional approach, and I think any teacher can do that. They just need somebody who can show them how to do everything they're already doing, just using code to automate the laborious stuff.

|

||||

|

||||

My graphics-heavy approach is made possible by the availability of free graphics software. Folks might need to be shown where to find these packages and how to get started. But a math teacher can soon be leading students through solving problems using 21st-century technology and visualizing progress or results and finding more patterns to pursue.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/1/hacking-math

|

||||

|

||||

Author: [Don Watkins][a]
Selected by: [lujun9972][b]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/don-watkins

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://twitter.com/hackingmath

|

||||

[2]: https://en.wikipedia.org/wiki/Seymour_Papert

|

||||

[3]: https://en.wikipedia.org/wiki/Turtle_graphics

|

||||

[4]: https://opensource.com/life/15/8/python-turtle-graphics

|

||||

[5]: https://nostarch.com/mathadventures

|

||||

[6]: https://en.wikipedia.org/wiki/Mindstorms_(book)

|

||||

[7]: http://vpython.org/

|

||||

[8]: https://en.wikipedia.org/wiki/Mechatronics

|

||||

[9]: https://processing.org/


[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (NSA to Open Source its Reverse Engineering Tool GHIDRA)

|

||||

[#]: via: (https://itsfoss.com/nsa-ghidra-open-source)

|

||||

[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

|

||||

|

||||

NSA to Open Source its Reverse Engineering Tool GHIDRA

|

||||

======

|

||||

|

||||

GHIDRA, the NSA’s reverse engineering tool, is getting ready for a free public release this March at the [RSA Conference 2019][1], to be held in San Francisco.

|

||||

|

||||

The National Security Agency (NSA) has not officially announced this. However, the [session description][2] for a talk by senior NSA advisor Robert Joyce on the official RSA Conference website revealed it before any official statement or announcement.

|

||||

|

||||

Here’s what it mentioned:

|

||||

|

||||

![][3]

|

||||

Image Credits: [Twitter][4]

|

||||

|

||||

In case the text in the image isn’t properly visible, let me quote the description here:

|

||||

|

||||

> NSA has developed a software reverse engineering framework known as GHIDRA, which will be demonstrated for the first time at RSAC 2019. An interactive GUI capability enables reverse engineers to leverage an integrated set of features that run on a variety of platforms including Windows, Mac OS, and Linux and supports a variety of processor instruction sets. The GHIDRA platform includes all the features expected in high-end commercial tools, with new and expanded functionality NSA uniquely developed, and will be released for free public use at RSA.

|

||||

|

||||

### What is GHIDRA?

|

||||

|

||||

GHIDRA is a software reverse engineering framework developed by the [NSA][5] that has been in use by the agency for more than a decade.

|

||||

|

||||

Basically, a software reverse engineering tool helps you work out how a compiled program functions, typically by disassembling its machine code and, with a decompiler, approximating its original source code. For proprietary programs, this gives you the ability to detect malware threats or potential bugs. You should read how [reverse engineering][6] works to know more.
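
GHIDRA itself works on native machine code and includes a decompiler, neither of which we attempt to reproduce here. As a loose, small-scale analogy only, Python's standard-library `dis` module shows the same basic idea of turning compiled instructions back into something a human can read; the `add` function is just a toy target:

```python
import dis


def add(a, b):
    """A toy 'program' whose compiled bytecode we will inspect."""
    return a + b


# Print the bytecode as readable, assembly-like instructions
dis.dis(add)

# The same data is available programmatically, which is what makes
# scripted analysis possible (exact opcode names vary by Python version)
opnames = [instr.opname for instr in dis.get_instructions(add)]
print(opnames)
```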

|

||||

|

||||

The tool is written in Java, and quite a few people have compared it to high-end commercial reverse engineering tools like [IDA][7].

|

||||

|

||||

A [Reddit thread][8] has a more detailed discussion, where you will find some ex-employees giving a good amount of detail ahead of the tool's release.

|

||||

|

||||

![NSA open source][9]

|

||||

|

||||

### GHIDRA was a secret tool, so how do we know about it?

|

||||

|

||||

The existence of the tool was uncovered in a series of leaks by [WikiLeaks][10] as part of the CIA's [Vault 7 documents][11].

|

||||

|

||||

### Is it going to be open source?

|

||||

|

||||

We think the reverse engineering tool could be made open source when it is released. There is no official confirmation mentioning “open source”, but a lot of people believe the NSA is targeting the open source community to help improve the tool while also reducing its own effort to maintain it.

|

||||

|

||||

This way the tool can remain free and the open source community can help improve GHIDRA as well.

|

||||

|

||||

You can also check out the existing [Vault 7 document at WikiLeaks][12] to form your own prediction.

|

||||

|

||||

### Is NSA doing a good job here?

|

||||

|

||||

The reverse engineering tool is going to be available for Windows, Linux, and Mac OS for free.

|

||||

|

||||

Of course, we care about the Linux platform here, where GHIDRA could be a very good option for people who do not want to pay, or cannot afford, a thousand-dollar license for a reverse engineering tool with best-in-class features.

|

||||

|

||||

### Wrapping Up

|

||||

|

||||

If GHIDRA becomes open source and is available for free, it would definitely help a lot of researchers and students; on the other side, competitors would be forced to adjust their pricing.

|

||||

|

||||

What are your thoughts about it? Is it a good thing? What do you think about the tool going open source? Let us know what you think in the comments below.

|

||||

|

||||

![][13]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/nsa-ghidra-open-source

|

||||

|

||||

Author: [Ankush Das][a]
Selected by: [lujun9972][b]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://itsfoss.com/author/ankush/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.rsaconference.com/events/us19

|

||||

[2]: https://www.rsaconference.com/events/us19/agenda/sessions/16608-come-get-your-free-nsa-reverse-engineering-tool

|

||||

[3]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/01/come-get-your-free-nsa.jpg?fit=800%2C337&ssl=1

|

||||

[4]: https://twitter.com/0xffff0800/status/1080909700701405184

|

||||

[5]: http://nsa.gov

|

||||

[6]: https://en.wikipedia.org/wiki/Reverse_engineering

|

||||

[7]: https://en.wikipedia.org/wiki/Interactive_Disassembler

|

||||

[8]: https://www.reddit.com/r/ReverseEngineering/comments/ace2m3/come_get_your_free_nsa_reverse_engineering_tool/

|

||||

[9]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/01/nsa-open-source.jpeg?resize=800%2C450&ssl=1

|

||||

[10]: https://www.wikileaks.org/

|

||||

[11]: https://en.wikipedia.org/wiki/Vault_7

|

||||

[12]: https://wikileaks.org/ciav7p1/cms/page_9536070.html

|

||||

[13]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/01/nsa-open-source.jpeg?fit=800%2C450&ssl=1

sources/talk/20190110 Toyota Motors and its Linux Journey.md

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Toyota Motors and its Linux Journey)

|

||||

[#]: via: (https://itsfoss.com/toyota-motors-linux-journey)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

Toyota Motors and its Linux Journey

|

||||

======

|

||||

|

||||

**This is a community submission from It’s FOSS reader Malcolm Dean.**

|

||||

|

||||

I spoke with Brian R. Lyons of TMNA (Toyota Motor North America) about the implementation of Linux in Toyota and Lexus infotainment systems. I found out that Automotive Grade Linux (AGL) is being used by several automobile manufacturers.

|

||||

|

||||

I put together a short article based on my discussion with Brian about Toyota and its tryst with Linux. I hope that Linux enthusiasts will like this quick little chat.

|

||||

|

||||

All [Toyota vehicles and Lexus vehicles are going to use Automotive Grade Linux][1] (AGL), mainly for their infotainment systems. This matters to Toyota Motor Corp because, as Mr. Lyons put it, “As a technology leader, Toyota realized that adopting open source development methodology is the best way to keep up with the rapid pace of new technologies”.

|

||||

|

||||

Toyota, along with other automotive companies, reasoned that going with a Linux-based operating system might be cheaper and quicker for updates and upgrades than using proprietary software.

|

||||

|

||||

Wow! Finally Linux in a vehicle. I use Linux every day on my desktop; what a great way to expand the use of this awesome software to a completely different industry.

|

||||

|

||||

I was curious about when Toyota decided to use [Automotive Grade Linux][2] (AGL). According to Mr. Lyons, it goes back to 2011.

|

||||

|

||||

> “Toyota has been an active member and contributor to AGL since its launch more than five years ago, collaborating with other OEMs, Tier 1s and suppliers to develop a robust, Linux-based platform with increased security and capabilities”

|

||||

|

||||

![Toyota Infotainment][3]

|

||||

|

||||

In 2011, [Toyota joined the Linux Foundation][4] and started discussions about IVI (In-Vehicle Infotainment) software with other car OEMs and software companies. As a result, in 2012, the Automotive Grade Linux working group was formed in the Linux Foundation.

|

||||

|

||||

In the AGL group, Toyota first took a “code first” approach, as is normal in open source projects, and then started the conversation about the initial direction by specifying requirements that had been discussed among car OEMs, IVI Tier-1 companies, software companies, and so on.

|

||||

|

||||

When it joined the Linux Foundation, Toyota had already realized that sharing software code among Tier-1 companies was going to be essential. Maintaining such a huge code base was very costly and was no longer a point of differentiation for Tier-1 companies. Toyota and its Tier-1 suppliers wanted to spend more resources on new functions and new user experiences rather than maintaining conventional code all by themselves.

|

||||

|

||||

This is a huge deal, as automotive companies have joined together to further their cooperation. Many companies have adopted AGL after finding proprietary software to be expensive.

|

||||

|

||||

Today, AGL is used for all Toyota and Lexus vehicles and is used in all markets where vehicles are sold.

|

||||

|

||||

As someone who has sold cars for Lexus, I think this is a huge step forward. I and other sales associates had many customers who would come back to speak with a technology specialist to learn about the full capabilities of their infotainment system.

|

||||

|

||||

I see this as a huge step forward for the Linux community and for users. The operating system we use on a daily basis is being put to use right in front of us, albeit in a modified form, but it is there nonetheless.

|

||||

|

||||

Where does this lead? Hopefully to a more user-friendly and less glitchy experience for consumers.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/toyota-motors-linux-journey

|

||||

|

||||

Author: [Abhishek Prakash][a]
Selected by: [lujun9972][b]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.linuxfoundation.org/press-release/2018/01/automotive-grade-linux-hits-road-globally-toyota-amazon-alexa-joins-agl-support-voice-recognition/

|

||||

[2]: https://www.automotivelinux.org/

|

||||

[3]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/01/toyota-interiors.jpg?resize=800%2C450&ssl=1

|

||||

[4]: https://www.linuxfoundation.org/press-release/2011/07/toyota-joins-linux-foundation/

sources/talk/20190114 Remote Working Survival Guide.md

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Remote Working Survival Guide)

|

||||

[#]: via: (https://www.jonobacon.com/2019/01/14/remote-working-survival/)

|

||||

[#]: author: (Jono Bacon https://www.jonobacon.com/author/admin/)

|

||||

|

||||

Remote Working Survival Guide

|

||||

======

|

||||

|

||||

|

||||

Remote working seems to be all the buzz. Apparently, [70% of professionals work from home at least once a week][1]. Similarly, [77% of people work more productively][2] when working remotely, and [68% of millennials would be more likely to consider a company if it offered remote working][3]. It seems to make sense: technology, connectivity, and culture seem to be setting the world up more and more for remote working. Oh, and home-brewed coffee is better than ever too.

|

||||

|

||||

I am going to write another piece on how companies should optimize for remote working (so make sure you [Join As a Member][4] to stay tuned; it is free).

|

||||

|

||||

Today though I want to **share recommendations for how individuals can do remote working well themselves**. Whether you are a full-time remote worker or have the option of working from home a few days a week, this article should hopefully be helpful.

|

||||

|

||||

Now, you need to know that **remote working is not a panacea**. Sure, it seems like hanging around at home in your jimjams, listening to your antisocial music, and sipping on buckets of coffee is perfect, but it isn’t for everyone.

|

||||

|

||||

Some people need the structure of an office. Some people need the social element of an office. Some people need to get out of the house. Some people lack the discipline to stay focused at home. Some people are avoiding the government coming and knocking on the door due to years of unpaid back taxes.

|

||||

|

||||

**Remote working is like a muscle: it can bring enormous strength and capabilities IF you train and maintain it**. If you don’t, your results are going to vary.

|

||||

|

||||

I have worked from home for the vast majority of my career. I love it. I am more productive, happier, and empowered when I work from home. I don’t dislike working in an office, and I enjoy the social element, but I am more in my “zone” when I work from home. I also love blisteringly heavy metal, which can pose a problem when the office don’t want to listen to [After The Burial][5].

|

||||

|

||||

![][6]

|

||||

“Squirrel.”

|

||||

[Credit][7]

|

||||

|

||||

I have learned how I need to manage remote work, using the right balance of work routine, travel, and other elements, and here are some of my recommendations. Be sure to **share yours in the comments**.

|

||||

|

||||

### 1\. You need discipline and routine (and to understand your “waves”)

|

||||

|

||||

Remote work really is a muscle that needs to be trained. Just like building actual muscle, there needs to be a clear routine and a healthy dollop of discipline mixed in.

|

||||

|

||||

Always get dressed (no jimjams). Set your start and end time for your day (I work 9am – 6pm most days). Choose your lunch break (mine is 12pm). Choose your morning ritual (mine is email followed by a full review of my client needs). Decide where your main workplace will be (mine is my home office). Decide when you will exercise each day (I do it at 5pm most days).

|

||||

|

||||

**Design a realistic routine and do it for 66 days**. On average, it takes roughly this long to build a habit. Try not to deviate from the routine. The more you stick to the routine, the less work it will seem further down the line. By the end of the 66 days it will feel natural and you won’t have to think about it.

|

||||

|

||||

Here’s the deal though, we don’t live in a vacuum ([cleaner, or otherwise][8]). We all have waves.

|

||||

|

||||

A wave is when you need a change of routine to mix things up. For example, in summertime I generally want more sunlight. I will often work outside in the garden. Near the holidays I get more distracted, so I need more structure in my day. Sometimes I just need more human contact, so I will work from coffee shops for a few weeks. Sometimes I just fancy working in the kitchen or on the couch. You need to learn your waves and listen to your body. **Build your habit first, and then modify it as you learn your waves**.

|

||||

|

||||

### 2\. Set expectations with your management and colleagues

|

||||

|

||||

Not everyone knows how to do remote working, and if your company is less familiar with remote working, you especially need to set expectations with colleagues.

|

||||

|

||||

This can be pretty simple: **when you have designed your routine, communicate it clearly to your management and team**. Let them know how they can get hold of you, how to contact you in an emergency, and how you will be collaborating while at home.

|

||||

|

||||

The communication component here is critical. There are some remote workers who are scared to leave their computer for fear that someone will send them a message while they are away (and they are worried people may think they are just eating Cheetos and watching Netflix).

|

||||

|

||||

You need time away. You need to eat lunch without one eye on your computer. You are not a 911 emergency responder. **Set expectations that sometimes you may not be immediately responsive, but you will get back to them as soon as possible**.

|

||||

|

||||

Similarly, set expectations on your general availability. For example, I set expectations with clients that I generally work from 9am – 6pm every day. Sure, if a client needs something urgently, I am more than happy to respond outside of those hours, but as a general rule I am usually working between those hours. This is necessary for a balanced life.

|

||||

|

||||

### 3\. Distractions are your enemy and they need managing

|

||||

|

||||

We all get distracted. It is human nature. It could be your young kid getting home and wanting to play Rescue Bots. It could be checking Facebook, Instagram, or Twitter to ensure you don’t miss any unwanted political opinions or photos of people’s lunches. It could be that there is something else going on in your life that is taking your attention (such as an upcoming wedding, event, or big trip).

|

||||

|

||||

**You need to learn what distracts you and how to manage it**. For example, I know I get distracted by my email and Twitter. I check it religiously and every check gets me out of the zone of what I am working on. I also get distracted by grabbing coffee and water, which then may turn into a snack and a YouTube video.

|

||||

|

||||

![][9]

|

||||

My nemesis for distractions.

|

||||

|

||||

The digital distractions have a simple solution: **lock them out**. Close down the tabs until you complete what you are doing. I do this all the time with big chunks of work: I lock out the distractions until I am done. It requires discipline, but all of this does.

|

||||

|

||||

The human elements are tougher. If you have a family, you need to make it clear that when you are at work, you need to be generally left alone. This is why a home office is so important: you need to set boundaries that mum or dad is working. Come in if there is an emergency, but otherwise they need to be left alone.

|

||||

|

||||

There are all kinds of opportunities for locking these distractions out. Put your phone on silent. Set yourself as away. Move to a different room (or building) where the distraction isn’t there. Again, be honest in what distracts you and manage it. If you don’t, you will always be at their mercy.

|

||||

|

||||

### 4\. Relationships need in-person attention

|

||||

|

||||

Some roles are more attuned to remote working than others. For example, I have seen great work from engineering, quality assurance, support, security, and other teams (typically more focused on digital collaboration). Other teams such as design or marketing often struggle more in remote environments (as they are often more tactile).

|

||||

|

||||

With any team though, having strong relationships is critical, and in-person discussion, collaboration, and socializing are essential to this. So many of our senses (such as body language) are removed in a digital environment, and these play a key role in how we build trust and relationships.

|

||||

|

||||

![][10]

|

||||

Rockets also help.

|

||||

|

||||

This is especially important if (a) you are new to a company and need to build these relationships, (b) you are new to a role and need to build relationships with your team, or (c) you are in a leadership position where building buy-in and engagement is a key part of your job.

|

||||

|

||||

**The solution? A sensible mix of remote and in-person time.** If your company is nearby, work from home part of the week and at the office part of the week. If your company is further away, schedule regular trips to the office (and set expectations with your management that you need this). For example, when I worked at XPRIZE I flew to LA every few weeks for a few days. When I worked at Canonical (who were based in London), we had sprints every three months.

|

||||

|

||||

### 5\. Stay focused, but cut yourself some slack

|

||||

|

||||

The crux of everything in this article is about building a capability, and developing a remote working muscle. This is as simple as building a routine, sticking to it, and having an honest view of your “waves” and distractions and how to manage them.

|

||||

|

||||

I see the world in a fairly specific way: **everything we do has the opportunity to be refined and improved**. For example, I have been public speaking now for over 15 years, but I am always discovering new ways to improve, and new mistakes to fix (speaking of which, see my [10 Ways To Up Your Public Speaking Game][11].)

|

||||

|

||||

There is a thrill in the discovery of new ways to get better, and to see every stumbling block and mistake as an “aha!” moment to kick ass in new and different ways. It is no different with remote working: look for patterns that help to unlock ways in which you can make your remote working time more efficient, more comfortable, and more fun.

|

||||

|

||||

![][12]

|

||||

Get these books. They are fantastic for personal development.

|

||||

See my [$150 Personal Development Kit][13] article

|

||||

|

||||

…but don’t go crazy over it. There are some people who obsess every minute of their day over how to get better. They beat themselves up constantly for “not doing well enough”, “not getting more done”, and not meeting their internal, unrealistic view of perfection.

|

||||

|

||||

We are humans. We are animals, and we are not robots. Always strive to improve, but be realistic that not everything will be perfect. You are going to have some off-days or off-weeks. You are going to struggle at times with stress and burnout. You are going to handle a situation poorly remotely that would have been easier in the office. Learn from these moments but don’t obsess over them. Life is too damn short.

|

||||

|

||||

**What are your tips, tricks, and recommendations? How do you manage remote working? What is missing from my recommendations? Share them in the comments box!**

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.jonobacon.com/2019/01/14/remote-working-survival/

|

||||

|

||||

Author: [Jono Bacon][a]
Selected by: [lujun9972][b]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://www.jonobacon.com/author/admin/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.cnbc.com/2018/05/30/70-percent-of-people-globally-work-remotely-at-least-once-a-week-iwg-study.html

|

||||

[2]: http://www.cosocloud.com/press-release/connectsolutions-survey-shows-working-remotely-benefits-employers-and-employees

|

||||

[3]: https://www.aftercollege.com/cf/2015-annual-survey

|

||||

[4]: https://www.jonobacon.com/join/

|

||||

[5]: https://www.facebook.com/aftertheburial/

|

||||

[6]: https://www.jonobacon.com/wp-content/uploads/2019/01/aftertheburial2.jpg

|

||||

[7]: https://skullsnbones.com/burial-live-photos-vans-warped-tour-denver-co/

|

||||

[8]: https://www.youtube.com/watch?v=wK1PNNEKZBY

|

||||

[9]: https://www.jonobacon.com/wp-content/uploads/2019/01/IMG_20190114_102429-1024x768.jpg

|

||||

[10]: https://www.jonobacon.com/wp-content/uploads/2019/01/15381733956_3325670fda_k-1024x576.jpg

|

||||

[11]: https://www.jonobacon.com/2018/12/11/10-ways-to-up-your-public-speaking-game/

|

||||

[12]: https://www.jonobacon.com/wp-content/uploads/2019/01/DwVBxhjX4AgtJgV-1024x532.jpg

|

||||

[13]: https://www.jonobacon.com/2017/11/13/150-dollar-personal-development-kit/


[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (The Art of Unix Programming, reformatted)

|

||||

[#]: via: (https://arp242.net/weblog/the-art-of-unix-programming.html)

|

||||

[#]: author: (Martin Tournoij https://arp242.net/)

|

||||

|

||||

The Art of Unix Programming, reformatted

|

||||

======

|

||||

|

||||

tl;dr: I reformatted Eric S. Raymond’s The Art of Unix Programming for readability; [read it here][1].

|

||||

|

||||

I recently wanted to look up a quote for an article I was writing, and I was fairly sure I had read it in The Art of Unix Programming. Eric S. Raymond (esr) has [kindly published it online][2], but it’s difficult to search as it’s distributed over many different pages, and the formatting is not exactly conducive to readability.

|

||||

|

||||

I `wget --mirror`’d it to my drive, and started out with a simple [script][3] to join everything into a single page, but eventually ended up rewriting a lot of the HTML from crappy 2003 DocBook-generated tagsoup to more modern standards, and I slapped on some CSS to make it more readable.
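
The author's own script is linked above and is not reproduced here. As a hypothetical sketch of the first step only, assuming the mirrored chapters are HTML files you already have in reading order, you could pull out each page's `<body>` contents and join them into one document. The function name `join_pages` and the file names in the usage comment are illustrative, and a regular expression is a deliberately crude way to handle tag soup:

```python
import re
from pathlib import Path

# Crude but tolerant: grab everything between <body ...> and </body>
BODY_RE = re.compile(r"<body[^>]*>(.*?)</body>", re.IGNORECASE | re.DOTALL)


def join_pages(paths, title="The Art of Unix Programming"):
    """Concatenate the <body> contents of several HTML files into one page.

    `paths` must already be in reading order (an assumption; a real tool
    would derive the order from the book's table of contents).
    """
    parts = []
    for path in paths:
        html = Path(path).read_text(encoding="utf-8", errors="replace")
        match = BODY_RE.search(html)
        # Fall back to the whole file if it has no <body> tag
        parts.append(match.group(1) if match else html)
    body = "\n<hr>\n".join(parts)
    return (
        "<!DOCTYPE html>\n"
        f"<html><head><meta charset=\"utf-8\"><title>{title}</title></head>\n"
        f"<body>\n{body}\n</body></html>\n"
    )


# Hypothetical usage with files from a `wget --mirror` download:
# combined = join_pages(["index.html", "ch01s01.html", "ch01s02.html"])
# Path("taoup.html").write_text(combined, encoding="utf-8")
```

Cleaning up the joined markup into modern HTML, as the author went on to do, is a separate and much larger job.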

|

||||

|

||||