mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

commit

0a051d6067

166

published/20181220 7 CI-CD tools for sysadmins.md

Normal file

@ -0,0 +1,166 @@

[#]: collector: (lujun9972)
[#]: translator: (jdh8383)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10578-1.html)
[#]: subject: (7 CI/CD tools for sysadmins)
[#]: via: (https://opensource.com/article/18/12/cicd-tools-sysadmins)
[#]: author: (Dan Barker https://opensource.com/users/barkerd427)

7 CI/CD tools for sysadmins
======

> An easy guide to the top open source continuous integration, continuous delivery, and continuous deployment (CI/CD) tools.

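Although continuous integration, continuous delivery, and continuous deployment (CI/CD) have existed in the developer community for many years, and some organizations have experience applying them in their operations departments, most companies have not made the attempt. For many organizations, it has become essential for operations teams to handle CI/CD tools as proficiently as their development counterparts.

CI/CD practices can be applied to infrastructure, third-party applications, and internally developed applications alike. You will find many different tools, but they all share a similar design model. And perhaps most importantly, leading your company into these practices will make you indispensable within the company and a model for others to learn from.

Some organizations have years of CI/CD experience on their own infrastructure, commonly using tools such as [Ansible][1], [Chef][2], or [Puppet][3]. Other tools, such as [Test Kitchen][4], allow tests to run on the infrastructure where the application will eventually be deployed. In fact, with more advanced configurations you can even deploy the application to a simulated "production environment" with realistic load and run application-level tests there. Still, simply being able to test infrastructure is a remarkable achievement. The configuration management tool Terraform can use Test Kitchen to quickly create more [ephemeral][5] and [idempotent][6] infrastructure configurations than its predecessors allowed. Add Linux containers and Kubernetes, and within hours you can create a production-like set of configuration parameters and system resources to test the entire infrastructure and the applications deployed on it, something that might previously have taken months. Deleting and recreating the whole test environment is also very easy.

Of course, as a beginner you can also put network configurations and DDL (data definition language) files under version control and start experimenting with some simple CI/CD processes. These may only check syntax, semantics, or certain best practices, but that is how most development pipelines actually start. Once the scaffolding is up, building becomes much easier, and once you get started you will discover all kinds of use cases for pipelines.

For example, I regularly write a newsletter within my company. I build the email template with [MJML][7] and keep it under version control. I usually maintain a web version, but some colleagues prefer PDF, so I built a [pipeline][8]. Whenever I finish a newsletter, I submit a merge request in GitLab. This automatically creates an index.html file with links to the HTML and PDF versions of the newsletter; the HTML and PDF files are generated in the same pipeline. Nothing is published until someone has reviewed and approved it. Once GitLab Pages has published the site, I can download a copy of the HTML to send out as the newsletter. In the future, I will change the process so that the newsletter is sent automatically when the merge request is merged or after a particular review step. The logic is not complicated, but it saves me a lot of time. Saving you time is really the core purpose of these tools.

The key is to build tools at an abstraction layer so that, with small changes, they can handle different problems. It is worth noting that the process I created requires almost no code apart from some [lightweight HTML templates][9], some [Node.js code that converts HTML files to PDF][10], and some [Node.js code that generates the index page][11].

Some of this may look a little complicated, but most of it came from the tutorials for the various tools I use. Many developers will also be happy to work with you, because they will find these things useful once they are done. The code links above are for the newsletter sent to [DevOps KC][12] (LCTT translator's note: a local DevOps organization); most of the code that builds the site came from my work on the internal newsletter project.

Most of the tools listed below can provide this type of interaction, but some offer a slightly different model. The emerging model in this field is to describe a pipeline declaratively, for example in YAML, with each stage being ephemeral and idempotent. Many of these systems also build a [directed acyclic graph (DAG)][13] to ensure that the stages of a pipeline run in the correct order.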
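
The DAG ordering idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the stage names and the `pipeline` mapping are hypothetical, the standard-library `graphlib` module requires Python 3.9 or later, and real CI/CD systems use their own schedulers rather than this code.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each stage maps to the set of stages it depends on.
pipeline = {
    "lint": set(),
    "build": {"lint"},
    "unit-test": {"build"},
    "package": {"build"},
    "deploy": {"unit-test", "package"},
}


def stage_order(stages):
    """Return one valid execution order for the stages.

    TopologicalSorter raises graphlib.CycleError if the dependencies
    contain a cycle, i.e. if the pipeline is not a DAG.
    """
    return list(TopologicalSorter(stages).static_order())


order = stage_order(pipeline)
print(order)
```

Stages with no dependency between them (here `unit-test` and `package`) may appear in either order, which is also why a scheduler is free to run them in parallel.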

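These stages usually run in Linux containers and are no different from ordinary containers. Some tools, such as [Spinnaker][14], focus only on the deployment component and offer operational features that other tools do not. [Jenkins][15], by contrast, has generally stored pipeline configuration as XML, with most interaction taking place in the graphical interface, although the more recent approach is to use a [domain-specific language (DSL)][16] such as [Groovy][17]. In addition, Jenkins jobs typically run on nodes that have a dedicated Java agent installed along with a jumble of plugins and pre-installed components.

Jenkins introduced the concept of pipelines in its own tooling, but they are not easy to use and contain a few pitfalls. Recently, the creator of Jenkins decided to lead the community in a new direction, hoping to bring new energy to the project and make CI/CD truly widespread (LCTT translator's note: see the Jenkins section below). I think the most interesting idea is building a cloud-native Jenkins that can turn a Kubernetes cluster into a Jenkins CI/CD platform.

As you learn more about these tools and bring these practices into your company and operations department, you will quickly gain followers, because you will have a way to improve your own productivity and that of others. We all have years of accumulated technical debt to deal with; if you can give your colleagues enough time to work through that backlog, imagine how grateful they will be. Beyond that, your customers will start to see applications becoming more and more stable, management will see you as a highly capable contributor, and you will have more leverage the next time you negotiate compensation or go to an interview.

Let's dig into these tools. We will give a brief introduction to each one and share some useful links.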

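### GitLab CI

- [Project page](https://about.gitlab.com/product/continuous-integration/)
- [Source code](https://gitlab.com/gitlab-org/gitlab-ce/)
- License: MIT

GitLab is a relative newcomer to the CI/CD field, yet it took first place in the [Forrester Wave report on continuous integration tools][20]. That is a remarkable achievement in a field with such a high level of quality and competition. What makes GitLab CI so successful? It uses a YAML file to describe the entire pipeline. It also has a feature called Auto DevOps, which can automatically generate a pipeline with several built-in tests for simpler projects. The system uses [Herokuish buildpacks][21] to determine the language and how to build the application. Some languages can also manage databases, which is a real change for building new applications and getting them deployed to production right from the start of development. GitLab integrates natively with Kubernetes and can automatically deploy your application to a Kubernetes cluster using one of several strategies, such as gradual (percentage-based) rollouts and blue-green deployments.

Beyond its continuous integration capabilities, GitLab offers many complementary features: operational monitoring, with Prometheus deployed alongside your application; project planning and management through GitLab Issues, Epics, and Milestones; security checks built into the pipeline, with results aggregated across projects; and a web IDE for editing code in GitLab, which can also give a quick view of a pipeline preview or its execution status.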

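### GoCD

- [Project page](https://www.gocd.org/)
- [Source code](https://github.com/gocd/gocd)
- License: Apache 2.0

GoCD comes from the long-established software company Thoughtworks, which is testament enough to its capability and efficiency. To me, GoCD's standout feature is its [Value Stream Map (VSM)][22]. In practice, the output of one pipeline can become the input of the next, so pipelines can be chained together. This helps different development teams stay independent throughout the development process. For example, when introducing a CI/CD system, some long-established organizations want to keep their teams separate from one another, and this is where the VSM is useful: when everyone uses the same tool, it is easy to spot workflow bottlenecks in the VSM and then use that map to adjust teams or find ways to improve efficiency.

Configuring a VSM for each of the company's products is very valuable. GoCD can [store configuration in JSON or YAML][23] and can visually display wait times, which lowers the cost of learning it for an organization. When you first use GoCD to build your own process, start with manual approvals. Have every team use manual approvals as well, so that you can begin collecting data and finding likely bottlenecks.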

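### Travis CI

- [Project page](https://docs.travis-ci.com/)
- [Source code](https://github.com/travis-ci/travis-ci)
- License: MIT

Travis CI was the first software as a service (SaaS) CI system I used, and the experience was very good. Pipeline configuration is stored as YAML alongside the source code, and it integrates seamlessly with tools such as GitHub. As far as I recall, my pipelines never failed because of Travis CI, whose uptime is very high. Besides the SaaS version, there is a version you can host yourself. I have not run that version; it has a lot of components, and installing all of them looked daunting. I suspect deploying it to Kubernetes would be easier: [Travis CI provides Helm charts][26]. These charts do not yet deploy every component, but I am sure they will grow over time. If you would rather not deal with these details, there is also an enterprise version.

If you are developing an open source project, you can use the SaaS version of Travis CI for free and enjoy excellent service from a top-notch team. This saves a lot of trouble: you can develop open source projects on a fairly common platform (such as GitHub) without finding servers to run anything.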

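### Jenkins

- [Project page](https://jenkins.io/)
- [Source code](https://github.com/jenkinsci/jenkins)
- License: MIT

Jenkins is the original veteran of the CI/CD world and the de facto standard. I strongly recommend reading "[Jenkins: Shifting Gears][27]" by Kohsuke, the creator of Jenkins and CTO of CloudBees. The article matches how I have felt about Jenkins and its community over the past decade. In it he describes some needs that have been voiced loudly over the past few years, and I am glad to see CloudBees leading this change. For a long time, Jenkins has been somewhat hard for non-developers to approach, and it has long been a burden on its administrators. Fortunately, these are exactly the problems they are setting out to solve.

[Jenkins Configuration as Code][28] (JCasC) should help administrators with the configuration complexity that has plagued them for years. As with other CI/CD systems, the Jenkins master can be configured by editing a simple YAML file. [Jenkins Evergreen][29] makes configuration easier still by providing predefined Jenkins configurations for different use cases that you can simply adopt. These distributions should be easier to maintain and upgrade than the standard Jenkins distribution.

Jenkins 2 introduced native pipeline functionality with two types of pipelines, which [I discussed][30] in a workshop at LISA (LCTT translator's note: a conference on system architecture and operations) in 2017. Neither is as simple as YAML, but both work well for complex tasks.

[Jenkins X][31] is a brand-new variant of Jenkins intended to deliver a cloud-native Jenkins (at least as far as the user can tell). It will use JCasC and Evergreen and integrates more tightly with Kubernetes. This is an exciting time for Jenkins, and I look forward to seeing it innovate in this space and continue to lead.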

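### Concourse CI

- [Project page](https://concourse-ci.org/)
- [Source code](https://github.com/concourse/concourse)
- License: Apache 2.0

I first learned about Concourse from folks at Pivotal Labs, when it was in early beta and there were few tools like it. The system is built on microservices, and each job runs in a container. One of its nicest features, which other tools lack, is the ability to run a job from your local system with your local changes. This means you can develop entirely locally (assuming you are connected to the Concourse server) and build your project just as it would be built in the real pipeline. You can also rerun the build directly from your local machine after changing the code to check the result of your changes.

Concourse also has a simple extension system built on the fundamental concept of "resources." Essentially, every new feature you want to add to a pipeline can be implemented as a Docker image and included in your configuration as a new resource type. This keeps each piece of functionality encapsulated in a single immutable artifact that can be modified and upgraded independently, so changing one will not affect other builds.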

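### Spinnaker

- [Project page](https://www.spinnaker.io/)
- [Source code](https://github.com/spinnaker/spinnaker)
- License: Apache 2.0

Spinnaker comes from Netflix and is more concerned with continuous deployment than continuous integration. It can integrate with other tools, such as Travis and Jenkins, to kick off test and deployment pipelines. It also integrates with monitoring tools such as Prometheus and Datadog and can use the metrics they provide to decide how to deploy. In a canary deployment, for example, the collected metrics can be used to judge whether the latest release has degraded the service and should be rolled back immediately, or whether everything looks fine and the rollout should continue.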
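
The kind of decision a canary analysis makes can be illustrated with a deliberately simplified Python sketch. Everything in it is an assumption made for illustration: the `Metrics` shape, the `canary_verdict` function, and the 1% tolerance are hypothetical and are not Spinnaker's API or its actual analysis, which compares many metrics statistically.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    """Aggregated monitoring data for one deployment group (hypothetical shape)."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        # Avoid dividing by zero when a group has served no traffic yet.
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(baseline: Metrics, canary: Metrics, tolerance: float = 0.01) -> str:
    """Return "rollback" if the canary's error rate exceeds the baseline's
    by more than `tolerance`, otherwise "continue"."""
    if canary.error_rate - baseline.error_rate > tolerance:
        return "rollback"
    return "continue"


baseline = Metrics(requests=10_000, errors=50)  # 0.5% of requests failed
healthy = Metrics(requests=1_000, errors=6)     # 0.6% of requests failed
degraded = Metrics(requests=1_000, errors=40)   # 4.0% of requests failed

print(canary_verdict(baseline, healthy))
print(canary_verdict(baseline, degraded))
```

A single error-rate threshold keeps the sketch short; a real setup would typically also weigh latency and resource metrics and require enough traffic before judging.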

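One unusual but critical aspect of continuous deployment is often overlooked, and it may sound confusing: Spinnaker can help make continuous deployment less "continuous." If a major problem occurs during the deployment process, it can halt the process to prevent a bad deployment. It can also require a manual approval at the most critical moments before a new version is released, so that the overall benefit is maximized. After all, the main purpose of CI/CD is to get updated code deployed immediately when the business needs to change.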

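### Screwdriver

- [Project page](http://screwdriver.cd/)
- [Source code](https://github.com/screwdriver-cd/screwdriver)
- License: BSD

Screwdriver is simple yet powerful software. It uses a microservices architecture and relies on tools such as Nomad, Kubernetes, and Docker as its execution engines. There is a good official [deployment tutorial][34] covering deployment to AWS and Kubernetes, though it would be even better once the in-progress [Helm chart][35] is finished.

Screwdriver also uses YAML to describe its pipelines and has many sensible defaults, which cuts down on configuration repeated across pipelines. Configuration files can describe advanced workflows with complex dependencies between jobs. For example, a job can run before another job starts or after it finishes; jobs can run in parallel or in sequence; and, even better, you can predefine a job that is triggered only by particular pull requests, without triggering the jobs that depend on it. This gives your pipelines a degree of isolation between when a built artifact should be deployed to production and when it should only be reviewed.

---

That is only a brief introduction to these CI/CD tools; each has many more cool features waiting for you to explore. They are all open source and free to use, so go deploy them and see which one suits you best.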

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/12/cicd-tools-sysadmins

Author: [Dan Barker][a]
Topic selection: [lujun9972][b]
Translator: [jdh8383](https://github.com/jdh8383)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/barkerd427
[b]: https://github.com/lujun9972
[1]: https://www.ansible.com/
[2]: https://www.chef.io/
[3]: https://puppet.com/
[4]: https://github.com/test-kitchen/test-kitchen
[5]: https://www.merriam-webster.com/dictionary/ephemeral
[6]: https://en.wikipedia.org/wiki/Idempotence
[7]: https://mjml.io/
[8]: https://gitlab.com/devopskc/newsletter/blob/master/.gitlab-ci.yml
[9]: https://gitlab.com/devopskc/newsletter/blob/master/index/index.html
[10]: https://gitlab.com/devopskc/newsletter/blob/master/html-to-pdf.js
[11]: https://gitlab.com/devopskc/newsletter/blob/master/populate-index.js
[12]: https://devopskc.com/
[13]: https://en.wikipedia.org/wiki/Directed_acyclic_graph
[14]: https://www.spinnaker.io/
[15]: https://jenkins.io/
[16]: https://martinfowler.com/books/dsl.html
[17]: http://groovy-lang.org/
[18]: https://about.gitlab.com/product/continuous-integration/
[19]: https://gitlab.com/gitlab-org/gitlab-ce/
[20]: https://about.gitlab.com/2017/09/27/gitlab-leader-continuous-integration-forrester-wave/
[21]: https://github.com/gliderlabs/herokuish
[22]: https://www.gocd.org/getting-started/part-3/#value_stream_map
[23]: https://docs.gocd.org/current/advanced_usage/pipelines_as_code.html
[24]: https://docs.travis-ci.com/
[25]: https://github.com/travis-ci/travis-ci
[26]: https://github.com/travis-ci/kubernetes-config
[27]: https://jenkins.io/blog/2018/08/31/shifting-gears/
[28]: https://jenkins.io/projects/jcasc/
[29]: https://github.com/jenkinsci/jep/blob/master/jep/300/README.adoc
[30]: https://danbarker.codes/talk/lisa17-becoming-plumber-building-deployment-pipelines/
[31]: https://jenkins-x.io/
[32]: https://concourse-ci.org/
[33]: https://github.com/concourse/concourse
[34]: https://docs.screwdriver.cd/cluster-management/kubernetes
[35]: https://github.com/screwdriver-cd/screwdriver-chart

@ -0,0 +1,156 @@

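Annoying Experiences Every Linux Gamer Never Wanted
===================

[][10]

(LCTT translator's note: the original article was published in 2016, so some of the information may be out of date.)

[Gaming on Linux][12] is nothing new, and there are even dedicated [Linux gaming distributions][13], but that does not mean gaming on Linux is as smooth as it is on Windows.

What should we be thinking about to make sure we enjoy games as much as Windows users do?

[Wine][14], [PlayOnLinux][15], and similar software cannot always run every popular Windows game. In this article, I want to discuss several factors that must be addressed to get the best Linux gaming experience.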

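### #1 SteamOS is open source, but Steam for Linux is not

As the [SteamOS page][16] says, even though SteamOS is an open source platform, Steam for Linux remains proprietary software. If Steam for Linux were open source as well, it would receive enormous support from the open source community. Since it is not, [the birth of Project Ascension was inevitable][17]:

- [Destination: Project Ascension • UI Design Mockups Reveal](https://youtu.be/07UiS5iAknA)

Ascension is an open source game launcher designed to launch games bought and downloaded from any platform: games from Steam, [Origin][18], or Uplay, games downloaded directly from a developer's website, or games from DVD or CD-ROM.

Project Ascension began like this: [an opinion someone shared][19] sparked an interesting discussion among readers in the gaming community, who offered their own views and suggestions.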

### #2 Performance compared with Windows

Running Windows games on Linux is not always easy. Thanks to a feature called [CSMT][20] (command stream multi-threading), PlayOnLinux now handles these performance problems better, though Windows-level performance is still a long way off.

Native Linux support for games has never been satisfactory in past releases.

Last year, SteamOS was reported to perform [significantly worse][21] than Windows in games. Tomb Raider was released on SteamOS and Steam for Linux last year, but its benchmark results could not match its performance on Windows.

- [Destination: Tomb Raider benchmark video comparison, Linux vs Windows 10](https://youtu.be/nkWUBRacBNE)

This is clearly because the game was developed for [DirectX][23] rather than [OpenGL][24].

Tomb Raider is the [first game to use TressFX][25]. The following video includes a TressFX comparison:

- [Destination: Tomb Raider Benchmark - Ubuntu 15.10 vs Windows 8.1 + Ubuntu 16.04 vs Windows 10](https://youtu.be/-IeY5ZS-LlA)

Here is another interesting comparison, which shows that Wine + CSMT delivers much better performance than the native Linux version on Steam! That is the power of open source!

- [Destination: [LinuxBenchmark] Tomb Raider Linux vs Wine comparison](https://youtu.be/sCJkC6oJ08A)

TressFX was turned off to avoid losing FPS.

Here is another Linux versus Windows comparison, this time for the recently released Linux version of "[Life is Strange][27]":

- [Destination: Life is Strange on radeonsi (Linux nine_csmt vs Windows 10)](https://youtu.be/Vlflu-pIgIY)

It is good to see that [Steam for Linux][28] is starting to show better performance than Windows for this new game.

Before releasing any Linux version of a game, developers should consider optimizing it, especially if it is a DirectX game that needs conversion to OpenGL. We really hope [Deus Ex: Mankind Divided][29] will benchmark well on Linux when it is released. As it is a DirectX game, we hope it is ported well to Linux. [Here is what the game's executive director had to say][30].

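### #3 Proprietary NVIDIA drivers

Compared with [NVIDIA][32], [AMD's support for open source][31] is definitely commendable. While [AMD][33] driver support on Linux is quite good thanks to its better open source driver, NVIDIA graphics card owners still have to use the proprietary NVIDIA driver because of the limited capabilities of Nouveau, the open source NVIDIA driver.

In the past, Linus Torvalds himself has shared his view that NVIDIA's Linux support is completely unacceptable.

- [Destination: Linus Torvalds Publicly Attacks NVidia for lack of Linux & Android Support](https://youtu.be/O0r6Pr_mdio)

You can watch the complete [talk][35] here. Although NVIDIA responded with [a promise of better Linux support][36], its open source graphics driver has remained as weak as before.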

### #4 Need for Uplay and Origin DRM support on Linux

- [Destination: Uplay #1 Rayman Origins em Linux - como instalar - ago 2016](https://youtu.be/rc96NFwyxWU)

The video above shows how to install [Uplay][37] DRM on Linux. The uploader also advises against using Wine as the main way to run applications and games on Linux, and encourages using native applications instead.

The following video is a tutorial on installing [Origin][38] DRM on Linux.

- [Destination: Install EA Origin in Ubuntu with PlayOnLinux (Updated)](https://youtu.be/ga2lNM72-Kw)

Digital rights management (DRM) software adds another layer of obstacles to running games, making the already challenging task of running Windows games well on Linux even harder. Besides getting the game itself to run, Wine also has to run DRM software such as Uplay or Origin. It would be great if Linux had native versions of Uplay and Origin, as it does with Steam.

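### #5 DirectX 11 support for Linux

Even though there are tools for running Windows applications on Linux, every game needs its own set of tweaks to be playable on Linux. Although CodeWeavers announced [DirectX 11 support for Linux][40] last year, there is still a long way to go before newly launched titles can be played smoothly on Linux.

For now, you can [buy CrossOver from CodeWeavers][41] to get the best DirectX 11 support available. This [thread][42] on the Arch Linux forum clearly shows how much effort it takes to make this dream come true. Here is an interesting [find][43] from a [Reddit thread][44], which mentions [DirectX 11 patches from CodeWeavers][45]. That is certainly good news.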

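### #6 Not all Steam games are available for Linux

This is an important point to consider, as Linux gamers repeatedly miss out on major game releases because most of them come out on Windows. Here is a [guide to installing the Windows version of Steam on Linux][46].

### #7 Better support from game publishers for OpenGL

Currently, developers and publishers focus primarily on DirectX rather than OpenGL for game development. Now that Steam is officially available on Linux, developers should consider developing for OpenGL as well.

[Direct3D][47] is made solely for the Windows platform. The OpenGL API is an open standard, and it is implemented not only on Windows but also on a wide variety of other platforms.

Although it is quite an old article, [this valuable resource][48] shares a lot of thoughtful information about the state of OpenGL and DirectX. Its points are sensible, and its chronological account of events is enlightening.

Publishers bringing their titles to Linux should not overlook the fact that developing directly for OpenGL is far more cost-effective than porting from DirectX to OpenGL. If a conversion is unavoidable, the port must be carefully optimized and researched. The release may be delayed, but it is well worth it.

Have more annoyances to share? Be sure to let us know in the comments.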

--------------------------------------------------------------------------------

via: https://itsfoss.com/linux-gaming-problems/

Author: [Avimanyu Bandyopadhyay][a]
Translator: [tomjlw](https://github.com/tomjlw)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://itsfoss.com/author/avimanyu/
[1]:https://itsfoss.com/author/avimanyu/
[2]:https://itsfoss.com/linux-gaming-problems/#comments
[3]:https://www.facebook.com/share.php?u=https%3A%2F%2Fitsfoss.com%2Flinux-gaming-problems%2F%3Futm_source%3Dfacebook%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare
[4]:https://twitter.com/share?original_referer=/&text=Annoying+Experiences+Every+Linux+Gamer+Never+Wanted%21&url=https://itsfoss.com/linux-gaming-problems/%3Futm_source%3Dtwitter%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare&via=itsfoss2
[5]:https://plus.google.com/share?url=https%3A%2F%2Fitsfoss.com%2Flinux-gaming-problems%2F%3Futm_source%3DgooglePlus%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare
[6]:https://www.linkedin.com/cws/share?url=https%3A%2F%2Fitsfoss.com%2Flinux-gaming-problems%2F%3Futm_source%3DlinkedIn%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare
[7]:http://www.stumbleupon.com/submit?url=https://itsfoss.com/linux-gaming-problems/&title=Annoying+Experiences+Every+Linux+Gamer+Never+Wanted%21
[8]:https://www.reddit.com/submit?url=https://itsfoss.com/linux-gaming-problems/&title=Annoying+Experiences+Every+Linux+Gamer+Never+Wanted%21
[9]:https://itsfoss.com/wp-content/uploads/2016/09/Linux-Gaming-Problems.jpg
[10]:https://itsfoss.com/wp-content/uploads/2016/09/Linux-Gaming-Problems.jpg
[11]:http://pinterest.com/pin/create/bookmarklet/?media=https://itsfoss.com/wp-content/uploads/2016/09/Linux-Gaming-Problems.jpg&url=https://itsfoss.com/linux-gaming-problems/&is_video=false&description=Linux%20gamer%27s%20problem
[12]:https://itsfoss.com/linux-gaming-guide/
[13]:https://itsfoss.com/linux-gaming-distributions/
[14]:https://itsfoss.com/use-windows-applications-linux/
[15]:https://www.playonlinux.com/en/
[16]:http://store.steampowered.com/steamos/
[17]:http://www.ibtimes.co.uk/reddit-users-want-replace-steam-open-source-game-launcher-project-ascension-1498999
[18]:https://www.origin.com/
[19]:https://www.reddit.com/r/pcmasterrace/comments/33xcvm/we_hate_valves_monopoly_over_pc_gaming_why/
[20]:https://github.com/wine-compholio/wine-staging/wiki/CSMT
[21]:http://arstechnica.com/gaming/2015/11/ars-benchmarks-show-significant-performance-hit-for-steamos-gaming/
[22]:https://www.gamingonlinux.com/articles/tomb-raider-benchmark-video-comparison-linux-vs-windows-10.7138
[23]:https://en.wikipedia.org/wiki/DirectX
[24]:https://en.wikipedia.org/wiki/OpenGL
[25]:https://www.gamingonlinux.com/articles/tomb-raider-released-for-linux-video-thoughts-port-report-included-the-first-linux-game-to-use-tresfx.7124
[26]:https://itsfoss.com/osu-new-linux/
[27]:http://lifeisstrange.com/
[28]:https://itsfoss.com/install-steam-ubuntu-linux/
[29]:https://itsfoss.com/deus-ex-mankind-divided-linux/
[30]:http://wccftech.com/deus-ex-mankind-divided-director-console-ports-on-pc-is-disrespectful/
[31]:http://developer.amd.com/tools-and-sdks/open-source/
[32]:http://nvidia.com/
[33]:http://amd.com/
[34]:http://www.makeuseof.com/tag/open-source-amd-graphics-now-awesome-heres-get/
[35]:https://youtu.be/MShbP3OpASA
[36]:https://itsfoss.com/nvidia-optimus-support-linux/
[37]:http://uplay.com/
[38]:http://origin.com/
[39]:https://itsfoss.com/linux-foundation-head-uses-macos/
[40]:http://www.pcworld.com/article/2940470/hey-gamers-directx-11-is-coming-to-linux-thanks-to-codeweavers-and-wine.html
[41]:https://itsfoss.com/deal-run-windows-software-and-games-on-linux-with-crossover-15-66-off/
[42]:https://bbs.archlinux.org/viewtopic.php?id=214771
[43]:https://ghostbin.com/paste/sy3e2
[44]:https://www.reddit.com/r/linux_gaming/comments/3ap3uu/directx_11_support_coming_to_codeweavers/
[45]:https://www.codeweavers.com/about/blogs/caron/2015/12/10/directx-11-really-james-didnt-lie
[46]:https://itsfoss.com/linux-gaming-guide/
[47]:https://en.wikipedia.org/wiki/Direct3D
[48]:http://blog.wolfire.com/2010/01/Why-you-should-use-OpenGL-and-not-DirectX

@ -0,0 +1,96 @@

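8 KDE Plasma Tips and Tricks to Improve Your Productivity
======

KDE's Plasma is well known as one of the most powerful desktop environments for Linux. It is highly configurable and looks great too, but you only get the most out of it once you have finished configuring everything.

You can easily configure the Plasma desktop and use its many convenient, time-saving features to speed up your work, giving you a desktop environment that helps you rather than getting in your way.

These tips are in no particular order, so you don't need to read them in sequence. Just pick the ones that best fit your workflow.

**Related**: [10 of the Best KDE Plasma Applications You Should Try][1]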

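### 1. Multimedia Controls

This is not much of a tip, since it is easy to keep in mind. Plasma puts multimedia controls everywhere. You don't need to open your media player every time you want to pause, resume, or skip a song: you can hover over the minimized window, or even use the controls on the lock screen. There is no need to log in just to change a song or because you forgot to pause one.

### 2. KRunner

![KDE Plasma KRunner][2]

KRunner is an often-praised feature of the Plasma desktop. Most people are used to digging through layers of the application launcher menu to find the program they want to start. With KRunner, you don't have to.

To use KRunner, make sure the focus is on the desktop itself (click on the desktop rather than on a window). Then start typing the name of the application you want to launch. KRunner will automatically drop down from the top of your screen with suggestions. Click the match you are looking for or press Enter. This is faster than remembering which category each application belongs to.

### 3. Jump Lists

![KDE Plasma Jump Lists][3]

Jump lists are a fairly recent addition to the Plasma desktop. They let you launch an application directly into a specific section or feature.

So if you have a launcher icon on a menu bar, you can right-click it to get a list of places to jump to. Select where you want to go, and you are off.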

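### 4. KDE Connect

![KDE Connect Menu Android][4]

If you have an Android phone, [KDE Connect][5] is a big help. It connects your phone to your desktop so that you can share things seamlessly between the two devices.

With KDE Connect, you can see your [Android device's notifications][6] on your desktop in real time. It also lets you send and receive text messages from Plasma without ever picking up your phone.

KDE Connect also lets you send files and share web pages between your phone and your computer. You can move easily from one device to the other without hassle or losing your train of thought.

### 5. Plasma Vaults

![KDE Plasma Vault][7]

Plasma Vaults is another new addition to the Plasma desktop. It is KDE's simple solution for encrypted files and folders. If you don't work with encrypted files, this one won't save you any time. If you do, Vaults is a much simpler approach.

Plasma Vaults lets you create encrypted directories as a regular user, without root, and manage them from your task bar. You can mount and unmount the directories quickly, without external programs or additional privileges.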

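### 6. Pager Widget

![KDE Plasma Pager][8]

Configure your desktop with the pager widget. It lets you easily switch to three additional workspaces for much more screen room.

Add the widget to your menu bar, and you can slide between multiple workspaces. Each one is the same size as your original desktop, so you get several times the space of a full screen. That lets you lay out more windows without being buried in a jumble of minimized ones.

### 7. Create a Dock

![KDE Plasma Dock][9]

Plasma is known for its flexibility and configurability, and that is one of its strengths. If you have programs that you use all the time, consider setting up an OS X-style dock with them. You will be able to launch them with a single click rather than digging through a menu or typing their names.

### 8. Add a File Tree to Dolphin

![Plasma Dolphin Directory][10]

It is much easier to browse folders in a directory tree. Dolphin, Plasma's default file manager, has built-in functionality to show a directory listing as a tree at the side of the folder window.

To enable the directory tree, click the "Control" tab, then "Configure Dolphin," "View Modes," and "Details," and finally select "Expandable Folders."

Remember that these are only tips; don't force yourself to do anything that gets in your way. You may hate using the file tree in Dolphin, and you may never use the pager, and that is fine. There may also be features you love that are not listed here. Pick what works for you. That said, some of these tips are bound to help you through the rough spots of your working day.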

--------------------------------------------------------------------------------

via: https://www.maketecheasier.com/kde-plasma-tips-tricks-improve-productivity/

Author: [Nick Congleton][a]
Translator: [cycoe](https://github.com/cycoe)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.maketecheasier.com/author/nickcongleton/
[1]:https://www.maketecheasier.com/10-best-kde-plasma-applications/ (10 of the Best KDE Plasma Applications You Should Try)
[2]:https://www.maketecheasier.com/assets/uploads/2017/10/pe-krunner.jpg (KDE Plasma KRunner)
[3]:https://www.maketecheasier.com/assets/uploads/2017/10/pe-jumplist.jpg (KDE Plasma Jump Lists)
[4]:https://www.maketecheasier.com/assets/uploads/2017/05/kde-connect-menu-e1494899929112.jpg (KDE Connect Menu Android)
[5]:https://www.maketecheasier.com/send-receive-sms-linux-kde-connect/
[6]:https://www.maketecheasier.com/android-notifications-ubuntu-kde-connect/
[7]:https://www.maketecheasier.com/assets/uploads/2017/10/pe-vault.jpg (KDE Plasma Vault)
[8]:https://www.maketecheasier.com/assets/uploads/2017/10/pe-pager.jpg (KDE Plasma Pager)
[9]:https://www.maketecheasier.com/assets/uploads/2017/10/pe-dock.jpg (KDE Plasma Dock)
[10]:https://www.maketecheasier.com/assets/uploads/2017/10/pe-dolphin.jpg (Plasma Dolphin Directory)

@ -0,0 +1,79 @@

[#]: collector: (lujun9972)
[#]: translator: (lujun9972)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10574-1.html)
[#]: subject: (Get started with Org mode without Emacs)
[#]: via: (https://opensource.com/article/19/1/productivity-tool-org-mode)
[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

Get started with Org mode without Emacs
======

> No, you do not need Emacs to use Org. This is the 16th in my series on open source tools that will make you more productive in 2019.

There seems to be a mad rush at the beginning of every year to find ways to be more productive. New Year's resolutions, the itch to start the year off right, and a "look ahead" attitude all contribute to this. Software recommendations usually lean toward closed source and proprietary software. It does not have to be that way.

This is the 16th of my 19 new tools for improving productivity in 2019.

|

||||

|

||||

### Org (非 Emacs)

|

||||

|

||||

[Org 模式][1] (或者就称为 Org) 并不是新鲜货,但依然有许多人没有用过。他们很乐意试用一下以体验 Org 是如何改善生产率的。但最大的障碍来自于 Org 是与 Emacs 相关联的,而且很多人都认为两者缺一不可。并不是这样的!一旦你理解了其基础,Org 就可以与各种其他工具和编辑器一起使用。

|

||||

|

||||

|

||||

|

||||

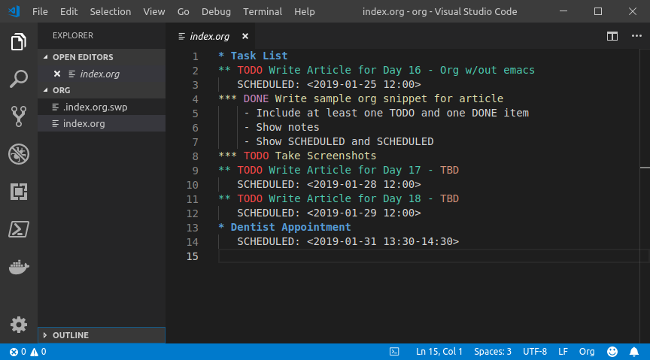

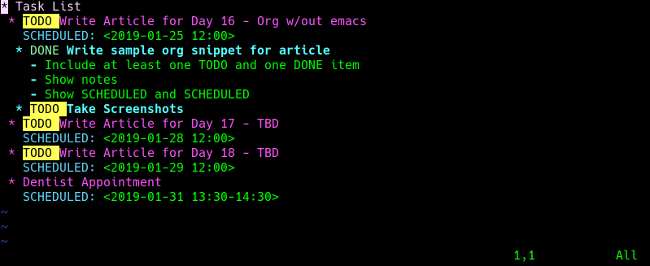

Org,本质上,是一个结构化的文本文件。它有标题、子标题,以及各种关键字,其他工具可以根据这些关键字将文件解析成日程表和代办列表。Org 文件可以被任何纯文本编辑器编辑(例如,[Vim][2]、[Atom][3] 或 [Visual Studio Code][4]),而且很多编辑器都有插件可以帮你创建和管理 Org 文件。

|

||||

|

||||

一个基础的 Org 文件看起来是这样的:

|

||||

|

||||

```

|

||||

* Task List

|

||||

** TODO Write Article for Day 16 - Org w/out emacs

|

||||

DEADLINE: <2019-01-25 12:00>

|

||||

*** DONE Write sample org snippet for article

|

||||

- Include at least one TODO and one DONE item

|

||||

- Show notes

|

||||

- Show SCHEDULED and DEADLINE

|

||||

*** TODO Take Screenshots

|

||||

** Dentist Appointment

|

||||

SCHEDULED: <2019-01-31 13:30-14:30>

|

||||

```

|

||||

|

||||

Org 是一种大纲格式,它使用 `*` 作为标识指明事项的级别。任何以 `TODO`(是的,全大些)开头的事项都是代办事项。标注为 `DONE` 的工作表示该工作已经完成。`SCHEDULED` 和 `DEADLINE` 标识与该事务相关的日期和时间。如何任何地方都没有时间,则该事务被视为全天活动。
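
Because the format is plain text, a few lines of code are enough to read it. The following Python sketch parses the sample above into a list of items. It is a simplification under stated assumptions: the `parse_org` function and its dictionary layout are hypothetical, only the `TODO` and `DONE` keywords are recognized (Org allows custom keywords), and it is not a substitute for a full Org parser.

```python
import re

# Matches headings such as "* Title", "** TODO Title", "*** DONE Title".
HEADING = re.compile(r"^(\*+)\s+(?:(TODO|DONE)\s+)?(.*)$")
# Matches planning lines such as "DEADLINE: <2019-01-25 12:00>".
PLANNING = re.compile(r"^\s*(SCHEDULED|DEADLINE):\s*<([^>]+)>")


def parse_org(text):
    """Return a list of dicts, one per heading, in document order."""
    items = []
    for line in text.splitlines():
        heading = HEADING.match(line)
        if heading:
            stars, state, title = heading.groups()
            items.append({"level": len(stars), "state": state, "title": title.strip()})
            continue
        planning = PLANNING.match(line)
        if planning and items:
            # Attach the timestamp to the most recent heading.
            kind, stamp = planning.groups()
            items[-1][kind.lower()] = stamp
    return items


SAMPLE = """\
* Task List
** TODO Write Article for Day 16 - Org w/out emacs
DEADLINE: <2019-01-25 12:00>
*** DONE Write sample org snippet for article
- Include at least one TODO and one DONE item
*** TODO Take Screenshots
** Dentist Appointment
SCHEDULED: <2019-01-31 13:30-14:30>
"""

todos = [item["title"] for item in parse_org(SAMPLE) if item["state"] == "TODO"]
print(todos)
```

Filtering on `state` gives a to-do list, and sorting on the `deadline` or `scheduled` keys would give a rough agenda, which is essentially what the editor plugins described below do.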

|

||||

|

||||

使用正确的插件,你喜欢的文本编辑器可以成为一个充满生产率和组织能力的强大工具。例如,[vim-orgmode][5] 插件包括创建 Org 文件、语法高亮的功能,以及各种用来生成跨文件的日程和综合代办事项列表的关键命令。

|

||||

|

||||

|

||||

|

||||

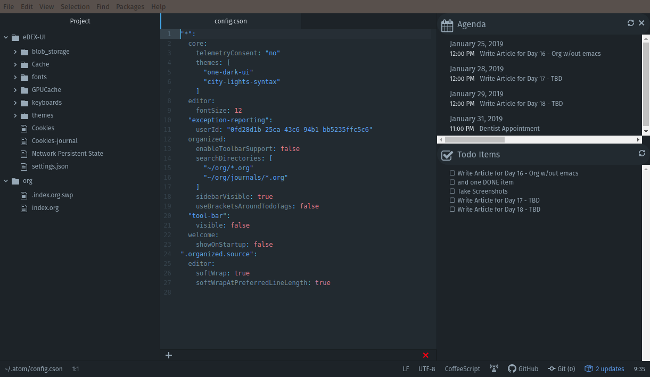

Atom 的 [Organized][6] 插件可以在屏幕右边添加一个侧边栏,用来显示 Org 文件中的日程和代办事项。默认情况下它从配置项中设置的路径中读取多个 Org 文件。Todo 侧边栏允许你通过点击未完事项来将其标记为已完成,它会自动更新源 Org 文件。

|

||||

|

||||

|

||||

|

||||

还有一大堆 Org 工具可以帮助你保持生产率。使用 Python、Perl、PHP、NodeJS 等库,你可以开发自己的脚本和工具。当然,少不了 [Emacs][7],它的核心功能就包括支持 Org。

|

||||

|

||||

|

||||

|

||||

Org 模式是跟踪需要完成的工作和时间的最好工具之一。而且,与传闻相反,它无需 Emacs,任何一个文本编辑器都行。

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/1/productivity-tool-org-mode

Author: [Kevin Sonney][a]
Topic selection: [lujun9972][b]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/ksonney (Kevin Sonney)
[b]: https://github.com/lujun9972
[1]: https://orgmode.org/
[2]: https://www.vim.org/
[3]: https://atom.io/
[4]: https://code.visualstudio.com/
[5]: https://github.com/jceb/vim-orgmode
[6]: https://atom.io/packages/organized
[7]: https://www.gnu.org/software/emacs/

@ -0,0 +1,124 @@

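Qalculate!: The Best Calculator Application in the Entire Universe
======

I have been a GNU/Linux and [Debian][1] user for more than a decade. As I used the desktop more and more, I found that apart from a few web-based services, most of my needs could be met by the [desktop applications][2] in Debian's own repositories.

One of my needs was unit conversion. Although there are many, many online services that do this, I still wanted an application I could use on the desktop, mainly because of privacy concerns and because I did not want to hunt for a web service again and again. I searched for a long time before I found Qalculate!.

### Qalculate!: the most versatile calculator application

![Best calculator app Qalculate][3]

This is what aptitude says about [Qalculate!][4], and I cannot put it any better:

> Powerful and easy to use desktop calculator - GTK+ version
>
> Qalculate! is simple and easy to use on the surface but powerful and feature-rich underneath. Its features include customizable functions, units, high-precision calculation, plotting, and a graphical interface with a one-line, fault-tolerant expression entry (traditional buttons are also available).

The application also shipped a KDE interface, but at least in Debian Testing only the GTK+ interface is present, which you can also see in this [repository][5] on GitHub.

Needless to say, Qalculate! is available in the Debian repositories, so it can be installed easily with the [apt][6] command or through the software center in Debian-based distributions such as Ubuntu. It is also available for Windows and macOS.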

#### Features of Qalculate!

Listing the full feature set would take a while, so allow me to list only some of it and use screenshots to show a small fraction of what Qalculate! offers. The idea is to familiarize you with the basic features, after which you can explore what else Qalculate! can do on your own.

  * Algebra
  * Calculus
  * Combinatorics
  * Complex numbers
  * Data sets
  * Date and time
  * Economics
  * Exponents and logarithms
  * Geometry
  * Logic
  * Matrices and vectors
  * Miscellaneous
  * Number theory
  * Statistics
  * Trigonometry

#### Using Qalculate!

Qalculate! is not hard to use. You can even type in plain English. Still, I recommend [reading the manual][7] first to get the most out of it.

![Byte to GB conversion in Qalculate][8]

![Celsius to Fahrenheit conversion][9]

#### qalc, the command-line version of Qalculate!

You can also use `qalc`, the command-line version of Qalculate!:

```
$ qalc 62499836 byte to gibibyte
62499836 * byte = approx. 0.058207508 gibibyte

$ qalc 40 degree celsius to fahrenheit
(40 * degree) * celsius = 104 deg*oF
```
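
The arithmetic behind these two conversions is easy to check by hand. The short Python sketch below reproduces it; the function names are made up for this illustration and have nothing to do with Qalculate! or libqalculate, which handle units far more generally.

```python
def bytes_to_gibibytes(n_bytes: float) -> float:
    """Convert bytes to gibibytes; one gibibyte (GiB) is 2**30 bytes."""
    return n_bytes / 2**30


def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit: F = C * 9/5 + 32."""
    return celsius * 9 / 5 + 32


print(round(bytes_to_gibibytes(62499836), 9))
print(celsius_to_fahrenheit(40))
```

Both results agree with the `qalc` output above: roughly 0.058207508 gibibyte and 104 degrees Fahrenheit.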

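The command-line interface makes Qalculate! usable by people who prefer the command line (CLI) to a GUI or who work on headless nodes (without a GUI), most of whom work in server environments.

If you want to use this software in scripts, I think libqalculate is the best solution. Looking at how `qalc` and qalculate-gtk depend on it should be enough to show how to use it.

One more thing: you can also look into how to plot a series of data; other uses are left for you to discover. Don't forget to check `/usr/share/doc/qalculate/index.html` for the full range of Qalculate! features.

Note: Debian prefers [gnuplot][10] for its attractive graphical output.

#### Bonus tip: you can thank the developer from the command line in Debian

If you use Debian and like a package, you can thank the Debian developer or maintainer of that package with the following command:

```
reportbug --kudos $PACKAGENAME
```

Since I like Qalculate!, I want to thank the Debian developer and maintainer Vincent Legout for his excellent work:

```
reportbug --kudos qalculate
```

I also suggest reading my detailed guide on using the reportbug tool for [bug reporting in Debian][11].

#### A polymer chemist's opinion of Qalculate!

Through our author [Philip Prado][12], we contacted Timothy Meyers, a polymer chemist currently working in a polymer lab.

His professional opinion of Qalculate! is:

> It looks like almost any scientist could use this software, because if you know the commands and how to make them work, it can handle almost any data calculation.

> I think the software is missing some physical constants, but I cannot recall which ones. I don't think it has much on [fluid dynamics][13], and it lacks [light absorption][14] coefficients for some compounds, but these matter mostly to me as a chemist, and I don't know whether other people particularly need them. [Free energy][15] might be another.

Finally, my introduction to Qalculate! is very basic; what it can actually do depends on your needs and your imagination. I hope you like Qalculate!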

|

||||

|

||||

--------------------------------------------------------------------------------

via: https://itsfoss.com/qalculate/

Author: [Shirish][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [name1e5s](https://github.com/name1e5s)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:https://itsfoss.com/author/shirish/
[1]:https://www.debian.org/
[2]:https://itsfoss.com/essential-linux-applications/
[3]:https://i2.wp.com/itsfoss.com/wp-content/uploads/2018/05/qalculate-app-featured-1.jpeg?w=800&ssl=1
[4]:https://qalculate.github.io/
[5]:https://github.com/Qalculate
[6]:https://itsfoss.com/apt-command-guide/
[7]:https://qalculate.github.io/manual/index.html
[8]:https://i0.wp.com/itsfoss.com/wp-content/uploads/2018/04/qalculate-byte-conversion.png?zoom=2&ssl=1
[9]:https://i0.wp.com/itsfoss.com/wp-content/uploads/2018/04/qalculate-gtk-weather-conversion.png?zoom=2&ssl=1
[10]:http://www.gnuplot.info/
[11]:https://itsfoss.com/bug-report-debian/
[12]:https://itsfoss.com/author/phillip/
[13]:https://en.wikipedia.org/wiki/Fluid_dynamics
[14]:https://en.wikipedia.org/wiki/Absorption_(electromagnetic_radiation)
[15]:https://en.wikipedia.org/wiki/Gibbs_free_energy

144

published/201902/20181123 Three SSH GUI Tools for Linux.md

Normal file


@ -0,0 +1,144 @@

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: subject: (Three SSH GUI Tools for Linux)
[#]: via: (https://www.linux.com/blog/learn/intro-to-linux/2018/11/three-ssh-guis-linux)
[#]: author: (Jack Wallen https://www.linux.com/users/jlwallen)
[#]: url: (https://linux.cn/article-10559-1.html)

Three SSH GUI tools for Linux
======

> Learn about these three SSH GUI tools for Linux.

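Over the course of your career as a Linux administrator, you will use Secure Shell (SSH) to connect remotely to Linux servers or desktops. Chances are you have, at some point, been connected to several Linux servers over SSH at the same time. In fact, SSH is probably one of the most used tools in the Linux toolbox, so you will want to make the experience as efficient as possible. For many administrators, nothing is more efficient than the command line. Some users, however, prefer a GUI tool, especially when connecting from a desktop machine to work on a remote server.

If you happen to like a good GUI tool, you will want to check out some of the excellent SSH graphical tools available for Linux. Let's look at these three tools and see whether one (or more) of them fits your needs.

I will demonstrate these tools on [Elementary OS][1], but they are all available for most major distributions.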

### PuTTY

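Anyone with some experience knows [PuTTY][2]. In fact, PuTTY is the de facto standard tool for connecting to Linux servers over SSH from Windows. But PuTTY is not only for Windows: it can also be installed on Linux from the standard repositories. PuTTY's feature list includes:

* Saved sessions.
* Connecting by IP address or hostname.
* Using an alternative SSH port.
* Defining the connection type.
* Logging.
* Options for keyboard, bell, appearance, connection, and more.
* Local and remote tunnel configuration.
* Proxy support.
* X11 tunneling support.

The PuTTY GUI is mostly a way to save SSH sessions, which makes it easier to manage the many Linux servers and desktops you constantly need to log in to and out of remotely. Once connected, PuTTY opens a terminal window on the Linux server in which you can work. At this point you may ask: why not simply work from a terminal window? For some, the convenience of saved sessions does make PuTTY worth using.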

Installing PuTTY on Linux is simple. For example, on a Debian-based distribution you can run:

```
sudo apt-get install -y putty
```

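Once installed, you can run the PuTTY GUI from your desktop menu or with the command `putty`. In the PuTTY "Configuration" window (Figure 1), type the hostname or IP address in the "Host Name (or IP address)" field, configure the "Port" (if it is not the default 22), select SSH as the "Connection type", and click "Open".

![PuTTY Connection][4]

*Figure 1: The PuTTY connection configuration window*

Once the connection is made, you are prompted for your user credentials on the remote server (Figure 2).

![log in][7]

*Figure 2: Logging in to a remote server with PuTTY*

To save a session (so that you do not have to type the remote server information every time), fill in the hostname (or IP address), the port, and the connection type. Then, before clicking "Open", type a name in the top text area of the "Saved Sessions" section and click "Save". This saves the session configuration. To connect to a saved session, select it in the "Saved Sessions" list, click "Load", and then click "Open". You are then prompted for your credentials on the remote server.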

### EasySSH

Although [EasySSH][8] does not offer as many configuration options as PuTTY, it is (as the name implies) very easy to use. One of its best features is a tabbed interface, so you can keep several SSH connections open and switch quickly between them. Other EasySSH features include:

* Groups (so you can group tabs for a more efficient experience).
* Saved usernames and passwords.
* Appearance options.
* Local and remote tunnel support.

Installing EasySSH on a Linux desktop is simple because the app is available through Flatpak (which means Flatpak must be installed on your system). Once Flatpak is installed, add EasySSH with these commands:

```
sudo flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo

sudo flatpak install flathub com.github.muriloventuroso.easyssh
```

Run EasySSH with this command:

```
flatpak run com.github.muriloventuroso.easyssh
```

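The EasySSH app opens, and you can click the "+" button in the upper left corner. In the resulting window (Figure 3), configure your SSH connection as required.

![Adding a connection][10]

*Figure 3: Adding a connection in EasySSH is simple*

Once you have added the connection, it appears in the left navigation of the main window (Figure 4).

![EasySSH][12]

*Figure 4: The EasySSH main window*

To connect to a remote server in EasySSH, select it in the left navigation and click the "Connect" button (Figure 5).

![Connecting][14]

*Figure 5: Connecting to a remote server with EasySSH*

One caveat with EasySSH is that you must save the username and password in the connection configuration (otherwise the connection fails). This means that anyone with access to the desktop running EasySSH can reach your servers remotely without knowing the passwords. Because of this, always remember to lock your desktop screen when you are away (and make sure to use a strong password). Otherwise your servers are exposed to unwanted logins.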

### Terminator

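(LCTT translator's note: this choice does not fit the topic of the article, so this section has been omitted.)

### termius

(LCTT translator's note: this section was added on readers' recommendation.)

termius is a commercial SSH, Telnet, and Mosh client; it is not open source. It supports a range of operating systems, including [Linux](https://www.termius.com/linux), Windows, Mac, iOS, and Android. It is free on a single device; the platinum account that supports multiple devices is billed monthly.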

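### Few (but worthwhile) options

There are not many SSH GUI tools available for Linux. Why? Because most administrators prefer to simply open a terminal window and use the standard command-line tools to reach their servers remotely. If you do need a GUI tool, though, there are two solid options that make it easier to log in to multiple machines. Although those looking for an SSH GUI have only a few choices, the ones that exist are certainly worth your time. Try one of them and see for yourself.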

--------------------------------------------------------------------------------

via: https://www.linux.com/blog/learn/intro-to-linux/2018/11/three-ssh-guis-linux

Author: [Jack Wallen][a]

Topic selection: [lujun9972][b]

Translator: [wxy](https://github.com/wxy)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://www.linux.com/users/jlwallen
[b]: https://github.com/lujun9972
[1]: https://elementary.io/
[2]: https://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html
[3]: https://www.linux.com/files/images/sshguis1jpg
[4]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_1.jpg?itok=DiNTz_wO (PuTTY Connection)
[5]: https://www.linux.com/licenses/category/used-permission
[6]: https://www.linux.com/files/images/sshguis2jpg
[7]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_2.jpg?itok=4ORsJlz3 (log in)
[8]: https://github.com/muriloventuroso/easyssh
[9]: https://www.linux.com/files/images/sshguis3jpg
[10]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_3.jpg?itok=bHC2zlda (Adding a connection)
[11]: https://www.linux.com/files/images/sshguis4jpg
[12]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_4.jpg?itok=hhJzhRIg (EasySSH)
[13]: https://www.linux.com/files/images/sshguis5jpg
[14]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_5.jpg?itok=piFEFYTQ (Connecting)
[15]: https://www.linux.com/files/images/sshguis6jpg
[16]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_6.jpg?itok=-kYl6iSE (Terminator)

@ -1,76 +1,68 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10560-1.html)

|

||||

[#]: subject: (PowerTOP – Monitors Power Usage and Improve Laptop Battery Life in Linux)

|

||||

[#]: via: (https://www.2daygeek.com/powertop-monitors-laptop-battery-usage-linux/)

|

||||

[#]: author: (Vinoth Kumar https://www.2daygeek.com/author/vinoth/)

|

||||

|

||||

PowerTOP – Monitors Power Usage and Improve Laptop Battery Life in Linux

|

||||

PowerTOP:在 Linux 上监视电量使用和改善笔记本电池寿命

|

||||

======

|

||||

|

||||

We all know that most of us, roughly 80-90%, have migrated from desktop PCs to laptops.

|

||||

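We all know that almost everyone has moved from desktop PCs to laptops by now. One catch with laptops is that we want long battery life and want to use every drop of power, so we need to know where the power goes and whether any of it is wasted.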

|

||||

|

||||

But one thing we want from a laptop, it’s long battery life and we want to use every drop of power.

|

||||

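You can use the PowerTOP utility to see what is drawing power when the system is not plugged in. You need to run PowerTOP in a terminal with superuser privileges so that it can access the battery hardware and measure power usage.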

|

||||

|

||||

So it’s good to know where our power is going and getting waste.

|

||||

### 什么是 PowerTOP

|

||||

|

||||

You can use the powertop utility to see what’s drawing power when your system’s not plugged in.

|

||||

PowerTOP 是一个 Linux 工具,用于诊断电量消耗和电源管理的问题。

|

||||

|

||||

You need to run the powertop utility in terminal with super user privilege.

|

||||

它是由 Intel 开发的,可以在内核、用户空间和硬件中启用各种节电模式。

|

||||

|

||||

It will access the hardware and measure power usage.

|

||||

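Besides being a diagnostic tool, PowerTOP also has an interactive mode in which you can experiment with power management settings that your Linux distribution has not enabled.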

|

||||

|

||||

### What is PowerTOP

|

||||

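It can also monitor processes and show which of them are using the CPU and waking it from its idle states, which helps identify applications with particularly high power demands.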

|

||||

|

||||

PowerTOP is a Linux tool to diagnose issues with power consumption and power management.

|

||||

### 如何安装 PowerTOP

|

||||

|

||||

It was developed by Intel to enable various power-saving modes in kernel, userspace, and hardware.

|

||||

PowerTOP 软件包在大多数发行版的软件库中可用,使用发行版的 [包管理器][1] 安装即可。

|

||||

|

||||

In addition to being a diagnostic tool, PowerTOP also has an interactive mode where the user can experiment various power management settings for cases where the Linux distribution has not enabled these settings.

|

||||

|

||||

It is possible to monitor processes and show which of them are utilizing the CPU and wake it from its Idle-States, allowing to identify applications with particular high power demands.

|

||||

|

||||

### How to Install PowerTOP

|

||||

|

||||

PowerTOP package is available in most of the distributions official repository so, use the distributions **[Package Manager][1]** to install it.

|

||||

|

||||

For **`Fedora`** system, use **[DNF Command][2]** to install PowerTOP.

|

||||

对于 Fedora 系统,使用 [DNF 命令][2] 来安装 PowerTOP。

|

||||

|

||||

```

|

||||

$ sudo dnf install powertop

|

||||

```

|

||||

|

||||

For **`Debian/Ubuntu`** systems, use **[APT-GET Command][3]** or **[APT Command][4]** to install PowerTOP.

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][3] 或 [APT 命令][4] 来安装 PowerTOP。

|

||||

|

||||

```

|

||||

$ sudo apt install powertop

|

||||

```

|

||||

|

||||

For **`Arch Linux`** based systems, use **[Pacman Command][5]** to install PowerTOP.

|

||||

对于基于 Arch Linux 的系统,使用 [Pacman 命令][5] 来安装 PowerTOP。

|

||||

|

||||

```

|

||||

$ sudo pacman -S powertop

|

||||

```

|

||||

|

||||

For **`RHEL/CentOS`** systems, use **[YUM Command][6]** to install PowerTOP.

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][6] 来安装 PowerTOP。

|

||||

|

||||

```

|

||||

$ sudo yum install powertop

|

||||

```

|

||||

|

||||

For **`openSUSE Leap`** system, use **[Zypper Command][7]** to install PowerTOP.

|

||||

对于 openSUSE Leap 系统,使用 [Zypper 命令][7] 来安装 PowerTOP。

|

||||

|

||||

```

|

||||

$ sudo zypper install powertop

|

||||

```

|

||||

|

||||

### How To Access PowerTOP

|

||||

### 如何使用 PowerTOP

|

||||

|

||||

PowerTOP requires super user privilege so, run as root to use PowerTOP utility on your Linux system.

|

||||

PowerTOP 需要超级用户权限,所以在 Linux 系统中以 root 身份运行 PowerTOP 工具。

|

||||

|

||||

By default it shows the `Overview` tab, where we can see the power consumption of all devices. It also shows the system's wakeups per second.

|

||||

默认情况下其显示 “概览” 页,在这里我们可以看到所有设备的电量消耗情况,也可以看到系统的唤醒秒数。

|

||||

|

||||

```

|

||||

$ sudo powertop

|

||||

@ -132,11 +124,11 @@ Summary: 1692.9 wakeups/second, 0.0 GPU ops/seconds, 0.0 VFS ops/sec and 54.9%

|

||||

Exit | / Navigate |

|

||||

```

|

||||

|

||||

The PowerTOP output looks similar to the screenshot above; it will differ slightly depending on your hardware. It has several screens, and you can switch between them with the `Tab` and `Shift+Tab` keys.

|

||||

PowerTOP 的输出类似如上截屏,在你的机器上由于硬件不同会稍有不同。它的显示有很多页,你可以使用 `Tab` 和 `Shift+Tab` 在它们之间切换。

|

||||

|

||||

### Idle Stats Tab

|

||||

### 空闲状态页

|

||||

|

||||

It displays various information about the processor.

|

||||

它会显示处理器的各种信息。

|

||||

|

||||

```

|

||||

PowerTOP v2.9 Overview Idle stats Frequency stats Device stats Tunables

|

||||

@ -194,9 +186,9 @@ C10 (pc10) 0.0% | | C10 39.5% 4.7 ms 41.4%

|

||||

Exit | / Navigate |

|

||||

```

|

||||

|

||||

### Frequency Stats Tab

|

||||

### 频率状态页

|

||||

|

||||

It displays the frequency of CPU.

|

||||

它会显示 CPU 的主频。

|

||||

|

||||

```

|

||||

PowerTOP v2.9 Overview Idle stats Frequency stats Device stats Tunables

|

||||

@ -220,9 +212,9 @@ Idle | Idle | Idle

|

||||

|

||||

```

|

||||

|

||||

### Device Stats Tab

|

||||

### 设备状态页

|

||||

|

||||

It displays power usage information against only devices.

|

||||

它仅针对设备显示其电量使用信息。

|

||||

|

||||

```

|

||||

PowerTOP v2.9 Overview Idle stats Frequency stats Device stats Tunables

|

||||

@ -277,12 +269,12 @@ The power consumed was 280 J

|

||||

0.0% runtime-coretemp.0

|

||||

0.0% runtime-alarmtimer

|

||||

|

||||

Exit | / Navigate |

|

||||

Exit | / Navigate |

|

||||

```

|

||||

|

||||

### Tunables Stats Tab

|

||||

### 可调整状态页

|

||||

|

||||

This tab is important area that provides suggestions to optimize your laptop battery.

|

||||

这个页面是个重要区域,可以为你的笔记本电池优化提供建议。

|

||||

|

||||

```

|

||||

PowerTOP v2.9 Overview Idle stats Frequency stats Device stats Tunables

|

||||

@ -340,9 +332,9 @@ PowerTOP v2.9 Overview Idle stats Frequency stats Device stats Tunab

|

||||

Exit | Toggle tunable | Window refresh

|

||||

```

|

||||

|

||||

### How To Generate PowerTop HTML Report

|

||||

### 如何生成 PowerTop 的 HTML 报告

|

||||

|

||||

Run the following command to generate the PowerTop HTML report.

|

||||

运行如下命令生成 PowerTop 的 HTML 报告。

|

||||

|

||||

```

|

||||

$ sudo powertop --html=powertop.html

|

||||

@ -363,12 +355,13 @@ Taking 1 measurement(s) for a duration of 20 second(s) each.

|

||||

PowerTOP outputing using base filename powertop.html

|

||||

```

|

||||

|

||||

Navigate to `file:///home/daygeek/powertop.html` file to access the generated PowerTOP HTML report.

|

||||

打开 `file:///home/daygeek/powertop.html` 文件以访问生成的 PowerTOP 的 HTML 报告。

|

||||

|

||||

![][9]

|

||||

|

||||

### Auto-Tune mode

|

||||

### 自动调整模式

|

||||

|

||||

This feature sets all tunable options from `BAD` to `GOOD` which increase the laptop battery life in Linux.

|

||||

这个功能可以将所有可调整选项从 BAD 设置为 GOOD,这可以提升 Linux 中的笔记本电池寿命。

|

||||

|

||||

```

|

||||

$ sudo powertop --auto-tune

|

||||

@ -393,8 +386,8 @@ via: https://www.2daygeek.com/powertop-monitors-laptop-battery-usage-linux/

|

||||

|

||||

作者:[Vinoth Kumar][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,95 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lujun9972)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10564-1.html)

|

||||

[#]: subject: (How to use Magit to manage Git projects)

|

||||

[#]: via: (https://opensource.com/article/19/1/how-use-magit)

|

||||

[#]: author: (Sachin Patil https://opensource.com/users/psachin)

|

||||

|

||||

How to use Magit to manage Git projects in Emacs
======

> The Magit extension for Emacs makes version control with Git easy.

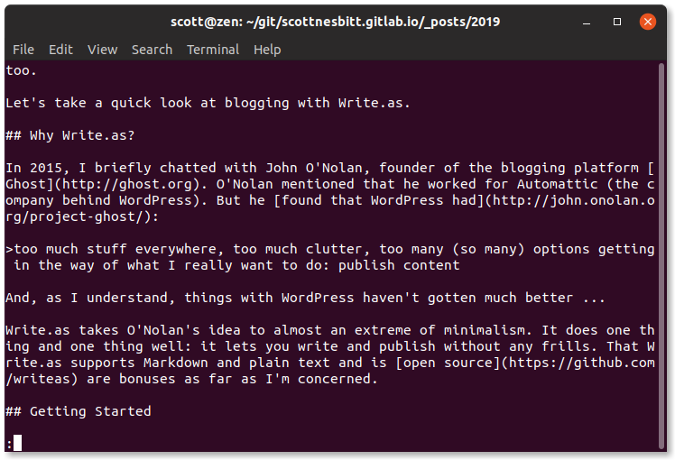

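[Git][1] is an excellent [version control][2] tool for managing projects, but it is hard for newcomers to learn. Git's command-line tools are difficult to use: you need to be familiar not only with their flags and options, but also with the circumstances in which to use them. This can be daunting, so many people stick to a very limited set of uses.

Fortunately, most of today's integrated development environments (IDEs) include Git extensions that make Git much easier to use. One such Git extension for Emacs is called [Magit][3].

The Magit project has been around for about 10 years and defines itself as "a Git porcelain inside Emacs". In other words, it is an interface in which every action can be triggered with a single key press. This article walks you through the Magit interface and explains how to use it to manage a Git project.

If you have not already done so, [install Emacs][4] and then [install Magit][5] before continuing with this tutorial.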

### Magit 的界面

|

||||

|

||||

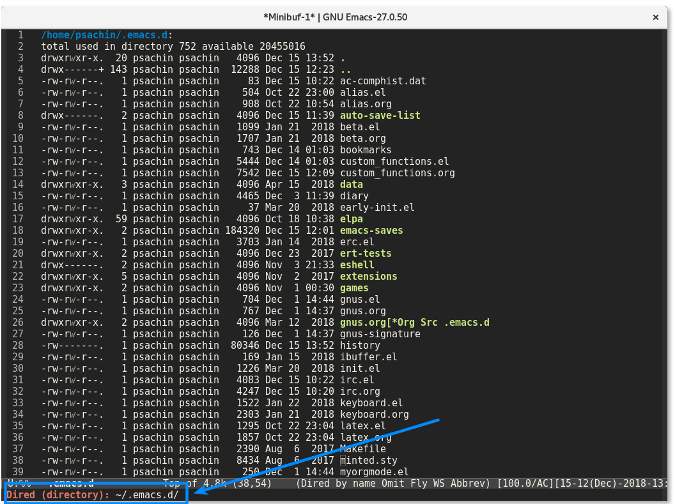

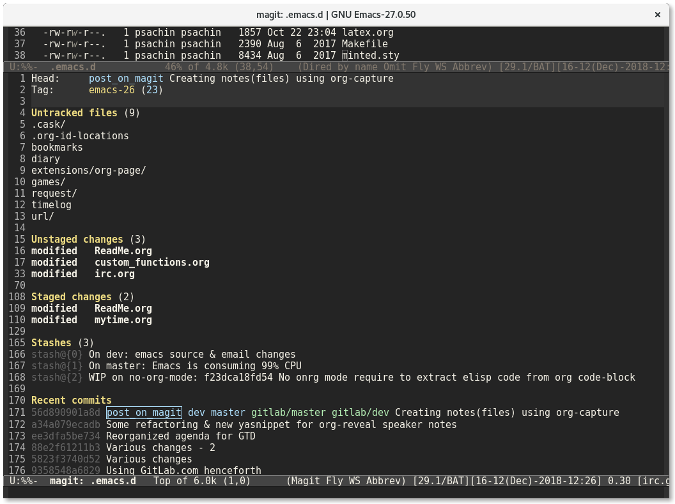

首先用 Emacs 的 [Dired 模式][6] 访问一个项目的目录。比如我所有的 Emacs 配置存储在 `~/.emacs.d/` 目录中,就是用 Git 来进行管理的。

|

||||

|

||||

|

||||

|

||||

若你在命令行下工作,则你需要输入 `git status` 来查看项目的当前状态。Magit 也有类似的功能:`magit-status`。你可以通过 `M-x magit-status` (快捷方式是 `Alt+x magit-status` )来调用该功能。结果看起来像下面这样:

|

||||

|

||||

|

||||

|

||||

Magit 显示的信息比 `git status` 命令的要多得多。它分别列出了未追踪文件列表、未暂存文件列表以及已暂存文件列表。它还列出了<ruby>储藏<rt>stash</rt></ruby>列表以及最近几次的提交 —— 所有这些信息都在一个窗口中展示。

|

||||

|

||||

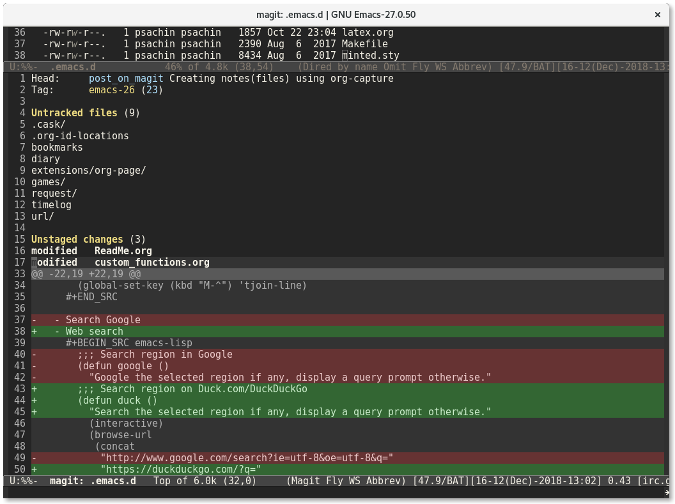

如果你想查看修改了哪些内容,按下 `Tab` 键。比如,我移动光标到未暂存的文件 `custom_functions.org` 上,然后按下 `Tab` 键,Magit 会显示修改了哪些内容:

|

||||

|

||||

|

||||

|

||||

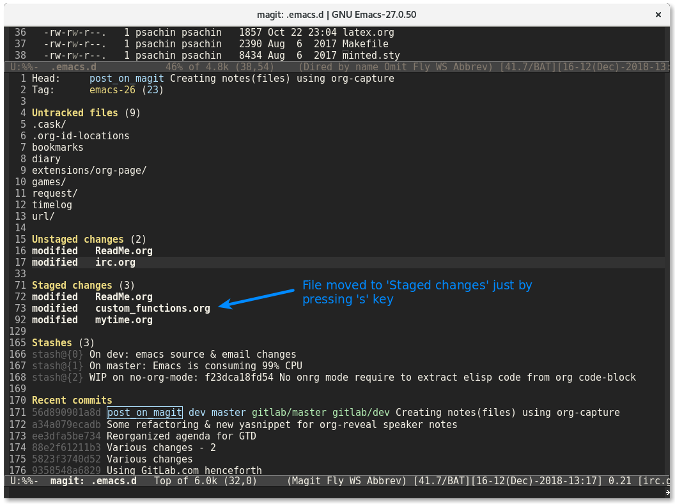

这跟运行命令 `git diff custom_functions.org` 类似。储藏文件更简单。只需要移动光标到文件上然后按下 `s` 键。该文件就会迅速移动到已储藏文件列表中:

|

||||

|

||||

|

||||

|

||||

要<ruby>反储藏<rt>unstage</rt></ruby>某个文件,使用 `u` 键。按下 `s` 和 `u` 键要比在命令行输入 `git add -u <file>` 和 `git reset HEAD <file>` 快的多也更有趣的多。

|

||||

|

||||

### 提交更改

|

||||

|

||||

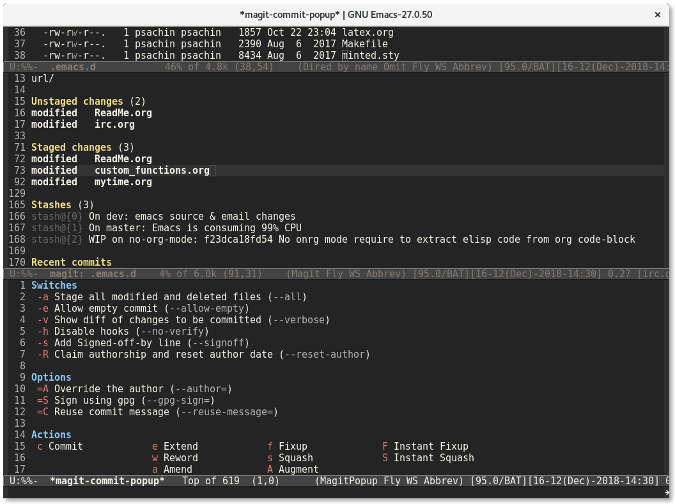

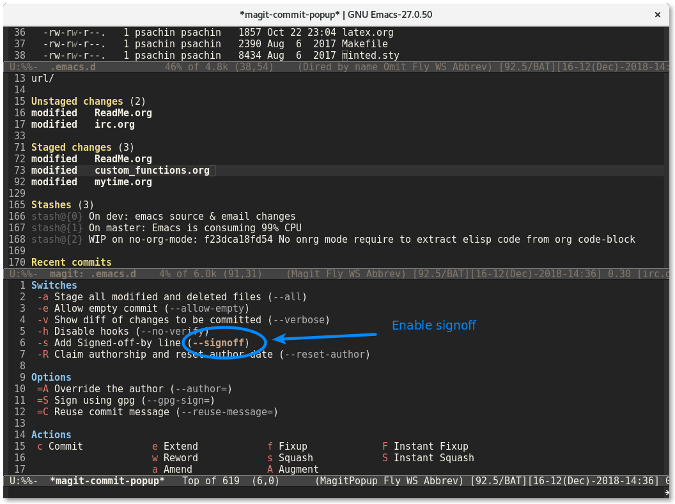

在同一个 Magit 窗口中,按下 `c` 键会显示一个提交窗口,其中提供了许多标志,比如 `--all` 用来暂存所有文件或者 `--signoff` 来往提交信息中添加签名行。

|

||||

|

||||

|

||||

|

||||

将光标移动到想要启用签名标志的行,然后按下回车。`--signoff` 文本会变成高亮,这说明该标志已经被启用。

|

||||

|

||||

|

||||

|

||||

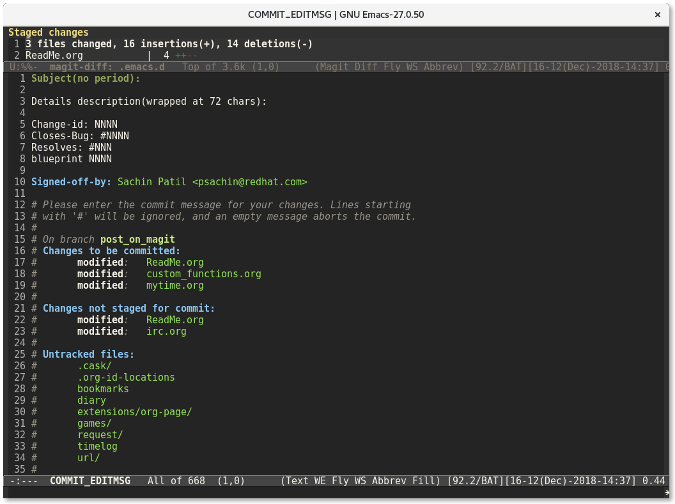

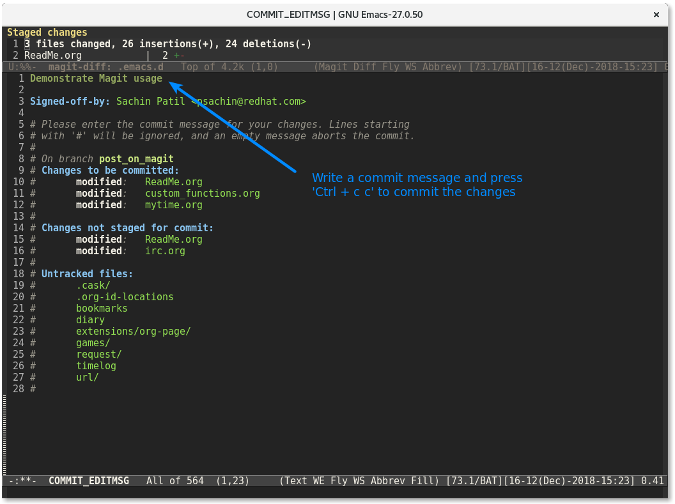

再次按下 `c` 键会显示一个窗口供你输入提交信息。

|

||||

|

||||

|

||||

|

||||

最后,使用 `C-c C-c `(按键 `Ctrl+cc` 的缩写形式) 来提交更改。

|

||||

|

||||

|

||||

|

||||

### 推送更改

|

||||

|

||||

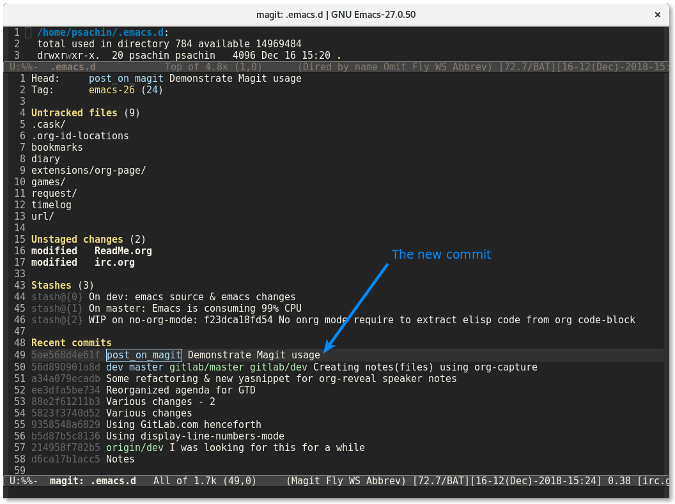

更改提交后,提交行将会显示在 `Recent commits` 区域中显示。

|

||||

|

||||

|

||||

|

||||

将光标放到该提交处然后按下 `p` 来推送该变更。

|

||||

|

||||

若你想感受一下使用 Magit 的感觉,我已经在 YouTube 上传了一段 [演示][7]。本文只涉及到 Magit 的一点皮毛。它有许多超酷的功能可以帮你使用 Git 分支、变基等功能。你可以在 Magit 的主页上找到 [文档、支持,以及更多][8] 的链接。

|

||||

|

||||

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/1/how-use-magit

Author: [Sachin Patil][a]

Topic selection: [lujun9972][b]

Translator: [lujun9972](https://github.com/lujun9972)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]: https://opensource.com/users/psachin
[b]: https://github.com/lujun9972
[1]: https://git-scm.com
[2]: https://git-scm.com/book/en/v2/Getting-Started-About-Version-Control
[3]: https://magit.vc
[4]: https://www.gnu.org/software/emacs/download.html
[5]: https://magit.vc/manual/magit/Installing-from-Melpa.html#Installing-from-Melpa
[6]: https://www.gnu.org/software/emacs/manual/html_node/emacs/Dired-Enter.html#Dired-Enter
[7]: https://youtu.be/Vvw75Pqp7Mc
[8]: https://magit.vc/

371

published/201902/20190110 5 useful Vim plugins for developers.md

Normal file


@ -0,0 +1,371 @@

[#]: collector: (lujun9972)
[#]: translator: (pityonline)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10563-1.html)
[#]: subject: (5 useful Vim plugins for developers)
[#]: via: (https://opensource.com/article/19/1/vim-plugins-developers)
[#]: author: (Ricardo Gerardi https://opensource.com/users/rgerardi)

5 useful Vim plugins for developers
======

> Extend Vim's functionality with these five plugins to improve your coding efficiency.

I have used Vim for more than 20 years, and two years ago I decided to make it my primary text editor. I use Vim to write code, configuration files, blog posts, and anything else that can be expressed in plain text. Vim has many great features, and once you get used to it, you become very productive.

For everyday editing I tend to rely on Vim's solid native features, but the open source community has developed a large number of plugins that extend Vim, improve your workflow, and make you more productive.

Here are five very useful plugins for writing code in any programming language.

### 1. Auto Pairs

The [Auto Pairs][2] plugin helps you insert and delete pairs of characters, such as braces, parentheses, or quotation marks. This is very useful when writing code, because many programming languages use paired characters in their syntax, such as parentheses for function calls or quotation marks for string definitions.

At its most basic, Auto Pairs automatically inserts the matching closing character when you type an opening one. For example, if you type `[`, it adds the closing `]` for you. Conversely, if you delete the opening character with Backspace, Auto Pairs deletes the closing one too.

If automatic indentation is enabled, Auto Pairs places the closing character at the right indentation level when you press Enter, which saves you from finding the right position and typing the matching character yourself.

Consider this code, for example:

```
package main

import "fmt"

func main() {
    x := true
    items := []string{"tv", "pc", "tablet"}

    if x {
        for _, i := range items
    }
}
```

Typing an opening brace after `items` and pressing Enter produces this result:

```
package main

import "fmt"

func main() {
    x := true
    items := []string{"tv", "pc", "tablet"}

    if x {
        for _, i := range items {
            | (cursor here)
        }
    }
}
```

Auto Pairs offers many other options (you can read about them on [GitHub][3]), but even these basic features save a lot of time.
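The pairing behavior described in this section can be modeled in a few lines. This is a minimal sketch of the idea in Python, not the plugin's actual Vimscript; the `PAIRS` table and the `type_char` and `backspace` helpers are hypothetical names, and the buffer is modeled as a string plus a cursor index:

```python
from typing import Tuple

# Opening characters and the closers a pairing plugin would insert for them.
PAIRS = {"(": ")", "[": "]", "{": "}", '"': '"', "'": "'"}


def type_char(text: str, cursor: int, ch: str) -> Tuple[str, int]:
    """Insert ch at cursor; if it opens a pair, also insert the closer."""
    closer = PAIRS.get(ch, "")
    new_text = text[:cursor] + ch + closer + text[cursor:]
    return new_text, cursor + 1  # the cursor ends up between the pair


def backspace(text: str, cursor: int) -> Tuple[str, int]:
    """Delete the character before cursor, plus its closer if adjacent."""
    if cursor == 0:
        return text, cursor
    removed = text[cursor - 1]
    end = cursor
    if PAIRS.get(removed) and text[cursor:cursor + 1] == PAIRS[removed]:
        end = cursor + 1  # the matching closer sits right after the cursor
    return text[:cursor - 1] + text[end:], cursor - 1


text, cursor = type_char("items := ", 9, "[")
print(repr(text), cursor)
text, cursor = backspace(text, cursor)
print(repr(text), cursor)
```

The sketch ignores indentation and the plugin's many configuration options; it only shows why typing one character can change two positions in the buffer.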

### 2. NERD Commenter

The [NERD Commenter][4] plugin adds code-commenting functions to Vim, similar to those found in an integrated development environment (IDE). With this plugin, you can comment out one or several lines of code with a single key combination.

NERD Commenter works with the standard Vim [filetype][5] plugin, so it understands several programming languages and uses the appropriate way to comment code.

The easiest way to get started is to press `Leader+Space` to toggle the comment on the current line. The default Vim Leader key is `\`.

In <ruby>Visual mode<rt>Visual mode</rt></ruby>, you can select several lines and comment them together. NERD Commenter also understands counts, so you can provide a count n to comment n lines.

Another useful feature is the "Sexy Comment", triggered with `Leader+cs`, which produces a nicer-looking block comment. For example, take this code:

```
package main

import "fmt"

func main() {
    x := true
    items := []string{"tv", "pc", "tablet"}

    if x {
        for _, i := range items {
            fmt.Println(i)
        }
    }
}
```

Selecting all the lines in the `main` function and pressing `Leader+cs` produces the following comment block:

```
package main

import "fmt"

func main() {
/*
 *    x := true
 *    items := []string{"tv", "pc", "tablet"}
 *
 *    if x {
 *        for _, i := range items {
 *            fmt.Println(i)
 *        }
 *    }
 */
}
```

Because all these lines are commented in one block, you can remove all the comments at once with `Leader+Space`.

NERD Commenter is a must-have plugin for any developer who uses Vim to write code.
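To make the block-comment transformation concrete, here is a minimal sketch of it in Python. It is an illustration only, not NERD Commenter's implementation: the function names are hypothetical, and it hard-codes the C-style delimiters used for Go, whereas the plugin chooses delimiters by filetype:

```python
from typing import List


def sexy_comment(lines: List[str]) -> List[str]:
    """Wrap lines in a C-style block comment, one ' * ' prefix per line."""
    body = [(" * " + line).rstrip() for line in lines]
    return ["/*"] + body + [" */"]


def sexy_uncomment(lines: List[str]) -> List[str]:
    """Undo sexy_comment: drop the delimiters and strip the ' * ' prefix."""
    inner = lines[1:-1]
    # A blank source line was stored as ' *', so it has no prefix to strip.
    return [line[3:] if line.startswith(" * ") else "" for line in inner]


source = ["x := true", "", "if x {", "    fmt.Println(x)", "}"]
commented = sexy_comment(source)
print("\n".join(commented))
print(sexy_uncomment(commented) == source)
```

The round trip in the last line relies on the source lines having no trailing whitespace, which is an assumption of this sketch.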

### 3. Vim Surround

The [Vim Surround][6] plugin helps you "surround" existing text with pairs of characters (such as parentheses or quotation marks) or tags (such as HTML or XML tags). It is similar to Auto Pairs, but it works on text that already exists, which makes it more useful when editing.

For example, suppose you have the following sentence:

```
"Vim plugins are awesome !"
```

With the cursor anywhere inside the quoted sentence, you can remove the surrounding quotation marks with `ds"`:

```
Vim plugins are awesome !
```

You can also change the double quotation marks to single ones with `cs"'`:

```
'Vim plugins are awesome !'
```

Or replace them with brackets using `cs'[`:

```
[ Vim plugins are awesome ! ]
```

It is especially good at editing <ruby>tags<rt>tag</rt></ruby> in HTML or XML text. Suppose you have this line of HTML:

```
<p>Vim plugins are awesome !</p>
```

With the cursor anywhere on the word "awesome", you can press `ysiw<em>` to wrap it in an emphasis tag (`<em>`):

```
<p>Vim plugins are <em>awesome</em> !</p>
```

Notice that it is smart enough to add the closing `</em>` tag.

Vim Surround can also indent text and wrap it in a tag with `ySS`. For example, given this text:

```
<p>Vim plugins are <em>awesome</em> !</p>
```

you can add a `div` tag with `ySS<div class="normal">`. Notice that the resulting paragraph is indented automatically.

```
<div class="normal">
    <p>Vim plugins are <em>awesome</em> !</p>
</div>
```

Vim Surround has many other options; you can try them out by following the instructions on [GitHub][7].
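The three operations shown in this section (`ds`, `cs`, and `ysiw`) are easy to model on a plain string. The following is a minimal sketch under stated assumptions, not the plugin's implementation: all function names are hypothetical, the cursor is a 0-based index that lies strictly between the two delimiters, and nesting is ignored:

```python
from typing import Tuple

# Closing counterparts for bracket-like openers; quotes close themselves.
CLOSERS = {"(": ")", "[": "]", "{": "}", "<": ">"}


def _find_pair(text: str, cursor: int, opener: str) -> Tuple[int, int]:
    """Locate the opener before cursor and its closer at or after cursor."""
    closer = CLOSERS.get(opener, opener)
    start = text.rindex(opener, 0, cursor)
    end = text.index(closer, cursor)
    return start, end


def delete_surround(text: str, cursor: int, opener: str) -> str:
    """Model of `ds<char>`: remove the pair of delimiters around cursor."""
    start, end = _find_pair(text, cursor, opener)
    return text[:start] + text[start + 1:end] + text[end + 1:]


def change_surround(text: str, cursor: int, old: str, new: str) -> str:
    """Model of `cs<old><new>`: swap one pair of delimiters for another."""
    start, end = _find_pair(text, cursor, old)
    inner = text[start + 1:end]
    if new in CLOSERS:  # an opening bracket pads the text, as shown above
        inner = " " + inner + " "
    return text[:start] + new + inner + CLOSERS.get(new, new) + text[end + 1:]


def surround_word_with_tag(text: str, cursor: int, tag: str) -> str:
    """Model of `ysiw<tag>`: wrap the word under cursor in an HTML tag."""
    start = cursor
    while start > 0 and text[start - 1].isalnum():
        start -= 1
    end = cursor
    while end < len(text) and text[end].isalnum():
        end += 1
    return f"{text[:start]}<{tag}>{text[start:end]}</{tag}>{text[end:]}"


sentence = '"Vim plugins are awesome !"'
print(delete_surround(sentence, 5, '"'))
print(change_surround(sentence, 5, '"', "'"))
```

Treating a "word" as a run of alphanumeric characters is a simplification of Vim's `iw` text object, chosen here to keep the sketch short.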

### 4. Vim Gitgutter

The [Vim Gitgutter][8] plugin is very useful for anyone who uses Git for version control. It shows the output of `git diff` as symbols next to Vim's line-number column. For example, consider the following as the committed version of your code:

```
  1 package main
  2
  3 import "fmt"
  4
  5 func main() {
  6     x := true
  7     items := []string{"tv", "pc", "tablet"}
  8
  9     if x {
 10         for _, i := range items {
 11             fmt.Println(i)
 12         }
 13     }
 14 }
```

After you make some changes, Vim Gitgutter shows the following symbols:

```
    1 package main
    2
    3 import "fmt"
    4
_   5 func main() {
    6     items := []string{"tv", "pc", "tablet"}
    7
~   8     if len(items) > 0 {
    9         for _, i := range items {
   10             fmt.Println(i)
+  11             fmt.Println("------")
   12         }
   13     }
   14 }
```

The `_` symbol shows that a line was deleted between lines 5 and 6. The `~` shows that line 8 was modified, and the `+` shows that line 11 was added.

In addition, Vim Gitgutter lets you jump between changed hunks with `[c` and `]c`, and even stage an individual hunk with `Leader+hs`.

This plugin gives you immediate visual feedback on changes, and it is a great addition to your toolbox if you use Git.
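The mapping from a diff to gutter symbols can be sketched with Python's standard `difflib` module. This is an assumption-laden illustration, not how the plugin works internally (Vim Gitgutter reads the output of `git diff`); the `gutter_signs` name and the choice to mark a deletion on the line above the gap are this sketch's own:

```python
import difflib
from typing import Dict, List


def gutter_signs(old: List[str], new: List[str]) -> Dict[int, str]:
    """Map 1-based line numbers of `new` to a sign: '+', '~' or '_'."""
    signs: Dict[int, str] = {}
    matcher = difflib.SequenceMatcher(None, old, new)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "insert":
            for j in range(j1, j2):
                signs[j + 1] = "+"
        elif tag == "replace":
            # Lines that replace old ones count as modified, extras as added.
            changed = min(i2 - i1, j2 - j1)
            for offset, j in enumerate(range(j1, j2)):
                signs[j + 1] = "~" if offset < changed else "+"
        elif tag == "delete":
            # Nothing remains to mark, so flag the line above the gap.
            signs.setdefault(max(j1, 1), "_")
    return signs


old = ["one", "two", "three", "four"]
new = ["one", "three", "FOUR", "five"]
for number, line in enumerate(new, start=1):
    print(gutter_signs(old, new).get(number, " "), number, line)
```

Because `difflib` and Git use different diff algorithms, the two can disagree on ambiguous changes; the sketch is meant to show the idea of the sign column, not to reproduce Git's output.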

### 5. Vim Fugitive

[Vim Fugitive][9] is another great plugin for integrating a Git workflow into Vim. It is a Git wrapper that lets you run Git commands directly from Vim and integrates the results into Vim's interface. The plugin has many features; see its [GitHub][10] project page for more information.

Here is a basic Git workflow example using Vim Fugitive. Given the changes we made to the Go code in section 4, you can use `:Gblame` to invoke `git blame` and see the last commit information for each line:

```
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│    1 package main
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│    2
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│    3 import "fmt"
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│    4
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│_   5 func main() {
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│    6     items := []string{"tv", "pc", "tablet"}
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│    7
00000000 (Not Committed Yet 2018-12-05 18:55:00 -0500)│~   8     if len(items) > 0 {
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│    9         for _, i := range items {
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│   10             fmt.Println(i)
00000000 (Not Committed Yet 2018-12-05 18:55:00 -0500)│+  11             fmt.Println("------")
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│   12         }
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│   13     }
e9949066 (Ricardo Gerardi   2018-12-05 18:17:19 -0500)│   14 }
```

As you can see, lines 8 and 11 have not been committed yet. Check the current state of the repository with the `:Gstatus` command:

```

|

||||

1 # On branch master

|

||||

2 # Your branch is up to date with 'origin/master'.

|

||||

3 #

|

||||

4 # Changes not staged for commit:

|

||||

5 # (use "git add <file>..." to update what will be committed)

|

||||

6 # (use "git checkout -- <file>..." to discard changes in working directory)

|

||||

7 #

|

||||

8 # modified: vim-5plugins/examples/test1.go

|

||||

9 #

|

||||

10 no changes added to commit (use "git add" and/or "git commit -a")

|

||||

--------------------------------------------------------------------------------------------------------

|

||||

1 package main

|

||||

2

|

||||