Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-01-19 22:51:41 +08:00), commit 05b6ddac75

@ -0,0 +1,293 @@

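How to Install and Remove Software in Ubuntu [Complete Guide]
============================================================

Summary: This article explains in detail the various ways to install and remove software in Ubuntu Linux.

When you [switch from Windows to Linux][14], the experience can be quite overwhelming at first. In Ubuntu, even basic things such as installing an application can confuse someone coming from the Windows world.

But don't worry too much. Linux offers many different ways to accomplish the same task, so it is normal to feel confused at the beginning. You are not alone; we have all been through it.

In this beginner's guide, I will show you the most common ways to install software in Ubuntu, and how to remove software you have installed.

I will also give my recommendation on which method you should use to install software on Ubuntu. Take your time with it: this is a long and detailed article, and you will certainly learn something from it.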

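### Installing and removing software in Ubuntu

In this tutorial I am using Ubuntu 16.04 with the Unity desktop environment. Apart from a few screenshots, the tutorial applies to other versions of Ubuntu as well.

### 1.1 Install software using Ubuntu Software Center (recommended)

The easiest and most convenient way to find and install software in Ubuntu is the Ubuntu Software Center. In the Ubuntu Unity desktop, search for Ubuntu Software Center in the Dash and click it to open it:

![Ubuntu Software Center][15]

You can think of Ubuntu Software Center as Google's Play Store or Apple's App Store. It lists all the software available for your Ubuntu system. You can search for an application by name, or browse the various categories to find software. You can also search by author. It's up to you.

Once you have found the application you want, select it. Software Center opens the application's description page, where you can read about the software, its ratings, and user reviews. If you like, you can also write a review.

Once you are sure you want it, click the Install button to install the selected application. Ubuntu will ask for your account password before installing it.

[Screenshot: installing software in Ubuntu Software Center][16]

Could it be any easier? I don't think so!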

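Tip: As I mentioned in [things to do after installing Ubuntu 16.04][17], you should enable the Canonical partner repository. By default, Ubuntu only provides software from its own (Ubuntu-certified) repositories.

But there is also a Canonical partner repository, which contains some closed-source proprietary software that Ubuntu does not control directly. Enabling it gives you access to more software. [Installing Skype in Ubuntu][18] is done that way.

In the Unity Dash, look for the Software & Updates tool.

[Screenshot: Software & Updates in the Unity Dash][19]

As shown below, open the Other Software tab and check the Canonical Partners option.

[Screenshot: enabling the Canonical Partners repository][20]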
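If you prefer the terminal, you can enable the partner repository by adding its source line yourself. The sketch below assumes the archive address `http://archive.canonical.com/ubuntu` used by Ubuntu releases of this era; `partner_source_line` is a hypothetical helper that only prints the line, and the comments show one way to install it on a real system.

```shell
#!/bin/sh
# Hypothetical helper: print the APT source line for the Canonical partner
# repository of a given Ubuntu release codename (e.g. "xenial" for 16.04).
partner_source_line() {
  printf 'deb http://archive.canonical.com/ubuntu %s partner\n' "$1"
}

partner_source_line xenial

# On a real Ubuntu system you could then run (requires root):
#   partner_source_line "$(lsb_release -sc)" | sudo tee /etc/apt/sources.list.d/canonical-partner.list
#   sudo apt-get update
```

Check the codename of your own release with `lsb_release -sc` before adding the line.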

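### 1.2 Remove software using Ubuntu Software Center (recommended)

We have just seen how to install software from Ubuntu Software Center. How do you remove installed software the same way?

Removing software from Ubuntu Software Center is as easy as installing it.

Open Software Center and click the Installed tab. It shows all installed software. Alternatively, you can simply search for the application by name.

To remove an application from Ubuntu, just click the Remove button. Again, you will need to enter your password.

[Screenshot: removing software in Ubuntu Software Center][22]

### 2.1 Install software in Ubuntu using .deb files

.deb files are quite similar to .exe files in Windows. They are an easy way to install software, and many software vendors provide their software in .deb format.

Google Chrome is one example: you can download its .deb file from the official website.

[Screenshot: downloading the Google Chrome .deb package][23]

Once you have downloaded the .deb file, just double-click it. It opens in Ubuntu Software Center, and you can install the software the same way as in section 1.1.

Alternatively, you can use the lightweight program [Gdebi to install .deb files in Ubuntu][24].

Once the software is installed, you are free to delete the downloaded .deb file.

Tip: A few things to keep in mind when dealing with .deb files:

* Make sure you download the .deb file from the official source. Only use packages provided on the official website or on GitHub.
* Make sure you download the .deb file for the correct system type (32-bit or 64-bit). Read our quick guide on [how to tell whether your Ubuntu system is 32-bit or 64-bit][8].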
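On Ubuntu, `dpkg --print-architecture` prints the architecture name that .deb packages use (`amd64` for 64-bit, `i386` for 32-bit). As a rough fallback, the hypothetical helper below maps the kernel's machine name from `uname -m` to those labels for the two x86 cases this tip covers. Anything else is reported as unknown.

```shell
#!/bin/sh
# Hypothetical helper: map a kernel machine name (as printed by `uname -m`)
# to the architecture label used in .deb file names.
deb_arch_for() {
  case "$1" in
    x86_64) echo amd64 ;;              # 64-bit x86
    i386|i486|i586|i686) echo i386 ;;  # 32-bit x86
    *) echo unknown ;;                 # other platforms: ask dpkg instead
  esac
}

echo "Download the .deb built for: $(deb_arch_for "$(uname -m)")"
```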

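### 2.2 Remove software installed from .deb files

Removing software installed from a .deb file follows the same steps we saw in section 1.2. Just open Ubuntu Software Center, search for the application name, and click Remove to uninstall it.

Alternatively, you can use the [Synaptic Package Manager][25]. It isn't required, but it comes in handy if you can't find an installed application in Ubuntu Software Center. Synaptic lists all the software available and installed on your system.

It is a very powerful and useful tool. Before Ubuntu Software Center was developed to offer a friendlier way of installing software, Synaptic was Ubuntu's default tool for installing and removing software.

You can install Synaptic Package Manager by clicking the link below (it will open in Ubuntu Software Center):

- [Install Synaptic Package Manager][26]

Open Synaptic Package Manager and find the software you want to remove. Installed software is marked with a green box. Click it and choose "Mark for Removal", then click "Apply" to remove the selected software.

[Screenshot: removing software with Synaptic Package Manager][27]

### 3.1 Install software in Ubuntu using the apt command (recommended)

You have probably seen websites telling you to use `sudo apt-get install` to install software in Ubuntu.

This command-line method is actually the same as the method we saw in section 1. The only difference is that instead of using Ubuntu Software Center to install or remove software, you use the command-line interface. Nothing else changes.

Installing software with `apt-get` is super easy. Just run the following command:

```
sudo apt-get install package_name
```

`sudo` is used here to gain "admin" or "root" (the Linux term) privileges. Replace package_name with the name of the package you want to install.

`apt-get` supports tab completion: type a few characters and press Tab, and it lists all the packages that match those characters.

### 3.2 Remove software in Ubuntu using the apt command (recommended)

From the command line, you can easily remove software installed from Ubuntu Software Center, with the `apt` command, or from .deb files.

Just use the following command, replacing package_name with the name of the software you want to remove:

```
sudo apt-get remove package_name
```

Again, you can take advantage of tab completion by pressing Tab.

Installing or removing software with `apt-get` is no rocket science; it is actually very simple. Using these simple commands gets you familiar with the Ubuntu command line, which helps a lot as you learn Linux over time. I suggest reading my detailed [guide to using apt-get][28] to learn more about the command.

- Suggested read: [Complete beginner's guide to the apt-get command in Linux][29]

### 4.1 Install applications in Ubuntu using PPAs

PPA stands for [Personal Package Archive][30]. It is another way for developers to provide software to Ubuntu users.

In section 1 we came across the term "repository". A repository is essentially a collection of software. The official Ubuntu repositories provide software that Ubuntu itself has certified. The Canonical partner repository contains applications provided by partner vendors.

A PPA, in turn, lets developers create their own APT repository. Once a user adds that repository to their system (an entry is added to APT's software sources, `sources.list`), they can use the software provided in the developer's repository.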
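To see which PPAs are currently enabled, you can scan the APT source files. This is a rough sketch. It assumes PPA entries live under `/etc/apt/sources.list.d/` and point at a `ppa.launchpad.net` address (or the newer `ppa.launchpadcontent.net` one). `list_ppas` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print enabled PPAs as "ppa:owner/name", one per line.
# Only active "deb" lines are considered; commented-out entries are skipped.
list_ppas() {
  dir=${1:-/etc/apt/sources.list.d}
  grep -rhE '^deb .*ppa\.launchpad(content)?\.net/' "$dir" 2>/dev/null \
    | sed -E 's#.*ppa\.launchpad(content)?\.net/([^/]+)/([^/ ]+).*#ppa:\2/\3#' \
    | sort -u
}

list_ppas
```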

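Now you might ask: since we already have the official Ubuntu repositories, why would we need PPAs?

The answer is that not all software is automatically added to the official Ubuntu repositories; only trusted software gets in. Suppose you develop a great Linux application and want to provide regular updates to your users, but getting it into the Ubuntu repositories takes months (if it is accepted at all). PPAs exist to solve this problem.

Apart from that, the official Ubuntu repositories usually don't pick up the latest version of a piece of software right away, because new versions can affect the security and stability of the system: they may introduce [regressions][31]. That's why new software versions take a while to reach the official repositories, sometimes several months.

But what if you don't want to wait for the latest version to show up in the Ubuntu repositories? That's where PPAs help: with a PPA you can get the latest version of the application.

Typically, a PPA is used with three commands. The first adds the PPA repository to your software sources. The second updates the package cache so your system knows about the newly available software versions. The third installs the software from the PPA.

I'll demonstrate by installing the [Numix theme][32] from its PPA:

```
sudo add-apt-repository ppa:numix/ppa
sudo apt-get update
sudo apt-get install numix-gtk-theme numix-icon-theme-circle
```

In the example above, we added a PPA provided by the [Numix project][33]. After updating the package information, we installed two applications available in the Numix PPA.

If you prefer a graphical application, you can use [Y PPA Manager][34]. With it you can easily search PPAs and add or remove them.

Note: The security of PPAs is often debated. My advice is to only add PPAs from trusted sources, preferably the software's official source.

### 4.2 Remove applications installed via PPA

I have covered this in detail in an earlier article on [removing PPAs in Ubuntu][35]; head over there for a deeper look at removing software installed from PPAs.

In short, you can use the following two commands:

```
sudo apt-get remove numix-gtk-theme numix-icon-theme-circle
sudo add-apt-repository --remove ppa:numix/ppa
```

The first command removes the software installed from the PPA. The second removes the PPA from your software sources (`sources.list`).

### 5.1 Install software in Ubuntu Linux from source code (not recommended)

I do not recommend installing applications from [source code][36]. It is cumbersome, error-prone, and really inconvenient. You'll have to jump through hoops to resolve dependencies, and you must keep the source files around so you can uninstall the application later.

Still, some users like to install software by compiling it from source, even though they don't develop software themselves. To tell the truth, I used to do this a lot myself, but that was five years ago, when I was an intern and had to develop software in Ubuntu. Since then I have preferred other ways of installing applications in Ubuntu. I think ordinary Linux desktop users are better off not installing software from source.

In this section I'll briefly list the steps for installing software from source:

* Download the source code of the software you want to install.
* Extract the downloaded file.
* Go into the extracted directory and look for a `README` or `INSTALL` file. Well-developed software includes such a file with instructions for installing or uninstalling it.
* Look for a configuration file called `configure`. If it is in the current directory, run it with `./configure`. It checks whether your system has all the software required to install the application (called "dependencies" in software terms). (LCTT translator's note: you can first run `./configure --help` to see the available build options, such as the install location and optional features and modules.) Note that not all software includes a configure file; in my view, that is a sign of poorly developed software.
* If the configure step reports missing dependencies, install them first.
* Once all the dependencies are installed, compile the application with the `make` command.
* After compiling, run `sudo make install` to install the application.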
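The steps above can be collected into a small script. This is a sketch, assuming an autotools-style project that ships a `configure` script accepting `--prefix`. `build_from_source` is a hypothetical helper name. Installing into a prefix you own, such as `$HOME/.local`, avoids `sudo` and keeps the installed files out of system directories.

```shell
#!/bin/sh
# Hypothetical helper: configure, compile and install an autotools-style
# source tree. Usage: build_from_source <source-dir> <install-prefix>
build_from_source() {
  src_dir=$1
  prefix=$2
  (
    cd "$src_dir" || exit 1
    ./configure --prefix="$prefix" || exit 1   # checks for dependencies
    make || exit 1                             # compiles the program
    make install                               # copies files under $prefix
  )
}

# Example (paths are placeholders):
#   build_from_source ~/Downloads/some-app-1.0 "$HOME/.local"
```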

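Note that some packages ship an installation script that you simply run to install the software. Most of the time, though, you won't be that lucky.

Also, software installed this way is not updated automatically, unlike software installed from the Ubuntu repositories, PPAs, or .deb packages.

If you insist on installing software from source, I recommend this detailed article on [installing software from source in Ubuntu][37].

### 5.2 Remove software installed from source code (not recommended)

If you thought installing software from source was hard, think again: uninstalling software installed this way is even more painful.

* First, you must not delete the source code you used to install the software.
* Second, you have to make sure there is a corresponding way to uninstall it. A poorly designed application provides no way to uninstall it, so you have to manually delete every file the package installed.

Normally, you would change into the extracted source directory and run the following command to uninstall the application:

```
sudo make uninstall
```

However, this does not guarantee that the uninstall will go smoothly every time.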
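One way to make a later uninstall less painful is to stage the installation and keep a list of what it installed. This sketch assumes the Makefile honors the common `DESTDIR` convention: autotools-generated Makefiles usually do, but hand-written ones may not. `stage_install` and the manifest format, one absolute path per line, are illustrative choices.

```shell
#!/bin/sh
# Hypothetical helper: run `make install` into a staging directory and write
# the list of installed paths (as they would appear under /) to a manifest.
# Usage: stage_install <source-dir> <absolute-stage-dir> <manifest-file>
stage_install() {
  src_dir=$1
  stage=$2
  manifest=$3
  (cd "$src_dir" && make install DESTDIR="$stage") || return 1
  # Strip the leading "." so "./usr/local/bin/x" becomes "/usr/local/bin/x".
  (cd "$stage" && find . \( -type f -o -type l \) -print) \
    | sed 's#^\.##' | sort > "$manifest"
}

# Afterwards (as root) you could copy the staged tree into place with
#   cp -a "$stage"/. /
# and later uninstall by deleting each path listed in the manifest.
```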

As you can see, installing software from source is a real hassle. That's why I don't recommend installing software from source in Ubuntu.

### Other ways to install software in Ubuntu

There are also a few other, less common ways to install software in Ubuntu. Since this article is already long enough, I won't go into them in depth. Here they are:

* Ubuntu's new [Snap packages][9]
* The [dpkg][10] command
* [AppImage][11]
* [pip][12]: for installing Python-based applications

### How do you install software in Ubuntu?

If you have been using Ubuntu, what is your favorite way to install software in Ubuntu Linux? Did you find this article useful? Please share your views, suggestions, and questions.

--------------------

About the author:

My name is Abhishek Prakash, a F.O.S.S. developer. I work as a professional software developer. I am an avid Linux and open source enthusiast. I use Ubuntu and believe that sharing is a virtue. Apart from Linux, I love classic detective mystery novels. I'm a huge fan of Agatha Christie's work.

--------------------------------------------------------------------------------

via: https://itsfoss.com/remove-install-software-ubuntu/

Author: [ABHISHEK PRAKASH][a]

Translator: [rusking](https://github.com/rusking)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://itsfoss.com/author/abhishek/
[1]:https://itsfoss.com/author/abhishek/
[2]:https://itsfoss.com/remove-install-software-ubuntu/#comments
[3]:http://www.facebook.com/share.php?u=https%3A%2F%2Fitsfoss.com%2Fremove-install-software-ubuntu%2F%3Futm_source%3Dfacebook%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare
[4]:https://twitter.com/share?original_referer=/&text=How+To+Install+And+Remove+Software+In+Ubuntu+%5BComplete+Guide%5D&url=https://itsfoss.com/remove-install-software-ubuntu/%3Futm_source%3Dtwitter%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare&via=abhishek_pc
[5]:https://plus.google.com/share?url=https%3A%2F%2Fitsfoss.com%2Fremove-install-software-ubuntu%2F%3Futm_source%3DgooglePlus%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare
[6]:https://www.linkedin.com/cws/share?url=https%3A%2F%2Fitsfoss.com%2Fremove-install-software-ubuntu%2F%3Futm_source%3DlinkedIn%26utm_medium%3Dsocial%26utm_campaign%3DSocialWarfare
[7]:https://www.reddit.com/submit?url=https://itsfoss.com/remove-install-software-ubuntu/&title=How+To+Install+And+Remove+Software+In+Ubuntu+%5BComplete+Guide%5D
[8]:https://itsfoss.com/32-bit-64-bit-ubuntu/
[9]:https://itsfoss.com/use-snap-packages-ubuntu-16-04/
[10]:https://help.ubuntu.com/lts/serverguide/dpkg.html
[11]:http://appimage.org/
[12]:https://pypi.python.org/pypi/pip
[13]:https://itsfoss.com/remove-install-software-ubuntu/managing-software-in-ubuntu-1/
[14]:https://itsfoss.com/reasons-switch-linux-windows-xp/
[15]:https://itsfoss.com/wp-content/uploads/2016/12/Ubuntu-Software-Center.png
[16]:https://itsfoss.com/remove-install-software-ubuntu/install-software-ubuntu-linux-1/
[17]:https://itsfoss.com/things-to-do-after-installing-ubuntu-16-04/
[18]:https://itsfoss.com/install-skype-ubuntu-1404/
[19]:https://itsfoss.com/ubuntu-notify-updates-frequently/software_update_ubuntu/
[20]:https://itsfoss.com/things-to-do-after-installing-ubuntu-14-04/enable_canonical_partner/
[21]:https://itsfoss.com/essential-linux-applications/
[22]:https://itsfoss.com/remove-install-software-ubuntu/uninstall-software-ubuntu/
[23]:https://itsfoss.com/remove-install-software-ubuntu/install-software-deb-package/
[24]:https://itsfoss.com/gdebi-default-ubuntu-software-center/
[25]:http://www.nongnu.org/synaptic/
[26]:apt://synaptic
[27]:https://itsfoss.com/remove-install-software-ubuntu/uninstall-software-ubuntu-synaptic/
[28]:https://itsfoss.com/apt-get-linux-guide/
[29]:https://itsfoss.com/apt-get-linux-guide/
[30]:https://help.launchpad.net/Packaging/PPA
[31]:https://en.wikipedia.org/wiki/Software_regression
[32]:https://itsfoss.com/install-numix-ubuntu/
[33]:https://numixproject.org/
[34]:https://itsfoss.com/easily-manage-ppas-ubuntu-1310-ppa-manager/
[35]:https://itsfoss.com/how-to-remove-or-delete-ppas-quick-tip/
[36]:https://en.wikipedia.org/wiki/Source_code
[37]:http://www.howtogeek.com/105413/how-to-compile-and-install-from-source-on-ubuntu/

100

published/20170102 A Guide To Buying A Linux Laptop.md

Normal file


@ -0,0 +1,100 @@

A Guide to Buying a Linux Laptop
============================================================

As everyone knows, if you go to a computer store to [buy a new laptop][5], all you will find are laptops preinstalled with Windows or Mac. Either way, you are forced to pay an extra fee: the Microsoft license, or the premium behind the Apple brand.

Of course, you could buy a laptop and install your favorite operating system yourself. However, the hardest part may be finding a laptop whose hardware works well with the operating system you want to install.

On top of that, you need to consider whether hardware drivers are available. So what should you do? The answer is simple: [buy a laptop with Linux preinstalled][6].

Fortunately, several reputable companies offer quality, well-known laptops with Linux preinstalled, so you don't have to worry about driver availability.

With that in mind, in this article we list three cost-effective options, depending on what you will use the laptop for.

### Linux laptops for everyday users

If you are looking for a Linux laptop for everyday work and entertainment, one that runs office software and a web browser such as Firefox or Chrome and has LAN/Wi-Fi connectivity, consider the Linux laptops from [System76][7]. They let you customize the processor, the RAM and disk size, and other components.

In addition, System76 provides lifetime support for all of its Ubuntu laptops. If this sounds good and you're interested, take a look at the [Lemur][8] or [Gazelle][9] laptops.

![Lemur Linux Laptop][1]

*Lemur Linux Laptop*

![Gazelle Linux Laptop][2]

*Gazelle Linux Laptop*

### Linux laptops for developers

If you are looking for a sturdy, reliable, good-looking, and powerful laptop for development work, consider [Dell's XPS 13 laptop][10].

This gorgeous 13-inch laptop has a Full HD display and a touch panel. Its price depends on the configuration: a 7th-generation Intel Core i5 or i7 processor, a 128 to 512 GB solid state drive, and 8 to 16 GB of RAM.

![Dell XPS Linux Laptop][11]

*Dell XPS Linux Laptop*

These are all important factors to take into account, and Dell has them covered. Unfortunately, Dell ProSupport only supports Ubuntu 16.04 LTS on this model (at the time of writing, December 2016).

### Linux laptops for sysadmins

Although sysadmins can easily handle installing Linux on bare-metal hardware, with a System76 product you avoid the hassle of hunting for drivers and fixing compatibility issues.

You can then configure the machine to suit your needs: boost its performance and increase the RAM to up to 32 GB, so you can run virtualized environments and perform all kinds of system-administration tasks.

If you're interested, consider the [Kudu][12] or [Oryx Pro][13] laptops.

![Kudu Linux Laptop][3]

*Kudu Linux Laptop*

![Oryx Pro Linux Laptop][4]

*Oryx Pro Laptop*

### Summary

In this article, we discussed why buying a laptop with Linux preinstalled is a good choice for everyday users, developers, and sysadmins. Once you have decided, you can happily think about how to spend the money you saved.

What else do you think is worth considering when buying a Linux laptop? Share it with us in the comments below.

As always, feel free to share your comments and thoughts about this article. We look forward to hearing from you.

--------------------------------------------------------------------------------

About the author:

Gabriel Cánepa is a GNU/Linux sysadmin and web developer from Villa Mercedes, San Luis, Argentina. He works for a world-leading consumer products company and takes great pleasure in using FOSS tools to increase productivity in all areas of his daily work.

--------------------------------------------------------------------------------

via: http://www.tecmint.com/buy-linux-laptops/

Author: [Gabriel Cánepa][a]

Translator: [rusking](https://github.com/rusking)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:http://www.tecmint.com/author/gacanepa/
[1]:http://www.tecmint.com/wp-content/uploads/2016/11/Lemur-Laptop.png
[2]:http://www.tecmint.com/wp-content/uploads/2016/11/Gazelle-Laptop.png
[3]:http://www.tecmint.com/wp-content/uploads/2016/11/Kudu-Linux-Laptop.png
[4]:http://www.tecmint.com/wp-content/uploads/2016/11/Oryx-Pro-Linux-Laptop.png
[5]:http://amzn.to/2fPxTms
[6]:http://amzn.to/2fPxTms
[7]:https://system76.com/laptops
[8]:https://system76.com/laptops/lemur
[9]:https://system76.com/laptops/gazelle
[10]:http://amzn.to/2fBLMGj
[11]:http://www.tecmint.com/wp-content/uploads/2016/11/Dells-XPS-Laptops.png
[12]:https://system76.com/laptops/kudu
[13]:https://system76.com/laptops/oryx

@ -0,0 +1,152 @@

Customizing Bash Colors and Prompt Content in the Linux Terminal
============================================================

Today, Bash is the default shell in most (if not all) modern Linux distributions. However, you may have noticed that the terminal colors and the prompt content differ from one distribution to another.

If you have been wondering how to customize them to make Bash nicer to use, or are simply curious, read on: this article shows you how.

### The PS1 Bash environment variable

The command prompt and its appearance are controlled by a variable called `PS1`. According to the **Bash** man page, **PS1** is the primary prompt string, displayed when the shell is ready to read a command.

**PS1** may contain a number of backslash-escaped special characters; see the **PROMPTING** section of the man page for what they mean.

To illustrate, let's first display the current content of `PS1` on our system (yours may look a little different):

```
$ echo $PS1
[\u@\h \W]\$
```

Now let's see how to customize PS1 to suit our own needs.

#### Customizing the PS1 format

According to the PROMPTING section of the man page, the special characters above mean the following:

- `\u`: the **username** of the current user.
- `\h`: the **hostname** up to the first dot (.) of the Fully-Qualified Domain Name (FQDN).
- `\W`: the **basename** of the current working directory, with `$HOME` (your home directory) abbreviated as a tilde (`~`).
- `\$`: `#` if the current user is root, otherwise `$`.

For example, to show the history number of the current command, we can add `\!`; to show the full FQDN instead of the short hostname, we can add `\H`.

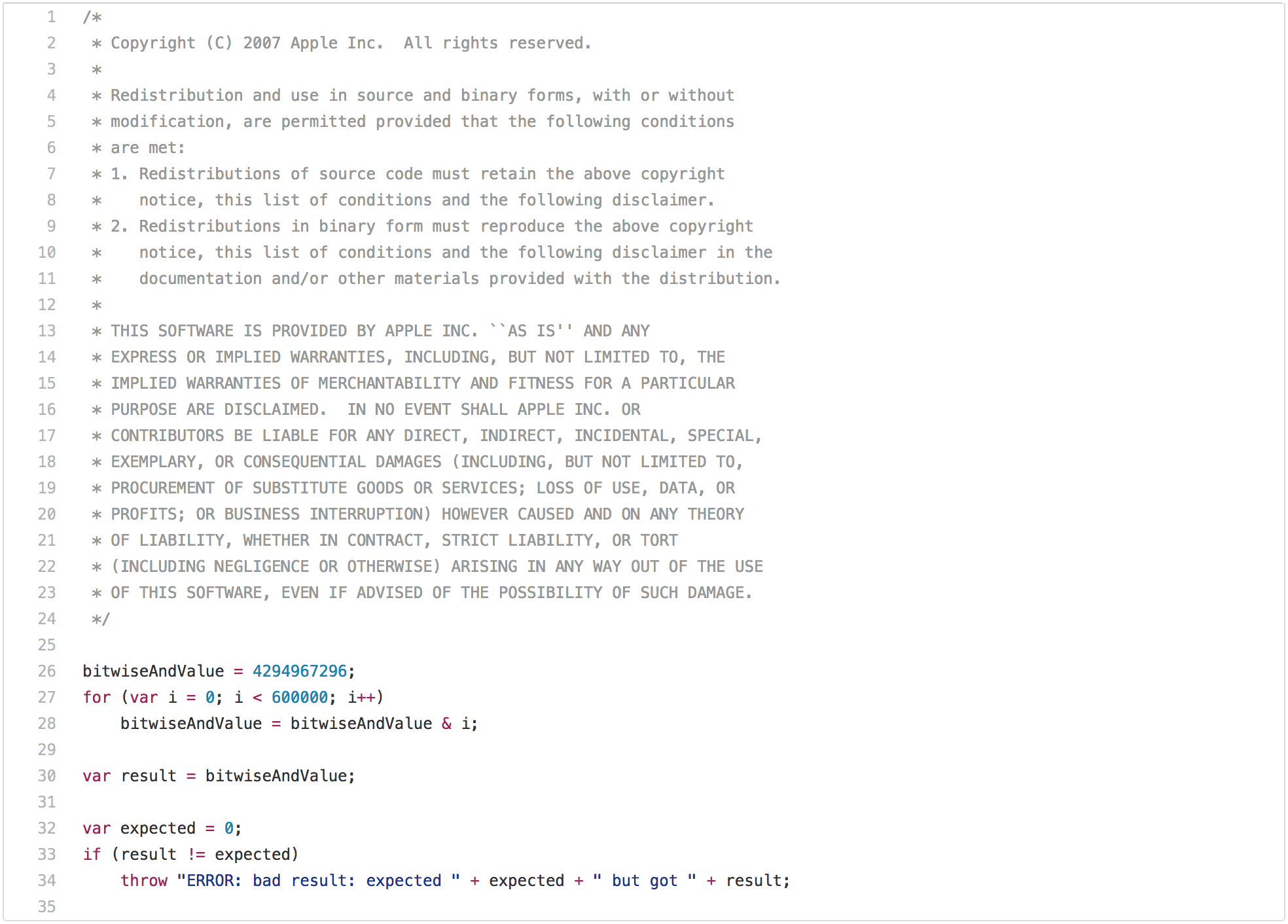

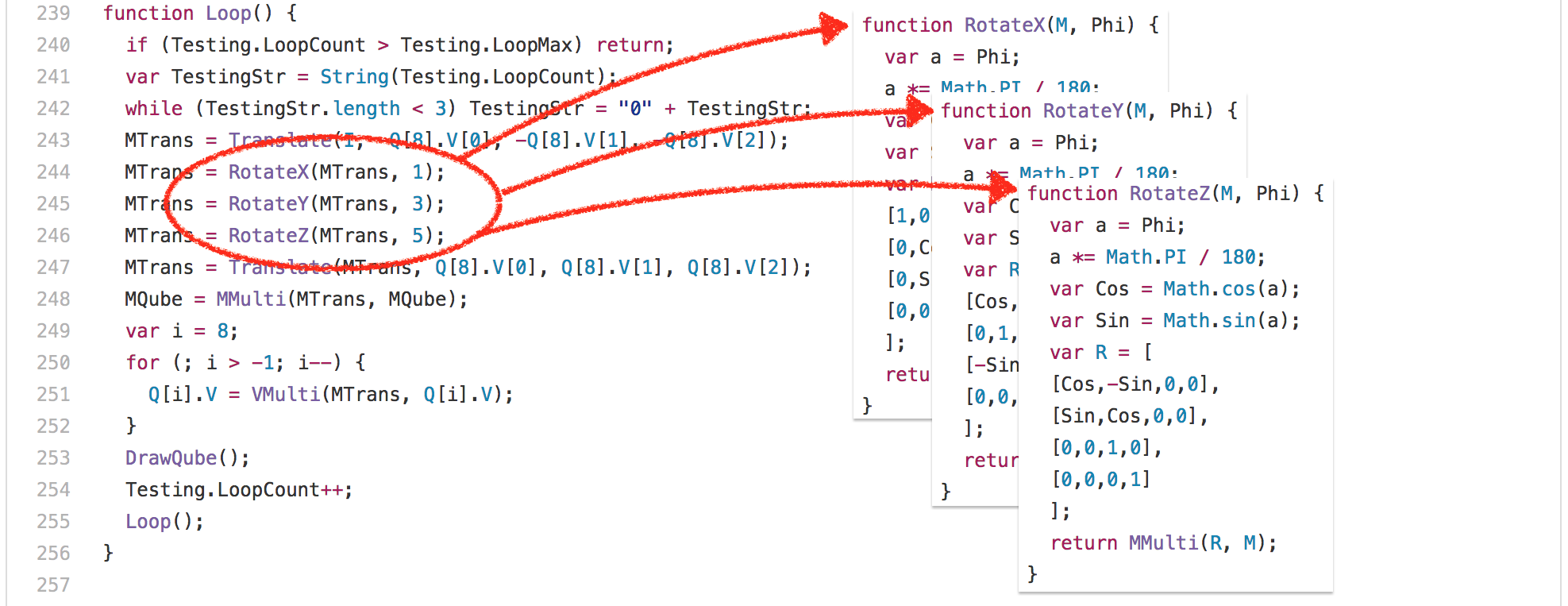

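In the following example, we add both special characters to our current environment with this command:

```
# Single quotes keep \$ intact, so Bash still shows # for root and $ otherwise
PS1='[\u@\H \W \!]\$'
```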

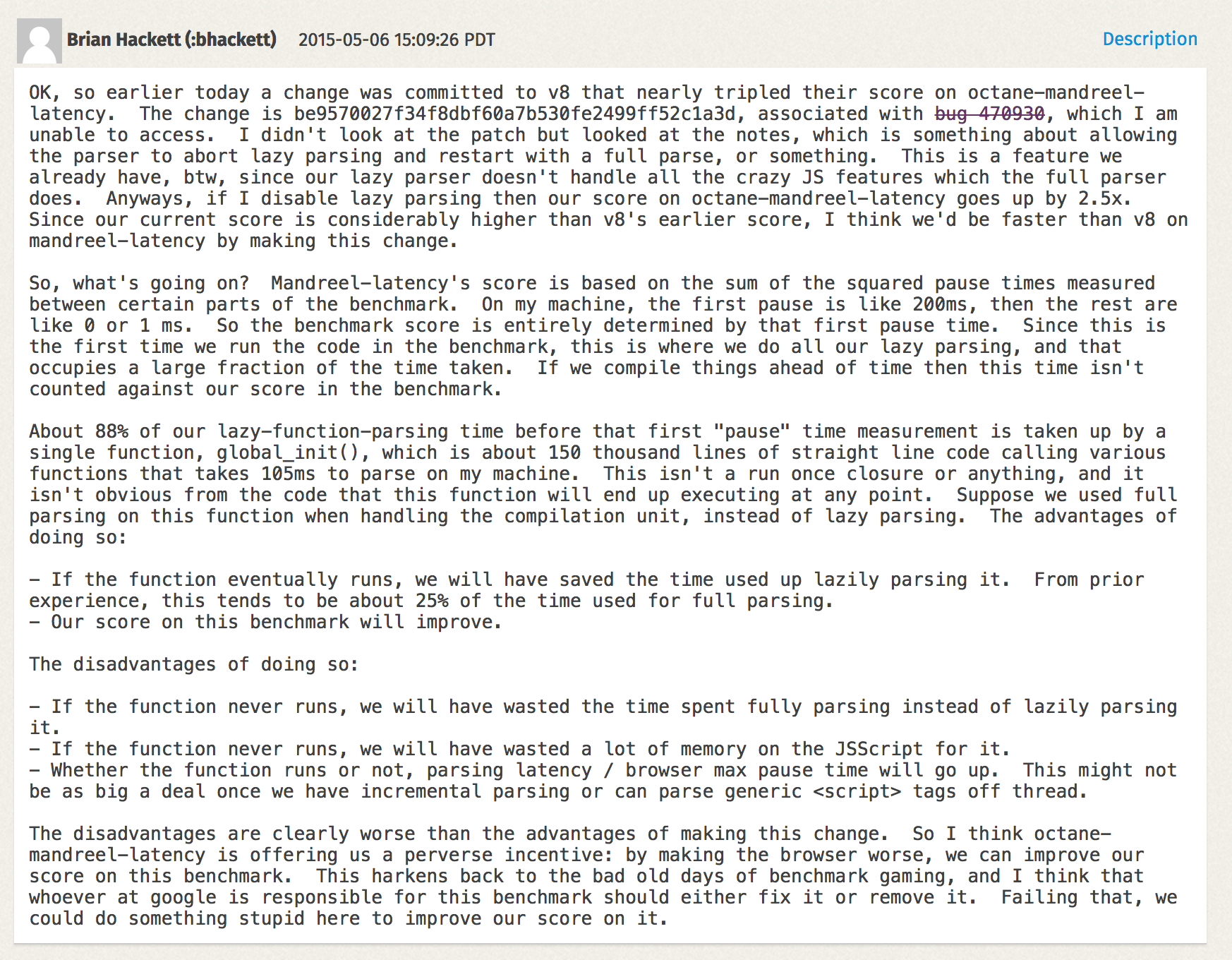

After you press Enter, the prompt changes as shown below. Compare the prompt before and after running the command:

![Customize Linux Terminal Prompt PS1][1]

*Customize the Linux terminal prompt with PS1*

Now let's go a little further and change the username and hostname part of the prompt, modifying both the text and its background.

In fact, we can customize three aspects of the prompt:

<table>
<tr>
<th>Text format</th>
<th>Foreground (text) color</th>
<th>Background color</th>
</tr>
<tr>
<td>0: Normal text</td>
<td>30: Black</td>
<td>40: Black</td>
</tr>
<tr>
<td>1: Bold</td>
<td>31: Red</td>
<td>41: Red</td>
</tr>
<tr>
<td>4: Underlined text</td>
<td>32: Green</td>
<td>42: Green</td>
</tr>
<tr>
<td></td>
<td>33: Yellow</td>
<td>43: Yellow</td>
</tr>
<tr>
<td></td>
<td>34: Blue</td>
<td>44: Blue</td>
</tr>
<tr>
<td></td>
<td>35: Purple</td>
<td>45: Purple</td>
</tr>
<tr>
<td></td>
<td>36: Cyan</td>
<td>46: Cyan</td>
</tr>
<tr>
<td></td>
<td>37: White</td>
<td>47: White</td>
</tr>
</table>

A color sequence starts with the `\e` special character followed by `[`, continues with the color codes, and ends with `m`.

Within that sequence, the three values (**background**, **format**, and **foreground**) are separated by semicolons (if a value is omitted, the default is assumed).

**Suggested read:** [Learn Bash shell scripting in Linux][2].

Also, since the three value ranges do not overlap, the order in which you specify background, format, and foreground does not matter.

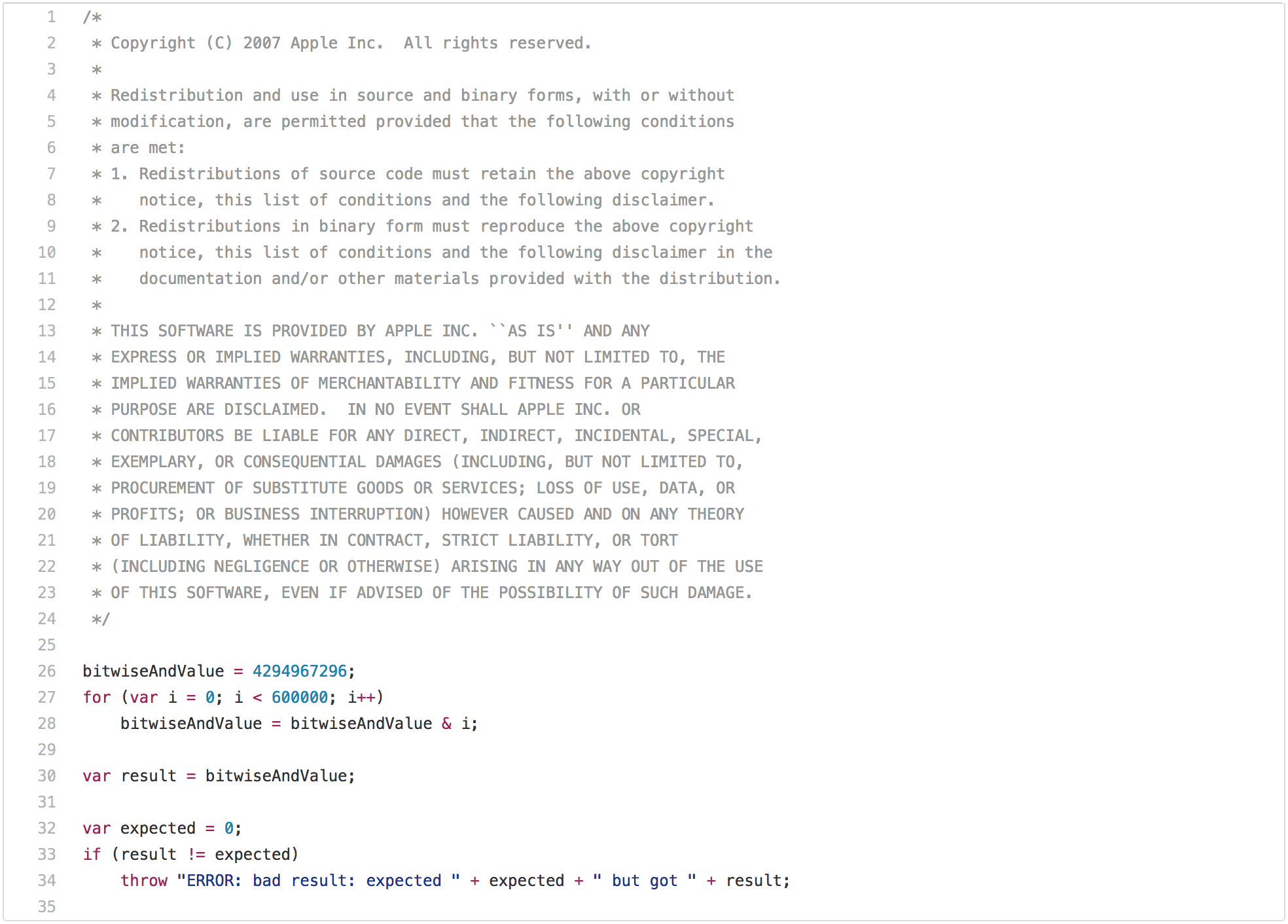

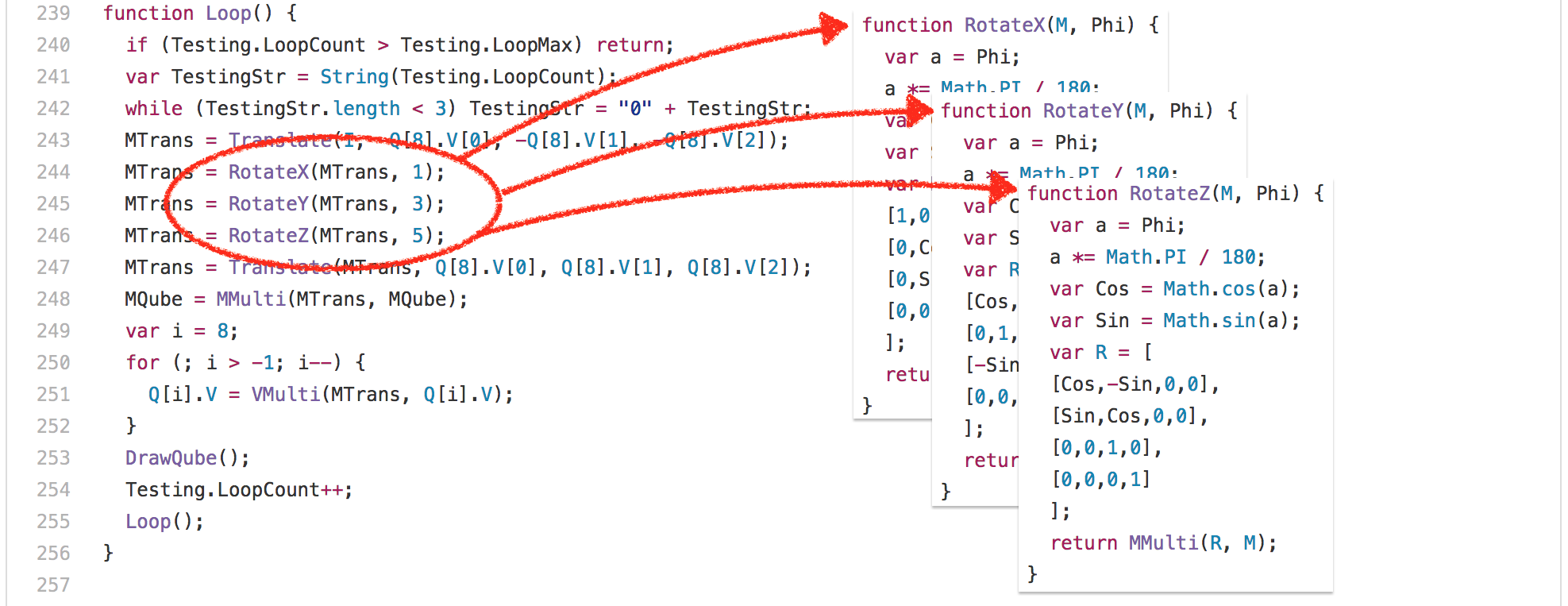

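For example, the following `PS1` makes the prompt yellow underlined text on a red background:

```
# \[ and \] mark the escape sequence as non-printing, so Bash measures the prompt width correctly
PS1='\[\e[41;4;33m\][\u@\h \W]\$ '
```

![Change Linux Terminal Color Prompt PS1][3]

*Change the Linux terminal prompt colors with PS1*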
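The prompt above leaves the colors switched on, so the commands you type (and often their output) also appear in yellow on red. To color only the prompt itself, end it with code `0` (reset), wrapped in `\[` and `\]` like the opening sequence. The sketch below uses `color_seq`, a hypothetical helper that builds such a wrapped sequence from a list of codes taken from the table above.

```shell
#!/bin/bash
# Hypothetical helper: turn SGR codes (e.g. 41 4 33) into a PS1-safe
# sequence "\[\e[41;4;33m\]". The codes are joined with semicolons.
color_seq() {
  local IFS=';'
  printf '\\[\\e[%sm\\]' "$*"
}

# Yellow underlined text on a red background, reset to defaults (code 0)
# after the "$" so typed commands keep the terminal's normal colors.
PS1="$(color_seq 41 4 33)[\u@\h \W]\\\$$(color_seq 0) "
```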

Pretty as it looks, this customization only lasts until the end of the current user session. If you close the terminal or log out, all the changes are lost.

To make the change permanent, add the following line to `~/.bashrc` or `~/.bash_profile`, depending on your distribution:

```
PS1='\[\e[41;4;33m\][\u@\h \W]\$ '
```
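If you apply this from a setup script, you may want to add the line only once, so that running the script again doesn't stack duplicate definitions in your startup file. The sketch below shows one way to do it. `persist_line` is a hypothetical helper; you would pass it `~/.bashrc` (or `~/.bash_profile`) and the `PS1` line shown above.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file unless an identical line is
# already present. Usage: persist_line <file> <line>
persist_line() {
  file=$1
  line=$2
  touch "$file"                                   # create the file if missing
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```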

Go ahead and play around: try any colors until you find the combination that suits you best.
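To compare combinations while experimenting, a short loop can print every foreground color from the table on a background of your choice. The sketch uses a hypothetical `color_chart` function. It writes the escape character as `\033` (its octal form), since not every `printf` understands `\e`.

```shell
#!/bin/sh
# Hypothetical helper: print one sample line for each foreground code 30-37
# on the given background code (default 40), resetting attributes after each.
color_chart() {
  bg=${1:-40}
  for fg in 30 31 32 33 34 35 36 37; do
    printf '\033[%s;%sm %s;%s sample \033[0m\n' "$bg" "$fg" "$bg" "$fg"
  done
}

color_chart 41
```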

##### Summary

In this article, we covered how to customize the colors and content of the Bash prompt. If you have any questions or suggestions about this article, please leave them in the comment form below. We look forward to hearing from you.

--------------------------------------------------------------------------------

About the author: Aaron Kili is a Linux and F.O.S.S. enthusiast, an aspiring Linux sysadmin and web developer, and currently a content creator for TecMint. He loves working with computers and strongly believes in sharing knowledge.

--------------------------------------------------------------------------------

via: http://www.tecmint.com/customize-bash-colors-terminal-prompt-linux/

Author: [Aaron Kili][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:http://www.tecmint.com/author/aaronkili/
[1]:http://www.tecmint.com/wp-content/uploads/2017/01/Customize-Linux-Terminal-Prompt.png
[2]:http://www.tecmint.com/category/bash-shell/
[3]:http://www.tecmint.com/wp-content/uploads/2017/01/Change-Linux-Terminal-Color-Prompt.png

@ -3,27 +3,27 @@ LXD 2.0 Series (Part 5): Image Management

This is the fifth article in the [LXD 2.0 series][0].

Since there are a lot of commands for managing LXD images, this article is going to be long. If you'd like a quick walkthrough of these same commands, you can [try our online demo][1]!

### Container images

If you've used LXC before, you probably remember those LXC "templates": shell scripts that basically produce a container filesystem along with a bit of configuration.

Most templates generate that filesystem by doing a full distribution bootstrap on the local machine. This can take quite a while, doesn't work for all distributions, and may require significant network bandwidth.

Back in LXC 1.0, I wrote a "download" template that let users download pre-packaged container images, generated on a central server with the template scripts, then heavily compressed, signed, and distributed over https. Many of our users switched from the old way of generating containers to this new, faster, and more reliable way of creating them.

With LXD, we are taking this one step further with a fully image-based workflow. All containers are created from an image, and LXD has advanced image caching and preloading support to keep the image store up to date.

### Interacting with LXD images

Before digging deeper into the image format, let's quickly go over what LXD lets you do.

#### Transparently importing images

All containers are created from an image. The image may come from a remote server and be pulled using its full hash, short hash, or an alias, but in the end every LXD container is created from a local image.

Here are a few examples:

@ -33,9 +33,9 @@ lxc launch ubuntu:75182b1241be475a64e68a518ce853e800e9b50397d2f152816c24f038c94d

lxc launch ubuntu:75182b1241be c3
```

All of these refer to the same remote image (at the time of writing). The first time you run any of them, the remote image is imported into the local LXD image store as a cached image, and the container is then created from it.

The next time you run one of these commands, LXD only checks whether the image is still up to date (when not referring to it by fingerprint); if it is, LXD creates the container without downloading anything.

Now that the image is cached in the local image store, you can also launch it from there, without even checking whether it's up to date:

@ -49,13 +49,13 @@ lxc launch 75182b1241be c4

lxc launch my-image c5
```

If you want to change some of the automatic caching or expiry behavior, there are [some commands](https://linux.cn/article-7687-1.html) for that in an earlier article in this series.

#### Manually importing images

##### Copying from an image server

If you want to copy a remote image into your local image store without immediately creating a container from it, use the `lxc image copy` command. It also lets you tweak some of the image flags, for example:

```
lxc image copy ubuntu:14.04 local:

@ -63,28 +63,29 @@ lxc image copy ubuntu:14.04 local:

This simply copies the remote image into your local image store.

If you want to refer to your copy of the image by something easier to remember than its fingerprint, you can add an alias when copying:

```
lxc image copy ubuntu:12.04 local: --alias old-ubuntu
lxc launch old-ubuntu c6
```

If you'd rather use the aliases set on the source server, you can ask LXD to copy them too:

```
lxc image copy ubuntu:15.10 local: --copy-aliases
lxc launch 15.10 c7
```

The copies above are all one-shot: the current version of the remote image is copied into the local image store. If you want LXD to keep the image up to date, as it does for the images in its cache, use the `--auto-update` flag:

```
lxc image copy images:gentoo/current/amd64 local: --alias gentoo --auto-update
```

##### Importing a tarball

If someone gives you a single tarball, you can import it with:

```
lxc image import <tarball>

@ -96,15 +97,15 @@ lxc image import <tarball>

lxc image import <tarball> --alias random-image
```

Now, if you were given two tarballs, you need to identify which one contains the LXD metadata. The tarball names usually tell you; if they don't, pick the smaller one, since metadata tarballs are tiny. Then import them together:

```
lxc image import <metadata tarball> <rootfs tarball>
```

##### Importing from a URL

`lxc image import` also works with a URL. If your https web server serves a path that sets the `LXD-Image-URL` and `LXD-Image-Hash` headers, LXD will pull that image into its image store.

For example:

@ -112,18 +113,17 @@ lxc image import <metadata tarball> <rootfs tarball>

lxc image import https://dl.stgraber.org/lxd --alias busybox-amd64
```

When pulling the image, LXD also sets some headers that the remote server can check to return an appropriate image: `LXD-Server-Architectures` and `LXD-Server-Version`.

This effectively makes a poor man's image server: any static web server can offer a user-friendly way to import your images.

#### Managing the local image store

Now that we have some images locally, let's see what we can do with them. We have already covered the main part, creating containers from them, but there is more you can do with the local image store.

##### Listing images

To list all images, run `lxc image list`:

```
stgraber@dakara:~$ lxc image list

@ -174,7 +174,7 @@ stgraber@dakara:~$ lxc image list os=ubuntu


+-------------+--------------+--------+---------------------------------------------+--------+----------+------------------------------+

```

To get all the information about an image, use `lxc image info`:

```
stgraber@castiana:~$ lxc image info ubuntu

@ -206,7 +206,7 @@ Source:

##### Editing images

An easy way to edit an image's properties and flags is:

```
lxc image edit <alias or fingerprint>

@ -228,7 +228,7 @@ properties:

public: false
```

You can change any property, turn auto-update on or off, or mark an image as public (more on that later).

##### Deleting images

@ -238,11 +238,11 @@ public: false

lxc image delete <alias or fingerprint>
```

Note that you don't have to remove cached entries: LXD removes them automatically once they expire (by default, 10 days after they were last used).

##### Exporting images

If you want to get a tarball of an image, use `lxc image export`, like this:

```
stgraber@dakara:~$ lxc image export old-ubuntu .

@ -254,34 +254,34 @@ stgraber@dakara:~$ ls -lh *.tar.xz

#### Image formats

LXD currently supports two image layouts: unified or split. Both are valid LXD formats, though the latter makes it easier to reuse the filesystem with other container or virtual machine runtimes.

LXD focuses on system containers and doesn't support any of the "standard" image formats for application containers, nor do we plan to.

Our images are pretty simple: they consist of the container filesystem plus a metadata file that records when the image was made, when it expires, what architecture it is for, and, optionally, a set of file templates.

See this document for up-to-date details on the [image format][1].

##### Unified image (one tarball)

The unified image format is what LXD uses when it generates images itself. It is a single large tarball containing the container filesystem in a `rootfs` directory, the `metadata.yaml` file at the root of the tarball, and any templates in a `templates` directory.

The tarball can be compressed any way you like (or not at all). The image hash is the sha256 of the resulting (compressed) tarball.

##### Split image (two tarballs)

This format is most commonly used by people who build their own images and already have a compressed filesystem tarball.

It consists of two separate tarballs. The first contains only the metadata used by LXD: the `metadata.yaml` file at its root and any templates in a `templates` directory.

The second tarball contains only the container filesystem, directly at its root. Most distributions already produce such tarballs, since they are commonly used to bootstrap new machines. This image format lets you reuse them without modification.

Both tarballs can be compressed (or not), and they can use different compression algorithms. The image hash is the sha256 of the metadata tarball and the rootfs tarball concatenated.
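You can reproduce both hashing rules with standard tools. This sketch assumes the fingerprint is the plain SHA-256 of the file contents. For a unified image that is the single tarball. For a split image it is the metadata tarball followed by the rootfs tarball. `image_fingerprint` is a hypothetical helper, and the file names in the comments are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: compute an LXD-style image fingerprint.
#   unified image: image_fingerprint image.tar.xz
#   split image:   image_fingerprint meta.tar.xz rootfs.tar.xz  (metadata first)
image_fingerprint() {
  cat "$@" | sha256sum | cut -d ' ' -f 1
}
```

The short fingerprints used earlier in this article, such as `75182b1241be`, are prefixes of such full hashes.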

##### Image metadata

A typical `metadata.yaml` file looks something like this:

```
architecture: "i686"

@ -336,31 +336,31 @@ templates:

##### Properties

The only two mandatory fields are the creation date (`creation_date`, a UNIX epoch timestamp) and the architecture (`architecture`). Everything else can be left unset and the image will still import fine.

The extra properties mainly help users figure out what the image is about. The `description` property, for example, is shown in `lxc image list`. Users can also search for specific images using key/value pairs of other properties.

Users can edit these properties with `lxc image edit`; the creation date and architecture fields, by contrast, are immutable.

##### Templates

The template mechanism allows some files in the container to be generated or regenerated at some point in the container's lifecycle.

We use the [pongo2 templating engine](https://github.com/flosch/pongo2) for this, and we export everything we know about the container to the template. That way, you can have custom images that use user-defined container properties or normal LXD properties to change the content of some specific files.

As you can see in the example above, we use templates in Ubuntu to seed `cloud-init` and to turn off some init scripts.

### Creating your own images

LXD focuses on running full Linux systems, which means we expect most users to just use clean distribution images rather than build their own.

However, there are a few cases where having your own images is useful, such as preconfigured images for your production servers, or images for distributions or architectures that we don't build.

#### Turning a container into an image

The easiest way to build an image with LXD at the moment is to turn a container into an image.

This can be done with:

```
lxc launch ubuntu:14.04 my-container

@ -369,7 +369,7 @@ lxc exec my-container bash

lxc publish my-container --alias my-new-image
```

You can even turn a past snapshot of a container into an image:

```
lxc publish my-container/some-snapshot --alias some-image

@ -379,25 +379,22 @@ lxc publish my-container/some-snapshot --alias some-image

|

||||

|

||||

构建你自己的镜像也很简单。

1. Generate the container filesystem. This entirely depends on the distribution you're using; for Ubuntu and Debian, it would be done with `debootstrap`.
2. Configure anything that's needed for the distribution to work properly in a container (if anything is needed).
3. Make a tarball of that container filesystem, optionally compressing it.
4. Write a new `metadata.yaml` file based on the one described above.
5. Create another tarball containing that `metadata.yaml` file.
6. Import those two tarballs as an LXD image with:

   ```
   lxc image import <metadata tarball> <rootfs tarball> --alias some-name
   ```
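
Steps 3, 5 and 6 could be scripted roughly as below. This is only a sketch under these assumptions:

- the root filesystem from steps 1 and 2 already sits in one directory;
- `metadata.yaml` (plus any `templates/`) sits in a separate directory;
- gzip compression and the output names `rootfs.tar.gz` and `metadata.tar.gz` are our own choices;
- the function name `build_split_image` is hypothetical.

The `lxc image import` call is left as a comment because it needs a running LXD daemon.

```shell
#!/bin/sh
# Hypothetical helper: pack a split image (metadata tarball + rootfs tarball).
#   $1: directory containing the container root filesystem (steps 1-2)
#   $2: directory containing metadata.yaml and optionally templates/ (step 4)
#   $3: output directory for the two tarballs
build_split_image() {
    rootfs=$1
    meta=$2
    out=$3
    [ -f "$meta/metadata.yaml" ] || { echo "missing $meta/metadata.yaml" >&2; return 1; }
    mkdir -p "$out" || return 1
    # Step 3: archive the filesystem contents; --numeric-owner stores raw
    # UIDs/GIDs instead of user and group names.
    tar -czf "$out/rootfs.tar.gz" --numeric-owner -C "$rootfs" . || return 1
    # Step 5: archive metadata.yaml (and templates/ if present).
    tar -czf "$out/metadata.tar.gz" -C "$meta" . || return 1
}

# Step 6 (requires LXD):
#   lxc image import out/metadata.tar.gz out/rootfs.tar.gz --alias some-name
```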

You'll probably need to go through this a few times before everything works, tweaking things here and there and possibly adding some templates and properties.

### Publishing your images

All LXD daemons act as image servers. Unless told otherwise, all images loaded into the image store are marked as private, so only trusted clients can retrieve them. If you want to create a public image server, all you have to do is mark some images as public and make sure your LXD daemon is listening on the network.

#### Just running a public LXD server

The easiest way to share LXD images is to run a publicly visible LXD daemon.

You just need to run:

```
lxc config set core.https_address "[::]:8443"
```

Then anyone can add your server as a public image server with:

```
lxc remote add <some name> <IP or DNS> --public
```

They can then use it just like any of the default image servers. Because the remote was added with `--public`, no authentication is required, and the client is restricted to images that have been marked as `public`.

To make an image public, just edit it with `lxc image edit` and set its `public` flag to `true`.
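
`lxc image edit` normally opens the image's YAML in an editor. For scripting, a filter like the one below could flip the flag non-interactively. It assumes that `lxc image show` prints a top-level `public: false` line and that `lxc image edit` accepts edited YAML on standard input. Treat the pipeline in the comment as an untested assumption. The helper name `make_public` is hypothetical.

```shell
#!/bin/sh
# Hypothetical filter: read image YAML on stdin and set the top-level
# "public" flag to true; indented (nested) keys are left untouched.
make_public() {
    sed 's/^public: false$/public: true/'
}

# Assumed usage against a running LXD daemon:
#   lxc image show my-new-image | make_public | lxc image edit my-new-image
```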

#### Using a static web server

As mentioned above, `lxc image import` supports downloading from a static HTTPS server. The basic requirements are:

* The server must support HTTPS with a valid certificate, TLS 1.2 and EC ciphers.
* When hitting the URL provided to `lxc image import`, the server must return the `LXD-Image-Hash` and `LXD-Image-URL` HTTP headers.
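
To check what such a server has to send, the sketch below prints the two header lines for a single image file. It assumes a unified image tarball whose fingerprint is the SHA-256 of the file itself. The function name and the example URL are hypothetical. Wiring the output into your web server's configuration is left to you.

```shell
#!/bin/sh
# Hypothetical helper: print the LXD-Image-Hash and LXD-Image-URL headers
# a static HTTPS server would return for one unified image tarball.
#   $1: path to the image tarball on disk
#   $2: public HTTPS URL from which that tarball can be downloaded
lxd_image_headers() {
    tarball=$1
    url=$2
    [ -r "$tarball" ] || return 1
    # sha256sum prints "<hash>  <file>"; keep only the hash.
    hash=$(sha256sum "$tarball" | cut -d ' ' -f 1)
    printf 'LXD-Image-Hash: %s\n' "$hash"
    printf 'LXD-Image-URL: %s\n' "$url"
}
```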

If you want to make it dynamic, you can have your server look for the `LXD-Server-Architectures` and `LXD-Server-Version` HTTP headers, which LXD sends when requesting the image. This lets you return the right image for the requesting server's architecture.
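
A dynamic server could, for instance, walk the architectures LXD announces and return the first one it has an image for. The sketch below rests on two assumptions:

- the `LXD-Server-Architectures` value is a comma-separated list, such as `x86_64, i686`;
- images are stored as `<architecture>.tar.gz` in one directory.

Both the layout and the function name `pick_image` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: choose an image file based on the architectures
# listed in the LXD-Server-Architectures request header.
#   $1: header value, assumed comma-separated (e.g. "x86_64, i686")
#   $2: directory holding one <architecture>.tar.gz per supported architecture
# Prints the path of the first match; returns 1 if none is available.
pick_image() {
    header=$1
    dir=$2
    # Split on commas and strip blanks, keeping the header's order.
    for arch in $(printf '%s\n' "$header" | tr ',' '\n' | tr -d ' \t'); do
        if [ -f "$dir/$arch.tar.gz" ]; then
            printf '%s\n' "$dir/$arch.tar.gz"
            return 0
        fi
    done
    return 1
}
```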

#### Building a simplestreams server

The `ubuntu:` and `ubuntu-daily:` remotes don't use the LXD protocol (`images:` does); instead, they use a different protocol called simplestreams.

Simplestreams is basically an image server description format. It uses JSON to describe a list of products and the files related to those products.

It is used by a variety of tools, such as OpenStack, Juju and MAAS, to find, download or mirror system images. LXD supports it as a native protocol for image retrieval.

While it certainly isn't the easiest way to start serving LXD images, it may be worth considering if your images are also used by some of those other tools.

More information on simplestreams can be found [here](https://launchpad.net/simplestreams).

### Conclusion

I hope this article gave you a good idea of how LXD manages images and how to build and publish your own. Having the exact same image available on a set of globally distributed systems is a big step up from the old LXC days, and it leads the way toward greater reproducibility.

### Extra information

via: https://www.stgraber.org/2016/03/30/lxd-2-0-image-management-512/

Author: [Stéphane Graber][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

alim0x translating

### Android 6.0 Marshmallow

In October 2015, Google brought Android 6.0 Marshmallow into the world. For the OS's launch, Google commissioned two new Nexus devices: the [Huawei Nexus 6P and LG Nexus 5X][39]. Rather than just the usual speed increase, the new phones also included a key piece of hardware: a fingerprint reader for Marshmallow's new fingerprint API. Marshmallow was also packing a crazy new search feature called "Google Now on Tap," user-controlled app permissions, a new data backup system, and plenty of other refinements.

beyondworld translating

Powerline – Adds Powerful Statuslines and Prompts to Vim Editor and Bash Terminal
============================================================

|

||||

|

||||

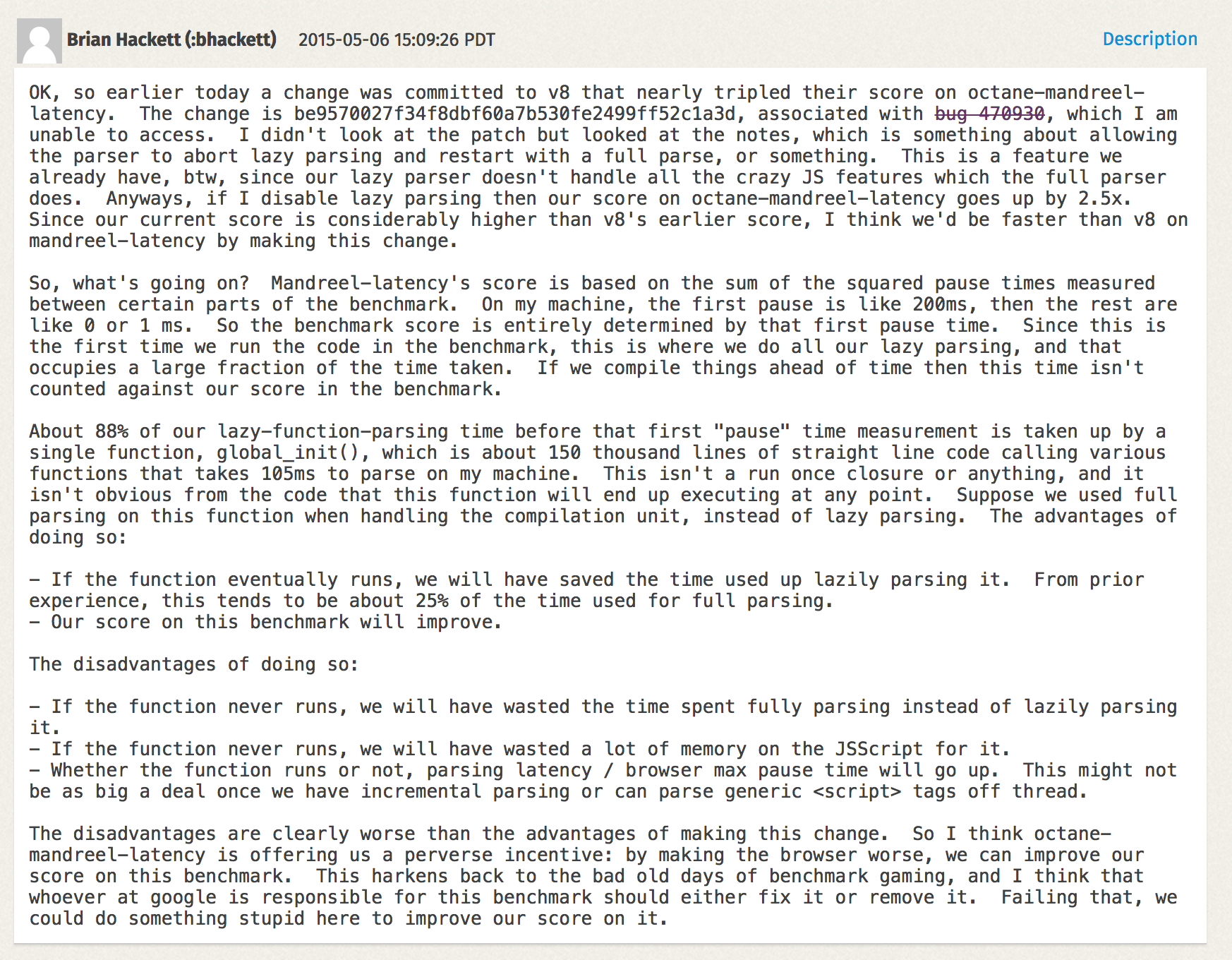

Powerline is a great statusline plugin for the [Vim editor][1]. It is written in Python and also provides statuslines and prompts for many other applications, such as bash, zsh and tmux.

![Add Power to Linux Terminal with Powerline Tool][2]

#### Features

1. It is written in Python, which makes it extensible and feature-rich.
2. It has a stable and testable code base that works well with Python 2.6+ and Python 3.
3. It supports prompts and statuslines in several Linux utilities and tools.
4. Its configuration and decorator colors are defined in JSON.
5. It is fast and lightweight, and its daemon support provides even better performance.

#### Powerline Screenshots

![Powerline Vim Statuslines][3]

In this article, I will show you how to install Powerline and the Powerline fonts, and how to use them with Bash and Vim on Red Hat- and Debian-based systems.

### Step 1: Installing Generic Requirements for Powerline

Due to a naming conflict with some other, unrelated projects, the powerline program is available on PyPI (the Python Package Index) under the package name `powerline-status`.

To install packages from PyPI, we need `pip` (a package management tool for installing Python packages). So let's first install pip on our Linux system.

#### Install Pip on Debian, Ubuntu and Linux Mint

```
# apt-get install python-pip
```

##### Sample Output

```
Reading package lists... Done
Building dependency tree
Reading state information... Done
Recommended packages:
python-dev-all python-wheel
The following NEW packages will be installed:
python-pip
0 upgraded, 1 newly installed, 0 to remove and 533 not upgraded.
Need to get 97.2 kB of archives.
After this operation, 477 kB of additional disk space will be used.
Get:1 http://archive.ubuntu.com/ubuntu/ trusty-updates/universe python-pip all 1.5.4-1ubuntu3 [97.2 kB]
Fetched 97.2 kB in 1s (73.0 kB/s)
Selecting previously unselected package python-pip.
(Reading database ... 216258 files and directories currently installed.)
Preparing to unpack .../python-pip_1.5.4-1ubuntu3_all.deb ...
Unpacking python-pip (1.5.4-1ubuntu3) ...
Processing triggers for man-db (2.6.7.1-1ubuntu1) ...
Setting up python-pip (1.5.4-1ubuntu3) ...
```

#### Install Pip on CentOS, RHEL and Fedora

On RHEL and CentOS, you first need to [enable the EPEL repository][4]; then install the pip package as shown.

```
# yum install python-pip
# dnf install python-pip      # on Fedora 22 and later
```

##### Sample Output

```
Installing:
python-pip noarch 7.1.0-1.el7 epel 1.5 M
Transaction Summary
=================================================================================
Install 1 Package
Total download size: 1.5 M
Installed size: 6.6 M
Is this ok [y/d/N]: y
Downloading packages:
python-pip-7.1.0-1.el7.noarch.rpm | 1.5 MB 00:00:01
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
Installing : python-pip-7.1.0-1.el7.noarch 1/1
Verifying : python-pip-7.1.0-1.el7.noarch 1/1
Installed:
python-pip.noarch 0:7.1.0-1.el7
Complete!
```

### Step 2: Installing Powerline Tool in Linux

Now it's time to install the latest development version of Powerline from its Git repository. For this, your system must have the git package installed so that pip can fetch the code.

```
# apt-get install git     # Debian, Ubuntu and Linux Mint
# yum install git         # CentOS and RHEL
# dnf install git         # Fedora 22 and later
```

Next, install Powerline with pip as shown.

```
# pip install git+https://github.com/powerline/powerline
```

##### Sample Output

```
Cloning git://github.com/Lokaltog/powerline to /tmp/pip-WAlznH-build
Running setup.py (path:/tmp/pip-WAlznH-build/setup.py) egg_info for package from git+git://github.com/Lokaltog/powerline
warning: no previously-included files matching '*.pyc' found under directory 'powerline/bindings'
warning: no previously-included files matching '*.pyo' found under directory 'powerline/bindings'
Installing collected packages: powerline-status
Found existing installation: powerline-status 2.2
Uninstalling powerline-status:
Successfully uninstalled powerline-status
Running setup.py install for powerline-status
warning: no previously-included files matching '*.pyc' found under directory 'powerline/bindings'
warning: no previously-included files matching '*.pyo' found under directory 'powerline/bindings'
changing mode of build/scripts-2.7/powerline-lint from 644 to 755
changing mode of build/scripts-2.7/powerline-daemon from 644 to 755
changing mode of build/scripts-2.7/powerline-render from 644 to 755
changing mode of build/scripts-2.7/powerline-config from 644 to 755
changing mode of /usr/local/bin/powerline-config to 755
changing mode of /usr/local/bin/powerline-lint to 755
changing mode of /usr/local/bin/powerline-render to 755
changing mode of /usr/local/bin/powerline-daemon to 755
Successfully installed powerline-status
Cleaning up...
```

### Step 3: Installing Powerline Fonts in Linux

Powerline uses special glyphs to draw its arrow effects and symbols. For this, you must have a symbol font or a patched font installed on your system.

Download the most recent version of the symbol font and the fontconfig configuration file using the following [wget command][5].

```
# wget https://github.com/powerline/powerline/raw/develop/font/PowerlineSymbols.otf
# wget https://github.com/powerline/powerline/raw/develop/font/10-powerline-symbols.conf
```

Then move the font to your fonts directory, either `/usr/share/fonts/` or `/usr/local/share/fonts/`, as follows. You can get the valid font paths with the `xset q` command.

```
# mv PowerlineSymbols.otf /usr/share/fonts/
```

Next, update your system's font cache as follows.

```
# fc-cache -vf /usr/share/fonts/
```

Now install the fontconfig file.

```
# mv 10-powerline-symbols.conf /etc/fonts/conf.d/
```

Note: If the custom symbols don't appear, close all terminal sessions and restart X for the changes to take effect.

### Step 4: Setting Powerline for Bash Shell and Vim Statuslines

In this section, we look at configuring Powerline for the bash shell and the Vim editor. First, make your terminal support 256 colors by adding the following line to your `~/.bashrc` file.

```
export TERM="screen-256color"
```

#### Enable Powerline on Bash Shell

To enable Powerline in the bash shell by default, you need to add the following snippet to your `~/.bashrc` file.

First, get the location of the installed powerline package using the following command.

```
# pip show powerline-status
Name: powerline-status
Version: 2.2.dev9999-git.aa33599e3fb363ab7f2744ce95b7c6465eef7f08
Location: /usr/local/lib/python2.7/dist-packages
Requires:
```

Once you know the actual location of powerline, replace the path in the last line below with the one reported on your system.

```
powerline-daemon -q
POWERLINE_BASH_CONTINUATION=1
POWERLINE_BASH_SELECT=1
. /usr/local/lib/python2.7/dist-packages/powerline/bindings/bash/powerline.sh
```
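
Instead of hard-coding the path, the `~/.bashrc` fragment could look it up from the same `pip show` output. This sketch makes a few assumptions:

- `pip` is the pip that installed `powerline-status`;
- its output contains a `Location:` line as shown above;
- the helper names `powerline_location` and `enable_powerline_bash` are our own, not part of Powerline.

Nothing is started if the binding file cannot be found, so a broken install does not break your shell.

```shell
# Hypothetical ~/.bashrc fragment.

# Print the value of the "Location:" field from `pip show` output on stdin.
powerline_location() {
    awk -F': ' '$1 == "Location" { print $2; exit }'
}

# Start the daemon and source the bash binding only if the file exists.
enable_powerline_bash() {
    local loc binding
    loc=$(pip show powerline-status 2>/dev/null | powerline_location)
    binding="$loc/powerline/bindings/bash/powerline.sh"
    if [ -n "$loc" ] && [ -r "$binding" ]; then
        powerline-daemon -q
        POWERLINE_BASH_CONTINUATION=1
        POWERLINE_BASH_SELECT=1
        . "$binding"
    fi
}
```

Add a call to `enable_powerline_bash` after these definitions in `~/.bashrc` to activate it.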

Now log out and log back in, and you will see the powerline statusline as shown below.

![Bash Powerline Statuslines][6]

Try changing to different directories and keep an eye on how the "breadcrumb" prompt changes to show your current location.

You will also be able to see pending background jobs. If powerline is installed on a remote Linux machine, you will notice that the prompt adds the hostname when you connect via SSH.

#### Enable Powerline for Vim

If Vim is your favorite editor, luckily there is a powerful plugin for Vim too. To enable it, add these lines to your `~/.vimrc` file, again adjusting the path to the location reported by `pip show`.

```
set rtp+=/usr/local/lib/python2.7/dist-packages/powerline/bindings/vim/
set laststatus=2
set t_Co=256
```
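
If you prefer to script this step, the sketch below appends the three settings only when they are not already present, so running it twice leaves the file unchanged. The helper name `add_powerline_vim` is hypothetical. Its first argument is assumed to be the `Location:` value reported by `pip show powerline-status`.

```shell
#!/bin/sh
# Hypothetical helper: idempotently add the Powerline settings to a vimrc.
#   $1: Powerline location, e.g. /usr/local/lib/python2.7/dist-packages
#   $2: vimrc path (defaults to ~/.vimrc)
add_powerline_vim() {
    loc=$1
    vimrc=${2:-$HOME/.vimrc}
    touch "$vimrc" || return 1
    for line in "set rtp+=$loc/powerline/bindings/vim/" "set laststatus=2" "set t_Co=256"; do
        # -x: whole-line match, -F: fixed string (the path contains dots).
        grep -qxF "$line" "$vimrc" || printf '%s\n' "$line" >> "$vimrc"
    done
}
```

For example: `add_powerline_vim /usr/local/lib/python2.7/dist-packages`.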

Now you can launch Vim and see a spiffy new status line:

![Vim Powerline Statuslines][7]

### Summary

Powerline helps you set up colorful, good-looking statuslines and prompts in several applications, which is nice for coding environments. I hope you find this guide helpful; remember to post a comment if you need any help or have additional ideas.

--------------------------------------------------------------------------------

About the author:

I am Ravi Saive, creator of TecMint. A computer geek and Linux guru who loves to share tricks and tips on the Internet. Most of my servers run on the open source platform called Linux. Follow me: Twitter, Facebook and Google+

--------------------------------------------------------------------------------

via: http://www.tecmint.com/powerline-adds-powerful-statuslines-and-prompts-to-vim-and-bash/

Author: [Ravi Saive][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.tecmint.com/author/admin/
[1]:http://www.tecmint.com/vi-editor-usage/
[2]:http://www.tecmint.com/wp-content/uploads/2015/10/Install-Powerline-Statuslines-in-Linux.png
[3]:http://www.tecmint.com/wp-content/uploads/2015/10/Powerline-Vim-Statuslines.png
[4]:http://www.tecmint.com/how-to-enable-epel-repository-for-rhel-centos-6-5/
[5]:http://www.tecmint.com/10-wget-command-examples-in-linux/
[6]:http://www.tecmint.com/wp-content/uploads/2015/10/Bash-Powerline-Statuslines.gif
[7]:http://www.tecmint.com/wp-content/uploads/2015/10/Vim-Powerline-Statuslines.gif

Cathon is translating---

What is Docker?
================

This is an excerpt from [Docker: Up and Running][3] by Karl Matthias and Sean P. Kane. It may contain references to unavailable content that is part of the larger resource.

Docker was first introduced to the world, with no pre-announcement and little fanfare, by Solomon Hykes, founder and CEO of dotCloud, in a five-minute [lightning talk][4] at the Python Developers Conference in Santa Clara, California, on March 15, 2013. At the time of this announcement, only about 40 people outside dotCloud had been given the opportunity to play with Docker.

Within a few weeks of this announcement, there was a surprising amount of press. The project was quickly open-sourced and made publicly available on [GitHub][5], where anyone could download and contribute to the project. Over the next few months, more and more people in the industry started hearing about Docker and how it was going to revolutionize the way software was built, delivered, and run. And within a year, almost no one in the industry was unaware of Docker, but many were still unsure what it was exactly, and why people were so excited about it.

Docker is a tool that promises to easily encapsulate the process of creating a distributable artifact for any application, deploying it at scale into any environment, and streamlining the workflow and responsiveness of agile software organizations.

### The Promise of Docker

While ostensibly viewed as a virtualization platform, Docker is far more than that. Docker’s domain spans a few crowded segments of the industry that include technologies like KVM, Xen, OpenStack, Mesos, Capistrano, Fabric, Ansible, Chef, Puppet, SaltStack, and so on. There is something very telling about the list of products that Docker competes with, and maybe you’ve spotted it already. For example, most engineers would not say that virtualization products compete with configuration management tools, yet both technologies are being disrupted by Docker. The technologies in that list are also generally acclaimed for their ability to improve productivity and that’s what is causing a great deal of the buzz. Docker sits right in the middle of some of the most enabling technologies of the last decade.

If you were to do a feature-by-feature comparison of Docker and the reigning champion in any of these areas, Docker would very likely look like a middling competitor. It’s stronger in some areas than others, but what Docker brings to the table is a feature set that crosses a broad range of workflow challenges. By combining the ease of application deployment tools like Capistrano and Fabric, with the ease of administrating virtualization systems, and then providing hooks that make workflow automation and orchestration easy to implement, Docker provides a very enabling feature set.

Lots of new technologies come and go, and a dose of skepticism about the newest rage is always healthy. Without digging deeper, it would be easy to dismiss Docker as just another technology that solves a few very specific problems for developers or operations teams. If you look at Docker as a virtualization or deployment technology alone, it might not seem very compelling. But Docker is much more than it seems on the surface.

It is hard and often expensive to get communication and processes right between teams of people, even in smaller organizations. Yet we live in a world where the communication of detailed information between teams is increasingly required to be successful. A tool that reduces the complexity of that communication while aiding in the production of more robust software would be a big win. And that’s exactly why Docker merits a deeper look. It’s no panacea, and implementing Docker well requires some thought, but Docker is a good approach to solving some real-world organizational problems and helping enable companies to ship better software faster. Delivering a well-designed Docker workflow can lead to happier technical teams and real money for the organization’s bottom line.

So where are companies feeling the most pain? Shipping software at the speed expected in today’s world is hard to do well, and as companies grow from one or two developers to many teams of developers, the burden of communication around shipping new releases becomes much heavier and harder to manage. Developers have to understand a lot of complexity about the environment they will be shipping software into, and production operations teams need to increasingly understand the internals of the software they ship. These are all generally good skills to work on because they lead to a better understanding of the environment as a whole and therefore encourage the designing of robust software, but these same skills are very difficult to scale effectively as an organization’s growth accelerates.

The details of each company’s environment often require a lot of communication that doesn’t directly build value in the teams involved. For example, requiring developers to ask an operations team for _release 1.2.1_ of a particular library slows them down and provides no direct business value to the company. If developers could simply upgrade the version of the library they use, write their code, test with the new version, and ship it, the delivery time would be measurably shortened. If operations people could upgrade software on the host system without having to coordinate with multiple teams of application developers, they could move faster. Docker helps to build a layer of isolation in software that reduces the burden of communication in the world of humans.

Beyond helping with communication issues, Docker is opinionated about software architecture in a way that encourages more robustly crafted applications. Its architectural philosophy centers around atomic or throwaway containers. During deployment, the whole running environment of the old application is thrown away with it. Nothing in the environment of the application will live longer than the application itself and that’s a simple idea with big repercussions. It means that applications are not likely to accidentally rely on artifacts left by a previous release. It means that ephemeral debugging changes are less likely to live on in future releases that picked them up from the local filesystem. And it means that applications are highly portable between servers because all state has to be included directly into the deployment artifact and be immutable, or sent to an external dependency like a database, cache, or file server.

This leads to applications that are not only more scalable, but more reliable. Instances of the application container can come and go with little repercussion on the uptime of the frontend site. These are proven architectural choices that have been successful for non-Docker applications, but the design choices included in Docker’s own design mean that Dockerized applications will follow these best practices by requirement and that’s a good thing.

### Benefits of the Docker Workflow

It’s hard to cohesively group into categories all of the things Docker brings to the table. When implemented well, it benefits organizations, teams, developers, and operations engineers in a multitude of ways. It makes architectural decisions simpler because all applications essentially look the same on the outside from the hosting system’s perspective. It makes tooling easier to write and share between applications. Nothing in this world comes with benefits and no challenges, but Docker is surprisingly skewed toward the benefits. Here are some more of the things you get with Docker:

Packaging software in a way that leverages the skills developers already have.

Many companies have had to create positions for release and build engineers in order to manage all the knowledge and tooling required to create software packages for their supported platforms. Tools like rpm, mock, dpkg, and pbuilder can be complicated to use, and each one must be learned independently. Docker wraps up all your requirements together into one package that is defined in a single file.

Bundling application software and required OS filesystems together in a single standardized image format.

In the past, you typically needed to package not only your application, but many of the dependencies that it relied on, including libraries and daemons. However, you couldn’t ever ensure that 100 percent of the execution environment was identical. All of this made packaging difficult to master, and hard for many companies to accomplish reliably. Often someone running Scientific Linux would resort to trying to deploy a community package tested on Red Hat Linux, hoping that the package was close enough to what they needed. With Docker you deploy your application along with every single file required to run it. Docker’s layered images make this an efficient process that ensures that your application is running in the expected environment.

Using packaged artifacts to test and deliver the exact same artifact to all systems in all environments.

When developers commit changes to a version control system, a new Docker image can be built, which can go through the whole testing process and be deployed to production without any need to recompile or repackage at any step in the process.

Abstracting software applications from the hardware without sacrificing resources.

Traditional enterprise virtualization solutions like VMware are typically used when people need to create an abstraction layer between the physical hardware and the software applications that run on it, at the cost of resources. The hypervisors that manage the VMs and each VM’s running kernel use a percentage of the hardware system’s resources, which are then no longer available to the hosted applications. A container, on the other hand, is just another process that talks directly to the Linux kernel and therefore can utilize more resources, up until the system or quota-based limits are reached.

When Docker was first released, Linux containers had been around for quite a few years, and many of the other technologies that it is built on are not entirely new. However, Docker’s unique mix of strong architectural and workflow choices combine together into a whole that is much more powerful than the sum of its parts. Docker finally makes Linux containers, which have been around for more than a decade, approachable to the average technologist. It fits containers relatively easily into the existing workflow and processes of real companies. And the problems discussed above have been felt by so many people that interest in the Docker project has been accelerating faster than anyone could have reasonably expected.

In the first year, newcomers to the project were surprised to find out that Docker wasn’t already production-ready, but a steady stream of commits from the open source Docker community has moved the project forward at a very brisk pace. That pace seems to only pick up steam as time goes on. As Docker has now moved well into the 1.x release cycle, stability is good, production adoption is here, and many companies are looking to Docker as a solution to some of the serious complexity issues that they face in their application delivery processes.

### What Docker Isn’t
