mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-16 22:42:21 +08:00

commit

0570fabae8

@ -1,25 +1,31 @@

|

||||

Linux 系统查询机器最近重新启动的日期和时间的命令

|

||||

如何在 Linux 系统查询机器最近重启时间

|

||||

======

|

||||

|

||||

在你的 Linux 或 类 UNIX 系统中,你是如何查询系统重新启动的日期和时间?你是如何查询系统关机的日期和时间? last 命令不仅可以按照时间从近到远的顺序列出指定的用户,终端和主机名,而且还可以列出指定日期和时间登录的用户。输出到终端的每一行都包括用户名,会话终端,主机名,会话开始和结束的时间,会话持续的时间。使用下面的命令来查看 Linux 或类 UNIX 系统重启和关机的时间和日期。

|

||||

在你的 Linux 或类 UNIX 系统中,你是如何查询系统上次重新启动的日期和时间?怎样显示系统关机的日期和时间? `last` 命令不仅可以按照时间从近到远的顺序列出该会话的特定用户、终端和主机名,而且还可以列出指定日期和时间登录的用户。输出到终端的每一行都包括用户名、会话终端、主机名、会话开始和结束的时间、会话持续的时间。要查看 Linux 或类 UNIX 系统重启和关机的时间和日期,可以使用下面的命令。

|

||||

|

||||

- last 命令

|

||||

- who 命令

|

||||

- `last` 命令

|

||||

- `who` 命令

|

||||

|

||||

|

||||

### 使用 who 命令来查看系统重新启动的时间/日期

|

||||

|

||||

你需要在终端使用 [who][1] 命令来打印有哪些人登陆了系统。who 命令同时也会显示上次系统启动的时间,使用 last 命令来查看系统重启和关机的日期和时间,运行:

|

||||

你需要在终端使用 [who][1] 命令来打印有哪些人登录了系统,`who` 命令同时也会显示上次系统启动的时间。使用 `last` 命令来查看系统重启和关机的日期和时间,运行:

|

||||

|

||||

`$ who -b`

|

||||

```

|

||||

$ who -b

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

`system boot 2017-06-20 17:41`

|

||||

```

|

||||

system boot 2017-06-20 17:41

|

||||

```
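When the boot time is needed inside a script rather than read by eye, the date and time fields can be cut out of the `who -b` line. The sketch below is only an illustration: `boot_time` is a hypothetical helper name, the sample line is copied from the output above rather than read from a live system, and other platforms or locales may print the date in a different layout.

```shell
# boot_time: read `who -b` style output on stdin and print "DATE TIME".
# Hypothetical helper; assumes the "system boot DATE TIME" layout shown above.
boot_time() {
  awk '$1 == "system" && $2 == "boot" { print $3, $4 }'
}

# Sample line taken from the article; on a real machine use: who -b | boot_time
sample='         system boot  2017-06-20 17:41'
last_boot=$(printf '%s\n' "$sample" | boot_time)
echo "$last_boot"
```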

|

||||

|

||||

使用 last 命令来查询最近登陆到系统的用户和系统重启的时间和日期。输入:

|

||||

使用 `last` 命令来查询最近登录到系统的用户和系统重启的时间和日期。输入:

|

||||

|

||||

`$ last reboot | less`

|

||||

```

|

||||

$ last reboot | less

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

@ -27,7 +33,9 @@ Linux 系统查询机器最近重新启动的日期和时间的命令

|

||||

|

||||

或者,尝试输入:

|

||||

|

||||

`$ last reboot | head -1`

|

||||

```

|

||||

$ last reboot | head -1

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

@ -35,13 +43,15 @@ Linux 系统查询机器最近重新启动的日期和时间的命令

|

||||

reboot system boot 4.9.0-3-amd64 Sat Jul 15 19:19 still running

|

||||

```

|

||||

|

||||

last 命令通过查看文件 /var/log/wtmp 来显示自 wtmp 文件被创建时的所有登陆(和注销)的用户。每当系统重新启动时,伪用户将重启信息记录到日志。因此,`last reboot` 命令将会显示自日志文件被创建以来的所有重启信息。

|

||||

`last` 命令通过查看文件 `/var/log/wtmp` 来显示自 wtmp 文件被创建时的所有登录(和登出)的用户。每当系统重新启动时,这个伪用户 `reboot` 就会登录。因此,`last reboot` 命令将会显示自该日志文件被创建以来的所有重启信息。

|

||||

|

||||

### 查看系统上次关机的时间和日期

|

||||

|

||||

可以使用下面的命令来显示上次关机的日期和时间:

|

||||

|

||||

`$ last -x|grep shutdown | head -1`

|

||||

```

|

||||

$ last -x|grep shutdown | head -1

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

@ -51,10 +61,10 @@ shutdown system down 2.6.15.4 Sun Apr 30 13:31 - 15:08 (01:37)

|

||||

|

||||

命令中,

|

||||

|

||||

* **-x**:显示系统开关机和运行等级改变信息

|

||||

* `-x`:显示系统关机和运行等级改变信息

|

||||

|

||||

|

||||

这里是 last 命令的其它的一些选项:

|

||||

这里是 `last` 命令的其它的一些选项:

|

||||

|

||||

```

|

||||

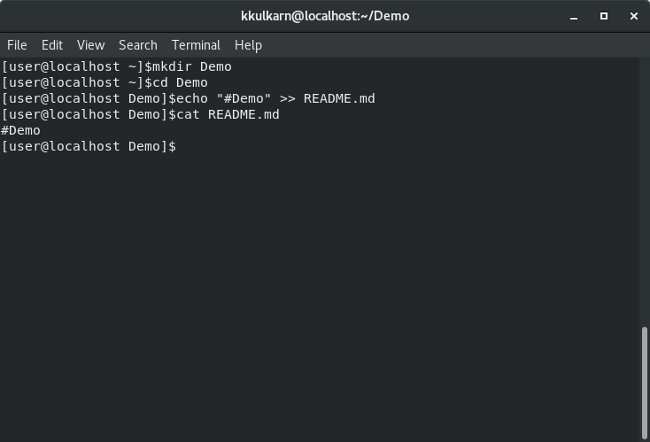

$ last

|

||||

@ -62,6 +72,7 @@ $ last -x

|

||||

$ last -x reboot

|

||||

$ last -x shutdown

|

||||

```

|

||||

|

||||

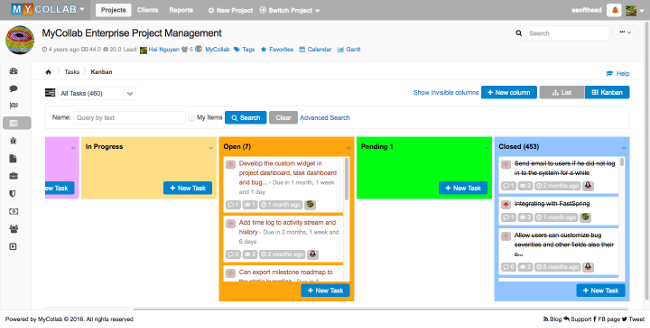

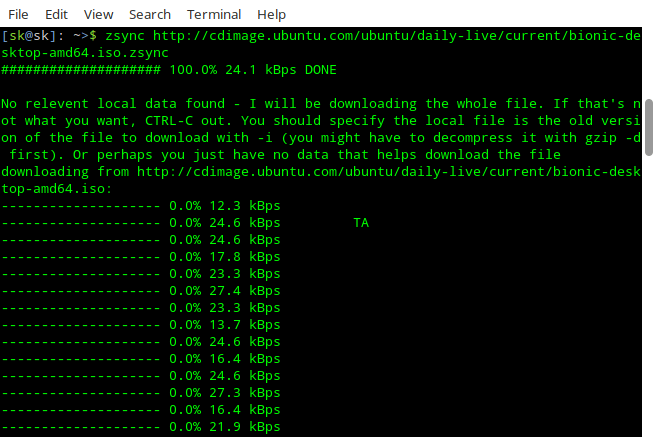

示例输出:

|

||||

|

||||

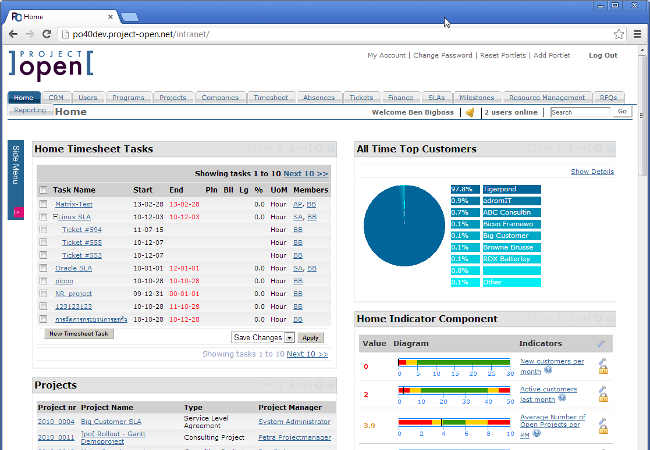

![Fig.01: How to view last Linux System Reboot Date/Time ][3]

|

||||

@ -70,7 +81,9 @@ $ last -x shutdown

|

||||

|

||||

评论区的读者建议的另一个命令如下:

|

||||

|

||||

`$ uptime -s`

|

||||

```

|

||||

$ uptime -s

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

@ -82,7 +95,9 @@ $ last -x shutdown

|

||||

|

||||

在终端输入下面的命令:

|

||||

|

||||

`$ last reboot`

|

||||

```

|

||||

$ last reboot

|

||||

```

|

||||

|

||||

在 OS X 示例输出结果如下:

|

||||

|

||||

@ -108,7 +123,9 @@ wtmp begins Sat Oct 3 18:57

|

||||

|

||||

查看关机日期和时间,输入:

|

||||

|

||||

`$ last shutdown`

|

||||

```

|

||||

$ last shutdown

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

@ -130,7 +147,7 @@ wtmp begins Sat Oct 3 18:57

|

||||

|

||||

### 如何查看是谁重启和关闭机器?

|

||||

|

||||

你需要[启动 psacct 服务然后运行下面的命令][4]来查看执行过的命令,同时包括用户名,在终端输入 [lastcomm][5] 命令查看信息

|

||||

你需要[启用 psacct 服务然后运行下面的命令][4]来查看执行过的命令(包括用户名),在终端输入 [lastcomm][5] 命令查看信息

|

||||

|

||||

```

|

||||

# lastcomm userNameHere

|

||||

@ -138,9 +155,10 @@ wtmp begins Sat Oct 3 18:57

|

||||

# lastcomm | more

|

||||

# lastcomm reboot

|

||||

# lastcomm shutdown

|

||||

### OR see both reboot and shutdown time

|

||||

### 或者查看重启和关机时间

|

||||

# lastcomm | egrep 'reboot|shutdown'

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

```

|

||||

@ -152,13 +170,11 @@ shutdown S root pts/1 0.00 secs Sun Dec 27 23:45

|

||||

|

||||

### 参见

|

||||

|

||||

* 更多信息可以查看 man 手册( man last )和参考文章 [如何在 Linux 服务器上使用 tuptime 命令查看历史和统计的正常的运行时间][6].

|

||||

|

||||

* 更多信息可以查看 man 手册(`man last`)和参考文章 [如何在 Linux 服务器上使用 tuptime 命令查看历史和统计的正常的运行时间][6]。

|

||||

|

||||

### 关于作者

|

||||

|

||||

作者是 nixCraft 的创立者同时也是一名经验丰富的系统管理员,也是 Linux,类 Unix 操作系统 shell 脚本的培训师。他曾与全球各行各业的客户工作过,包括 IT,教育,国防和空间研究以及非营利部门等等。你可以在 [Twitter][7] ,[Facebook][8],[Google+][9] 关注他。

|

||||

|

||||

作者是 nixCraft 的创立者,同时也是一名经验丰富的系统管理员,也是 Linux,类 Unix 操作系统 shell 脚本的培训师。他曾与全球各行各业的客户工作过,包括 IT,教育,国防和空间研究以及非营利部门等等。你可以在 [Twitter][7]、[Facebook][8]、[Google+][9] 关注他。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -167,7 +183,7 @@ via: https://www.cyberciti.biz/tips/linux-last-reboot-time-and-date-find-out.htm

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[amwps290](https://github.com/amwps290)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,158 @@

|

||||

在 Linux 上检测 IDE/SATA SSD 硬盘的传输速度

|

||||

======

|

||||

|

||||

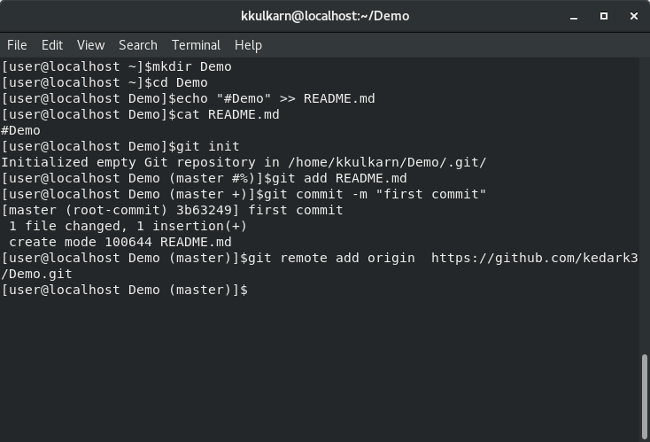

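Do you know how fast your hard disk transfers data under Linux? Without opening the computer case or rack, can you tell whether it is running at SATA I (150 MB/s), SATA II (300 MB/s), or SATA III (6.0 Gb/s)?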

|

||||

|

||||

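You can use the `hdparm` and `dd` commands to check your disk speed. `hdparm` provides a command-line interface to the various hard disk ioctls supported by the Linux ATA/IDE/SATA device driver subsystem. Some options only work correctly with recent kernels, so make sure a current kernel is installed. I also recommend compiling `hdparm` against the include files from the latest kernel source.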

|

||||

|

||||

### 如何使用 hdparm 命令来检测硬盘的传输速度

|

||||

|

||||

以 root 管理员权限登录并执行命令:

|

||||

|

||||

```

|

||||

$ sudo hdparm -tT /dev/sda

|

||||

```

|

||||

|

||||

或者,

|

||||

|

||||

```

|

||||

$ sudo hdparm -tT /dev/hda

|

||||

```

|

||||

|

||||

输出:

|

||||

|

||||

```

|

||||

/dev/sda:

|

||||

Timing cached reads: 7864 MB in 2.00 seconds = 3935.41 MB/sec

|

||||

Timing buffered disk reads: 204 MB in 3.00 seconds = 67.98 MB/sec

|

||||

```

|

||||

|

||||

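For more accurate results, this operation should be **repeated 2-3 times**. The cached-reads figure (`-T`) shows the speed of reading directly from the Linux buffer cache without any disk access; it is essentially an indication of the throughput of the processor, cache, and memory of the system under test. The buffered-disk-reads figure (`-t`) shows how fast the drive itself can sustain sequential reads. Here is a [for loop example][1] that runs the test 3 times in a row: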

|

||||

|

||||

```

|

||||

for i in 1 2 3; do hdparm -tT /dev/hda; done

|

||||

```

|

||||

|

||||

这里,

|

||||

|

||||

* `-t` :执行设备读取时序

|

||||

* `-T` :执行缓存读取时间

|

||||

* `/dev/sda` :硬盘设备文件
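Repeating the test only helps if the runs are then combined. The sketch below averages the buffered disk read figures from several runs; `avg_buffered_reads` is a hypothetical helper name, the first sample line comes from the output above, and the other two are made-up stand-ins for further runs.

```shell
# avg_buffered_reads: read `hdparm -t` output on stdin and print the mean MB/sec.
# Hypothetical helper; assumes lines shaped like the sample output in this article.
avg_buffered_reads() {
  awk '/Timing buffered disk reads/ { sum += $(NF - 1); runs++ }
       END { if (runs) printf "%.2f\n", sum / runs }'
}

# The first line is from the article; the other two are invented sample values.
sample_runs='
 Timing buffered disk reads: 204 MB in  3.00 seconds =  67.98 MB/sec
 Timing buffered disk reads: 210 MB in  3.00 seconds =  70.02 MB/sec
 Timing buffered disk reads: 207 MB in  3.00 seconds =  69.00 MB/sec'
average=$(printf '%s\n' "$sample_runs" | avg_buffered_reads)
echo "$average MB/sec"
```

On a real system the same function could be fed directly from the loop, for example `for i in 1 2 3; do sudo hdparm -t /dev/sda; done | avg_buffered_reads`.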

|

||||

|

||||

|

||||

要 [找出 SATA 硬盘的连接速度][2] ,请输入:

|

||||

|

||||

```

|

||||

sudo hdparm -I /dev/sda | grep -i speed

|

||||

```

|

||||

|

||||

输出:

|

||||

|

||||

```

|

||||

* Gen1 signaling speed (1.5Gb/s)

|

||||

* Gen2 signaling speed (3.0Gb/s)

|

||||

* Gen3 signaling speed (6.0Gb/s)

|

||||

|

||||

```

|

||||

|

||||

以上输出表明我的硬盘可以使用 1.5Gb/s、3.0Gb/s 或 6.0Gb/s 的速度。请注意,您的 BIOS/主板必须支持 SATA-II/III 才行:

|

||||

|

||||

```

|

||||

$ dmesg | grep -i sata | grep 'link up'

|

||||

```

|

||||

|

||||

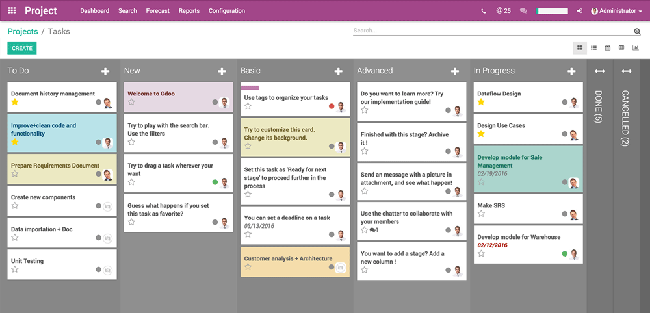

[![Linux Check IDE SATA SSD Hard Disk Transfer Speed][3]][3]
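The link speeds reported above are raw signalling rates in gigabits per second, while the SATA I and SATA II figures in the introduction are in megabytes per second. SATA uses 8b/10b encoding, so roughly ten bits on the wire carry one byte of data. The sketch below does that conversion; `sata_payload_mbs` is a hypothetical helper name, and the result is a theoretical ceiling, not a measured speed.

```shell
# sata_payload_mbs GBPS: convert a SATA signalling rate in Gb/s to the
# approximate maximum payload rate in MB/s (8b/10b: 10 line bits per data byte).
sata_payload_mbs() {
  awk -v gbps="$1" 'BEGIN { printf "%.0f\n", gbps * 1000 / 10 }'
}

for rate in 1.5 3.0 6.0; do
  echo "$rate Gb/s -> $(sata_payload_mbs "$rate") MB/s"
done
```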

|

||||

|

||||

### dd 命令

|

||||

|

||||

你使用 `dd` 命令也可以获取到相应的速度信息:

|

||||

|

||||

```

|

||||

dd if=/dev/zero of=/tmp/output.img bs=8k count=256k

|

||||

rm /tmp/output.img

|

||||

```

|

||||

|

||||

输出:

|

||||

|

||||

```

|

||||

262144+0 records in

|

||||

262144+0 records out

|

||||

2147483648 bytes (2.1 GB) copied, 23.6472 seconds, 90.8 MB/s

|

||||

```
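The rate on the last line of that output can be checked by hand from the `bs` and `count` values. The sketch below repeats the arithmetic with the figures from the run above; the variable names are illustrative only.

```shell
# Figures taken from the dd run above.
block_size=$((8 * 1024))      # bs=8k
block_count=$((256 * 1024))   # count=256k
seconds=23.6472

total_bytes=$((block_size * block_count))
# dd reports decimal megabytes (1 MB = 1,000,000 bytes).
rate=$(awk -v b="$total_bytes" -v s="$seconds" 'BEGIN { printf "%.1f", b / s / 1000000 }')
echo "$total_bytes bytes in $seconds s = $rate MB/s"
```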

|

||||

|

||||

下面是 [推荐的 dd 命令参数][4]:

|

||||

|

||||

```

|

||||

dd if=/dev/input.file of=/path/to/output.file bs=block-size count=number-of-blocks oflag=dsync

|

||||

|

||||

## GNU dd syntax ##

|

||||

dd if=/dev/zero of=/tmp/test1.img bs=1G count=1 oflag=dsync

|

||||

|

||||

## OR alternate syntax for GNU/dd ##

|

||||

dd if=/dev/zero of=/tmp/testALT.img bs=1G count=1 conv=fdatasync

|

||||

```

|

||||

|

||||

Here is the output of the last command above (the `conv=fdatasync` variant):

|

||||

|

||||

```

|

||||

1+0 records in

|

||||

1+0 records out

|

||||

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 4.23889 s, 253 MB/s

|

||||

```

|

||||

|

||||

### “磁盘与存储” - GUI 工具

|

||||

|

||||

您还可以使用位于“系统>管理>磁盘实用程序”菜单中的磁盘实用程序。请注意,在最新版本的 Gnome 中,它简称为“磁盘”。

|

||||

|

||||

#### 如何使用 Linux 上的“磁盘”测试我的硬盘的性能?

|

||||

|

||||

要测试硬盘的速度:

|

||||

|

||||

1. 从“活动概览”中打开“磁盘”(按键盘上的 super 键并键入“disks”)

|

||||

2. 从“左侧窗格”的列表中选择“磁盘”

|

||||

3. 选择菜单按钮并从菜单中选择“测试磁盘性能……”

|

||||

4. 单击“开始性能测试……”并根据需要调整传输速率和访问时间参数。

|

||||

5. 选择“开始性能测试”来测试从磁盘读取数据的速度。需要管理权限请输入密码。

|

||||

|

||||

以上操作的快速视频演示:

|

||||

|

||||

https://www.cyberciti.biz/tips/wp-content/uploads/2007/10/disks-performance.mp4

|

||||

|

||||

#### 只读 Benchmark (安全模式下)

|

||||

|

||||

然后,选择 > 只读:

|

||||

|

||||

![Fig.01: Linux Benchmarking Hard Disk Read Only Test Speed][5]

|

||||

|

||||

上述选项不会销毁任何数据。

|

||||

|

||||

#### 读写的 Benchmark(所有数据将丢失,所以要小心)

|

||||

|

||||

Go to “System > Administration > Disk Utility”, click “Benchmark”, then click the “Start Read/Write Benchmark” button:

|

||||

|

||||

![Fig.02:Linux Measuring read rate, write rate and access time][6]

|

||||

|

||||

### 作者

|

||||

|

||||

作者是 nixCraft 的创造者,是经验丰富的系统管理员,也是 Linux 操作系统/ Unix shell 脚本的培训师。他曾与全球客户以及 IT,教育,国防和空间研究以及非营利部门等多个行业合作。在Twitter,Facebook和Google+上关注他。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cyberciti.biz/tips/how-fast-is-linux-sata-hard-disk.html

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[MonkeyDEcho](https://github.com/MonkeyDEcho)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.cyberciti.biz/

|

||||

[1]:https://www.cyberciti.biz/faq/bash-for-loop/

|

||||

[2]:https://www.cyberciti.biz/faq/linux-command-to-find-sata-harddisk-link-speed/

|

||||

[3]:https://www.cyberciti.biz/tips/wp-content/uploads/2007/10/Linux-Check-IDE-SATA-SSD-Hard-Disk-Transfer-Speed.jpg

|

||||

[4]:https://www.cyberciti.biz/faq/howto-linux-unix-test-disk-performance-with-dd-command/

|

||||

[5]:https://www.cyberciti.biz/media/new/tips/2007/10/Linux-Hard-Disk-Speed-Benchmark.png (Linux Benchmark Hard Disk Speed)

|

||||

[6]:https://www.cyberciti.biz/media/new/tips/2007/10/Linux-Hard-Disk-Read-Write-Benchmark.png (Linux Hard Disk Benchmark Read / Write Rate and Access Time)

|

||||

[7]:https://twitter.com/nixcraft

|

||||

[8]:https://facebook.com/nixcraft

|

||||

[9]:https://plus.google.com/+CybercitiBiz

|

||||

100

published/20170511 Working with VI editor - The Basics.md

Normal file


@ -0,0 +1,100 @@

|

||||

使用 Vi/Vim 编辑器:基础篇

|

||||

=========

|

||||

|

||||

VI 编辑器是一个基于命令行的、功能强大的文本编辑器,最早为 Unix 系统开发,后来也被移植到许多的 Unix 和 Linux 发行版上。

|

||||

|

||||

在 Linux 上还存在着另一个 VI 编辑器的高阶版本 —— VIM(也被称作 VI IMproved)。VIM 只是在 VI 已经很强的功能上添加了更多的功能,这些功能有:

|

||||

|

||||

- 支持更多 Linux 发行版,

|

||||

- 支持多种编程语言,包括 python、c++、perl 等语言的代码块折叠,语法高亮,

|

||||

- 支持通过多种网络协议,包括 http、ssh 等编辑文件,

|

||||

- 支持编辑压缩归档中的文件,

|

||||

- 支持分屏同时编辑多个文件。

|

||||

|

||||

接下来我们会讨论 VI/VIM 的命令以及选项。本文出于教学的目的,我们使用 VI 来举例,但所有的命令都可以被用于 VIM。首先我们先介绍 VI 编辑器的两种模式。

|

||||

|

||||

### 命令模式

|

||||

|

||||

In command mode we can save files, run commands inside VI, copy/cut/paste, and find/replace. When we are in insert mode, pressing the `Escape` (`Esc`) key returns us to command mode.

|

||||

|

||||

### 插入模式

|

||||

|

||||

在插入模式下,我们可以键入文件内容。在命令模式下按下 `i` 进入插入模式。

|

||||

|

||||

### 创建文件

|

||||

|

||||

我们可以通过下述命令建立一个文件(LCTT 译注:如果该文件存在,则编辑已有文件):

|

||||

|

||||

```

|

||||

$ vi filename

|

||||

```

|

||||

|

||||

Once the file is created or opened, we start in command mode and need to switch to insert mode to enter text. Both modes were outlined above.

|

||||

|

||||

### 退出 Vi

|

||||

|

||||

如果是想从插入模式中退出,我们首先需要按下 `Esc` 键进入命令模式。接下来我们可以根据不同的需要分别使用两种命令退出 Vi。

|

||||

|

||||

1. 不保存退出 - 在命令模式中输入 `:q!`

|

||||

2. 保存并退出 - 在命令模式中输入 `:wq`

|

||||

|

||||

### 移动光标

|

||||

|

||||

下面我们来讨论下那些在命令模式中移动光标的命令和选项:

|

||||

|

||||

1. `k` 将光标上移一行

|

||||

2. `j` 将光标下移一行

|

||||

3. `h` 将光标左移一个字母

|

||||

4. `l` 将光标右移一个字母

|

||||

|

||||

注意:如果你想通过一个命令上移或下移多行,或者左移、右移多个字母,你可以使用 `4k` 或者 `5l`,这两条命令会分别上移 4 行或者右移 5 个字母。

|

||||

1. `0` 将光标移动到该行行首

|

||||

2. `$` 将光标移动到该行行尾

|

||||

3. `nG` 将光标移动到第 n 行

|

||||

4. `G` 将光标移动到文件的最后一行

|

||||

5. `{` 将光标移动到上一段

|

||||

6. `}` 将光标移动到下一段

|

||||

|

||||

除此之外还有一些命令可以用于控制光标的移动,但上述列出的这些命令应该就能应付日常工作所需。

|

||||

|

||||

### 编辑文本

|

||||

|

||||

这部分会列出一些用于命令模式的命令,可以进入插入模式来编辑当前文件

|

||||

|

||||

|

||||

1. `i` 在当前光标位置之前插入内容

|

||||

2. `I` 在光标所在行的行首插入内容

|

||||

3. `a` 在当前光标位置之后插入内容

|

||||

4. `A` 在光标所在行的行尾插入内容

|

||||

5. `o` 在当前光标所在行之后添加一行

|

||||

6. `O` 在当前光标所在行之前添加一行

|

||||

|

||||

|

||||

### 删除文本

|

||||

|

||||

|

||||

以下的这些命令都只能在命令模式下使用,所以首先需要按下 `Esc` 进入命令模式,如果你正处于插入模式:

|

||||

|

||||

1. `dd` 删除光标所在的整行内容,可以在 `dd` 前增加数字,比如 `2dd` 可以删除从光标所在行开始的两行

|

||||

2. `d$` 删除从光标所在位置直到行尾

|

||||

3. `d^` 删除从光标所在位置直到行首

|

||||

4. `dw` 删除从光标所在位置直到下一个词开始的所有内容

|

||||

|

||||

### 复制与黏贴

|

||||

|

||||

1. `yy` 复制当前行,在 `yy` 前添加数字可以复制多行

|

||||

2. `p` 在光标之后粘贴复制行

|

||||

3. `P` 在光标之前粘贴复制行

|

||||

|

||||

上述就是可以在 VI/VIM 编辑器上使用的一些基本命令。在未来的教程中还会继续教授一些更高级的命令。如果有任何疑问和建议,请在下方评论区留言。

|

||||

|

||||

---------

|

||||

via: http://linuxtechlab.com/working-vi-editor-basics/

|

||||

|

||||

作者:[Shusain][a]

|

||||

译者:[ljgibbslf](https://github.com/ljgibbslf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 LCTT 原创编译,Linux中国 荣誉推出

|

||||

|

||||

[a]: http://linuxtechlab.com/author/shsuain/

|

||||

@ -0,0 +1,312 @@

|

||||

使用 Ansible 让你的系统管理自动化

|

||||

======

|

||||

|

||||

>精进你的系统管理能力和 Linux 技能,学习如何设置工具来简化管理多台机器。

|

||||

|

||||

|

||||

|

||||

你是否想精进你的系统管理能力和 Linux 技能?也许你的本地局域网上跑了一些东西,而你又想让生活更轻松一点--那该怎么办呢?在本文中,我会向你演示如何设置工具来简化管理多台机器。

|

||||

|

||||

远程管理工具有很多,SaltStack、Puppet、Chef,以及 Ansible 都是很流行的选择。在本文中,我将重点放在 Ansible 上并会解释它是如何帮到你的,不管你是有 5 台还是 1000 台虚拟机。

|

||||

|

||||

让我们从多机(不管这些机器是虚拟的还是物理的)的基本管理开始。我假设你知道要做什么,有基础的 Linux 管理技能(至少要有能找出执行每个任务具体步骤的能力)。我会向你演示如何使用这一工具,而是否使用它由你自己决定。

|

||||

|

||||

### 什么是 Ansible?

|

||||

|

||||

Ansible 的网站上将之解释为 “一个超级简单的 IT 自动化引擎,可以自动进行云供给、配置管理、应用部署、服务内部编排,以及其他很多 IT 需求。” 通过在一个集中的位置定义好服务器集合,Ansible 可以在多个服务器上执行相同的任务。

|

||||

|

||||

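If you are familiar with Bash `for` loops, you will find that Ansible operates in a similar way. The difference is that Ansible is idempotent: put simply, it generally performs the requested action only when a change would actually result. For example, suppose you ran a Bash for loop to create a user on several machines, like this: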

|

||||

|

||||

```

|

||||

for server in serverA serverB serverC; do ssh ${server} "useradd myuser"; done

|

||||

```

|

||||

|

||||

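This creates the user myuser on serverA, serverB, and serverC; however, the `useradd` command runs every time the loop is executed, whether or not the user already exists. An idempotent system first checks whether the user exists and creates it only if it does not. This is a simple example, of course, but the benefits of idempotent tools become more apparent over time.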
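The check-then-act idea can be tried locally without touching user accounts. The sketch below applies it to a line in a file instead of a user, so it runs without root; `ensure_line` is a hypothetical helper written for this illustration and is not part of Ansible.

```shell
# ensure_line FILE LINE: append LINE to FILE only if it is not already there.
ensure_line() {
  file=$1
  line=$2
  # -x matches whole lines, -F treats LINE as a fixed string, -q stays silent.
  if grep -qxF -- "$line" "$file" 2>/dev/null; then
    echo "ok: already present"
  else
    printf '%s\n' "$line" >> "$file"
    echo "changed: line added"
  fi
}

# Run it twice against a scratch file: only the first call changes anything.
scratch=$(mktemp)
first=$(ensure_line "$scratch" "myuser")
second=$(ensure_line "$scratch" "myuser")
echo "$first"
echo "$second"
line_count=$(wc -l < "$scratch" | tr -d ' ')
rm -f "$scratch"
```

The same pattern for the user example would look something like `id -u myuser >/dev/null 2>&1 || useradd myuser`, which is roughly the check an idempotent tool performs on your behalf.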

|

||||

|

||||

#### Ansible 是如何工作的?

|

||||

|

||||

Ansible 会将 Ansible playbooks 转换成通过 SSH 运行的命令,这在管理类 UNIX 环境时有很多优势:

|

||||

|

||||

1. 绝大多数类 UNIX 机器默认都开了 SSH。

|

||||

2. 依赖 SSH 意味着远程主机不需要有代理。

|

||||

3. 大多数情况下都无需安装额外的软件,Ansible 需要 2.6 或更新版本的 Python。而绝大多数 Linux 发行版默认都安装了这一版本(或者更新版本)的 Python。

|

||||

4. Ansible needs no master node. It can run from any host that has Ansible installed and can reach the targets over SSH.

|

||||

5. 虽然可以在 cron 中运行 Ansible,但默认情况下,Ansible 只会在你明确要求的情况下运行。

|

||||

|

||||

#### 配置 SSH 密钥认证

|

||||

|

||||

使用 Ansible 的一种常用方法是配置无需密码的 SSH 密钥登录以方便管理。(可以使用 Ansible Vault 来为密码等敏感信息提供保护,但这不在本文的讨论范围之内)。现在只需要使用下面命令来生成一个 SSH 密钥,如示例 1 所示。

|

||||

|

||||

```
[09:44 user ~]$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/user/.ssh/id_rsa):
Created directory '/home/user/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/user/.ssh/id_rsa.
Your public key has been saved in /home/user/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:TpMyzf4qGqXmx3aqZijVv7vO9zGnVXsh6dPbXAZ+LUQ user@user-fedora
The key's randomart image is:
+---[RSA 2048]----+
| |
| |
| E |
| o . ..|
| . + S o+.|
| . .o * . .+ooo|
| . .+o o o oo+.*|
|..ooo* o.* .*+|
| . o+*BO.o+ .o|
+----[SHA256]-----+
```

|

||||

|

||||

*示例 1 :生成一个 SSH 密钥*

|

||||

|

||||

|

||||

在示例 1 中,直接按下回车键来接受默认值。任何非特权用户都能生成 SSH 密钥,也能安装到远程系统中任何用户的 SSH 的 `authorized_keys` 文件中。生成密钥后,还需要将之拷贝到远程主机上去,运行下面命令:

|

||||

|

||||

```

|

||||

ssh-copy-id root@servera

|

||||

```

|

||||

|

||||

注意:运行 Ansible 本身无需 root 权限;然而如果你使用非 root 用户,你_需要_为要执行的任务配置合适的 sudo 权限。

|

||||

|

||||

输入 servera 的 root 密码,这条命令会将你的 SSH 密钥安装到远程主机上去。安装好 SSH 密钥后,再通过 SSH 登录远程主机就不再需要输入 root 密码了。

|

||||

|

||||

### 安装 Ansible

|

||||

|

||||

只需要在示例 1 中生成 SSH 密钥的那台主机上安装 Ansible。若你使用的是 Fedora,输入下面命令:

|

||||

|

||||

```

|

||||

sudo dnf install ansible -y

|

||||

```

|

||||

|

||||

若运行的是 CentOS,你需要为 EPEL 仓库配置额外的包:

|

||||

|

||||

```

|

||||

sudo yum install epel-release -y

|

||||

```

|

||||

|

||||

然后再使用 yum 来安装 Ansible:

|

||||

|

||||

```

|

||||

sudo yum install ansible -y

|

||||

```

|

||||

|

||||

对于基于 Ubuntu 的系统,可以从 PPA 上安装 Ansible:

|

||||

|

||||

```

|

||||

sudo apt-get install software-properties-common -y

|

||||

sudo apt-add-repository ppa:ansible/ansible

|

||||

sudo apt-get update

|

||||

sudo apt-get install ansible -y

|

||||

```

|

||||

|

||||

若你使用的是 macOS,那么推荐通过 Python PIP 来安装:

|

||||

|

||||

```

|

||||

sudo pip install ansible

|

||||

```

|

||||

|

||||

对于其他发行版,请参见 [Ansible 安装文档 ][2]。

|

||||

|

||||

### Ansible Inventory

|

||||

|

||||

Ansible 使用一个 INI 风格的文件来追踪要管理的服务器,这种文件被称之为<ruby>库存清单<rt>Inventory</rt></ruby>。默认情况下该文件位于 `/etc/ansible/hosts`。本文中,我使用示例 2 中所示的 Ansible 库存清单来对所需的主机进行操作(为了简洁起见已经进行了裁剪):

|

||||

|

||||

```

|

||||

[arch]

|

||||

nextcloud

|

||||

prometheus

|

||||

desktop1

|

||||

desktop2

|

||||

vm-host15

|

||||

|

||||

[fedora]

|

||||

netflix

|

||||

|

||||

[centos]

|

||||

conan

|

||||

confluence

|

||||

7-repo

|

||||

vm-server1

|

||||

gitlab

|

||||

|

||||

[ubuntu]

|

||||

trusty-mirror

|

||||

nwn

|

||||

kids-tv

|

||||

media-centre

|

||||

nas

|

||||

|

||||

[satellite]

|

||||

satellite

|

||||

|

||||

[ocp]

|

||||

lb00

|

||||

ocp_dns

|

||||

master01

|

||||

app01

|

||||

infra01

|

||||

```

|

||||

|

||||

*示例 2 : Ansible 主机文件*

|

||||

|

||||

每个分组由中括号和组名标识(像这样 `[group1]` ),是应用于一组服务器的任意组名。一台服务器可以存在于多个组中,没有任何问题。在这个案例中,我有根据操作系统进行的分组(`arch`、`ubuntu`、`centos`、`fedora`),也有根据服务器功能进行的分组(`ocp`、`satellite`)。Ansible 主机文件可以处理比这复杂的多的情况。详细内容,请参阅 [库存清单文档][3]。
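To make the group structure concrete, the sketch below pulls the hosts of one group out of a small INI-style inventory with `awk`. It is an illustration only: `hosts_in_group` is a hypothetical helper, it handles just the plain `[group]` plus host-per-line layout shown in Example 2, and it ignores features such as `:children` or `:vars` sections.

```shell
# hosts_in_group INVENTORY GROUP: print the hosts listed under [GROUP].
hosts_in_group() {
  awk -v group="$2" '
    /^\[/ { in_group = ($0 == ("[" group "]")); next }  # a new section header
    in_group && NF && $0 !~ /^#/ { print $1 }            # host lines in that section
  ' "$1"
}

# A cut-down copy of the inventory from Example 2.
inventory=$(mktemp)
printf '[fedora]\nnetflix\n\n[centos]\nconan\nconfluence\n' > "$inventory"
centos_hosts=$(hosts_in_group "$inventory" centos)
echo "$centos_hosts"
rm -f "$inventory"
```

With Ansible installed, `ansible centos --list-hosts` should report the same membership straight from the real inventory.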

|

||||

|

||||

### 运行命令

|

||||

|

||||

将你的 SSH 密钥拷贝到库存清单中所有服务器上后,你就可以开始使用 Ansible 了。Ansible 的一项基本功能就是运行特定命令。语法为:

|

||||

|

||||

```

|

||||

ansible <host-pattern> -a "some command"

|

||||

```

|

||||

例如,假设你想升级所有的 CentOS 服务器,可以运行:

|

||||

|

||||

```

|

||||

ansible centos -a 'yum update -y'

|

||||

```

|

||||

|

||||

_注意:不是必须要根据服务器操作系统来进行分组的。我下面会提到,[Ansible Facts][4] 可以用来收集这一信息;然而,若使用 Facts 的话,则运行特定命令会变得很复杂,因此,如果你在管理异构环境的话,那么为了方便起见,我推荐创建一些根据操作系统来划分的组。_

|

||||

|

||||

这会遍历 `centos` 组中的所有服务器并安装所有的更新。一个更加有用的命令应该是 Ansible 的 `ping` 模块了,可以用来验证服务器是否准备好接受命令了:

|

||||

|

||||

```

|

||||

ansible all -m ping

|

||||

```

|

||||

|

||||

这会让 Ansible 尝试通过 SSH 登录库存清单中的所有服务器。在示例 3 中可以看到 `ping` 命令的部分输出结果。

|

||||

|

||||

```
nwn | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
media-centre | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
nas | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
kids-tv | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
...
```

|

||||

|

||||

*示例 3 :Ansible ping 命令输出*

|

||||

|

||||

运行指定命令的能力有助于完成快速任务(LCTT 译注:应该指的那种一次性任务),但是如果我想在以后也能以同样的方式运行同样的任务那该怎么办呢?Ansible [playbooks][5] 就是用来做这个的。

|

||||

|

||||

### 复杂任务使用 Ansible playbooks

|

||||

|

||||

An Ansible playbook is a YAML file containing Ansible instructions. I won't cover more advanced topics such as Roles and Templates here. If you are interested, read the [Ansible documentation][6].

|

||||

|

||||

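In the previous section I recommended using the `ssh-copy-id` command to distribute your SSH key; however, this article is about accomplishing tasks in a consistent, repeatable way. Example 4 shows one way to do this idempotently, so that the result is correct even if the SSH key already exists on the target host.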

|

||||

|

||||

```
---
- hosts: all
  gather_facts: false
  vars:
    ssh_key: '/root/playbooks/files/laptop_ssh_key'
  tasks:
    - name: copy ssh key
      authorized_key:
        key: "{{ lookup('file', ssh_key) }}"
        user: root
```

|

||||

|

||||

*示例 4:Ansible 剧本 “push_ssh_keys.yaml”*

|

||||

|

||||

`- hosts:` 行标识了这个剧本应该在那个主机组上执行。在这个例子中,它会检查库存清单里的所有主机。

|

||||

|

||||

`gather_facts:` 行指明 Ansible 是否去搜索每个主机的详细信息。我稍后会做一次更详细的检查。现在为了节省时间,我们设置 `gather_facts` 为 `false`。

|

||||

|

||||

`vars:` 部分,顾名思义,就是用来定义剧本中所用变量的。在示例 4 的这个简短剧本中其实不是必要的,但是按惯例我们还是设置了一个变量。

|

||||

|

||||

Finally, the section marked `tasks:` is where the main instructions go. Each task has a `- name:`, which Ansible displays while running the playbook.

|

||||

|

||||

`authorized_key:` 是剧本所使用 Ansible 模块的名字。可以通过命令 `ansible-doc -a` 来查询 Ansible 模块的相关信息; 不过通过网络浏览器查看 [文档 ][7] 可能更方便一些。[authorized_key 模块][8] 有很多很好的例子可以参考。要运行示例 4 中的剧本,只要运行 `ansible-playbook` 命令就行了:

|

||||

|

||||

```

|

||||

ansible-playbook push_ssh_keys.yaml

|

||||

```

|

||||

|

||||

如果是第一次添加 SSH 密钥,SSH 会提示你输入 root 用户的密码。

|

||||

|

||||

现在 SSH 密钥已经传输到服务器中去了,可以来做点有趣的事了。

|

||||

|

||||

### 使用 Ansible 收集信息

|

||||

|

||||

Ansible 能够收集目标系统的各种信息。如果你的主机数量很多,那它会特别的耗时。按我的经验,每台主机大概要花个 1 到 2 秒钟,甚至更长时间;然而有时收集信息是有好处的。考虑下面这个剧本,它会禁止 root 用户通过密码远程登录系统:

|

||||

|

||||

```
---
- hosts: all
  gather_facts: true
  vars:
  tasks:
    - name: Enabling ssh-key only root access
      lineinfile:
        dest: /etc/ssh/sshd_config
        regexp: '^PermitRootLogin'
        line: 'PermitRootLogin without-password'
      notify:
        - restart_sshd
        - restart_ssh

  handlers:
    - name: restart_sshd
      service:
        name: sshd
        state: restarted
        enabled: true
      when: ansible_distribution == 'RedHat'
    - name: restart_ssh
      service:
        name: ssh
        state: restarted
        enabled: true
      when: ansible_distribution == 'Debian'
```

|

||||

|

||||

*示例 5:锁定 root 的 SSH 访问*

|

||||

|

||||

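In Example 5 the `sshd_config` file is modified, and the restart handlers are [conditional][9]: each one runs only when a matching distribution is found. In this case, Red Hat-based and Debian-based distributions name the SSH service differently, which is the purpose of the conditional statements. There are other ways to achieve the same effect, but this example demonstrates Ansible facts nicely. To see all the facts Ansible gathers by default, you can run the `setup` module locally: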

|

||||

|

||||

```

|

||||

ansible localhost -m setup |less

|

||||

```

|

||||

|

||||

Ansible 收集的所有信息都能用来做判断,就跟示例 4 中 `vars:` 部分所演示的一样。所不同的是,Ansible 信息被看成是**内置** 变量,无需由系统管理员定义。
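For readers more at home in shell, the two `when:` conditions in Example 5 amount to a lookup from distribution name to service name. The sketch below writes that lookup as a `case` statement; `ssh_service_name` is a hypothetical helper, and the two names it accepts simply mirror the values used in Example 5.

```shell
# ssh_service_name DISTRIBUTION: print the SSH service name used in Example 5.
ssh_service_name() {
  case "$1" in
    RedHat) echo "sshd" ;;
    Debian) echo "ssh" ;;
    *)      echo "unknown distribution: $1" >&2; return 1 ;;
  esac
}

ssh_service_name RedHat
ssh_service_name Debian
```

One caveat worth checking against the `setup` output: on CentOS or Ubuntu hosts the `ansible_distribution` fact typically reports `CentOS` or `Ubuntu`, while `ansible_os_family` is the fact that groups them under `RedHat` and `Debian`, so the conditions may need adjusting for such hosts.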

|

||||

|

||||

### Going further

|

||||

|

||||

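Now you can start exploring Ansible and creating your own playbooks. Ansible is a tool with depth, complexity, and flexibility, and a single article cannot cover it fully. I hope this article has sparked your interest and encourages you to explore what Ansible can do. In the next article, I will cover the `Copy`, `systemd`, `service`, `apt`, `yum`, `virt`, and `user` modules. We can combine these modules in playbooks, and also set up a simple Git server to store all of those playbooks.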

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/7/automate-sysadmin-ansible

|

||||

|

||||

作者:[Steve Ovens][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/stratusss

|

||||

[1]:https://opensource.com/tags/ansible

|

||||

[2]:http://docs.ansible.com/ansible/intro_installation.html

|

||||

[3]:http://docs.ansible.com/ansible/intro_inventory.html

|

||||

[4]:http://docs.ansible.com/ansible/playbooks_variables.html#information-discovered-from-systems-facts

|

||||

[5]:http://docs.ansible.com/ansible/playbooks.html

|

||||

[6]:http://docs.ansible.com/ansible/playbooks_roles.html

|

||||

[7]:http://docs.ansible.com/ansible/modules_by_category.html

|

||||

[8]:http://docs.ansible.com/ansible/authorized_key_module.html

|

||||

[9]:http://docs.ansible.com/ansible/lineinfile_module.html

|

||||

@ -1,13 +1,15 @@

|

||||

使用 TLS 加密保护 VNC 服务器的简单指南

|

||||

======

|

||||

在本教程中,我们将学习使用 TLS 加密安装 VNC 服务器并保护 VNC 会话。

|

||||

此方法已经在 CentOS 6&7 上测试过了,但是也可以在其他的版本/操作系统上运行(RHEL、Scientific Linux 等)。

|

||||

|

||||

在本教程中,我们将学习安装 VNC 服务器并使用 TLS 加密保护 VNC 会话。

|

||||

|

||||

此方法已经在 CentOS 6&7 上测试过了,但是也可以在其它的版本/操作系统上运行(RHEL、Scientific Linux 等)。

|

||||

|

||||

**(推荐阅读:[保护 SSH 会话终极指南][1])**

|

||||

|

||||

### 安装 VNC 服务器

|

||||

|

||||

在机器上安装 VNC 服务器之前,请确保我们有一个可用的 GUI。如果机器上还没有安装 GUI,我们可以通过执行以下命令来安装:

|

||||

在机器上安装 VNC 服务器之前,请确保我们有一个可用的 GUI(图形用户界面)。如果机器上还没有安装 GUI,我们可以通过执行以下命令来安装:

|

||||

|

||||

```

|

||||

yum groupinstall "GNOME Desktop"

|

||||

@ -38,7 +40,7 @@ yum groupinstall "GNOME Desktop"

|

||||

现在我们需要编辑 VNC 配置文件:

|

||||

|

||||

```

|

||||

**# vim /etc/sysconfig/vncservers**

|

||||

# vim /etc/sysconfig/vncservers

|

||||

```

|

||||

|

||||

并添加下面这几行:

|

||||

@ -63,7 +65,7 @@ VNCSERVERARGS[1]= "-geometry 1024×768″

|

||||

|

||||

#### CentOS 7

|

||||

|

||||

在 CentOS 7 上,/etc/sysconfig/vncservers 已经改为 /lib/systemd/system/vncserver@.service。我们将使用这个配置文件作为参考,所以创建一个文件的副本,

|

||||

在 CentOS 7 上,`/etc/sysconfig/vncservers` 已经改为 `/lib/systemd/system/vncserver@.service`。我们将使用这个配置文件作为参考,所以创建一个文件的副本,

|

||||

|

||||

```

|

||||

# cp /lib/systemd/system/vncserver@.service /etc/systemd/system/vncserver@:1.service

|

||||

@ -85,8 +87,8 @@ PIDFile=/home/vncuser/.vnc/%H%i.pid

|

||||

保存文件并退出。接下来重启服务并在启动时启用它:

|

||||

|

||||

```

|

||||

systemctl restart[[email protected]][2]:1.service

|

||||

systemctl enable[[email protected]][2]:1.service

|

||||

# systemctl restart vncserver@:1.service

|

||||

# systemctl enable vncserver@:1.service

|

||||

```

|

||||

|

||||

现在我们已经设置好了 VNC 服务器,并且可以使用 VNC 服务器的 IP 地址从客户机连接到它。但是,在此之前,我们将使用 TLS 加密保护我们的连接。

|

||||

@ -105,7 +107,9 @@ systemctl enable[[email protected]][2]:1.service

|

||||

|

||||

现在,我们可以使用客户机上的 VNC 浏览器访问服务器,使用以下命令以安全连接启动 vnc 浏览器:

|

||||

|

||||

**# vncviewer -SecurityTypes=VeNCrypt,TLSVnc 192.168.1.45:1**

|

||||

```

|

||||

# vncviewer -SecurityTypes=VeNCrypt,TLSVnc 192.168.1.45:1

|

||||

```

|

||||

|

||||

这里,192.168.1.45 是 VNC 服务器的 IP 地址。

|

||||

|

||||

@ -115,14 +119,13 @@ systemctl enable[[email protected]][2]:1.service

|

||||

|

||||

这篇教程就完了,欢迎随时使用下面的评论栏提交你的建议或疑问。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxtechlab.com/secure-vnc-server-tls-encryption/

|

||||

|

||||

作者:[Shusain][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -3,31 +3,33 @@

|

||||

|

||||

|

||||

|

||||

在本教程中,我们将讨论如何在 Arch Linux 中设置日语环境。在其他类 Unix 操作系统中,设置日文布局并不是什么大不了的事情。你可以从设置中轻松选择日文键盘布局。然而,在 Arch Linux 下有点困难,ArchWiki 中没有合适的文档。如果你正在使用 Arch Linux 和/或其衍生产品如 Antergos,Manajaro Linux,请遵循本指南在 Arch Linux 及其衍生系统中使用日语。

|

||||

在本教程中,我们将讨论如何在 Arch Linux 中设置日语环境。在其他类 Unix 操作系统中,设置日文布局并不是什么大不了的事情。你可以从设置中轻松选择日文键盘布局。然而,在 Arch Linux 下有点困难,ArchWiki 中没有合适的文档。如果你正在使用 Arch Linux 和/或其衍生产品如 Antergos、Manajaro Linux,请遵循本指南以在 Arch Linux 及其衍生系统中使用日语。

|

||||

|

||||

### 在Arch Linux中设置日语环境

|

||||

### 在 Arch Linux 中设置日语环境

|

||||

|

||||

首先,为了正确查看日语字符,先安装必要的日语字体:

|

||||

|

||||

首先,为了正确查看日语 ASCII 格式,先安装必要的日语字体:

|

||||

```

|

||||

sudo pacman -S adobe-source-han-sans-jp-fonts otf-ipafont

|

||||

```

|

||||

```

|

||||

pacaur -S ttf-monapo

|

||||

```

|

||||

|

||||

如果你尚未安装 pacaur,请参阅[**此链接**][1]。

|

||||

如果你尚未安装 `pacaur`,请参阅[此链接][1]。

|

||||

|

||||

确保你在 `/etc/locale.gen` 中注释掉了(添加 `#` 注释)下面的行。

|

||||

|

||||

确保你在 **/etc/locale.gen** 中注释掉了(添加 # 注释)下面的行。

|

||||

```

|

||||

#ja_JP.UTF-8

|

||||

```

|

||||

|

||||

然后,安装 **iBus** 和 **ibus-anthy**。对于那些想知道原因的,iBus 是类 Unix 系统的输入法(IM)框架,而 ibus-anthy 是 iBus 的日语输入法。

|

||||

然后,安装 iBus 和 ibus-anthy。对于那些想知道原因的,iBus 是类 Unix 系统的输入法(IM)框架,而 ibus-anthy 是 iBus 的日语输入法。

|

||||

|

||||

```

|

||||

sudo pacman -S ibus ibus-anthy

|

||||

```

|

||||

|

||||

在 **~/.xprofile** 中添加以下几行(如果不存在,创建一个):

|

||||

在 `~/.xprofile` 中添加以下几行(如果不存在,创建一个):

|

||||

|

||||

```

|

||||

# Settings for Japanese input

|

||||

export GTK_IM_MODULE='ibus'

|

||||

@ -38,21 +40,21 @@ export XMODIFIERS=@im='ibus'

|

||||

ibus-daemon -drx

|

||||

```

|

||||

|

||||

~/.xprofile 允许我们在 X 用户会话开始时且在窗口管理器启动之前执行命令。

|

||||

|

||||

`~/.xprofile` 允许我们在 X 用户会话开始时且在窗口管理器启动之前执行命令。

|

||||

|

||||

保存并关闭文件。重启 Arch Linux 系统以使更改生效。

|

||||

|

||||

登录到系统后,右键单击任务栏中的 iBus 图标,然后选择 **Preferences**。如果不存在,请从终端运行以下命令来启动 iBus 并打开偏好设置窗口。

|

||||

登录到系统后,右键单击任务栏中的 iBus 图标,然后选择 “Preferences”。如果不存在,请从终端运行以下命令来启动 iBus 并打开偏好设置窗口。

|

||||

|

||||

```

|

||||

ibus-setup

|

||||

```

|

||||

|

||||

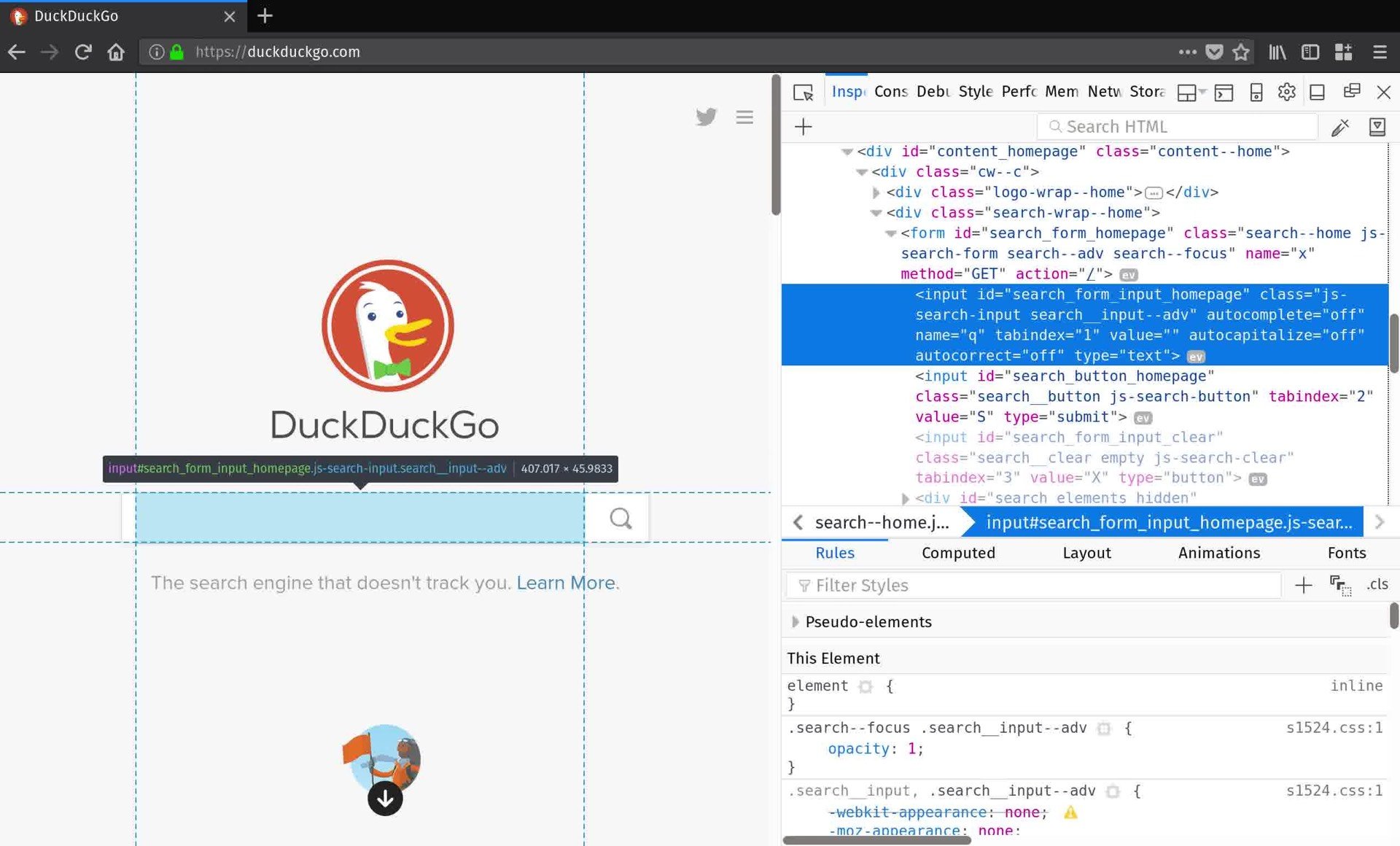

选择 Yes 来启动 iBus。你会看到一个像下面的页面。点击 Ok 关闭它。

|

||||

选择 “Yes” 来启动 iBus。你会看到一个像下面的页面。点击 Ok 关闭它。

|

||||

|

||||

[![][2]][3]

|

||||

|

||||

现在,你将看到 iBus 偏好设置窗口。进入 **Input Method** 选项卡,然后单击 “Add” 按钮。

|

||||

现在,你将看到 iBus 偏好设置窗口。进入 “Input Method” 选项卡,然后单击 “Add” 按钮。

|

||||

|

||||

[![][2]][4]

|

||||

|

||||

@ -60,29 +62,27 @@ ibus-setup

|

||||

|

||||

[![][2]][5]

|

||||

|

||||

然后,选择 “Anthy” 并点击添加。

|

||||

然后,选择 “Anthy” 并点击添加:

|

||||

|

||||

[![][2]][6]

|

||||

|

||||

就是这样了。你现在将在输入法栏看到 “Japanese - Anthy”。

|

||||

就是这样了。你现在将在输入法栏看到 “Japanese - Anthy”:

|

||||

|

||||

[![][2]][7]

|

||||

|

||||

根据你的需求在偏好设置中更改日语输入法的选项(点击 Japanese - Anthy -> Preferences)。

|

||||

根据你的需求在偏好设置中更改日语输入法的选项(点击 “Japanese-Anthy” -> “Preferences”)。

|

||||

|

||||

[![][2]][8]

|

||||

|

||||

你还可以在键盘绑定中编辑默认的快捷键。完成所有更改后,点击应用并确定。就是这样。从任务栏中的 iBus 图标中选择日语,或者按下**SUPER 键+空格键**(LCTT译注:SUPER KEY 通常为 Command/Window KEY)来在日语和英语(或者系统中的其他默认语言)之间切换。你可以从 iBus 首选项窗口更改键盘快捷键。

|

||||

|

||||

现在你知道如何在 Arch Linux 及其衍生版中使用日语了。如果你发现我们的指南很有用,那么请您在社交、专业网络上分享,并支持 OSTechNix。

|

||||

|

||||

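You can also edit the default shortcuts in the key bindings. After making all your changes, click Apply and then OK. That's it. Select Japanese from the iBus icon in the taskbar, or press `SUPER` + `Space` (LCTT translator's note: the SUPER key is usually the `Command` or `Windows` key) to switch between Japanese and English (or another default language on your system). You can change the keyboard shortcut from the iBus preferences window.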

|

||||

|

||||

现在你知道如何在 Arch Linux 及其衍生版中使用日语了。如果你发现我们的指南很有用,那么请您在社交、专业网络上分享,并支持我们。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/setup-japanese-language-environment-arch-linux/

|

||||

|

||||

作者:[][a]

|

||||

作者:[SK][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[Locez](https://github.com/locez)

|

||||

|

||||

@ -91,9 +91,9 @@ via: https://www.ostechnix.com/setup-japanese-language-environment-arch-linux/

|

||||

[a]:https://www.ostechnix.com

|

||||

[1]:https://www.ostechnix.com/install-pacaur-arch-linux/

|

||||

[2]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/11/ibus.png ()

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/11/iBus-preferences.png ()

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2017/11/Choose-Japanese.png ()

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2017/11/Japanese-Anthy.png ()

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2017/11/iBus-preferences-1.png ()

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2017/11/ibus-anthy.png ()

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/11/ibus.png

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/11/iBus-preferences.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2017/11/Choose-Japanese.png

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2017/11/Japanese-Anthy.png

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2017/11/iBus-preferences-1.png

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2017/11/ibus-anthy.png

|

||||

@ -0,0 +1,140 @@

|

||||

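The wc command explained for Linux beginners
======

When working on the command line, you may sometimes want to know how many words, bytes, or even newlines a file contains. If you are looking for a tool that does this, you will be glad to know that Linux ships a command-line utility called `wc` that handles all of it. In this article, we discuss the tool through simple, easy-to-follow examples.

Before we begin, note that all the examples in this tutorial were tested on Ubuntu 16.04.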

|

||||

|

||||

### The Linux wc command

The `wc` command prints newline, word, and byte counts for each input file. Here is the syntax of the tool:

```
wc [OPTION]... [FILE]...
```

And here is how the `wc` man page describes it:

```
Print newline, word, and byte counts for each FILE, and a total line if more than one FILE is specified. A word is a non-zero-length sequence of characters delimited by white space. With no FILE, or when FILE is -, read standard input.
```

The following Q&A-style examples should give you a better idea of the basic usage of `wc`.

Note: all the examples use a file named `file.txt` as input. The file contains the following:

```
hi
hello
how are you
thanks.
```
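As a quick sanity check before looking at the individual options, the sample file can be recreated and counted in one go. This is only a sketch: it assumes the file ends with a trailing newline (which is consistent with the 29 bytes reported in Q1) and it writes to a scratch directory created with `mktemp`, a location chosen here purely for illustration.

```shell
# Work in a throwaway directory so nothing in the current directory is overwritten.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Recreate the sample file; each of the four lines ends with a newline.
printf 'hi\nhello\nhow are you\nthanks.\n' > file.txt

# With no options, wc prints lines, words, and bytes, followed by the file name.
wc file.txt
```

On this input the three numbers should be 4, 6, and 29, matching the per-option results in the sections that follow.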

|

||||

|

||||

### Q1. How to print the byte count

Use the `-c` command-line option to print the byte count.

```
wc -c file.txt
```

Here is the output this command produced on our system:

[![How to print the byte count][1]][2]

The file contains 29 bytes.

### Q2. How to print the character count

To print the character count, use the `-m` command-line option.

```
wc -m file.txt
```

Here is the output this command produced on our system:

[![How to print the character count][3]][4]

The file contains 29 characters.
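The byte and character counts agree here only because the sample file is plain ASCII. The sketch below illustrates the difference with a string that contains one two-byte UTF-8 character. The string is made up for illustration, and the `-m` result depends on the active locale, so treat the character count as something that holds in a UTF-8 locale rather than everywhere.

```shell
# 'h\303\251llo' is "héllo": the é is encoded as two bytes (0xC3 0xA9) in UTF-8.
sample_bytes=$(printf 'h\303\251llo\n' | wc -c)

# -m counts characters according to the current locale; in a UTF-8 locale the
# two bytes of é count as one character, in the C locale they count as two.
sample_chars=$(printf 'h\303\251llo\n' | wc -m)

echo "bytes: $sample_bytes"
echo "chars: $sample_chars"
```

The byte count is 7 regardless of locale; the character count is what may vary.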

|

||||

|

||||

### Q3. How to print the newline count

Use the `-l` command-line option to print the number of newlines in the file:

```
wc -l file.txt
```

Here is the output in our case:

[![How to print the newline count][5]][6]

### Q4. How to print the word count

To print the number of words in the file, use the `-w` command-line option.

```
wc -w file.txt
```

In our case, the command produced the following output:

[![How to print the word count][7]][8]

This shows that the file contains 6 words.
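In scripts it is often handy to get the bare number without the file name. One way to sketch this, assuming the same sample content as in the examples above, is to feed the file to `wc` on standard input, in which case there is no name to print. The variable names are illustrative only.

```shell
# Recreate the sample content in a temporary file (path chosen by mktemp).
sample=$(mktemp)
printf 'hi\nhello\nhow are you\nthanks.\n' > "$sample"

# Reading from standard input means wc has no file name to echo back.
line_count=$(wc -l < "$sample")
word_count=$(wc -w < "$sample")

echo "lines: $line_count, words: $word_count"
rm -f "$sample"
```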

|

||||

|

||||

### Q5. How to print the display width or length of the longest line

If you want to print the length of the longest line in the input file, use the `-L` command-line option.

```
wc -L file.txt
```

Here is the result the command produced in our case:

[![How to print the display width or length of the longest line][9]][10]

So the longest line in the file is 11 characters long.

### Q6. How to read input file names from a file

If you have multiple file names and want `wc` to read them from a file, use the `--files0-from` option.

```
wc --files0-from=names.txt
```

[![How to read input file names from a file][11]][12]

As you can see, in this example `wc` printed the line, word, and byte counts for `file.txt`, the file name listed in `names.txt`. Note that for this option to work, the names in the file must be NUL-terminated. You can produce that character by typing `Ctrl + v` followed by `Ctrl + Shift + @`.
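Typing NUL characters by hand is awkward, so a names file is usually easier to generate. The following is a minimal sketch, assuming GNU coreutils `wc` (the `--files0-from` option is a GNU extension) and two hypothetical input files created just for the demonstration.

```shell
# Scratch directory with two small, made-up input files.
workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf 'hi\nhello\nhow are you\nthanks.\n' > file.txt
printf 'one two\n' > other.txt

# printf reuses the format for every argument, so each name is written
# followed by a NUL byte, which is the terminator --files0-from expects.
printf '%s\0' file.txt other.txt > names.txt

# Count both files; with more than one name, wc also prints a total line.
report=$(wc --files0-from=names.txt)
echo "$report"
```

Tools that already emit NUL-separated names, such as `find` with `-print0`, can be piped in directly by passing `--files0-from=-`.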

|

||||

|

||||

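### Conclusion

As you would probably agree, `wc` is a simple command, both to understand and to use. We have covered almost all of its command-line options, so once you have practiced what is shown here, you should be ready to use the tool in your day-to-day work. For more information on `wc`, refer to its [man page][13].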

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/linux-wc-command-explained-for-beginners-6-examples/

|

||||

|

||||

Author: [Himanshu Arora][a]
Translator: [stevenzdg988](https://github.com/stevenzdg988)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://www.howtoforge.com

|

||||

[1]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/wc-c-option.png

|

||||

[2]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/big/wc-c-option.png

|

||||

[3]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/wc-m-option.png

|

||||

[4]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/big/wc-m-option.png

|

||||

[5]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/wc-l-option.png

|

||||

[6]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/big/wc-l-option.png

|

||||

[7]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/wc-w-option.png

|

||||

[8]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/big/wc-w-option.png

|

||||

[9]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/wc-L-option.png

|

||||

[10]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/big/wc-L-option.png

|

||||

[11]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/wc-file0-from-option.png

|

||||

[12]:https://www.howtoforge.com/images/usage_of_pfsense_to_block_dos_attack_/big/wc-file0-from-option.png

|

||||

[13]:https://linux.die.net/man/1/wc

|

||||

@ -1,40 +1,41 @@

|

||||

cURL VS wget:根据两者的差异和使用习惯,你应该选用哪一个?

|

||||

cURL 与 wget:你应该选用哪一个?

|

||||

======

|

||||

|

||||

|

||||

|

||||

当想要直接通过 Linux 命令行下载文件,马上就能想到两个工具:‘wget’和‘cURL’。它们有很多共享的特征,可以很轻易的完成一些相同的任务。

|

||||

当想要直接通过 Linux 命令行下载文件,马上就能想到两个工具:wget 和 cURL。它们有很多一样的特征,可以很轻易的完成一些相同的任务。

|

||||

|

||||

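Although they share some features, they are not identical. The two programs suit different situations, and each has its own strengths in particular scenarios.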

|

||||

|

||||

### cURL vs wget: 相似之处

|

||||

### cURL vs wget: 相似之处

|

||||

|

||||

wget 和 cURL 都可以下载内容。它们的内核就是这么设计的。它们都可以向互联网发送请求并返回请求项。这可以是文件、图片或者是其他诸如网站的原始 HTML 之类。

|

||||

wget 和 cURL 都可以下载内容。它们的核心就是这么设计的。它们都可以向互联网发送请求并返回请求项。这可以是文件、图片或者是其他诸如网站的原始 HTML 之类。

|

||||

|

||||

这两个程序都可以进行 HTTP POST 请求。这意味着它们都可以向网站发送数据,比如说填充表单什么的。

|

||||

|

||||

由于这两者都是命令行工具,它们都被设计成脚本程序。wget 和 cURL 都可以写进你的 [Bash 脚本][1] ,自动与新内容交互,下载所需内容。

|

||||

由于这两者都是命令行工具,它们都被设计成可脚本化。wget 和 cURL 都可以写进你的 [Bash 脚本][1] ,自动与新内容交互,下载所需内容。

|

||||

|

||||

### wget 的优势

|

||||

|

||||

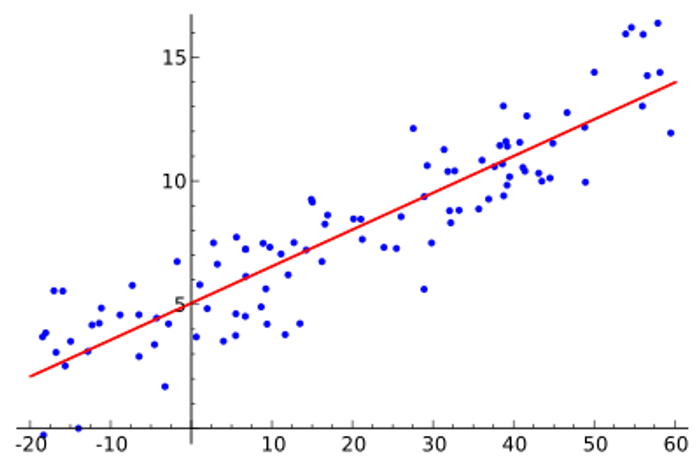

![wget download][2]

|

||||

|

||||

wget 简单直接。这意味着你能享受它超凡的下载速度。wget 是一个独立的程序,无需额外的资源库,更不会做出格的事情。

|

||||

wget 简单直接。这意味着你能享受它超凡的下载速度。wget 是一个独立的程序,无需额外的资源库,更不会做其范畴之外的事情。

|

||||

|

||||

wget 是专业的直接下载程序,支持递归下载。同时,它也允许你在网页或是 FTP 目录下载任何事物。

|

||||

wget 是专业的直接下载程序,支持递归下载。同时,它也允许你下载网页中或是 FTP 目录中的任何内容。

|

||||

|

||||

wget 拥有智能的默认项。他规定了很多在常规浏览器里的事物处理方式,比如 cookies 和重定向,这都不需要额外的配置。可以说,wget 简直就是无需说明,开罐即食!

|

||||

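wget has sensible defaults. It handles many things the way a regular browser does, such as cookies and redirects, without any extra configuration. You could say wget just works out of the box, no manual required!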
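One concrete case where the defaults differ is HTTP redirects: wget follows them on its own, while cURL needs `-L`. The helper below is a small sketch of how a script might hide that difference by using whichever tool is installed. The function name `fetch` and its argument order are my own invention for illustration, not part of either tool.

```shell
# fetch URL OUTPUT_FILE: download URL to OUTPUT_FILE with curl or wget.
fetch() {
    url=$1
    out=$2
    if command -v curl >/dev/null 2>&1; then
        # -f: fail on HTTP errors, -sS: quiet but still show errors,
        # -L: follow redirects (not the default in curl), -o: output file.
        curl -fsSL -o "$out" "$url"
    elif command -v wget >/dev/null 2>&1; then
        # -q: quiet, -O: output file; wget follows redirects by default.
        wget -q -O "$out" "$url"
    else
        echo "fetch: neither curl nor wget is installed" >&2
        return 127
    fi
}
```

A call would look like `fetch https://example.com/ page.html`, where the URL is just a placeholder.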

|

||||

|

||||

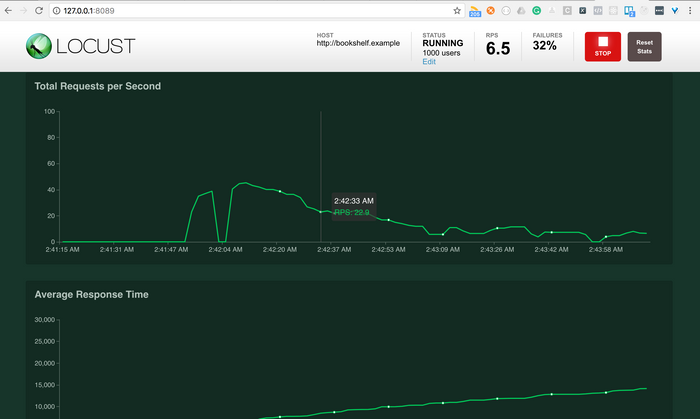

### cURL 优势

|

||||

|

||||

![cURL Download][3]

|

||||

|

||||

cURL是一个多功能工具。当然,他可以下载网络内容,但同时它也能做更多别的事情。

|

||||

cURL is a multi-purpose tool. Sure, it can download content from the network, but it can also do much more.

|

||||

|

||||

cURL 技术支持库是:libcurl。这就意味着你可以基于 cURL 编写整个程序,允许你在 libcurl 库中基于图形环境下载程序,访问它所有的功能。

|

||||

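cURL is powered by a library, libcurl. This means you can write entire programs based on cURL, for example a graphical download manager built on libcurl, with access to all of its functionality.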

|

||||

|

||||

cURL 宽泛的网络协议支持可能是其最大的卖点。cURL 支持访问 HTTP 和 HTTPS 协议,能够处理 FTP 传送。它支持 LDAP 协议,甚至支持 Samba 分享。实际上,你还可以用 cURL 收发邮件。

|

||||

cURL 宽泛的网络协议支持可能是其最大的卖点。cURL 支持访问 HTTP 和 HTTPS 协议,能够处理 FTP 传输。它支持 LDAP 协议,甚至支持 Samba 分享。实际上,你还可以用 cURL 收发邮件。

|

||||

|

||||

cURL 也有一些简洁的安全特性。cURL 支持安装许多 SSL/TLS 库,也支持通过网络代理访问,包括 SOCKS。这意味着,你可以越过 Tor. 使用cURL。

|

||||

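cURL also has some neat security features. It can be built with many SSL/TLS libraries, and it supports access through network proxies, including SOCKS. This means you can use cURL over Tor.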

|

||||

|

||||

cURL 同样支持让数据发送变得更容易的 gzip 压缩技术。

|

||||

|

||||

@ -42,15 +43,15 @@ cURL 同样支持让数据发送变得更容易的 gzip 压缩技术。

|

||||

|

||||

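So should you use cURL or wget? It really depends on what you need. If you want a quick download without worrying about flags, use the lightweight and efficient wget. If you want to do something more complex, your instinct should be to reach for cURL.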

|

||||

|

||||

cURL 支持你做很多事情。你可以把 cURL想象成一个精简的命令行网页浏览器。它支持几乎你能想到的所有协议,可以交互访问几乎所有在线内容。唯一和浏览器不同的是,cURL 不能显示接收到的相应信息。

|

||||

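cURL lets you do a lot. You can think of cURL as a stripped-down command-line web browser. It supports nearly every protocol you can think of and can interact with almost any online content. The main difference from a browser is that cURL does not render the responses it receives.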

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.maketecheasier.com/curl-vs-wget/

|

||||

|

||||

作者:[Nick Congleton][a]

|

||||

译者:[译者ID](https://github.com/CYLeft)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[CYLeft](https://github.com/CYLeft)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

100

published/20180106 Meltdown and Spectre Linux Kernel Status.md

Normal file


@ -0,0 +1,100 @@

|

||||

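Greg: Linux kernel status under Meltdown and Spectre
============================================================

By now (LCTT translator's note: this article was published in early January), everyone knows that something "big" has happened in computer security. Heck, when [the Daily Mail reports on it][1], you know things are bad...

Anyway, I am not going to go into the details of the problems that have been reported, other than to point you to the extremely well-written [Project Zero paper disclosing the issues][2]. They should just hand out the 2018 [Pwnie][3] award right now; it is that good.

If you want the technical details of how we are resolving these issues in the kernel, follow the always awesome [lwn.net][4], which is writing them up.

There is also a good summary of [these announcements][5], including those from various vendors.

As for how the companies involved handled all this, it could be described as a textbook example of how **not** to interact with the Linux kernel community. The people and companies involved know what happened, and I am sure it will all come out eventually, but right now we need to focus on fixing the issues and not on pointing blame, no matter how much we want to.

### What you can do right now

If your Linux systems run a normal Linux distribution, go update your kernel. The distributions should all have the updates already. Then keep updating over the next few weeks: we are still working through a lot of corner-case bugs, because the testing involved is complex given the huge variety of systems and workloads affected. If your distribution does not have kernel updates, I strongly suggest switching distributions right now.

However, many systems do not run a "normal" Linux distribution, for various reasons (rumor has it there are far more of them than systems running the "traditional" enterprise distributions). They rely on the long-term support (LTS) kernel updates, on the regular stable kernel updates, or on kernels that someone put together in-house. For those people, here is the status of this whole mess in the upstream kernels you can use.

### Meltdown – x86

Right now, Linus's kernel tree contains all the fixes we currently know about for handling the Meltdown vulnerability on the x86 architecture. Enable the `CONFIG_PAGE_TABLE_ISOLATION` kernel build option, rebuild, and reboot, and everything should be fine.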
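To see whether a running x86 kernel was built with page table isolation, a rough check like the one below can help. It is only a sketch under several assumptions: that the distribution installs the build config as `/boot/config-$(uname -r)` (many do, not all), and that the kernel exposes `/sys/devices/system/cpu/vulnerabilities/meltdown`, a sysfs file that, as far as I know, was only added in releases later than the ones discussed here. The function name `check_kpti` is made up for this example.

```shell
# Report what can be learned about page table isolation on this machine.
# Every probe is optional: missing files are reported, not treated as errors.
check_kpti() {
    config="/boot/config-$(uname -r)"   # assumed location of the build config
    status="/sys/devices/system/cpu/vulnerabilities/meltdown"

    echo "kernel release: $(uname -r)"

    if [ -r "$config" ]; then
        # Prints e.g. CONFIG_PAGE_TABLE_ISOLATION=y when the option is set.
        grep 'PAGE_TABLE_ISOLATION' "$config" || echo "option not found in $config"
    else
        echo "no readable build config at $config"
    fi

    if [ -r "$status" ]; then
        echo "meltdown status: $(cat "$status")"
    else
        echo "no sysfs vulnerability report on this kernel"
    fi
    return 0
}

check_kpti
```

The output is informational only; the authoritative answer is still the kernel version and configuration your distribution or build actually ships.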

|

||||

|

||||

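However, Linus's tree is currently at 4.15-rc6 plus some outstanding patches. 4.15-rc7 should be out tomorrow with those outstanding patches, which resolve a few issues, but most people do not run a -rc kernel in a "normal" environment.

Because of this, the x86 kernel developers did such a good job developing the page table isolation code that backporting it to the latest stable kernel, 4.14, was almost trivial for me. This means the latest 4.14 release (4.14.12 at the time of writing) is what you should be running. 4.14.13 will be out in a few days with some additional fixes needed by systems that have boot-time problems with 4.14.12 (it is an obvious problem: if your system does not boot, just add the patches that are now queued up).

I would personally like to thank Andy Lutomirski, Thomas Gleixner, Ingo Molnar, Borislav Petkov, Dave Hansen, Peter Zijlstra, Josh Poimboeuf, Juergen Gross, and Linus Torvalds. They developed these fixes and got them merged upstream in a form that made it easy for me to get the stable releases working properly. Without that effort, I do not even want to think about what would have happened.

For the older long-term support (LTS) kernels, I have leaned heavily on the excellent work of Hugh Dickins, Dave Hansen, Jiri Kosina, and Borislav Petkov to bring the same functionality to the 4.4 and 4.9 stable kernel trees. I also had immense help from Guenter Roeck, Kees Cook, Jamie Iles, and many others in tracking down nasty bugs and missing patches. I also want to thank David Woodhouse, Eduardo Valentin, Laura Abbott, and Rik van Riel for their help with backporting and integration; it was essential in numerous tricky places.

These LTS kernels also have the `CONFIG_PAGE_TABLE_ISOLATION` build option, which you should enable to get complete protection.

Because this backport is very different from the mainline version in 4.14 and 4.15, different bugs show up. Right now we know of some VDSO issues that are being worked on, and some unusual virtual machine setups report strange errors, but those are a minority at the moment and should not stop you from upgrading. If you run into problems with these releases, please let us know on the stable kernel mailing list.

If you rely on any kernel tree other than 4.4, 4.9, or 4.14 right now and have no distribution supporting you, you are out of luck. The lack of patches for Meltdown is minor compared with the hundreds of other known exploits and bugs that your kernel version currently contains. That is what you need to worry about most at this moment, so get your systems up to date first.

Also, go yell at the people who forced you to run an obsolete and insecure kernel version; they are among those who need to learn that doing so is a totally reckless act.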

|

||||

|

||||

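### Meltdown – ARM64

Right now, the ARM64 patches for the Meltdown issue are not yet merged into Linus's tree. They are [staged and ready to be merged][6] into 4.16-rc1 once 4.15 is released in a few weeks. Because these patches are not yet in a kernel released by Linus, I cannot backport them into the stable kernel releases (hey, we have these [rules][7] for a reason...).

Because they are not in a released kernel, if your systems rely on ARM64 (for example, Android), I suggest the [Android Common Kernel tree][8]. All of the ARM64 fixes have been merged into the [3.18][9], [4.4][10], and [4.9][11] branches as of now.

I strongly recommend tracking those branches, since more fixes will be added over time as testing continues and as they catch up with what gets merged into the upstream kernel releases, especially because I do not know when these patches will land in the stable and LTS kernel releases.

For the 4.4 and 4.9 LTS kernels, odds are these patches will never be merged, because of the large number of prerequisite patches they require. All of those prerequisite patches have long been merged and tested in the Android common kernels, so I think that, for ARM systems, relying on those kernel branches instead of the LTS releases is the better idea right now.

Also note that I usually merge all LTS kernel updates into those branches within a day or so of their release, so you should follow those branches no matter what, to make sure your ARM systems are up to date and secure.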

|

||||

|

||||

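### Spectre

Now things get "interesting"...

Again, if you are running a distribution kernel, you *might* be covered, because some distributions have merged various patches that they claim mitigate most of the problems here. If you are worried about this class of attack, I suggest updating and testing for yourself.

As for upstream, well, the status is that no fixes for these types of issues have been merged into any upstream tree yet. Numerous patches proposing solutions are floating around on various mailing lists, but they are under heavy development; some of the patch series do not even build or apply to any known tree, the series conflict with each other, and it is the usual mess.

This is because the Spectre issues were the last to be addressed by the kernel developers. All of us were working on the Meltdown issue, and we had no real information about exactly what the Spectre problem was; the patches that were floating around were in even worse shape than what has been posted publicly.

Because of all this, it will take us in the kernel community a few weeks to resolve these issues and get the fixes merged upstream. The fixes are going into various subsystems all over the kernel and will be collected and released in the stable kernel updates as they are merged. So, once again, whether you use a distribution kernel or the LTS and stable kernel releases, you are best off staying up to date.

This is not great news, I know, but it is reality. If it is any consolation, no other operating system seems to have a full solution for these issues either. The whole industry is in the same boat right now, and we just need to wait and let the developers solve the problem as quickly as they can.

The proposed solutions are not trivial, but some of them are very good. New concepts are being created to help resolve these issues, and the Retpoline approach posted by Paul Turner is one example. This will be an area of a lot of research in the coming years: finding ways to mitigate the potential problems in hardware that tries to predict the future before it happens.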

|

||||

|

||||

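### Other architectures

Right now, I have not seen patches for any architecture other than x86 and arm64. There are rumors of patches for some other processor types circulating in some of the enterprise distributions, and hopefully they will surface in the coming weeks and be merged properly upstream. I have no idea when that will happen. If you depend on a specific architecture, I suggest asking on the arch-specific mailing list to get a straight answer.

### Conclusion

Again, update your kernels; do not delay, and do not stop. Updates that resolve these problems will keep coming for a long time. There are also still many other bugs and security issues being fixed in the stable and LTS kernel releases that are entirely independent of these issues, so staying up to date is always a good idea.

Right now, there are a lot of very overworked, grumpy, sleep-deprived, and generally angry kernel developers working as hard as they can to resolve issues that they did not cause at all. Please be considerate of these poor developers. They need all the love, support, and free supply of their favorite beverage that we can give them, so that we all end up with fixed systems as soon as possible.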

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://kroah.com/log/blog/2018/01/06/meltdown-status/

|

||||

|

||||

Author: [Greg Kroah-Hartman][a]
Translator: [hopefully2333](https://github.com/hopefully2333)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://kroah.com

|

||||

[1]:http://www.dailymail.co.uk/sciencetech/article-5238789/Intel-says-security-updates-fix-Meltdown-Spectre.html

|

||||

[2]:https://googleprojectzero.blogspot.fr/2018/01/reading-privileged-memory-with-side.html

|

||||

[3]:https://pwnies.com/

|

||||

[4]:https://lwn.net/Articles/743265/

|

||||

[5]:https://lwn.net/Articles/742999/

|

||||

[6]:https://git.kernel.org/pub/scm/linux/kernel/git/arm64/linux.git/log/?h=kpti

|

||||

[7]:https://www.kernel.org/doc/html/latest/process/stable-kernel-rules.html

|

||||

[8]:https://android.googlesource.com/kernel/common/

|

||||

[9]:https://android.googlesource.com/kernel/common/+/android-3.18

|

||||

[10]:https://android.googlesource.com/kernel/common/+/android-4.4

|

||||

[11]:https://android.googlesource.com/kernel/common/+/android-4.9

|

||||

[12]:https://support.google.com/faqs/answer/7625886

|

||||

@ -3,25 +3,25 @@ Linux 终端下的多媒体应用

|

||||

|

||||

|

||||

|

||||

Linux 终端是支持多媒体的,所以你可以在终端里听音乐,看电影,看图片,甚至是阅读 PDF。

|

||||

> Linux 终端是支持多媒体的,所以你可以在终端里听音乐,看电影,看图片,甚至是阅读 PDF。

|

||||

|

||||

在我的上一篇文章里,我们了解到 Linux 终端是可以支持多媒体的。是的,这是真的!你可以使用 Mplayer、fbi 和 fbgs 来实现不打开 X 进程就听音乐、看电影、看照片,甚至阅读 PDF。此外,你还可以通过 CMatrix 来体验黑客帝国(Matrix)风格的屏幕保护。

|

||||

在我的上一篇文章里,我们了解到 Linux 终端是可以支持多媒体的。是的,这是真的!你可以使用 Mplayer、fbi 和 fbgs 来实现不打开 X 会话就听音乐、看电影、看照片,甚至阅读 PDF。此外,你还可以通过 CMatrix 来体验黑客帝国(Matrix)风格的屏幕保护。

|

||||

|

||||

不过你可能需要对系统进行一些修改才能达到前面这些目的。下文的操作都是在 Ubuntu 16.04 上进行的。

|

||||

|

||||

### MPlayer

|

||||

|

||||

你可能会比较熟悉功能丰富的 MPlayer。它支持几乎所有格式的视频与音频,并且能在绝大部分现有的平台上运行,像 Linux,Android,Windows,Mac,Kindle,OS/2 甚至是 AmigaOS。不过,要在你的终端运行 MPlayer 可能需要多做一点工作,这些工作与你使用的 Linux 发行版有关。来,我们先试着播放一个视频:

|

||||

你可能会比较熟悉功能丰富的 MPlayer。它支持几乎所有格式的视频与音频,并且能在绝大部分现有的平台上运行,像 Linux、Android、Windows、Mac、Kindle、OS/2 甚至是 AmigaOS。不过,要在你的终端运行 MPlayer 可能需要多做一点工作,这些工作与你使用的 Linux 发行版有关。来,我们先试着播放一个视频:

|

||||

|

||||

```

|

||||

$ mplayer [视频文件名]

|

||||

```

|

||||

|

||||

如果上面的命令正常执行了,那么很好,接下来你可以把时间放在了解 MPlayer 的常用选项上了,譬如设定视频大小等。但是,有些 Linux 发行版在对帧缓冲(framebuffer)的处理方式上与早期的不同,那么你就需要进行一些额外的设置才能让其正常工作了。下面是在最近的 Ubuntu 发行版上需要做的一些操作。

|

||||

如果上面的命令正常执行了,那么很好,接下来你可以把时间放在了解 MPlayer 的常用选项上了,譬如设定视频大小等。但是,有些 Linux 发行版在对<ruby>帧缓冲<rt>framebuffer</rt></ruby>的处理方式上与早期的不同,那么你就需要进行一些额外的设置才能让其正常工作了。下面是在最近的 Ubuntu 发行版上需要做的一些操作。

|

||||

|

||||

首先,将你自己添加到 video 用户组。

|

||||

First, add yourself to the `video` group.
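A quick way to confirm the group membership is sketched below. It only reads the current state and prints the command that would add the user. The `usermod` invocation is the usual approach on Ubuntu-like systems, but treat it as an assumption and check your distribution's tooling. A new login is generally needed before a group change takes effect.

```shell
# Name of the current user; id -un avoids relying on $USER being set.
current_user=$(id -un)

# id -nG lists the group names of the current user, separated by spaces.
if id -nG | tr ' ' '\n' | grep -qx 'video'; then
    video_status="$current_user is already in the video group"
else
    video_status="$current_user is not in the video group; try: sudo usermod -aG video $current_user"
fi

echo "$video_status"
```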

|

||||

|

||||

其次,确认 `/etc/modprobe.d/blacklist-framebuffer.conf` 文件中包含这样一行:`#blacklist vesafb`。这一行应该默认被注释掉了,如果不是的话,那就手动把它注释掉。此外的其他模块行需要确认没有被注释,这样设置才能保证其他那些模块不会被载入。注:如果你想要对控制帧缓冲(framebuffer)有更深入的了解,可以从针对你的显卡的这些模块里获取更深入的认识。

|

||||

其次,确认 `/etc/modprobe.d/blacklist-framebuffer.conf` 文件中包含这样一行:`#blacklist vesafb`。这一行应该默认被注释掉了,如果不是的话,那就手动把它注释掉。此外的其他模块行需要确认没有被注释,这样设置才能保证其他那些模块不会被载入。注:如果你想要更深入的利用<ruby>帧缓冲<rt>framebuffer</rt></ruby>,这些针对你的显卡的模块可以使你获得更好的性能。

|

||||

|

||||

Then add two modules, `vesafb` and `fbcon`, to the end of `/etc/initramfs-tools/modules`, and update the initramfs image:

|

||||

|

||||

@ -35,7 +35,7 @@ $ sudo nano /etc/initramfs-tools/modules

|

||||

$ sudo update-initramfs -u

|

||||

```

|

||||

|

||||

[fbcon][1] 是 Linux 帧缓冲(framebuffer)终端,它运行在帧缓冲(framebuffer)之上并为其增加图形功能。而它需要一个帧缓冲(framebuffer)设备,这则是由 `vesafb` 模块来提供的。

|

||||

[fbcon][1] 是 Linux <ruby>帧缓冲<rt>framebuffer</rt></ruby>终端,它运行在<ruby>帧缓冲<rt>framebuffer</rt></ruby>之上并为其增加图形功能。而它需要一个<ruby>帧缓冲<rt>framebuffer</rt></ruby>设备,这则是由 `vesafb` 模块来提供的。

|

||||

|

||||

接下来,你需要修改你的 GRUB2 配置。在 `/etc/default/grub` 中你将会看到类似下面的一行:

|

||||

|

||||

@ -49,7 +49,7 @@ GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"

|

||||

GRUB_CMDLINE_LINUX_DEFAULT="quiet splash vga=789"

|

||||

```

|

||||

|

||||

重启之后进入你的终端(Ctrl+Alt+F1)(LCTT 译注:在某些发行版中 Ctrl+Alt+F1 默认为图形界面,可以尝试 Ctrl+Alt+F2),然后就可以尝试播放一个视频了。下面的命令指定了 `fbdev2` 为视频输出设备,虽然我还没弄明白如何去选择用哪个输入设备,但是我用它成功过。默认的视频大小是 320x240,在此我给缩放到了 960:

|

||||

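After rebooting, switch to a console (`Ctrl+Alt+F1`) (LCTT translator's note: on some distributions `Ctrl+Alt+F1` is the graphical session by default, so try `Ctrl+Alt+F2`) and try playing a video. The command below specifies `fbdev2` as the video output device. I have not yet figured out how to choose which device to use, but this one has worked for me. The default video size is 320x240, so I scale it up to 960: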

|

||||

|

||||

```

|

||||

$ mplayer -vo fbdev2 -vf scale -zoom -xy 960 AlienSong_mp4.mov

|

||||

@ -69,19 +69,19 @@ MPlayer 可以播放 CD、DVD 以及网络视频流,并且还有一系列的

|

||||

$ fbi 文件名

|

||||

```

|

||||

|

||||

你可以使用方向键来在大图片中移动视野,使用 + 和 - 来缩放,或者使用 r 或 l 来向右或向左旋转 90 度。Escape 键则可以关闭查看的图片。此外,你还可以给 `fbi` 一个文件列表来实现幻灯播放:

|

||||

你可以使用方向键来在大图片中移动视野,使用 `+` 和 `-` 来缩放,或者使用 `r` 或 `l` 来向右或向左旋转 90 度。`Escape` 键则可以关闭查看的图片。此外,你还可以给 `fbi` 一个文件列表来实现幻灯播放:

|

||||

|

||||

```

|

||||

$ fbi --list 文件列表.txt

|

||||

```
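The list file is plain text with one image path per line, at least as I understand the `--list` option from the fbi man page, so it can be generated rather than typed. The sketch below uses a scratch directory with empty placeholder files, named by me for illustration. On a real system you would point `find` at your own picture directory and then run `fbi --list` on the result from a console.

```shell
# Scratch directory with placeholder image names (empty files, illustration only).
pictures=$(mktemp -d)
touch "$pictures/b.jpg" "$pictures/a.jpg" "$pictures/notes.txt"

# Collect only the .jpg files, sorted by name, one path per line.
find "$pictures" -maxdepth 1 -type f -name '*.jpg' | sort > "$pictures/filelist.txt"

cat "$pictures/filelist.txt"
# On a framebuffer console this list could then be shown with:
#   fbi --list "$pictures/filelist.txt"
```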

|

||||

|

||||

`fbi` 还支持自动缩放。还可以使用 `-a` 选项来控制缩放比例。`--autoup` 和 `--autodown` 则是用于告知 `fbi` 只进行放大或者缩小。要调整图片切换时淡入淡出的时间则可以使用 `--blend [时间]` 来指定一个以毫秒为单位的时间长度。使用 k 和 j 键则可以切换文件列表中的上一张或下一张图片。

|

||||

`fbi` 还支持自动缩放。还可以使用 `-a` 选项来控制缩放比例。`--autoup` 和 `--autodown` 则是用于告知 `fbi` 只进行放大或者缩小。要调整图片切换时淡入淡出的时间则可以使用 `--blend [时间]` 来指定一个以毫秒为单位的时间长度。使用 `k` 和 `j` 键则可以切换文件列表中的上一张或下一张图片。

|

||||

|

||||

`fbi` 还提供了命令来为你浏览过的文件创建文件列表,或者将你的命令导出到文件中,以及一系列其它很棒的选项。你可以通过 `man fbi` 来查阅完整的选项列表。

|

||||

|

||||

### CMatrix 终端屏保

|

||||

|

||||

黑客帝国(The Matrix)屏保仍然是我非常喜欢的屏保之一(如图 2),仅次于弹跳牛(bouncing cow)。[CMatrix][3] 可以在终端运行。要运行它只需输入 `cmatrix`,然后可以用 Ctrl+C 来停止运行。执行 `cmatrix -s` 则会启动屏保模式,这样的话,按任意键都会直接退出。`-C` 参数可以设定颜色,譬如绿色(green)、红色(red)、蓝色(blue)、黄色(yellow)、白色(white)、紫色(magenta)、青色(cyan)或者黑色(black)。

|

||||

<ruby>黑客帝国<rt>The Matrix</rt></ruby>屏保仍然是我非常喜欢的屏保之一(如图 2),仅次于<ruby>弹跳牛<rt>bouncing cow</rt></ruby>。[CMatrix][3] 可以在终端运行。要运行它只需输入 `cmatrix`,然后可以用 `Ctrl+C` 来停止运行。执行 `cmatrix -s` 则会启动屏保模式,这样的话,按任意键都会直接退出。`-C` 参数可以设定颜色,譬如绿色(`green`)、红色(`red`)、蓝色(`blue`)、黄色(`yellow`)、白色(`white`)、紫色(`magenta`)、青色(`cyan`)或者黑色(`black`)。

|

||||

|

||||

|

||||

|

||||

@ -91,7 +91,7 @@ CMatrix 还支持异步按键,这意味着你可以在它运行的时候改变

|

||||

|

||||

### fbgs PDF 阅读器

|

||||

|

||||

看起来,PDF 文档的流行是普遍且无法阻止的,而且 PDF 比它之前好了很多,譬如超链接、复制粘贴以及更好的文本搜索功能等。`fbgs` 是 `fbida` 包中提供的一个 PDF 阅读器。它可以设置页面大小、分辨率、指定页码以及绝大部分 `fbi` 所提供的选项,当然除了一些在 `man fbgs` 中列举出来的不可用选项。我主要用到的选项是页面大小,你可以选择 `-l`、`xl` 或者 `xxl`:

|

||||

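PDF documents seem to be universal and unavoidable, and PDF has become much better than it used to be, with hyperlinks, copy and paste, and better text search. `fbgs` is a PDF viewer provided by the `fbida` package. It supports setting the page size, resolution, and page selection, plus most of the options `fbi` offers, except for the unavailable ones listed in `man fbgs`. The option I use most is page size; you can choose `-l`, `-xl`, or `-xxl`: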

|

||||

|

||||

```

|

||||

$ fbgs -xl annoyingpdf.pdf

|

||||

@ -105,7 +105,7 @@ via: https://www.linux.com/learn/intro-to-linux/2018/1/multimedia-apps-linux-con

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[Yinr](https://github.com/Yinr)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,42 +1,42 @@

|

||||

如何启动进入 Linux 命令行

|

||||

======

|

||||

|

||||

|

||||

|

||||

可能有时候你需要或者不想使用 GUI,也就是没有 X,而是选择命令行启动 [Linux][1]。不管是什么原因,幸运的是,直接启动进入 Linux **命令行** 非常简单。在其他内核选项之后,它需要对引导参数进行简单的更改。此更改将系统引导到指定的运行级别。

|

||||

可能有时候你启动 Linux 时需要或者希望不使用 GUI(图形用户界面),也就是没有 X,而是选择命令行。不管是什么原因,幸运的是,直接启动进入 Linux 命令行 非常简单。它需要在其他内核选项之后对引导参数进行简单的更改。此更改将系统引导到指定的运行级别。

|

||||

|

||||

### 为什么要这样做?

|

||||

|

||||

如果你的系统由于无效配置或者显示管理器损坏或任何可能导致 GUI 无法正常启动的情况而无法运行 Xorg,那么启动到命令行将允许你通过登录到终端进行故障排除(假设你知道要怎么开始),并能做任何你需要做的东西。引导到命令行也是一个很好的熟悉终端的方式,不然,你也可以为了好玩这么做。

|

||||

如果你的系统由于无效配置或者显示管理器损坏或任何可能导致 GUI 无法正常启动的情况而无法运行 Xorg,那么启动到命令行将允许你通过登录到终端进行故障排除(假设你知道要怎么做),并能做任何你需要做的东西。引导到命令行也是一个很好的熟悉终端的方式,不然,你也可以为了好玩这么做。

|

||||

|

||||

### 访问 GRUB 菜单

|

||||

|

||||

在启动时,你需要访问 GRUB 启动菜单。如果在每次启动计算机时菜单未设置为显示,那么可能需要在系统启动之前按住 SHIFT 键。在菜单中,需要选择 [Linux 发行版][2]条目。高亮显示后,按下 “e” 编辑引导参数。

|

||||

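At boot time, you need to access the GRUB boot menu. If the menu is not set to display on every boot, you may need to hold down the `SHIFT` key before the system starts. In the menu, select your Linux distribution's entry. With the entry highlighted, press `e` to edit the boot parameters.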

|

||||

|

||||

[][3]

|

||||

|

||||

较老的 GRUB 版本遵循类似的机制。启动管理器应提供有关如何编辑启动参数的说明。

|

||||

较老的 GRUB 版本遵循类似的机制。启动管理器应提供有关如何编辑启动参数的说明。

|

||||

|

||||

### 指定运行级别

|

||||

|

||||

编辑器将出现,你将看到 GRUB 解析到内核的选项。移动到以 “linux” 开头的行(旧的 GRUB 版本可能是 “kernel”,选择它并按照说明操作)。这指定了解析到内核的参数。在该行的末尾(可能会出现跨越多行,具体取决于分辨率),只需指定要引导的运行级别,即 3(多用户模式,纯文本)。

|

||||

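An editor appears, showing the options GRUB passes to the kernel. Move to the line that starts with `linux` (older GRUB versions may use `kernel`; select it and follow the instructions). This line specifies the parameters passed to the kernel. At the end of that line (it may wrap across several lines, depending on your terminal's resolution), simply add the runlevel to boot into, namely `3` (multi-user mode, text only).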

|

||||

|

||||

[][4]

|

||||

|

||||

按下 Ctrl-X 或 F10 将使用这些参数启动系统。开机和以前一样。唯一改变的是启动的运行级别。

|

||||

|

||||

|

||||

按下 `Ctrl-X` 或 `F10` 将使用这些参数启动系统。开机和以前一样。唯一改变的是启动的运行级别。

|

||||

|

||||

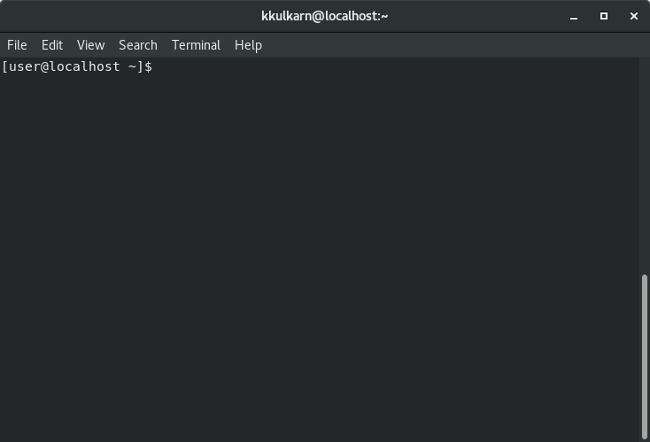

这是启动后的页面:

|

||||

|

||||

[][5]

|

||||

[][5]

|

||||

|

||||

### 运行级别

|

||||

|

||||

你可以指定不同的运行级别,默认运行级别是 5。1 启动到“单用户”模式,它会启动进入 root shell。3 提供了一个多用户命令行系统。

|

||||

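You can specify different runlevels. The default runlevel is `5` (multi-user with a graphical interface). `1` boots into "single-user" mode, which drops you into a root shell. `3` provides a multi-user command-line system.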
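On distributions that use systemd, the numeric runlevels are mapped onto targets, so the current default can also be inspected from a running system. The sketch below only reads state. The `set-default` command in the final comment is the usual systemd way to make text mode persistent, and whether your distribution uses systemd at all is an assumption you should verify.

```shell
# Report the default boot target if systemctl is available; otherwise say so.
if command -v systemctl >/dev/null 2>&1; then
    boot_target=$(systemctl get-default 2>/dev/null || true)
    boot_info="default target: ${boot_target:-unknown}"
else
    boot_info="systemctl not found; this system may use SysV-style runlevels"
fi

echo "$boot_info"
# multi-user.target roughly corresponds to runlevel 3, graphical.target to 5.
# To boot to the command line by default (assumes systemd):
#   sudo systemctl set-default multi-user.target
```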

|

||||

|

||||

### 从命令行切换

|

||||

|

||||

在某个时候,你可能想要再次运行显示管理器来使用 GUI,最快的方法是运行这个:

|

||||

在某个时候,你可能想要运行显示管理器来再次使用 GUI,最快的方法是运行这个:

|

||||

|

||||

```

|

||||

$ sudo init 5

|

||||

```

|

||||

@ -49,7 +49,7 @@ via: http://www.linuxandubuntu.com/home/how-to-boot-into-linux-command-line

|

||||

|

||||

作者:[LinuxAndUbuntu][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,62 +1,63 @@

|

||||

在终端显示世界地图

|

||||

MapSCII:在终端显示世界地图

|

||||

======

|

||||

我偶然发现了一个有趣的工具。在终端的世界地图!是的,这太酷了。向 **MapSCII** 问好,这是可在 xterm 兼容终端渲染的盲文和 ASCII 世界地图。它支持 GNU/Linux、Mac OS 和 Windows。我以为这是另一个在 GitHub 上托管的项目。但是我错了!他们做了令人印象深刻的事。我们可以使用我们的鼠标指针在世界地图的任何地方拖拽放大和缩小。其他显著的特性是:

|

||||

|

||||

|

||||

|

||||

我偶然发现了一个有趣的工具。在终端里的世界地图!是的,这太酷了。给 `MapSCII` 打 call,这是可在 xterm 兼容终端上渲染的布莱叶盲文和 ASCII 世界地图。它支持 GNU/Linux、Mac OS 和 Windows。我原以为它只不过是一个在 GitHub 上托管的项目而已,但是我错了!他们做的事令人印象深刻。我们可以使用我们的鼠标指针在世界地图的任何地方拖拽放大和缩小。其他显著的特性是:

|

||||

|

||||

* 发现任何特定地点周围的兴趣点

|

||||

* 高度可定制的图层样式,带有[ Mapbox 样式][1]支持

|

||||

* 连接到任何公共或私有矢量贴片服务器

|

||||

* 高度可定制的图层样式,支持 [Mapbox 样式][1]

|

||||

* 可连接到任何公共或私有的矢量贴片服务器

|

||||

* 或者使用已经提供并已优化的基于 [OSM2VectorTiles][2] 服务器

|

||||

* 离线工作,发现本地 [VectorTile][3]/[MBTiles][4]

|

||||

* 可以离线工作并发现本地的 [VectorTile][3]/[MBTiles][4]

|

||||

* 兼容大多数 Linux 和 OSX 终端

|

||||

* 高度优化算法的流畅体验

|

||||

|

||||

|

||||

|

||||

### 使用 MapSCII 在终端中显示世界地图

|

||||

|

||||

要打开地图,只需从终端运行以下命令:

|

||||

|

||||

```

|

||||

telnet mapscii.me

|

||||

```

|

||||

|

||||

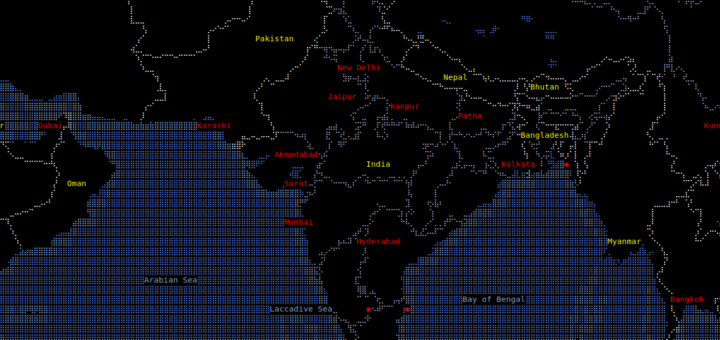

这是我终端上的世界地图。

|

||||

|

||||

[![][5]][6]

|

||||

![][6]

|

||||

|

||||

很酷,是吗?

|

||||

|

||||

要切换到盲文视图,请按 **c**。

|

||||

要切换到布莱叶盲文视图,请按 `c`。

|

||||

|

||||

[![][5]][7]

|

||||

![][7]

|

||||

|

||||

Type **c** again to switch back to the previous format **.**

|

||||

再次输入 **c** 切回以前的格式。

|

||||

再次输入 `c` 切回以前的格式。

|

||||

|

||||

要滚动地图,请使用**向上**、向下**、**向左**、**向右**箭头键。要放大/缩小位置,请使用 **a** 和 **a** 键。另外,你可以使用鼠标的滚轮进行放大或缩小。要退出地图,请按 **q**。

|

||||

要滚动地图,请使用“向上”、“向下”、“向左”、“向右”箭头键。要放大/缩小位置,请使用 `a` 和 `z` 键。另外,你可以使用鼠标的滚轮进行放大或缩小。要退出地图,请按 `q`。

|

||||

|

||||

就像我已经说过的,不要认为这是一个简单的项目。点击地图上的任何位置,然后按 **“a”** 放大。

|

||||

就像我已经说过的,不要认为这是一个简单的项目。点击地图上的任何位置,然后按 `a` 放大。

|

||||

|

||||

放大后,下面是一些示例截图。

|

||||

|

||||

[![][5]][8]

|

||||

![][8]

|

||||

|

||||

我可以放大查看我的国家(印度)的州。

|

||||

|

||||

[![][5]][9]

|

||||

![][9]

|

||||

|

||||

和州内的地区(Tamilnadu):

|

||||

|

||||

[![][5]][10]

|

||||

![][10]

|

||||

|

||||

甚至是地区内的镇 [Taluks][11]:

|

||||

|

||||

[![][5]][12]

|

||||

![][12]

|

||||

|

||||