-

-```

-

-I leave it as an exercise for you to carve out the sections for sidebar.tpl and footer.tpl.

-

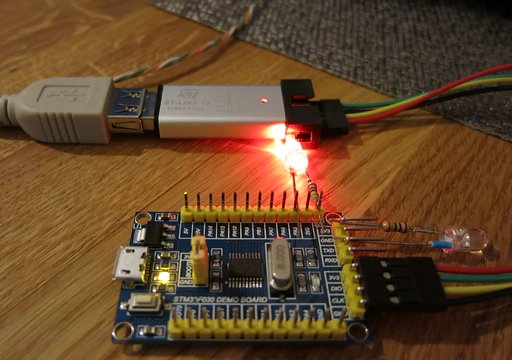

-Note the lines in bold. I added them to facilitate a “login bar” at the top of every webpage. Once you’ve logged into the application, you will see the bar like this:

-

-![][17]

-

-This login bar works in conjunction with the GetSession code snippet we saw in activeContent(). The logic is: if the user is logged in (i.e., there is a non-nil session), then we set the InSession parameter to a value (any value), which tells the templating engine to use the “Welcome” bar instead of “Login”. We also extract the user’s first name from the session so that we can present the friendly greeting “Welcome, Richard”.
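To make the InSession logic concrete, here is a minimal sketch using Go’s standard html/template package rather than Beego’s own template wiring. The bar markup, the data key First, and the renderBar helper are assumptions (the original header.tpl markup is not reproduced here); only InSession and the session key first come from the article.

```go
package main

import (
	"fmt"
	"html/template"
	"strings"
)

// loginBar is a hypothetical stand-in for the login-bar markup in header.tpl;
// the real HTML is not reproduced in this article.
var loginBar = template.Must(template.New("bar").Parse(
	`{{if .InSession}}Welcome, {{.First}} | Logout{{else}}Login | Register{{end}}`))

// renderBar mimics the activeContent() logic: a non-nil session sets
// InSession to some value (any non-empty value is truthy in a template)
// and copies the first name out of the session map.
func renderBar(sess map[string]interface{}) string {
	data := map[string]interface{}{}
	if sess != nil {
		data["InSession"] = 1
		data["First"] = sess["first"]
	}
	var sb strings.Builder
	if err := loginBar.Execute(&sb, data); err != nil {
		return "template error: " + err.Error()
	}
	return sb.String()
}

func main() {
	fmt.Println(renderBar(nil))                                        // Login | Register
	fmt.Println(renderBar(map[string]interface{}{"first": "Richard"})) // Welcome, Richard | Logout
}
```

A missing InSession key evaluates as false in the `if`, so an anonymous visitor gets the “Login” branch without any extra code.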

-

-The home page, represented by index.tpl, uses the following snippet from index.html:

-```

-

-

-

-

Welcome to StarDust

- // to save space, I won't enter the remainder

- // of the snippet

-

-

-

-```

-

-#### Special Note

-

-The template files for the user module reside in the ‘user’ directory within ‘views’, just to keep things tidy. So, for example, the call to activeContent() for login is:

-```

-this.activeContent("user/login")

-

-```

-

-### Controller

-

-A controller handles requests by handing them off to the appropriate function or ‘method’. We only have one controller for our application and it’s defined in default.go. The default method Get() for handling a GET operation is associated with our home page:

-```

-func (this *MainController) Get() {

- this.activeContent("index")

-

- // This page requires login

- sess := this.GetSession("acme")

- if sess == nil {

- this.Redirect("/user/login/home", 302)

- return

- }

- m := sess.(map[string]interface{})

- fmt.Println("username is", m["username"])

- fmt.Println("logged in at", m["timestamp"])

-}

-

-```

-

-I’ve made login a requirement for accessing this page. Logging in means creating a session, which by default expires after 3600 seconds of inactivity. On the client side, a session is typically tracked by a ‘cookie’ that holds the session ID.
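The idle-expiry rule can be sketched with a toy in-memory store. This is an illustration under stated assumptions, not Beego’s session provider: the store type, its methods, and the use of plain Unix seconds for the clock are all hypothetical, and the sketch is not safe for concurrent use.

```go
package main

import (
	"fmt"
	"time"
)

// session is a hypothetical record: the values passed to SetSession plus
// the Unix time (in seconds) at which it was last used.
type session struct {
	values   map[string]interface{}
	lastSeen int64
}

// store is a toy server-side session store with an idle timeout. The id
// would normally travel to and from the browser in a cookie.
type store struct {
	maxIdle  int64 // seconds of inactivity allowed, e.g. 3600
	sessions map[string]*session
}

func newStore(maxIdle int64) *store {
	return &store{maxIdle: maxIdle, sessions: make(map[string]*session)}
}

func (s *store) set(id string, values map[string]interface{}, now int64) {
	s.sessions[id] = &session{values: values, lastSeen: now}
}

// get returns nil for an unknown or expired session. A successful lookup
// refreshes lastSeen, so any activity restarts the idle clock.
func (s *store) get(id string, now int64) map[string]interface{} {
	sess, ok := s.sessions[id]
	if !ok {
		return nil
	}
	if now-sess.lastSeen > s.maxIdle {
		delete(s.sessions, id)
		return nil
	}
	sess.lastSeen = now
	return sess.values
}

func main() {
	st := newStore(3600)
	start := time.Now().Unix()
	st.set("abc123", map[string]interface{}{"username": "richard@example.com"}, start)
	fmt.Println(st.get("abc123", start+1800) != nil)      // true: used within the hour
	fmt.Println(st.get("abc123", start+1800+3601) != nil) // false: idle for over an hour
}
```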

-

-In order to support sessions in the application, the ‘SessionOn’ flag must be set to true. There are two ways to do this:

-

- 1. Insert ‘beego.SessionOn = true’ in the main program, main.go.

- 2. Insert ‘sessionon = true’ in the configuration file, app.conf, which can be found in the ‘conf’ directory.

-

-

-

-I chose #1. (But note that I used the configuration file to set ‘EnableAdmin’ to true: ‘enableadmin = true’. EnableAdmin allows you to use the Supervisor Module in Beego that keeps track of CPU, memory, Garbage Collector, threads, etc., via port 8088.)

-

-#### The Main Program

-

-The main program is also where we initialize the database to be used with the ORM (Object Relational Mapping) component. ORM makes it more convenient to perform database activities within our application. The main program’s init():

-```

-func init() {

- orm.RegisterDriver("sqlite3", orm.DR_Sqlite) // name must match the driver go-sqlite3 registers

- orm.RegisterDataBase("default", "sqlite3", "acme.db")

- name := "default"

- force := false

- verbose := false

- err := orm.RunSyncdb(name, force, verbose)

- if err != nil {

- fmt.Println(err)

- }

-}

-

-```

-

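-To use SQLite, we must import the ‘go-sqlite3’ driver (a blank import, `_ "github.com/mattn/go-sqlite3"`, is enough, since only its driver registration is needed), which can be installed with the command: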

-```

-$ go get github.com/mattn/go-sqlite3

-

-```

-

-As you can see in the code snippet, the SQLite driver must be registered and ‘acme.db’ must be registered as our SQLite database.

-

-Recall in models.go, there was an init() function:

-```

-func init() {

- orm.RegisterModel(new(AuthUser))

-}

-

-```

-

-The database model has to be registered so that the appropriate table can be generated. To ensure that this init() function is executed, you must import ‘models’ without actually using it within the main program, as follows:

-```

-import _ "acme/models"

-

-```

-

-RunSyncdb() is used to autogenerate the tables when you start the program. (This is very handy for creating the database tables without having to **manually** do it in the database command line utility.) If you set ‘force’ to true, it will drop any existing tables and recreate them.

-

-#### The User Module

-

-User.go contains all the methods for handling login, registration, profile, etc. There are several third-party packages we need to import; they provide support for email, PBKDF2, and UUID. But first we must get them into our project…

-```

-$ go get github.com/alexcesaro/mail/gomail

-$ go get github.com/twinj/uuid

-

-```

-

-I originally got **github.com/gokyle/pbkdf2**, but this package was pulled from GitHub, so you can no longer get it. I’ve incorporated this package into my source under the ‘utilities’ folder, and the import is:

-```

-import pk "acme/utilities/pbkdf2"

-

-```

-

-The ‘pk’ is a convenient alias so that I don’t have to type the rather unwieldy ‘pbkdf2’.

-

-#### ORM

-

-It’s pretty straightforward to use ORM. The basic pattern is to create an ORM object, specify the ‘default’ database, and select which ORM operation you want, e.g.,

-```

-o := orm.NewOrm()

-o.Using("default")

-var err error

-_, err = o.Insert(&user)     // insert a new row, or

-err = o.Read(&user, "Email") // read the row whose Email matches user.Email, or

-_, err = o.Update(&user)     // update the row, or

-_, err = o.Delete(&user)     // delete the row

-

-```

-

-#### Flash

-

-By the way, Beego provides a way to present notifications on your webpage through the use of ‘flash’. Basically, you create a ‘flash’ object, give it your notification message, store the flash in the controller, and then retrieve the message in the template file, e.g.,

-```

-flash := beego.NewFlash()

-flash.Error("You've goofed!") // or

-flash.Notice("Well done!")

-flash.Store(&this.Controller)

-

-```

-

-And in your template file, reference the Error flash with:

-```

-{{if .flash.error}}

-{{.flash.error}}

-

-{{end}}

-

-```
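To see how `.flash.error` resolves in the template, here is a standard-library sketch. It assumes that `flash.Store` exposes the messages to the template under the key "flash" as a string map keyed by "error", "notice", and so on (believed to match Beego’s behavior, but check your version); the `<p class=...>` markup and the render helper are made up.

```go
package main

import (
	"fmt"
	"html/template"
	"strings"
)

// flashTmpl mirrors the template snippet above; the surrounding markup is
// hypothetical because the original HTML was not preserved.
var flashTmpl = template.Must(template.New("flash").Parse(
	`{{if .flash.error}}<p class="error">{{.flash.error}}</p>{{end}}` +
		`{{if .flash.notice}}<p class="notice">{{.flash.notice}}</p>{{end}}`))

// render stands in for the controller: the messages live in a
// map[string]string under Data["flash"], and the template reads
// .flash.error and .flash.notice from it.
func render(flash map[string]string) string {
	data := map[string]interface{}{"flash": flash}
	var sb strings.Builder
	if err := flashTmpl.Execute(&sb, data); err != nil {
		return "template error: " + err.Error()
	}
	return sb.String()
}

func main() {
	fmt.Println(render(map[string]string{"error": "Bad password"})) // <p class="error">Bad password</p>
	fmt.Println(render(map[string]string{"notice": "Well done!"}))  // <p class="notice">Well done!</p>
}
```

html/template escapes the message text, so a flash containing user input cannot inject markup into the page.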

-

-#### Form Validation

-

-Once the user posts a request (by pressing the Submit button, for example), our handler must extract and validate the form input. So, first, check that we have a POST operation:

-```

-if this.Ctx.Input.Method() == "POST" {

-

-```

-

-Let’s get a form element, say, email:

-```

-email := this.GetString("email")

-

-```

-

-The string “email” is the same as in the HTML form:

-```

-

-

-```

-

-To validate it, we create a validation object, specify the type of validation, and then check to see if there are any errors:

-```

-valid := validation.Validation{}

-valid.Email(email, "email") // must be a proper email address

-if valid.HasErrors() {

- for _, err := range valid.Errors {

-

-```

-

-What you do with the errors is up to you. I like to present all of them at once to the user, so as I go through the range of valid.Errors, I append each one to a list of error messages (despite its name, `errormap` is a `[]string`) that will eventually be used in the template file. Hence, the full snippet:

-```

-if this.Ctx.Input.Method() == "POST" {

- email := this.GetString("email")

- password := this.GetString("password")

- valid := validation.Validation{}

- valid.Email(email, "email")

- valid.Required(password, "password")

- if valid.HasErrors() {

- errormap := []string{}

- for _, err := range valid.Errors {

- errormap = append(errormap, "Validation failed on "+err.Key+": "+err.Message+"\n")

- }

- this.Data["Errors"] = errormap

- return

- }

-}

-```
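If you want to try the collect-all-errors pattern without Beego, here is a stdlib-only sketch. net/mail.ParseAddress stands in for valid.Email and a blank check stands in for valid.Required; the function name validateLogin and the message wording are hypothetical.

```go
package main

import (
	"fmt"
	"net/mail"
	"strings"
)

// validateLogin collects every validation failure instead of stopping at
// the first one, so all of them can be shown to the user at once.
func validateLogin(email, password string) []string {
	var errs []string
	// Require a bare address: "Name <a@b>" parses, but Address != input.
	if addr, err := mail.ParseAddress(email); err != nil || addr.Address != email {
		errs = append(errs, "Validation failed on email: must be a valid email address")
	}
	if strings.TrimSpace(password) == "" {
		errs = append(errs, "Validation failed on password: cannot be empty")
	}
	return errs
}

func main() {
	for _, e := range validateLogin("not-an-email", "") {
		fmt.Println(e) // both failures are reported
	}
}
```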

-

-### The User Management Methods

-

-We’ve looked at the major pieces of the controller. Now, we get to the meat of the application, the user management methods:

-

- * Login()

- * Logout()

- * Register()

- * Verify()

- * Profile()

- * Remove()

-

-

-

-Recall that we saw references to these functions in the router. The router associates each URL (and HTTP method) with the corresponding controller method.

-

-#### Login()

-

-Let’s look at the pseudocode for this method:

-```

-if the HTTP request is "POST" then

- Validate the form (extract the email address and password).

- Read the password hash from the database, keying on email.

- Compare the submitted password with the one on record.

- Create a session for this user.

-endif

-

-```

-

-In order to compare passwords, we need to give pk.MatchPassword() a variable with members ‘Hash’ and ‘Salt’ that are **byte slices**. Hence,

-```

-var x pk.PasswordHash

-x.Hash = make([]byte, 32)

-x.Salt = make([]byte, 16)

-// after x has the password from the database, then...

-if !pk.MatchPassword(password, &x) {

- flash.Error("Bad password")

- flash.Store(&this.Controller)

- return

-}

-

-```
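For readers curious about what MatchPassword has to do, here is a self-contained sketch of PBKDF2-HMAC-SHA256 with a random salt and a constant-time comparison. It is not the vendored pk package: the PasswordHash type here only mirrors the Hash/Salt shape described above, and the iteration count, hash function, and helper names are assumptions. In real code you would more likely call golang.org/x/crypto/pbkdf2 than hand-roll the derivation.

```go
package main

import (
	"crypto/hmac"
	"crypto/rand"
	"crypto/sha256"
	"crypto/subtle"
	"encoding/binary"
	"encoding/hex"
	"fmt"
)

// PasswordHash is a hypothetical type with the same shape as pk.PasswordHash.
type PasswordHash struct {
	Hash []byte
	Salt []byte
}

const (
	iterations = 10000 // assumed work factor
	keyLen     = 32    // matches the 32-byte Hash buffer above
	saltLen    = 16    // matches the 16-byte Salt buffer above
)

// pbkdf2SHA256 implements PBKDF2 (RFC 8018) with HMAC-SHA256 as the PRF.
func pbkdf2SHA256(password, salt []byte, iter, keyLen int) []byte {
	prf := hmac.New(sha256.New, password)
	numBlocks := (keyLen + prf.Size() - 1) / prf.Size()
	var out []byte
	idx := make([]byte, 4)
	for block := 1; block <= numBlocks; block++ {
		// U1 = PRF(password, salt || INT(block))
		prf.Reset()
		prf.Write(salt)
		binary.BigEndian.PutUint32(idx, uint32(block))
		prf.Write(idx)
		u := prf.Sum(nil)
		t := append([]byte(nil), u...)
		// Uj = PRF(password, U(j-1)); T = U1 xor U2 xor ... xor Uiter
		for n := 1; n < iter; n++ {
			prf.Reset()
			prf.Write(u)
			u = prf.Sum(nil)
			for i := range t {
				t[i] ^= u[i]
			}
		}
		out = append(out, t...)
	}
	return out[:keyLen]
}

// hashPassword derives a hash with a fresh random salt (the Register step).
func hashPassword(password string) (*PasswordHash, error) {
	salt := make([]byte, saltLen)
	if _, err := rand.Read(salt); err != nil {
		return nil, err
	}
	return &PasswordHash{Hash: pbkdf2SHA256([]byte(password), salt, iterations, keyLen), Salt: salt}, nil
}

// matchPassword re-derives the hash with the stored salt and compares in
// constant time (the Login step).
func matchPassword(password string, ph *PasswordHash) bool {
	h := pbkdf2SHA256([]byte(password), ph.Salt, iterations, len(ph.Hash))
	return subtle.ConstantTimeCompare(h, ph.Hash) == 1
}

func main() {
	ph, err := hashPassword("correct horse")
	if err != nil {
		panic(err)
	}
	// Stored as hex strings, as in Register(); hex.DecodeString reverses it.
	fmt.Println(hex.EncodeToString(ph.Hash), hex.EncodeToString(ph.Salt))
	fmt.Println(matchPassword("correct horse", ph)) // true
	fmt.Println(matchPassword("wrong", ph))         // false
}
```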

-

-Creating a session is trivial, but we want to store some useful information in the session, as well. So we make a map and store first name, email address, and the time of login:

-```

-m := make(map[string]interface{})

-m["first"] = user.First

-m["username"] = email

-m["timestamp"] = time.Now()

-this.SetSession("acme", m)

-this.Redirect("/"+back, 302) // go to previous page after login

-

-```

-

-Incidentally, the name “acme” passed to SetSession is completely arbitrary; you just need to reference the same name to get the same session.

-

-#### Logout()

-

-This one is trivially easy. We delete the session and redirect to the home page.

-

-#### Register()

-```

-if the HTTP request is "POST" then

- Validate the form.

- Create the password hash for the submitted password.

- Prepare new user record.

- Convert the password hash to hexadecimal string.

- Generate a UUID and insert the user into database.

- Send a verification email.

- Flash a message on the notification page.

-endif

-

-```
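The UUID step can be sketched with only crypto/rand. This is a stand-in for github.com/twinj/uuid, not its API; newUUIDv4 is a hypothetical helper that produces an RFC 4122 version 4 (random) UUID of the kind appended to the verification link.

```go
package main

import (
	"crypto/rand"
	"fmt"
)

// newUUIDv4 returns a random UUID in the canonical 8-4-4-4-12 hex form.
func newUUIDv4() (string, error) {
	var b [16]byte
	if _, err := rand.Read(b[:]); err != nil {
		return "", err
	}
	b[6] = (b[6] & 0x0f) | 0x40 // version 4 (random)
	b[8] = (b[8] & 0x3f) | 0x80 // RFC 4122 variant
	return fmt.Sprintf("%x-%x-%x-%x-%x", b[0:4], b[4:6], b[6:8], b[8:10], b[10:16]), nil
}

func main() {
	u, err := newUUIDv4()
	if err != nil {
		panic(err)
	}
	fmt.Println("http://localhost:8080/user/verify/" + u)
}
```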

-

-To send a verification email to the user, we use **gomail** …

-```

-link := "http://localhost:8080/user/verify/" + u // u is UUID

-host := "smtp.gmail.com"

-port := 587

-msg := gomail.NewMessage()

-msg.SetAddressHeader("From", "acmecorp@gmail.com", "ACME Corporation")

-msg.SetHeader("To", email)

-msg.SetHeader("Subject", "Account Verification for ACME Corporation")

-msg.SetBody("text/html", "To verify your account, please click on the link: <a href=\""+link+"\">"+link+"</a><br><br>Best Regards,<br>ACME Corporation")

-m := gomail.NewMailer(host, "youraccount@gmail.com", "YourPassword", port)

-if err := m.Send(msg); err != nil {

- return false

-}

-

-```
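If you would rather not depend on gomail, a rough equivalent can be built on the standard net/smtp package. This is a sketch with assumptions: the helper names are hypothetical, the message is a single text/html part without MIME multipart or header encoding, and the credentials are placeholders exactly as in the snippet above. Only the message builder runs in main; sending would contact a real server.

```go
package main

import (
	"fmt"
	"html"
	"net/smtp"
	"strings"
)

// buildVerificationMessage assembles a minimal RFC 5322 message with an
// HTML body similar to the gomail snippet above.
func buildVerificationMessage(from, to, link string) []byte {
	var b strings.Builder
	b.WriteString("From: ACME Corporation <" + from + ">\r\n")
	b.WriteString("To: " + to + "\r\n")
	b.WriteString("Subject: Account Verification for ACME Corporation\r\n")
	b.WriteString("MIME-Version: 1.0\r\n")
	b.WriteString("Content-Type: text/html; charset=UTF-8\r\n")
	b.WriteString("\r\n") // blank line separates headers from the body
	esc := html.EscapeString(link)
	b.WriteString(`To verify your account, please click on the link: <a href="` + esc + `">` + esc +
		`</a><br><br>Best Regards,<br>ACME Corporation`)
	return []byte(b.String())
}

// sendVerification submits the message on port 587; smtp.SendMail upgrades
// to TLS with STARTTLS when the server offers it.
func sendVerification(to, link string) error {
	const host = "smtp.gmail.com"
	auth := smtp.PlainAuth("", "youraccount@gmail.com", "YourPassword", host)
	msg := buildVerificationMessage("acmecorp@gmail.com", to, link)
	return smtp.SendMail(host+":587", auth, "youraccount@gmail.com", []string{to}, msg)
}

func main() {
	msg := buildVerificationMessage("acmecorp@gmail.com", "richard@example.com",
		"http://localhost:8080/user/verify/1234")
	fmt.Println(string(msg))
}
```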

-

-I chose Gmail as my email relay (you will need to open your own account). Note that Gmail ignores the “From” address (in our case, “[acmecorp@gmail.com][18]”) because Gmail does not permit you to alter the sender address in order to prevent phishing.

-

-#### Notice()

-

-This special controller method is for displaying a flash message on a notification page. It’s not really a user module function; it’s general enough that you can use it in many other places.

-

-#### Profile()

-

-We’ve already discussed all the pieces in this function. The pseudocode is:

-```

-Login required; check for a session.

-Get user record from database, keyed on email (or username).

-if the HTTP request is "POST" then

- Validate the form.

- if there is a new password then

- Validate the new password.

- Create the password hash for the new password.

- Convert the password hash to hexadecimal string.

- endif

- Compare submitted current password with the one on record.

- Update the user record.

- - update the username stored in session

-endif

-

-```

-

-#### Verify()

-

-The verification email contains a link which, when clicked by the recipient, causes Verify() to process the UUID. Verify() attempts to read the user record, keyed on the UUID or registration key, and if it’s found, then the registration key is removed from the database.
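Verify()’s lookup-and-clear logic can be sketched with a map standing in for the database. The authUser type, its RegKey field name, and the verify helper are hypothetical; the real code goes through the ORM’s Read and Update.

```go
package main

import "fmt"

// authUser is a hypothetical, trimmed-down stand-in for the AuthUser model.
type authUser struct {
	Email  string
	RegKey string // UUID from the verification link; empty once verified
}

// verify finds the user whose registration key equals uuid and clears it.
func verify(users map[string]*authUser, uuid string) bool {
	if uuid == "" {
		return false // an empty key would otherwise match every verified user
	}
	for _, u := range users {
		if u.RegKey == uuid {
			u.RegKey = ""
			return true
		}
	}
	return false
}

func main() {
	key := "6ba7b810-9dad-41d1-80b4-00c04fd430c8"
	users := map[string]*authUser{
		"richard@example.com": {Email: "richard@example.com", RegKey: key},
	}
	fmt.Println(verify(users, key)) // true
	fmt.Println(verify(users, key)) // false: the key was already used
}
```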

-

-#### Remove()

-

-Remove() is pretty much like Login(), except that instead of creating a session, you delete the user record from the database.

-

-### Exercise

-

-I left out one user management method: What if the user has forgotten his password? We should provide a way to reset the password. I leave this as an exercise for you. All the pieces you need are in this tutorial. (Hint: You’ll need to do it in a way similar to Registration verification. You should add a new Reset_key to the AuthUser table. And make sure the user email address exists in the database before you send the Reset email!)

-

-[Okay, so I’ll give you the [exercise solution][19]. I’m not cruel.]

-

-### Wrapping Up

-

-Let’s review what we’ve learned. We covered the mapping of URLs to request handlers in the router. We showed how to incorporate a CSS template design into our views. We discussed the ORM package, and how it’s used to perform database operations. We examined a number of third-party utilities useful in writing our application. The end result is a component useful in many scenarios.

-

-This is a great deal of material for a tutorial, but I believe it’s the best way to get started in writing a practical application.

-

-[For further material, look at the [sequel][20] to this article, as well as the [final edition][21].]

-

---------------------------------------------------------------------------------

-

-via: https://medium.com/@richardeng/a-word-from-the-beegoist-d562ff8589d7

-

-Author: [Richard Kenneth Eng][a]

-Topic selection: [lujun9972](https://github.com/lujun9972)

-Translator: [Translator ID](https://github.com/译者ID)

-Proofreader: [Proofreader ID](https://github.com/校对者ID)

-

-This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

-

-[a]:https://medium.com/@richardeng?source=post_header_lockup

-[1]:http://tour.golang.org/

-[2]:http://golang.org/

-[3]:http://beego.me/

-[4]:https://medium.com/@richardeng/in-the-beginning-61c7e63a3ea6

-[5]:http://www.mysql.com/

-[6]:http://www.sqlite.org/

-[7]:https://code.google.com/p/liteide/

-[8]:http://macromates.com/

-[9]:http://notepad-plus-plus.org/

-[10]:https://medium.com/@richardeng/back-to-the-future-9db24d6bcee1

-[11]:http://en.wikipedia.org/wiki/Acme_Corporation

-[12]:https://github.com/horrido/acme

-[13]:http://en.wikipedia.org/wiki/Regular_expression

-[14]:http://en.wikipedia.org/wiki/PBKDF2

-[15]:http://en.wikipedia.org/wiki/Universally_unique_identifier

-[16]:http://www.freewebtemplates.com/download/free-website-template/stardust-141989295/

-[17]:https://cdn-images-1.medium.com/max/1600/1*1OpYy1ISYGUaBy0U_RJ75w.png

-[18]:mailto:acmecorp@gmail.com

-[19]:https://github.com/horrido/acme-exercise

-[20]:https://medium.com/@richardeng/a-word-from-the-beegoist-ii-9561351698eb

-[21]:https://medium.com/@richardeng/a-word-from-the-beegoist-iii-dbd6308b2594

-[22]:http://golang.org/

-[23]:http://beego.me/

-[24]:http://revel.github.io/

-[25]:http://www.web2py.com/

-[26]:https://medium.com/@richardeng/the-zen-of-web2py-ede59769d084

-[27]:http://www.seaside.st/

-[28]:http://en.wikipedia.org/wiki/Object-relational_mapping

diff --git a/sources/tech/20151127 Research log- gene signatures and connectivity map.md b/sources/tech/20151127 Research log- gene signatures and connectivity map.md

new file mode 100644

index 0000000000..f4e7faa4bc

--- /dev/null

+++ b/sources/tech/20151127 Research log- gene signatures and connectivity map.md

@@ -0,0 +1,133 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Research log: gene signatures and connectivity map)

+[#]: via: (https://www.jtolio.com/2015/11/research-log-gene-signatures-and-connectivity-map)

+[#]: author: (jtolio.com https://www.jtolio.com/)

+

+Research log: gene signatures and connectivity map

+======

+

+Happy Thanksgiving everyone!

+

+### Context

+

+This is the third post in my continuing series on my attempts at research. Previously we talked about:

+

+ * [what I’m doing, cell states, and microarrays][1]

+ * and then [more about microarrays and R][2].

+

+

+

+By the end of last week we had discussed how to get a table of normalized gene expression intensities that looks like this:

+

+```

+ENSG00000280099_at 0.15484421

+ENSG00000280109_at 0.16881395

+ENSG00000280178_at -0.19621641

+ENSG00000280316_at 0.08622216

+ENSG00000280401_at 0.15966256

+ENSG00000281205_at -0.02085352

+...

+```

+

+The reason for doing this is to figure out which genes are related, and perhaps more importantly, what a cell is even doing.

+

+_Summary:_ new post, also, I’m bringing back the short section summaries.

+

+### Cell lines

+

+The first thing to do when trying to figure out what cells are doing is to choose a cell. There are all sorts of cells: healthy brain cells, cancerous blood cells, bruised skin cells, etc.

+

+For any experiment, you’ll need a control to eliminate noise and apply statistical tests for validity. If you don’t use a control, the effect you’re seeing may not even exist, and so for any experiment with cells, you will need a control cell.

+

+Cells often divide, which means that a cell, once chosen, will duplicate itself for you in the presence of the appropriate resources. Not all cells divide ad nauseam, which poses some challenges, but luckily many of the cells under study do.

+

+So, a _cell line_ is simply a set of cells that have all replicated from a specific chosen initial cell. Any set of cells from a cell line will be as identical as possible (unless you screwed up! geez). They will be the same type of cell with the same traits and behaviors, at least, as much as possible.

+

+_Summary:_ a cell line is a large number of cells that are as close to being the same as possible.

+

+### Perturbagens

+

+There are many things that might affect what a cell is doing: drugs, agitation, temperature, disease, cancer, gene splicing, small molecules (maybe you give a cell more iron or calcium or something), hormones, light, Jello, ennui, etc. Take a particular cell line and give some of its cells one of these _perturbagens_ (that is, perturb those cells in a specific way). Comparing them against control cells tells you what that kind of cell does differently in the face of that perturbagen.

+

+If you’d like to find out what exactly a certain type of cell does when you give it lemon lime soda, then you choose the right cell line, leave out some control cells and give the rest of the cells soda.

+

+Then, you measure gene expression intensities for both the control cells and the perturbed cells. The _differential expression_ of genes between the perturbed cells and the control cells is likely due to the introduction of the lemon lime soda.

+

+Genes that end up getting expressed _more_ in the presence of the soda are considered _up-regulated_, whereas genes that end up getting expressed _less_ are considered _down-regulated_. The degree to which a gene is up- or down-regulated indicates how much of an effect the soda may have had on that gene.
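As a rough sketch of the comparison just described, here it is in Go (the language used for code elsewhere in this document), assuming the values are normalized log-scale intensities like the table above, so that perturbed minus control is a log fold change. The gene IDs, the fixed threshold, and the function names are hypothetical; a real analysis would use replicates and a statistical test rather than a single cutoff.

```go
package main

import (
	"fmt"
	"sort"
)

// diffExpr returns perturbed minus control for every gene measured in both.
func diffExpr(control, perturbed map[string]float64) map[string]float64 {
	d := make(map[string]float64, len(control))
	for gene, c := range control {
		if p, ok := perturbed[gene]; ok {
			d[gene] = p - c
		}
	}
	return d
}

// regulated splits genes into up- and down-regulated lists using a fixed
// threshold on the change; each list is sorted by gene ID.
func regulated(d map[string]float64, threshold float64) (up, down []string) {
	for gene, v := range d {
		switch {
		case v > threshold:
			up = append(up, gene)
		case v < -threshold:
			down = append(down, gene)
		}
	}
	sort.Strings(up)
	sort.Strings(down)
	return up, down
}

func main() {
	control := map[string]float64{"GENE_A": 0.10, "GENE_B": 0.50, "GENE_C": 0.20}
	soda := map[string]float64{"GENE_A": 0.90, "GENE_B": 0.10, "GENE_C": 0.25}
	up, down := regulated(diffExpr(control, soda), 0.3)
	fmt.Println("up:", up, "down:", down) // up: [GENE_A] down: [GENE_B]
}
```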

+

+Of course, all of this has such a significant amount of experimental noise that you could find pretty much anything. You’ll need to replicate your experiment independently a few times before you publish that lemon lime soda causes increased expression in the [Sonic hedgehog gene][3].

+

+_Summary:_ A perturbagen is something you introduce/do to a cell to change its behavior, such as drugs or throwing it at a wall or something. The wall perturbagen.

+

+### Gene signature

+

+For a given change or perturbagen to a cell, we now have enough to compute lists of up-regulated and down-regulated genes and the magnitude change in expression for each gene.

+

+This gene expression pattern for some subset of important genes (perhaps the most changed in expression) is called a _gene signature_, and gene signatures are very useful. By comparing signatures, you can:

+

+ * identify or compare cell states

+ * find sets of positively or negatively correlated genes

+ * find similar disease signatures

+ * find similar drug signatures

+ * find drug signatures that might counteract opposite disease signatures.

+

+

+

+(That last bullet point is essentially where I’m headed with my research.)

+

+_Summary:_ a gene signature is a short summary of the most important gene expression differences a perturbagen causes in a cell.
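Picking a signature as “the most changed genes” can be sketched as a top-k selection by absolute change. This is one simple choice under assumptions (k, the input map of per-gene changes, and the signature helper are hypothetical); it is not how any particular published pipeline chooses its genes.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// signature keeps the k genes with the largest absolute change and splits
// them into up- and down-regulated lists, largest change first.
func signature(d map[string]float64, k int) (up, down []string) {
	genes := make([]string, 0, len(d))
	for g := range d {
		genes = append(genes, g)
	}
	sort.Slice(genes, func(i, j int) bool {
		ai, aj := math.Abs(d[genes[i]]), math.Abs(d[genes[j]])
		if ai != aj {
			return ai > aj
		}
		return genes[i] < genes[j] // tie-break so the result is deterministic
	})
	if k > len(genes) {
		k = len(genes)
	}
	for _, g := range genes[:k] {
		switch {
		case d[g] > 0:
			up = append(up, g)
		case d[g] < 0:
			down = append(down, g)
		}
	}
	return up, down
}

func main() {
	d := map[string]float64{"G1": 2.0, "G2": -3.0, "G3": 0.1, "G4": 1.5}
	up, down := signature(d, 3)
	fmt.Println(up, down) // [G1 G4] [G2]
}
```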

+

+### Drugs!

+

+The pharmaceutical industry is constantly on the lookout for new breakthrough drugs that might represent huge windfalls in cash, and drugs don’t always work as planned. Many drugs spend years in research and development, only to ultimately find poor efficacy or adoption. Sometimes drugs even become known [much more for their side-effects than their originally intended therapy][4].

+

+The practical upshot is that there are countless FDA-approved drugs, representing decades of work, that are simply underused or even unused entirely. These drugs have already cleared many challenging regulatory hurdles, but they are, quite literally, cures looking for a disease.

+

+If even just one of these drugs can be given a new lease on life for some yet-to-be-cured disease, then perhaps we can give some people new leases on life!

+

+_Summary:_ instead of developing new drugs, note that there are already lots of drugs that aren’t being used. Maybe we can find matching diseases!

+

+### The Connectivity Map project

+

+The [Broad Institute’s Connectivity Map project][5] isn’t particularly new anymore, but it represents a groundbreaking and promising idea: we can dump a bunch of signatures into a database and construct all sorts of new hypotheses we might not even have thought to check before.

+

+To prove out the usefulness of this idea, the Connectivity Map (or cmap) project chose 5 different cell lines (all cancer cells, which are easy to get to replicate!) and a library of FDA approved drugs, and then gave some cells these drugs.

+

+They then constructed a database of all of the signatures they computed for each possible perturbagen they measured. Finally, they constructed a web interface where a user can upload a gene signature and get a result list back of all of the signatures they collected, ordered by the most to least similar. You can totally go sign up and [try it out][5].

+

+This simple tool is surprisingly powerful. It allows you to find similar drugs to a drug you know, but it also allows you to find drugs that might counteract a disease you’ve created a signature for.
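A toy version of “upload a signature, get back a ranked list” might look like the following. The score is a crude up/down overlap count in [-1, 1], not the Connectivity Map’s actual Kolmogorov-Smirnov-based connectivity score, and the Signature type and drug names are hypothetical. A strongly negative score marks a reference signature that reverses the query, which is the “might counteract a disease” case.

```go
package main

import (
	"fmt"
	"sort"
)

// Signature holds the gene IDs that went up and down under a perturbagen.
type Signature struct {
	Up, Down []string
}

// overlap counts how many genes in a also appear in b.
func overlap(a, b []string) int {
	in := make(map[string]bool, len(b))
	for _, g := range b {
		in[g] = true
	}
	n := 0
	for _, g := range a {
		if in[g] {
			n++
		}
	}
	return n
}

// similarity is +1 when ref moves every query gene the same way and -1
// when it moves every query gene the opposite way.
func similarity(query, ref Signature) float64 {
	total := len(query.Up) + len(query.Down)
	if total == 0 {
		return 0
	}
	agree := overlap(query.Up, ref.Up) + overlap(query.Down, ref.Down)
	oppose := overlap(query.Up, ref.Down) + overlap(query.Down, ref.Up)
	return float64(agree-oppose) / float64(total)
}

// rank orders reference signatures from most similar to most opposite.
func rank(query Signature, refs map[string]Signature) []string {
	names := make([]string, 0, len(refs))
	for name := range refs {
		names = append(names, name)
	}
	sort.Slice(names, func(i, j int) bool {
		si, sj := similarity(query, refs[names[i]]), similarity(query, refs[names[j]])
		if si != sj {
			return si > sj
		}
		return names[i] < names[j]
	})
	return names
}

func main() {
	disease := Signature{Up: []string{"A", "B"}, Down: []string{"C", "D"}}
	drugs := map[string]Signature{
		"drugX": {Up: []string{"C", "D"}, Down: []string{"A", "B"}},
		"drugY": {Up: []string{"A", "B"}, Down: []string{"C", "D"}},
		"drugZ": {Up: []string{"E"}},
	}
	fmt.Println(rank(disease, drugs)) // [drugY drugZ drugX]
}
```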

+

+Ultimately, the project led to [a number of successful applications][6]. So useful was it that the Broad Institute has doubled down and created the much larger and more comprehensive [LINCS Project][7] that targets an order of magnitude more cell lines (77) and more perturbagens (42,532, compared to cmap’s 6100). You can sign up and use that one too!

+

+_Summary_: building a system that supports querying signature connections has already proved to be super useful.

+

+### Whew

+

+Alright, I wrote most of this on a plane yesterday but since I should now be spending time with family I’m going to cut it short here.

+

+Stay tuned for next week!

+

+--------------------------------------------------------------------------------

+

+via: https://www.jtolio.com/2015/11/research-log-gene-signatures-and-connectivity-map

+

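+Author: [jtolio.com][a]

+Topic selection: [lujun9972][b]

+Translator: [Translator ID](https://github.com/译者ID)

+Proofreader: [Proofreader ID](https://github.com/校对者ID)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)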

+

+[a]: https://www.jtolio.com/

+[b]: https://github.com/lujun9972

+[1]: https://www.jtolio.com/writing/2015/11/research-log-cell-states-and-microarrays/

+[2]: https://www.jtolio.com/writing/2015/11/research-log-r-and-more-microarrays/

+[3]: https://en.wikipedia.org/wiki/Sonic_hedgehog

+[4]: https://en.wikipedia.org/wiki/Sildenafil#History

+[5]: https://www.broadinstitute.org/cmap/

+[6]: https://www.broadinstitute.org/cmap/publications.jsp

+[7]: http://www.lincscloud.org/

diff --git a/sources/tech/20160302 Go channels are bad and you should feel bad.md b/sources/tech/20160302 Go channels are bad and you should feel bad.md

new file mode 100644

index 0000000000..0ad2a5ed97

--- /dev/null

+++ b/sources/tech/20160302 Go channels are bad and you should feel bad.md

@@ -0,0 +1,443 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Go channels are bad and you should feel bad)

+[#]: via: (https://www.jtolio.com/2016/03/go-channels-are-bad-and-you-should-feel-bad)

+[#]: author: (jtolio.com https://www.jtolio.com/)

+

+Go channels are bad and you should feel bad

+======

+

+_Update: If you’re coming to this blog post from a compendium titled “Go is not good,” I want to make it clear that I am ashamed to be on such a list. Go is absolutely the least worst programming language I’ve ever used. At the time I wrote this, I wanted to curb a trend I was seeing, namely, overuse of one of the more warty parts of Go. I still think channels could be much better, but overall, Go is wonderful. It’s like if your favorite toolbox had [this][1] in it; the tool can have uses (even if it could have had more uses), and it can still be your favorite toolbox!_

+

+_Update 2: I would be remiss if I didn’t point out this excellent survey of real issues: [Understanding Real-World Concurrency Bugs In Go][2]. A significant finding of this survey is that… Go channels cause lots of bugs._

+

+I’ve been using Google’s [Go programming language][3] on and off since mid-to-late 2010, and I’ve had legitimate product code written in Go for [Space Monkey][4] since January 2012 (before Go 1.0!). My initial experience with Go was back when I was researching Hoare’s [Communicating Sequential Processes][5] model of concurrency and the [π-calculus][6] under [Matt Might][7]’s [UCombinator research group][8] as part of my ([now redirected][9]) PhD work to better enable multicore development. Go was announced right then (how serendipitous!) and I immediately started kicking tires.

+

+It quickly became a core part of Space Monkey development. Our production systems at Space Monkey currently account for over 425k lines of pure Go (_not_ counting all of our vendored libraries, which would make it just shy of 1.5 million lines), so not the most Go you’ll ever see, but for the relatively young language we’re heavy users. We’ve [written about our Go usage][10] before. We’ve open-sourced some fairly heavily used libraries; many people seem to be fans of our [OpenSSL bindings][11] (which are faster than [crypto/tls][12], but please keep openssl itself up-to-date!), our [error handling library][13], [logging library][14], and [metric collection library/zipkin client][15]. We use Go, we love Go, we think it’s the least bad programming language for our needs we’ve used so far.

+

+Although I don’t think I can talk myself out of mentioning my widely avoided [goroutine-local-storage library][16] here either (it’s a hack that you shouldn’t use, but it’s a beautiful hack), hopefully my other experience will suffice as valid credentials that I kind of know what I’m talking about before I explain my deliberately inflammatory post title.

+

+![][17]

+

+### Wait, what?

+

+If you ask the proverbial programmer on the street what’s so special about Go, she’ll most likely tell you that Go is best known for channels and goroutines. Go’s theoretical underpinnings are heavily based in Hoare’s CSP model, which is itself incredibly fascinating, and which I firmly believe has much more to yield than we’ve appropriated so far.

+

+CSP and the π-calculus both use communication as the core synchronization primitive, so it makes sense that Go would have channels. Rob Pike has been fascinated with CSP (with good reason) for a [considerable][18] [while][19] [now][20].

+

+But from a pragmatic perspective (which Go prides itself on), Go got channels wrong. Channels as implemented are pretty much a solid anti-pattern in my book at this point. Why? Dear reader, let me count the ways.

+

+#### You probably won’t end up using just channels.

+

+Hoare’s Communicating Sequential Processes is a computational model where essentially the only synchronization primitive is sending or receiving on a channel. As soon as you use a mutex, semaphore, or condition variable, bam, you’re no longer in pure CSP land. Go programmers often tout this model and philosophy through the chanting of the [cached thought][21] “[share memory by communicating][22].”

+

+So let’s try and write a small program using just CSP in Go! Let’s make a high score receiver. All we will do is keep track of the largest high score value we’ve seen. That’s it.

+

+First, we’ll make a `Game` struct.

+

+```

+type Game struct {

+ bestScore int

+ scores chan int

+}

+```

+

+`bestScore` isn’t going to be protected by a mutex! That’s fine, because we’ll simply have one goroutine manage its state and receive new scores over a channel.

+

+```

+func (g *Game) run() {

+ for score := range g.scores {

+ if g.bestScore < score {

+ g.bestScore = score

+ }

+ }

+}

+```

+

+Okay, now we’ll make a helpful constructor to start a game.

+

+```

+func NewGame() (g *Game) {

+ g = &Game{

+ bestScore: 0,

+ scores: make(chan int),

+ }

+ go g.run()

+ return g

+}

+```

+

+Next, let’s assume someone has given us a `Player` that can return scores. It might also return an error, cause hey maybe the incoming TCP stream can die or something, or the player quits.

+

+```

+type Player interface {

+ NextScore() (score int, err error)

+}

+```

+

+To handle the player, we’ll assume all errors are fatal and pass received scores down the channel.

+

+```

+func (g *Game) HandlePlayer(p Player) error {

+ for {

+ score, err := p.NextScore()

+ if err != nil {

+ return err

+ }

+ g.scores <- score

+ }

+}

+```

+

+Yay! Okay, we have a `Game` type that can keep track of the highest score a `Player` receives in a thread-safe way.

+

+You wrap up your development and you’re on your way to having customers. You make this game server public and you’re incredibly successful! Lots of games are being created with your game server.

+

+Soon, you discover people sometimes leave your game. Lots of games no longer have any players playing, but nothing stopped the game loop. You are getting overwhelmed by dead `(*Game).run` goroutines.
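The leak is easy to observe with runtime.NumGoroutine. This sketch copies the channel-based Game from above; leakedAfter is a hypothetical helper, and each abandoned game leaves one run goroutine parked forever in `range g.scores` because nothing ever closes the channel.

```go
package main

import (
	"fmt"
	"runtime"
)

type Game struct {
	bestScore int
	scores    chan int
}

func (g *Game) run() {
	for score := range g.scores {
		if g.bestScore < score {
			g.bestScore = score
		}
	}
}

func NewGame() *Game {
	g := &Game{scores: make(chan int)}
	go g.run()
	return g
}

// leakedAfter creates n games, drops them immediately, and reports how many
// extra goroutines are still alive afterwards.
func leakedAfter(n int) int {
	before := runtime.NumGoroutine()
	for i := 0; i < n; i++ {
		NewGame() // the *Game is discarded, but its run goroutine is not
	}
	return runtime.NumGoroutine() - before
}

func main() {
	fmt.Println("goroutines still alive:", leakedAfter(1000))
}
```

The garbage collector cannot reclaim a goroutine blocked on a channel, even when nothing else references that channel, which is why the count only grows.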

+

+**Challenge:** fix the goroutine leak above without mutexes or panics. For real, scroll up to the above code and come up with a plan for fixing this problem using just channels.

+

+I’ll wait.

+

+For what it’s worth, it totally can be done with channels only, but observe the simplicity of the following solution which doesn’t even have this problem:

+

+```

+type Game struct {

+ mtx sync.Mutex

+ bestScore int

+}

+

+func NewGame() *Game {

+ return &Game{}

+}

+

+func (g *Game) HandlePlayer(p Player) error {

+ for {

+ score, err := p.NextScore()

+ if err != nil {

+ return err

+ }

+ g.mtx.Lock()

+ if g.bestScore < score {

+ g.bestScore = score

+ }

+ g.mtx.Unlock()

+ }

+}

+```

+

+Which one would you rather work on? Don’t be deceived into thinking that the channel solution somehow makes this more readable and understandable in more complex cases. Teardown is very hard. This sort of teardown is just a piece of cake with a mutex, but the hardest thing to work out with Go-specific channels only. Also, if anyone replies that channels sending channels is easier to reason about here it will cause me an immediate head-to-desk motion.

+

+Importantly, this particular case might actually be _easily_ solved _with channels_ with some runtime assistance Go doesn’t provide! Unfortunately, as it stands, there are a surprising number of problems that are solved better with traditional synchronization primitives than with Go’s version of CSP. We’ll talk later about what Go could have done to make this case easier.

+

+**Exercise:** Still skeptical? Try making both solutions above (channel-only vs. mutex-only) stop asking for scores from `Players` once `bestScore` is 100 or greater. Go ahead and open your text editor. This is a small, toy problem.

+

+The summary here is that you will be using traditional synchronization primitives in addition to channels if you want to do anything real.

+

+#### Channels are slower than implementing it yourself

+

+One of the things I assumed about Go being so heavily based in CSP theory is that there should be some pretty killer scheduler optimizations the runtime can make with channels. Perhaps channels aren’t always the most straightforward primitive, but surely they’re efficient and fast, right?

+

+![][23]

+

+As [Dustin Hiatt][24] points out on [Tyler Treat’s post about Go][25],

+

+> Behind the scenes, channels are using locks to serialize access and provide threadsafety. So by using channels to synchronize access to memory, you are, in fact, using locks; locks wrapped in a threadsafe queue. So how do Go’s fancy locks compare to just using mutex’s from their standard library `sync` package? The following numbers were obtained by using Go’s builtin benchmarking functionality to serially call Put on a single set of their respective types.

+

+```
+BenchmarkSimpleSet-8            3000000       391 ns/op
+BenchmarkSimpleChannelSet-8     1000000      1699 ns/op
+```

+

+It’s a similar story with unbuffered channels, or even the same test under contention instead of run serially.
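+
+To see the shape of that comparison yourself, here is a rough sketch of the two kinds of set being compared. The type and method names (`MutexSet`, `ChannelSet`, `Put`, `Len`) are assumptions for illustration, not the code behind the numbers quoted above, and the timing loop in `main` is a crude stand-in for Go's `testing` benchmarks, so expect your numbers to differ.
+
+```go
+package main
+
+import (
+	"fmt"
+	"sync"
+	"time"
+)
+
+// MutexSet protects a map with a sync.Mutex.
+type MutexSet struct {
+	mtx   sync.Mutex
+	items map[int]struct{}
+}
+
+func NewMutexSet() *MutexSet { return &MutexSet{items: map[int]struct{}{}} }
+
+func (s *MutexSet) Put(v int) {
+	s.mtx.Lock()
+	s.items[v] = struct{}{}
+	s.mtx.Unlock()
+}
+
+func (s *MutexSet) Len() int {
+	s.mtx.Lock()
+	defer s.mtx.Unlock()
+	return len(s.items)
+}
+
+// ChannelSet gives the map to a single owner goroutine; every operation is
+// a message to that goroutine.
+type ChannelSet struct {
+	puts chan int
+	lens chan chan int
+	quit chan struct{}
+}
+
+func NewChannelSet() *ChannelSet {
+	s := &ChannelSet{puts: make(chan int), lens: make(chan chan int), quit: make(chan struct{})}
+	go func() {
+		items := map[int]struct{}{}
+		for {
+			select {
+			case v := <-s.puts:
+				items[v] = struct{}{}
+			case reply := <-s.lens:
+				reply <- len(items)
+			case <-s.quit:
+				return
+			}
+		}
+	}()
+	return s
+}
+
+func (s *ChannelSet) Put(v int) { s.puts <- v }
+
+func (s *ChannelSet) Len() int {
+	reply := make(chan int)
+	s.lens <- reply
+	return <-reply
+}
+
+// Close stops the owner goroutine; forget to call it and the goroutine leaks.
+func (s *ChannelSet) Close() { close(s.quit) }
+
+// timePuts serially calls put n times and prints the average cost per call.
+func timePuts(name string, put func(int), n int) {
+	start := time.Now()
+	for i := 0; i < n; i++ {
+		put(i)
+	}
+	fmt.Printf("%-8s %v/op\n", name, time.Since(start)/time.Duration(n))
+}
+
+func main() {
+	const n = 1000000
+	ms := NewMutexSet()
+	timePuts("mutex", ms.Put, n)
+
+	cs := NewChannelSet()
+	defer cs.Close()
+	timePuts("channel", cs.Put, n)
+}
+```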

+

+Perhaps the Go scheduler will improve, but in the meantime, good old mutexes and condition variables are very good, efficient, and fast. If you want performance, you use the tried and true methods.

+

+#### Channels don’t compose well with other concurrency primitives

+

+Alright, so hopefully I have convinced you that you’ll at least be interacting with primitives besides channels sometimes. The standard library certainly seems to prefer traditional synchronization primitives over channels.

+

+Well guess what, it’s actually somewhat challenging to use channels alongside mutexes and condition variables correctly!

+

+One of the interesting things about channels that makes a lot of sense coming from CSP is that channel sends are synchronous. A channel send and channel receive are intended to be synchronization barriers, and the send and receive should happen at the same virtual time. That’s wonderful if you’re in well-executed CSP-land.

+

+![][26]

+

+Pragmatically, Go channels also come in a buffered variety. You can allocate a fixed amount of space to account for possible buffering so that sends and receives are disparate events, but the buffer size is capped. Go doesn’t provide a way to have arbitrarily sized buffers - you have to allocate the buffer size in advance. _This is fine_, I’ve seen people argue on the mailing list, _because memory is bounded anyway._

+

+Wat.

+

+This is a bad answer. There are all sorts of reasons to use an arbitrarily buffered channel. If we knew everything up front, why even have `malloc`?
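+
+You can approximate an arbitrarily buffered channel today, but only by paying for exactly the kind of extra goroutine discussed below. This sketch assumes Go 1.18+ generics; `Unbounded` is a hypothetical helper, not a standard-library API. A forwarding goroutine holds pending values in a slice, so a send on `in` only waits for that goroutine, never for the consumer.
+
+```go
+package main
+
+import "fmt"
+
+// Unbounded returns the two ends of a channel with no fixed buffer limit.
+// Closing in lets the forwarder drain its queue and then close out.
+func Unbounded[T any]() (in chan<- T, out <-chan T) {
+	inCh := make(chan T)
+	outCh := make(chan T)
+	go func() {
+		defer close(outCh)
+		var queue []T
+		recv := inCh
+		for recv != nil || len(queue) > 0 {
+			// A nil channel is never ready in a select, so only offer a
+			// send when something is queued.
+			var send chan T
+			var next T
+			if len(queue) > 0 {
+				send = outCh
+				next = queue[0]
+			}
+			select {
+			case v, ok := <-recv:
+				if !ok {
+					recv = nil // input closed: stop receiving, keep draining
+					continue
+				}
+				queue = append(queue, v)
+			case send <- next:
+				queue = queue[1:]
+			}
+		}
+	}()
+	return inCh, outCh
+}
+
+func main() {
+	in, out := Unbounded[int]()
+	for i := 1; i <= 5; i++ {
+		in <- i // succeeds even though nobody is reading out yet
+	}
+	close(in)
+	for v := range out {
+		fmt.Print(v, " ")
+	}
+	fmt.Println()
+}
+```
+
+Memory use grows without bound if the consumer falls behind, which is the concern behind the "memory is bounded anyway" argument.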

+

+Not having arbitrarily buffered channels means that a naive send on _any_ channel could block at any time. You want to send on a channel and update some other bookkeeping under a mutex? Careful! Your channel send might block!

+

+```

+// ...

+s.mtx.Lock()

+// ...

+s.ch <- val // might block!

+s.mtx.Unlock()

+// ...

+```

+

+This is a recipe for dining philosopher dinner fights. If you take a lock, you should quickly update state and release it and not do anything blocking under the lock if possible.
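+
+One way to follow that advice is to do the bookkeeping under the lock, copy out whatever you need, unlock, and only then send. This is a sketch; `Server`, `Record`, and `Count` are hypothetical names. Note the trade-off: with several concurrent callers, values can now arrive on the channel in a different order than the counter was incremented.
+
+```go
+package main
+
+import (
+	"fmt"
+	"sync"
+)
+
+// Server is a hypothetical type that keeps a counter and publishes updates.
+type Server struct {
+	mtx     sync.Mutex
+	count   int
+	updates chan int
+}
+
+// Record bumps the counter under the lock but sends after unlocking, so a
+// slow receiver blocks only this caller, not everyone waiting on s.mtx.
+func (s *Server) Record() {
+	s.mtx.Lock()
+	s.count++
+	val := s.count
+	s.mtx.Unlock()
+
+	s.updates <- val // may still block, but no lock is held here
+}
+
+// Count reads the counter; it never waits on the channel.
+func (s *Server) Count() int {
+	s.mtx.Lock()
+	defer s.mtx.Unlock()
+	return s.count
+}
+
+func main() {
+	s := &Server{updates: make(chan int)}
+	go s.Record() // blocks on the send until main receives
+	fmt.Println("update:", <-s.updates)
+	fmt.Println("count:", s.Count())
+}
+```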

+

+There is a way to do a non-blocking send on a channel in Go, but it’s not the default behavior. Assume we have a channel `ch := make(chan int)` and we want to send the value `1` on it without blocking. Here is the minimum amount of typing you have to do to send without blocking:

+

+```

+select {

+case ch <- 1: // it sent

+default: // it didn't

+}

+```

+

+This isn’t what naturally leaps to mind for beginning Go programmers.
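+
+If you need this in more than one place, it can be wrapped once. A small sketch, assuming Go 1.18+ generics; `TrySend` is a hypothetical helper name, not part of the standard library:
+
+```go
+package main
+
+import "fmt"
+
+// TrySend sends v on ch only if that can happen immediately, and reports
+// whether it did. It never blocks.
+func TrySend[T any](ch chan<- T, v T) bool {
+	select {
+	case ch <- v:
+		return true
+	default:
+		return false
+	}
+}
+
+func main() {
+	unbuffered := make(chan int)
+	fmt.Println(TrySend(unbuffered, 1)) // false: no receiver is waiting
+
+	buffered := make(chan int, 1)
+	fmt.Println(TrySend(buffered, 1)) // true: there was room in the buffer
+	fmt.Println(TrySend(buffered, 2)) // false: the buffer is full
+}
+```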

+

+The summary is that because many operations on channels block, it takes careful reasoning about philosophers and their dining to successfully use channel operations alongside and under mutex protection, without causing deadlocks.

+

+#### Callbacks are strictly more powerful and don’t require unnecessary goroutines.

+

+![][27]

+

+Whenever an API uses a channel, or whenever I point out that a channel makes something hard, someone invariably points out that I should just spin up a goroutine to read off the channel and make whatever translation or fix I need as it reads off the channel.

+

+Um, no. What if my code is in a hot path? There are very few instances that require a channel, and if your API could have been designed with mutexes, semaphores, and callbacks and no additional goroutines (because all event edges are triggered by API events), then using a channel forces me to add another stack of memory allocation to my resource usage. Goroutines are much lighter weight than threads, yes, but lighter weight doesn’t mean the lightest weight possible.

+

+As I’ve formerly [argued in the comments on an article about using channels][28] (lol the internet), your API can _always_ be more general, _always_ more flexible, and take drastically less resources if you use callbacks instead of channels. “Always” is a scary word, but I mean it here. There’s proof-level stuff going on.

+

+If someone provides a callback-based API to you and you need a channel, you can provide a callback that sends on a channel with little overhead and full flexibility.

+

+If, on the other hand, someone provides a channel-based API to you and you need a callback, you have to spin up a goroutine to read off the channel _and_ you have to hope that no one tries to send more on the channel after you’re done reading, or you’ll cause blocked goroutine leaks.
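+
+Here is a small sketch of the first direction. `Watcher`, `Subscribe`, and `Emit` are a hypothetical callback-based API invented for illustration. A caller who just wants to log registers a plain function; a caller who wants a channel registers a callback that sends on one.
+
+```go
+package main
+
+import (
+	"fmt"
+	"sync"
+)
+
+// Watcher is a hypothetical callback-based event API.
+type Watcher struct {
+	mtx  sync.Mutex
+	subs []func(event string)
+}
+
+// Subscribe registers cb to be called for every future event.
+func (w *Watcher) Subscribe(cb func(event string)) {
+	w.mtx.Lock()
+	w.subs = append(w.subs, cb)
+	w.mtx.Unlock()
+}
+
+// Emit calls every subscriber. It copies the list and releases the lock
+// first, so a slow callback never runs while w.mtx is held.
+func (w *Watcher) Emit(event string) {
+	w.mtx.Lock()
+	subs := append([]func(string){}, w.subs...)
+	w.mtx.Unlock()
+	for _, cb := range subs {
+		cb(event)
+	}
+}
+
+func main() {
+	var w Watcher
+
+	// Callback user: no extra goroutine needed.
+	w.Subscribe(func(e string) { fmt.Println("log:", e) })
+
+	// Channel user: adapt with a callback that sends on a channel.
+	events := make(chan string, 16)
+	w.Subscribe(func(e string) { events <- e })
+
+	w.Emit("started")
+	fmt.Println("from channel:", <-events)
+}
+```
+
+The buffer matters: if it fills up, the sending callback blocks `Emit`, so a real API would need to document what callbacks are allowed to do.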

+

+For a super simple real-world example, check out the [context interface][29] (which incidentally is an incredibly useful package and what you should be using instead of [goroutine-local storage][16]):

+

+```go
+type Context interface {
+	...
+	// Done returns a channel that closes when this work unit should be canceled.
+	Done() <-chan struct{}
+
+	// Err returns a non-nil error when the Done channel is closed
+	Err() error
+	...
+}
+```

+

+Imagine all you want to do is log the corresponding error when the `Done()` channel fires. What do you have to do? If you don’t have a good place you’re already selecting on a channel, you have to spin up a goroutine to deal with it:

+

+```go
+go func() {
+	<-ctx.Done()
+	logger.Errorf("canceled: %v", ctx.Err())
+}()
+```

+

+What if `ctx` gets garbage collected without closing the channel `Done()` returned? Whoops! Just leaked a goroutine!

+

+Now imagine we changed `Done`’s signature:

+

+```

+// Done calls cb when this work unit should be canceled.

+Done(cb func())

+```

+

+First off, logging is so easy now. Check it out: `ctx.Done(func() { logger.Errorf("canceled: %v", ctx.Err()) })`. But let’s say you really do need some select behavior. You can just call it like this:

+

+```

+ch := make(chan struct{})

+ctx.Done(func() { close(ch) })

+```

+

+Voila! No expressiveness lost by using a callback instead. `ch` works like the channel `Done()` used to return, and in the logging case we didn’t need to spin up a whole new stack. I got to keep my stack traces (if our log package is inclined to use them); I got to avoid another stack allocation and another goroutine to give to the scheduler.
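+
+For what it's worth, Go 1.21 later added a callback-style hook along these lines: `context.AfterFunc(ctx, f)` arranges for `f` to be called after `ctx` is done and returns a `stop` function that unregisters it. It runs `f` in a new goroutine once the context is done, so it is not free, but it saves you from writing the wait-on-`Done()` goroutine yourself. A minimal sketch of the logging case; `logOnCancel` is a hypothetical helper name:
+
+```go
+package main
+
+import (
+	"context"
+	"fmt"
+)
+
+// logOnCancel arranges for logf to be called once ctx is done. The returned
+// stop function unregisters the callback if it has not run yet.
+func logOnCancel(ctx context.Context, logf func(string)) (stop func() bool) {
+	return context.AfterFunc(ctx, func() {
+		logf(fmt.Sprintf("canceled: %v", ctx.Err()))
+	})
+}
+
+func main() {
+	ctx, cancel := context.WithCancel(context.Background())
+
+	logged := make(chan string, 1)
+	logOnCancel(ctx, func(msg string) { logged <- msg })
+
+	cancel()
+	fmt.Println(<-logged) // canceled: context canceled
+}
+```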

+

+Next time you use a channel, ask yourself if there are goroutines you could eliminate by using mutexes and condition variables instead. If the answer is yes, your code will be more efficient if you change it. And if you’re trying to use channels just to be able to use the `range` keyword over a collection, I’m going to have to ask you to put your keyboard away or just go back to writing Python books.

+

+![more like Zooey De-channel, amirite][30]

+

+#### The channel API is inconsistent and just cray-cray

+

+Closing or sending on a closed channel panics! Why? If you want to close a channel, you need to either synchronize its closed state externally (with mutexes and so forth that don’t compose well!) so that other writers don’t write to or close a closed channel, or just charge forward and close or write to closed channels and expect you’ll have to recover any raised panics.

+

+This is such bizarre behavior. Almost every other operation in Go has a way to avoid a panic (type assertions have the `, ok =` pattern, for example), but with channels you just get to deal with it.
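+
+Here is roughly what "synchronize its closed state externally" ends up looking like. This is a sketch assuming Go 1.18+ generics; `SafeChan`, `TrySend`, and `Close` are hypothetical names. It keeps a `closed` flag under a mutex and, following the earlier advice about not blocking under a lock, never blocks while holding that mutex, which is why its send is non-blocking.
+
+```go
+package main
+
+import (
+	"fmt"
+	"sync"
+)
+
+// SafeChan is a hypothetical wrapper whose Close is idempotent and whose
+// TrySend reports failure instead of panicking after Close.
+type SafeChan[T any] struct {
+	mtx    sync.Mutex
+	closed bool
+	ch     chan T
+}
+
+func NewSafeChan[T any](size int) *SafeChan[T] {
+	return &SafeChan[T]{ch: make(chan T, size)}
+}
+
+// C exposes the receive side.
+func (s *SafeChan[T]) C() <-chan T { return s.ch }
+
+// Close closes the channel the first time and does nothing afterwards.
+func (s *SafeChan[T]) Close() {
+	s.mtx.Lock()
+	defer s.mtx.Unlock()
+	if !s.closed {
+		s.closed = true
+		close(s.ch)
+	}
+}
+
+// TrySend returns false if the channel is closed or has no room. It never
+// blocks while holding s.mtx.
+func (s *SafeChan[T]) TrySend(v T) bool {
+	s.mtx.Lock()
+	defer s.mtx.Unlock()
+	if s.closed {
+		return false
+	}
+	select {
+	case s.ch <- v:
+		return true
+	default:
+		return false
+	}
+}
+
+func main() {
+	s := NewSafeChan[int](1)
+	fmt.Println(s.TrySend(1)) // true
+	s.Close()
+	s.Close()                 // no panic the second time
+	fmt.Println(s.TrySend(2)) // false instead of a panic
+	v, ok := <-s.C()
+	fmt.Println(v, ok) // 1 true
+}
+```
+
+The cost is a mutex on every send, and a blocking send is off the table unless you are willing to hold the lock while blocked, which is exactly the composition problem described earlier.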

+

+Okay, so when a send will fail, channels panic. I guess that makes some kind of sense. But unlike almost everything else with nil values, sending to a nil channel won’t panic. Instead, it will block forever! That’s pretty counter-intuitive. That might be useful behavior, just like having a can-opener attached to your weed-whacker might be useful (and found in Skymall), but it’s certainly unexpected. Unlike interacting with nil maps (which do implicit pointer dereferences), nil interfaces (implicit pointer dereferences), unchecked type assertions, and all sorts of other things, nil channels exhibit actual channel behavior, as if a brand new channel was just instantiated for this operation.

+

+Receives are slightly nicer. What happens when you receive on a closed channel? Well, that works - you get a zero value. Okay that makes sense I guess. Bonus! Receives allow you to do a `, ok =`-style check if the channel was open when you received your value. Thank heavens we get `, ok =` here.

+

+But what happens if you receive from a nil channel? _Also blocks forever!_ Yay! Don’t try to use the fact that your channel is nil to keep track of whether you closed it!
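+
+A quick way to see these rules for yourself: the sketch below uses a `select` with a `default` case so that the nil-channel case reports "would block" instead of hanging, and `recover` to observe the panic from sending on a closed channel. The helper names `tryRecv` and `sendPanics` are made up for this example.
+
+```go
+package main
+
+import "fmt"
+
+// tryRecv reports what a receive on ch would do right now: ready is false
+// if it would block; otherwise v and ok are what the receive returned.
+func tryRecv(ch <-chan int) (v int, ok, ready bool) {
+	select {
+	case v, ok = <-ch:
+		return v, ok, true
+	default:
+		return 0, false, false
+	}
+}
+
+// sendPanics reports whether sending on ch panicked. ch must be closed or
+// have buffer room, otherwise the send simply blocks.
+func sendPanics(ch chan int) (panicked bool) {
+	defer func() { panicked = recover() != nil }()
+	ch <- 1
+	return false
+}
+
+func main() {
+	var nilCh chan int
+	fmt.Println(tryRecv(nilCh)) // 0 false false: a nil channel is never ready
+
+	ch := make(chan int, 1)
+	ch <- 42
+	close(ch)
+	fmt.Println(tryRecv(ch)) // 42 true true: buffered values drain first
+	fmt.Println(tryRecv(ch)) // 0 false true: then zero values with ok == false
+
+	fmt.Println(sendPanics(ch)) // true: sending on a closed channel panics
+}
+```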

+

+### What are channels good for?

+

+Of course channels are good for some things (they are a generic container after all), and there are certain things you can only do with them (`select`).

+

+#### They are another special-cased generic data structure

+

+Go programmers are so used to arguments about generics that I can feel the PTSD coming on just by bringing up the word. I’m not here to talk about it so wipe the sweat off your brow and let’s keep moving.

+

+Whatever your opinion of generics is, Go’s maps, slices, and channels are data structures that support generic element types, because they’ve been special-cased into the language.

+

+In a language that doesn’t allow you to write your own generic containers, _anything_ that allows you to better manage collections of things is valuable. Here, channels are a thread-safe data structure that supports arbitrary value types.

+

+So that’s useful! That can save some boilerplate I suppose.

+

+I’m having trouble counting this as a win for channels.

+

+#### Select

+

+The main thing you can do with channels is the `select` statement. Here you can wait on a fixed number of inputs for events. It’s kind of like epoll, but you have to know upfront how many sockets you’re going to be waiting on.

+

+This is truly a useful language feature. Channels would be a complete wash if not for `select`. But holy smokes, let me tell you about the first time you decide you might need to select on multiple things but you don’t know how many and you have to use `reflect.Select`.
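+
+For the curious, this is roughly what that looks like: you build a slice of `reflect.SelectCase` values at run time and hand it to `reflect.Select`, which returns the index of the case that fired, the received value as a `reflect.Value`, and whether the channel was still open. `firstReady` is a hypothetical helper for this sketch.
+
+```go
+package main
+
+import (
+	"fmt"
+	"reflect"
+)
+
+// firstReady blocks until one of chans can be received from, then returns
+// its index, the value, and false if that channel was closed.
+func firstReady(chans []chan int) (index, value int, ok bool) {
+	cases := make([]reflect.SelectCase, len(chans))
+	for i, ch := range chans {
+		cases[i] = reflect.SelectCase{Dir: reflect.SelectRecv, Chan: reflect.ValueOf(ch)}
+	}
+	chosen, recv, recvOK := reflect.Select(cases)
+	if !recvOK {
+		return chosen, 0, false
+	}
+	return chosen, int(recv.Int()), true
+}
+
+func main() {
+	chans := make([]chan int, 3)
+	for i := range chans {
+		chans[i] = make(chan int, 1)
+	}
+	chans[2] <- 7
+	fmt.Println(firstReady(chans)) // 2 7 true
+}
+```
+
+Compared with a plain `select`, you lose static typing on the values and pay for reflection on every call.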

+

+### How could channels be better?

+

+It’s really tough to say what the most tactical thing the Go language team could do for Go 2.0 is (the Go 1.0 compatibility guarantee is good but hand-tying), but that won’t stop me from making some suggestions.

+

+#### Select on condition variables!

+

+We could just obviate the need for channels! This is where I propose we get rid of some sacred cows, but let me ask you this, how great would it be if you could select on any custom synchronization primitive? (A: So great.) If we had that, we wouldn’t need channels at all.

+

+#### GC could help us?

+

+In the very first example, we could easily solve the high score server cleanup with channels if we were able to use directionally-typed channel garbage collection to help us clean up.

+

+![][31]

+

+As you know, Go has directionally-typed channels. You can have a channel type that only supports reading (`<-chan`) and a channel type that only supports writing (`chan<-`). Great!

+

+Go also has garbage collection. It’s clear that certain kinds of bookkeeping are just too onerous and we shouldn’t make the programmer deal with them. We clean up unused memory! Garbage collection is useful and neat.

+

+So why not help clean up unused or deadlocked channel reads? Instead of having `make(chan Whatever)` return one bidirectional channel, have it return two single-direction channels (`chanReader, chanWriter := make(chan Type)`).

+

+Let’s reconsider the original example:

+

+```go
+type Game struct {
+	bestScore int
+	scores    chan<- int
+}
+
+func run(bestScore *int, scores <-chan int) {
+	// we don't keep a reference to a *Game directly because then we'd be holding
+	// onto the send side of the channel.
+	for score := range scores {
+		if *bestScore < score {
+			*bestScore = score
+		}
+	}
+}
+
+func NewGame() (g *Game) {
+	// this make(chan) return style is a proposal!
+	scoreReader, scoreWriter := make(chan int)
+	g = &Game{
+		bestScore: 0,
+		scores:    scoreWriter,
+	}
+	go run(&g.bestScore, scoreReader)
+	return g
+}
+
+func (g *Game) HandlePlayer(p Player) error {
+	for {
+		score, err := p.NextScore()
+		if err != nil {
+			return err
+		}
+		g.scores <- score
+	}
+}
+```

+

+If garbage collection closed a channel when we could prove no more values are ever coming down it, this solution would be completely fixed. Yes yes, the comment in `run` is indicative of the existence of a rather large gun aimed at your foot, but at least the problem is easily solvable now, whereas it really wasn’t before. Furthermore, a smart compiler could probably make appropriate proofs to reduce the damage from said foot-gun.

+

+#### Other smaller issues

+

+ * **Dup channels?** \- If we could use an equivalent of the `dup` syscall on channels, then we could also solve the multiple producer problem quite easily. Each producer could close their own `dup`-ed channel without ruining the other producers.

+ * **Fix the channel API!** \- Close isn’t idempotent? Send on closed channel panics with no way to avoid it? Ugh!

+ * **Arbitrarily buffered channels** \- If we could make buffered channels with no fixed buffer size limit, then we could make channels that don’t block.
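+
+Until something like `dup` exists, the usual workaround for the multiple-producer problem is to count producers instead of letting any of them close the shared channel. In this sketch (the `fanIn` name is hypothetical) each producer only reports that it is finished via `sync.WaitGroup`, and one extra goroutine closes the channel after all of them have returned.
+
+```go
+package main
+
+import (
+	"fmt"
+	"sync"
+)
+
+// fanIn starts one producer goroutine per slice and merges everything onto
+// a single channel, which is closed exactly once after every producer is done.
+func fanIn(producers [][]int) <-chan int {
+	out := make(chan int)
+	var wg sync.WaitGroup
+	for _, vals := range producers {
+		wg.Add(1)
+		go func(vals []int) {
+			defer wg.Done() // "close my copy" without touching out
+			for _, v := range vals {
+				out <- v
+			}
+		}(vals)
+	}
+	// The only goroutine allowed to close out.
+	go func() {
+		wg.Wait()
+		close(out)
+	}()
+	return out
+}
+
+func main() {
+	sum := 0
+	for v := range fanIn([][]int{{1, 2}, {3}, {4, 5, 6}}) {
+		sum += v
+	}
+	fmt.Println(sum) // 21
+}
+```
+
+Note the cost this post keeps returning to: an extra goroutine whose only job is closing the channel.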

+

+

+

+### What do we tell people about Go then?

+

+If you haven’t yet, please go take a look at my current favorite programming post: [What Color is Your Function][32]. Without being about Go specifically, this blog post lays out, much more eloquently than I could, exactly why goroutines are Go’s best feature (and incidentally one of the ways Go is better than Rust for some applications).

+

+If you’re still writing code in a programming language that forces keywords like `yield` on you to get high performance, concurrency, or an event-driven model, you are living in the past, whether or not you or anyone else knows it. Go is so far one of the best entrants I’ve seen among languages that implement an M:N threading model rather than 1:1, and dang, that’s powerful.

+

+So, tell folks about goroutines.

+

+If I had to pick one other leading feature of Go, it’s interfaces. Statically-typed [duck typing][33] makes extending and working with your own or someone else’s project so fun and amazing it’s probably worth me writing an entirely different set of words about it some other time.

+

+### So…

+

+I keep seeing people charge into Go, eager to use channels to their full potential. Here’s my advice to you.

+

+**JUST STAHP IT**

+

+When you’re writing APIs and interfaces, as bad as the advice “never” can be, I’m pretty sure there’s never a time where channels are better, and every Go API I’ve used that used channels I’ve ended up having to fight. I’ve never thought “oh good, there’s a channel here;” it’s always instead been some variant of _**WHAT FRESH HELL IS THIS?**_

+

+So, _please, please use channels where appropriate and only where appropriate._

+

+In all the Go code I work with, I can count on one hand the number of times channels were really the best choice. Sometimes they are. That’s great! Use them then. But otherwise just stop.

+

+![][34]

+

+_Special thanks for the valuable feedback provided by my proofreaders Jeff Wendling, [Andrew Harding][35], [George Shank][36], and [Tyler Treat][37]._

+

+If you want to work on Go with us at Space Monkey, please [hit me up][38]!

+

+--------------------------------------------------------------------------------

+

+via: https://www.jtolio.com/2016/03/go-channels-are-bad-and-you-should-feel-bad

+

+Author: [jtolio.com][a]
+Selected by: [lujun9972][b]
+Translator: [translator ID](https://github.com/译者ID)
+Proofreader: [proofreader ID](https://github.com/校对者ID)
+
+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://www.jtolio.com/

+[b]: https://github.com/lujun9972

+[1]: https://blog.codinghorror.com/content/images/uploads/2012/06/6a0120a85dcdae970b017742d249d5970d-800wi.jpg

+[2]: https://songlh.github.io/paper/go-study.pdf

+[3]: https://golang.org/

+[4]: http://www.spacemonkey.com/

+[5]: https://en.wikipedia.org/wiki/Communicating_sequential_processes

+[6]: https://en.wikipedia.org/wiki/%CE%A0-calculus

+[7]: http://matt.might.net

+[8]: http://www.ucombinator.org/

+[9]: https://www.jtolio.com/writing/2015/11/research-log-cell-states-and-microarrays/

+[10]: https://www.jtolio.com/writing/2014/04/go-space-monkey/

+[11]: https://godoc.org/github.com/spacemonkeygo/openssl

+[12]: https://golang.org/pkg/crypto/tls/

+[13]: https://godoc.org/github.com/spacemonkeygo/errors

+[14]: https://godoc.org/github.com/spacemonkeygo/spacelog

+[15]: https://godoc.org/gopkg.in/spacemonkeygo/monitor.v1

+[16]: https://github.com/jtolds/gls

+[17]: https://www.jtolio.com/images/wat/darth-helmet.jpg

+[18]: https://en.wikipedia.org/wiki/Newsqueak

+[19]: https://en.wikipedia.org/wiki/Alef_%28programming_language%29

+[20]: https://en.wikipedia.org/wiki/Limbo_%28programming_language%29

+[21]: https://lesswrong.com/lw/k5/cached_thoughts/

+[22]: https://blog.golang.org/share-memory-by-communicating

+[23]: https://www.jtolio.com/images/wat/jon-stewart.jpg

+[24]: https://twitter.com/HiattDustin

+[25]: http://bravenewgeek.com/go-is-unapologetically-flawed-heres-why-we-use-it/

+[26]: https://www.jtolio.com/images/wat/obama.jpg

+[27]: https://www.jtolio.com/images/wat/yael-grobglas.jpg

+[28]: http://www.informit.com/articles/article.aspx?p=2359758#comment-2061767464

+[29]: https://godoc.org/golang.org/x/net/context

+[30]: https://www.jtolio.com/images/wat/zooey-deschanel.jpg

+[31]: https://www.jtolio.com/images/wat/joel-mchale.jpg

+[32]: http://journal.stuffwithstuff.com/2015/02/01/what-color-is-your-function/

+[33]: https://en.wikipedia.org/wiki/Duck_typing

+[34]: https://www.jtolio.com/images/wat/michael-cera.jpg

+[35]: https://github.com/azdagron

+[36]: https://twitter.com/taterbase

+[37]: http://bravenewgeek.com

+[38]: https://www.jtolio.com/contact/

diff --git a/sources/tech/20170115 Magic GOPATH.md b/sources/tech/20170115 Magic GOPATH.md

new file mode 100644

index 0000000000..1d4cd16e24

--- /dev/null

+++ b/sources/tech/20170115 Magic GOPATH.md

@@ -0,0 +1,119 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Magic GOPATH)

+[#]: via: (https://www.jtolio.com/2017/01/magic-gopath)

+[#]: author: (jtolio.com https://www.jtolio.com/)

+

+Magic GOPATH

+======

+

+_**Update:** With the advent of Go 1.11 and [Go modules][1], this whole post is now useless. Unset your GOPATH entirely and switch to Go modules today!_

+

+Maybe someday I’ll start writing about things besides Go again.

+

+Go requires that you set an environment variable for your workspace called your `GOPATH`. The `GOPATH` is one of the most confusing aspects of Go to newcomers and even relatively seasoned developers alike. It’s not immediately clear what would be better, but finding a good `GOPATH` value has implications for your source code repository layout, how many separate projects you have on your computer, how default project installation instructions work (via `go get`), and even how you interoperate with other projects and libraries.

+

+It’s taken until Go 1.8 to decide to [set a default][2] and that small change was one of [the most talked about code reviews][3] for the 1.8 release cycle.

+

+After [writing about GOPATH himself][4], [Dave Cheney][5] [asked me][6] to write a blog post about what I do.

+

+### My proposal

+

+I set my `GOPATH` to always be the current working directory, unless a parent directory is clearly the `GOPATH`.

+

+Here’s the relevant part of my `.bashrc`:

+

+```bash
+# bash command to output calculated GOPATH.
+calc_gopath() {
+  local dir="$PWD"
+
+  # we're going to walk up from the current directory to the root
+  while true; do
+
+    # if there's a '.gopath' file, use its contents as the GOPATH relative to
+    # the directory containing it.
+    if [ -f "$dir/.gopath" ]; then
+      ( cd "$dir";
+        # allow us to squash this behavior for cases we want to use vgo
+        if [ "$(cat .gopath)" != "" ]; then
+          cd "$(cat .gopath)";
+          echo "$PWD";
+        fi; )
+      return
+    fi
+
+    # if there's a 'src' directory, the parent of that directory is now the
+    # GOPATH
+    if [ -d "$dir/src" ]; then
+      echo "$dir"
+      return
+    fi
+
+    # we can't go further, so bail. we'll make the original PWD the GOPATH.
+    if [ "$dir" == "/" ]; then
+      echo "$PWD"
+      return
+    fi
+
+    # now we'll consider the parent directory
+    dir="$(dirname "$dir")"
+  done
+}
+
+my_prompt_command() {
+  export GOPATH="$(calc_gopath)"
+
+  # you can have other neat things in here. I also set my PS1 based on git
+  # state
+}
+
+case "$TERM" in
+xterm*|rxvt*)
+  # Bash provides an environment variable called PROMPT_COMMAND. The contents
+  # of this variable are executed as a regular Bash command just before Bash
+  # displays a prompt. Let's only set it if we're in some kind of graphical
+  # terminal I guess.
+  PROMPT_COMMAND=my_prompt_command
+  ;;
+*)
+  ;;
+esac
+```

+

+The benefits are fantastic. If you want to quickly `go get` something and not have it clutter up your workspace, you can do something like:

+

+```

+cd $(mktemp -d) && go get github.com/the/thing

+```

+

+On the other hand, if you’re jumping between multiple projects (whether or not they have the full workspace checked in or are just library packages), the `GOPATH` is set accurately.

+

+More flexibly, if you have a tree where some parent directory is outside of the `GOPATH` but you want to set the `GOPATH` anyways, you can create a `.gopath` file and it will automatically set your `GOPATH` correctly any time your shell is inside that directory.
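+
+If you'd rather read the lookup rules as code outside of bash, here is a rough Go port of `calc_gopath` for illustration. It is a sketch, not a drop-in replacement: `calcGopath` is a hypothetical name, it works on the path string it is given rather than the shell's `$PWD`, and it does not reproduce what the bash version prints when the `cd` into the `.gopath` target fails.
+
+```go
+package main
+
+import (
+	"fmt"
+	"os"
+	"path/filepath"
+	"strings"
+)
+
+// calcGopath walks up from start: a .gopath file wins (its contents are a
+// path relative to that directory; empty contents mean "no GOPATH"), then a
+// directory containing src/, and finally start itself.
+func calcGopath(start string) string {
+	dir := filepath.Clean(start)
+	for {
+		if data, err := os.ReadFile(filepath.Join(dir, ".gopath")); err == nil {
+			// $(cat .gopath) strips trailing newlines, so do the same.
+			target := strings.TrimRight(string(data), "\n")
+			if target == "" {
+				return ""
+			}
+			if filepath.IsAbs(target) {
+				return filepath.Clean(target)
+			}
+			return filepath.Join(dir, target)
+		}
+		if fi, err := os.Stat(filepath.Join(dir, "src")); err == nil && fi.IsDir() {
+			return dir
+		}
+		parent := filepath.Dir(dir)
+		if parent == dir { // reached the filesystem root
+			return start
+		}
+		dir = parent
+	}
+}
+
+func main() {
+	wd, err := os.Getwd()
+	if err != nil {
+		fmt.Fprintln(os.Stderr, err)
+		os.Exit(1)
+	}
+	fmt.Println(calcGopath(wd))
+}
+```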

+

+The whole thing is super nice. I kinda can’t imagine doing something else anymore.

+

+### Fin.

+

+--------------------------------------------------------------------------------

+

+via: https://www.jtolio.com/2017/01/magic-gopath

+

+Author: [jtolio.com][a]
+Selected by: [lujun9972][b]
+Translator: [translator ID](https://github.com/译者ID)
+Proofreader: [proofreader ID](https://github.com/校对者ID)
+
+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

+

+[a]: https://www.jtolio.com/

+[b]: https://github.com/lujun9972

+[1]: https://golang.org/cmd/go/#hdr-Modules__module_versions__and_more

+[2]: https://rakyll.org/default-gopath/

+[3]: https://go-review.googlesource.com/32019/

+[4]: https://dave.cheney.net/2016/12/20/thinking-about-gopath

+[5]: https://dave.cheney.net/

+[6]: https://twitter.com/davecheney/status/811334240247812097

diff --git a/sources/tech/20170320 Whiteboard problems in pure Lambda Calculus.md b/sources/tech/20170320 Whiteboard problems in pure Lambda Calculus.md

new file mode 100644

index 0000000000..02200befe7

--- /dev/null

+++ b/sources/tech/20170320 Whiteboard problems in pure Lambda Calculus.md

@@ -0,0 +1,836 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Whiteboard problems in pure Lambda Calculus)

+[#]: via: (https://www.jtolio.com/2017/03/whiteboard-problems-in-pure-lambda-calculus)

+[#]: author: (jtolio.com https://www.jtolio.com/)

+

+Whiteboard problems in pure Lambda Calculus

+======

+

+My team at [Vivint][1], the [Space Monkey][2] group, stopped doing whiteboard interviews a while ago. We certainly used to do them, but we’ve transitioned to homework problems or actually just hiring a candidate as a short term contractor for a day or two to solve real work problems and see how that goes. Whiteboard interviews are kind of like [Festivus][3] but in a bad way: you get the feats of strength and then the airing of grievances. Unfortunately, modern programming is nothing like writing code in front of a roomful of strangers with only a whiteboard and a marker, so it’s probably not best to optimize for that.

+

+Nonetheless, [Kyle][4]’s recent (wonderful, amazing) post titled [acing the technical interview][5] got me thinking about fun ways to approach whiteboard problems as an interviewee. Kyle’s [Church-encodings][6] made me wonder how many “standard” whiteboard problems you could solve in pure lambda calculus. If this isn’t seen as a feat of strength by your interviewers, there will certainly be some airing of grievances.

+

+➡️️ **Update**: I’ve made a lambda calculus web playground so you can run lambda calculus right in your browser! I’ve gone through and made links to examples in this post with it. Check it out at

+

+### Lambda calculus

+

+Wait, what is lambda calculus? Did I learn that in high school?

+

+Big-C “Calculus” of course usually refers to derivatives, integrals, Taylor series, etc. You might have learned about Calculus in high school, but this isn’t that.

+

+More generally, a little-c “calculus” is really just any system of calculation. The [lambda calculus][7] is essentially a formalization of the smallest set of primitives needed to make a completely [Turing-complete][8] programming language. Expressions in the language can only be one of three things.

+

+ * An expression can define a function that takes exactly one argument (no more, no less) and then has another expression as the body.

+ * An expression can call a function by applying two subexpressions.

+ * An expression can reference a variable.

+

+

+

+Here is the entire grammar:

+

+```
+<expr> ::= <variable>
+         | `λ` <variable> `.` <expr>
+         | `(` <expr> <expr> `)`
+```

+

+That’s it. There’s nothing else you can do. There are no numbers, strings, booleans, pairs, structs, anything. Every value is a function that takes one argument. All variables refer to these functions, and all functions can do is return another function, either directly, or by calling yet another function. There’s nothing else to help you.
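+
+To make the grammar concrete, here is one way to write its three forms down as Go types, with a `String` method that prints them in this post's notation (applications are always parenthesized, so `(λx.x y)` and `λx.(x y)` come out differently). The type names are made up for this sketch.
+
+```go
+package main
+
+import "fmt"
+
+// Expr is any lambda calculus expression.
+type Expr interface{ String() string }
+
+// Var is a reference to a variable.
+type Var struct{ Name string }
+
+// Lambda is a function of exactly one parameter with an expression body.
+type Lambda struct {
+	Param string
+	Body  Expr
+}
+
+// App applies Fn to Arg.
+type App struct{ Fn, Arg Expr }
+
+func (v Var) String() string    { return v.Name }
+func (l Lambda) String() string { return "λ" + l.Param + "." + l.Body.String() }
+func (a App) String() string    { return "(" + a.Fn.String() + " " + a.Arg.String() + ")" }
+
+func main() {
+	id := Lambda{"x", Var{"x"}}
+	fmt.Println(App{id, Var{"y"}})                    // (λx.x y)
+	fmt.Println(Lambda{"x", App{Var{"x"}, Var{"y"}}}) // λx.(x y)
+}
+```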

+

+To be honest, it’s a little surprising that this is even Turing-complete. How do you do branches or loops or recursion? This seems too simple to work, right?

+

+A common whiteboard problem is the [fizz buzz problem][9]. The goal is to write a function that prints out all the numbers from 0 to 100, but instead of printing numbers divisible by 3 it prints “fizz”, and instead of printing numbers divisible by 5 it prints “buzz”, and in the case of both it prints “fizzbuzz”. It’s a simple toy problem but it’s touted as a good whiteboard problem because evidently many self-proclaimed programmers can’t solve it. Maybe part of that is cause whiteboard problems suck? I dunno.
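+
+For comparison, here is the problem as described, in ordinary Go (a straightforward version, not a translation of the lambda calculus program). Note that 0 is divisible by both 3 and 5, so it prints "fizzbuzz".
+
+```go
+package main
+
+import (
+	"fmt"
+	"strconv"
+)
+
+// fizzbuzz returns what to print for i.
+func fizzbuzz(i int) string {
+	switch {
+	case i%15 == 0: // divisible by both 3 and 5
+		return "fizzbuzz"
+	case i%3 == 0:
+		return "fizz"
+	case i%5 == 0:
+		return "buzz"
+	default:
+		return strconv.Itoa(i)
+	}
+}
+
+func main() {
+	for i := 0; i <= 100; i++ {
+		fmt.Println(fizzbuzz(i))
+	}
+}
+```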

+

+Anyway, here’s fizz buzz in pure lambda calculus:

+

+```

+(λU.(λY.(λvoid.(λ0.(λsucc.(λ+.(λ*.(λ1.(λ2.(λ3.(λ4.(λ5.(λ6.(λ7.(λ8.(λ9.(λ10.(λnum.(λtrue.(λfalse.(λif.(λnot.(λand.(λor.(λmake-pair.(λpair-first.(λpair-second.(λzero?.(λpred.(λ-.(λeq?.(λ/.(λ%.(λnil.(λnil?.(λcons.(λcar.(λcdr.(λdo2.(λdo3.(λdo4.(λfor.(λprint-byte.(λprint-list.(λprint-newline.(λzero-byte.(λitoa.(λfizzmsg.(λbuzzmsg.(λfizzbuzzmsg.(λfizzbuzz.(fizzbuzz (((num 1) 0) 1)) λn.((for n) λi.((do2 (((if (zero? ((% i) 3))) λ_.(((if (zero? ((% i) 5))) λ_.(print-list fizzbuzzmsg)) λ_.(print-list fizzmsg))) λ_.(((if (zero? ((% i) 5))) λ_.(print-list buzzmsg)) λ_.(print-list (itoa i))))) (print-newline nil)))) ((cons (((num 0) 7) 0)) ((cons (((num 1) 0) 5)) ((cons (((num 1) 2) 2)) ((cons (((num 1) 2) 2)) ((cons (((num 0) 9) 8)) ((cons (((num 1) 1) 7)) ((cons (((num 1) 2) 2)) ((cons (((num 1) 2) 2)) nil))))))))) ((cons (((num 0) 6) 6)) ((cons (((num 1) 1) 7)) ((cons (((num 1) 2) 2)) ((cons (((num 1) 2) 2)) nil))))) ((cons (((num 0) 7) 0)) ((cons (((num 1) 0) 5)) ((cons (((num 1) 2) 2)) ((cons (((num 1) 2) 2)) nil))))) λn.(((Y λrecurse.λn.λresult.(((if (zero? n)) λ_.(((if (nil? result)) λ_.((cons zero-byte) nil)) λ_.result)) λ_.((recurse ((/ n) 10)) ((cons ((+ zero-byte) ((% n) 10))) result)))) n) nil)) (((num 0) 4) 8)) λ_.(print-byte (((num 0) 1) 0))) (Y λrecurse.λl.(((if (nil? l)) λ_.void) λ_.((do2 (print-byte (car l))) (recurse (cdr l)))))) PRINT_BYTE) λn.λf.((((Y λrecurse.λremaining.λcurrent.λf.(((if (zero? remaining)) λ_.void) λ_.((do2 (f current)) (((recurse (pred remaining)) (succ current)) f)))) n) 0) f)) λa.do3) λa.do2) λa.λb.b) λl.(pair-second (pair-second l))) λl.(pair-first (pair-second l))) λe.λl.((make-pair true) ((make-pair e) l))) λl.(not (pair-first l))) ((make-pair false) void)) λm.λn.((- m) ((* ((/ m) n)) n))) (Y λ/.λm.λn.(((if ((eq? m) n)) λ_.1) λ_.(((if (zero? ((- m) n))) λ_.0) λ_.((+ 1) ((/ ((- m) n)) n)))))) λm.λn.((and (zero? ((- m) n))) (zero? 
((- n) m)))) λm.λn.((n pred) m)) λn.(((λn.λf.λx.(pair-second ((n λp.((make-pair (f (pair-first p))) (pair-first p))) ((make-pair x) x))) n) succ) 0)) λn.((n λ_.false) true)) λp.(p false)) λp.(p true)) λx.λy.λt.((t x) y)) λa.λb.((a true) b)) λa.λb.((a b) false)) λp.λt.λf.((p f) t)) λp.λa.λb.(((p a) b) void)) λt.λf.f) λt.λf.t) λa.λb.λc.((+ ((+ ((* ((* 10) 10)) a)) ((* 10) b))) c)) (succ 9)) (succ 8)) (succ 7)) (succ 6)) (succ 5)) (succ 4)) (succ 3)) (succ 2)) (succ 1)) (succ 0)) λm.λn.λx.(m (n x))) λm.λn.λf.λx.((((m succ) n) f) x)) λn.λf.λx.(f ((n f) x))) λf.λx.x) λx.(U U)) (U λh.λf.(f λx.(((h h) f) x)))) λf.(f f))

+```

+

+➡️️ [Try it out in your browser!][10]

+

+(This program expects a function to be defined called `PRINT_BYTE` which takes a Church-encoded numeral, turns it into a byte, writes it to `stdout`, and then returns the same Church-encoded numeral. Expecting a function that has side-effects might arguably disqualify this from being pure, but it’s definitely arguable.)

+

+Don’t be deceived! I said there were no native numbers or lists or control structures in lambda calculus and I meant it. `0`, `7`, `if`, and `+` are all _variables_ that represent _functions_ and have to be constructed before they can be used in the code block above.

+

+### What? What’s happening here?

+

+Okay let’s start over and build up to fizz buzz. We’re going to need a lot. We’re going to need to build up concepts of numbers, logic, and lists all from scratch. Ask your interviewers if they’re comfortable cause this might be a while.

+

+Here is a basic lambda calculus function:

+

+```

+λx.x

+```

+

+This is the identity function and it is equivalent to the following JavaScript:

+

+```

+function(x) { return x; }

+```

+

+It takes an argument and returns it! We can call the identity function with another value. Function calling in many languages looks like `f(x)`, but in lambda calculus, it looks like `(f x)`.

+

+```

+(λx.x y)

+```

+

+This will return `y`. Once again, here’s equivalent JavaScript:
+
+```
+(function(x) { return x; })(y)
+```

+

+Aside: If you’re already familiar with lambda calculus, my formulation of precedence is such that `(λx.x y)` is not the same as `λx.(x y)`. `(λx.x y)` applies `y` to the identity function `λx.x`, and `λx.(x y)` is a function that applies `y` to its argument `x`. Perhaps not what you’re used to, but the parser was way more straightforward, and programming with it this way seems a bit more natural, believe it or not.

+

+Okay, great. We can call functions. What if we want to pass more than one argument?

+

+### Currying

+

+Imagine the following JavaScript function:

+

+```

+let s1 = function(f, x) { return f(x); }

+```

+

+We want to call it with two arguments, a function and a value, so that the function is called on the value and its result is returned. Can we do the same thing using only functions of one argument?

+

+[Currying][11] is a technique for dealing with this. Instead of taking two arguments, take the first argument and return another function that takes the second argument. Here’s the JavaScript:

+

+```

+let s2 = function(f) {

+ return function(x) {

+ return f(x);

+ }

+};

+```

+

+Now, `s1(f, x)` is the same as `s2(f)(x)`. So the equivalent lambda calculus for `s2` is then

+

+```

+λf.λx.(f x)

+```

+

+Calling this function with `g` for `f` and `y` for `x` is like so:

+

+```

+((s2 g) y)

+```

+

+or

+

+```

+((λf.λx.(f x) g) y)

+```

+

+The equivalent JavaScript here is:

+

+```

+(function(f) {

+ return function(x) {

+ return f(x);

+ };

+})(g)(y)

+```
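A runnable version of the two JavaScript functions above shows that both calling styles give the same answer. The `g` and `y` values are chosen only for illustration. The `curry2` helper is hypothetical and not something the post defines; it turns any two-argument function into its curried form.

```javascript
// Two-argument version.
const s1 = function (f, x) { return f(x); };

// Curried version: take f, return a function that takes x.
const s2 = function (f) {
  return function (x) {
    return f(x);
  };
};

// Hypothetical helper: curry any two-argument function.
const curry2 = (fn) => (a) => (b) => fn(a, b);

// Sample function and value, chosen only for illustration.
const g = (n) => n * 2;
const y = 21;

console.log(s1(g, y));         // 42
console.log(s2(g)(y));         // 42
console.log(curry2(s1)(g)(y)); // 42
```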

+

+### Numbers

+

+Since everything is a function, we might feel a little stuck with what to do about numbers. Luckily, [Alonzo Church][12] already figured it out for us! When you have a number, often what you want to do is represent how many times you might do something.

+

+So let’s represent a number as how many times we’ll apply a function to a value. This is called a [Church numeral][13]. If we have `f` and `x`, `0` will mean we don’t call `f` at all, and just return `x`. `1` will mean we call `f` one time, `2` will mean we call `f` twice, and so on.

+

+Here are some definitions! (N.B.: assignment isn’t actually part of lambda calculus, but it makes writing down definitions easier)

+

+```

+0 = λf.λx.x

+```

+

+Here, `0` takes a function `f`, a value `x`, and never calls `f`. It just returns `x`. `f` is called 0 times.

+

+```

+1 = λf.λx.(f x)

+```

+

+Like `0`, `1` takes `f` and `x`, but here it calls `f` exactly once. Let’s see how this continues for other numbers.

+

+```

+2 = λf.λx.(f (f x))

+3 = λf.λx.(f (f (f x)))

+4 = λf.λx.(f (f (f (f x))))

+5 = λf.λx.(f (f (f (f (f x)))))

+```
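Church numerals translate directly into curried JavaScript arrow functions. This sketch adds a hypothetical `toInt` helper, which is not part of lambda calculus. It decodes a numeral by passing "add one" as `f` and the native `0` as `x`, so we can check our work.

```javascript
// n takes f, then x, and applies f to x exactly n times.
const zero  = (f) => (x) => x;
const one   = (f) => (x) => f(x);
const two   = (f) => (x) => f(f(x));
const three = (f) => (x) => f(f(f(x)));

// Hypothetical decoding helper: use "add one" as f and 0 as x.
const toInt = (n) => n((k) => k + 1)(0);

console.log([zero, one, two, three].map(toInt)); // [ 0, 1, 2, 3 ]

// Passing a string-building f makes the nesting visible.
console.log(three((s) => "f(" + s + ")")("x")); // f(f(f(x)))
```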

+

+`5` is a function that takes `f`, `x`, and calls `f` 5 times!

+

+Okay, this is convenient, but how are we going to do math on these numbers?

+

+### Successor

+

+Let’s make a _successor_ function that takes a number and returns a new number that calls `f` just one more time.

+

+```

+succ = λn. λf.λx.(f ((n f) x))

+```

+

+`succ` is a function that takes a Church-encoded number, `n`. The space after `λn.` is ignored; I put it there to indicate that we usually expect to call `succ` with one argument, curried or not. `succ` then returns another Church-encoded number, `λf.λx.(f ((n f) x))`. What is it doing? Let’s break it down.

+

+ * `((n f) x)` looks like that time we needed to call a function that took two “curried” arguments. So we’re calling `n`, which is a Church numeral, with two arguments, `f` and `x`. This is going to call `f` `n` times!

+ * `(f ((n f) x))` calls `f` one more time, on the result of `((n f) x)`.

+

+

+

+So does `succ` work? Let’s see what happens when we call `(succ 1)`. We should get the `2` we defined earlier!

+

+```

+ (succ 1)

+-> (succ λf.λx.(f x)) # resolve the variable 1

+-> (λn.λf.λx.(f ((n f) x)) λf.λx.(f x)) # resolve the variable succ

+-> λf.λx.(f ((λf.λx.(f x) f) x)) # call the outside function. replace n

+ # with the argument

+

+let's sidebar and simplify the subexpression

+ (λf.λx.(f x) f)

+-> λx.(f x) # call the function, replace f with f!

+

+now we should be able to simplify the larger subexpression

+ ((λf.λx.(f x) f) x)

+-> (λx.(f x) x) # sidebar above

+-> (f x) # call the function, replace x with x!

+

+let's go back to the original now

+ λf.λx.(f ((λf.λx.(f x) f) x))

+-> λf.λx.(f (f x)) # subexpression simplification above

+```

+

+and done! That last line is identical to the `2` we defined originally! It calls `f` twice.
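The same check can be run mechanically in JavaScript. JavaScript can't compare two functions for structural equality, so this sketch decodes both sides with a hypothetical `toInt` helper and compares the resulting integers instead.

```javascript
const one = (f) => (x) => f(x);
const two = (f) => (x) => f(f(x));

// succ = λn.λf.λx.(f ((n f) x)): apply f n times, then once more.
const succ = (n) => (f) => (x) => f(n(f)(x));

// Hypothetical helper for checking results: decode a numeral to a JS number.
const toInt = (n) => n((k) => k + 1)(0);

console.log(toInt(succ(one)));                // 2
console.log(toInt(succ(one)) === toInt(two)); // true
```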

+

+### Math

+

+Now that we have the successor function, if your interviewers haven’t checked out, tell them that fizz buzz isn’t too far away now; we have [Peano Arithmetic][14]! They can then check their interview bingo cards and see if they’ve increased their winnings.

+

+No, but for real, since we have the successor function, we can now easily do addition and multiplication, which we will need for fizz buzz.

+

+First, recall that a number `n` is a function that takes another function `f` and an initial value `x` and applies `f` _n_ times. So if you have two numbers _m_ and _n_, what you want to do is apply `succ` to `m` _n_ times!

+

+```

++ = λm.λn.((n succ) m)

+```

+

+Here, `+` is a variable. If it’s not a lambda expression or a function call, it’s a variable!
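Here is the same addition as a JavaScript sketch. `+` isn't a legal JavaScript identifier, so the function is called `add`. `toInt` is again a hypothetical helper used only to inspect the result.

```javascript
const zero = (f) => (x) => x;
const succ = (n) => (f) => (x) => f(n(f)(x));
const toInt = (n) => n((k) => k + 1)(0); // hypothetical decoding helper

// + = λm.λn.((n succ) m): apply succ to m, n times.
const add = (m) => (n) => n(succ)(m);

const two = succ(succ(zero));
const three = succ(two);

console.log(toInt(add(two)(three))); // 5
```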

+

+Multiplication is similar, but instead of applying `succ` to `m` _n_ times, we’re going to add `m` to `0` `n` times.

+

+First, note that if `((+ m) n)` is adding `m` and `n`, then that means that `(+ m)` is a _function_ that adds `m` to its argument. So we want to apply the function `(+ m)` to `0` `n` times.

+

+```

+* = λm.λn.((n (+ m)) 0)

+```

+

+Yay! We have multiplication and addition now.
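Multiplication follows the same pattern in JavaScript. As before, `mul` and `toInt` are names chosen for this sketch, since `*` can't be used as an identifier.

```javascript
const zero = (f) => (x) => x;
const succ = (n) => (f) => (x) => f(n(f)(x));
const add = (m) => (n) => n(succ)(m);
const toInt = (n) => n((k) => k + 1)(0); // hypothetical decoding helper

// * = λm.λn.((n (+ m)) 0): apply "add m" to zero, n times.
const mul = (m) => (n) => n(add(m))(zero);

const two = succ(succ(zero));
const three = succ(two);

console.log(toInt(mul(two)(three)));  // 6
console.log(toInt(mul(three)(zero))); // 0
```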

+

+### Logic

+

+We’re going to need booleans and if statements and logic tests and so on. So, let’s talk about booleans. Recall how with numbers, what we kind of wanted with a number `n` is to do something _n_ times. Similarly, what we want with booleans is to do one of two things, either/or, but not both. Alonzo Church to the rescue again.

+

+Let’s have booleans be functions that take two arguments (curried of course), where the `true` boolean will return the first option, and the `false` boolean will return the second.

+

+```

+true = λt.λf.t

+false = λt.λf.f

+```
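In JavaScript, `true` and `false` are reserved words, so this sketch names the Church booleans `TRUE` and `FALSE`. The `toBool` helper is hypothetical; it only converts a Church boolean back into a native one for display.

```javascript
// Church booleans: TRUE picks its first argument, FALSE its second.
const TRUE = (t) => (f) => t;
const FALSE = (t) => (f) => f;

// Hypothetical helper: decode a Church boolean into a native boolean.
const toBool = (b) => b(true)(false);

console.log(TRUE("first")("second"));     // first
console.log(FALSE("first")("second"));    // second
console.log(toBool(TRUE), toBool(FALSE)); // true false
```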

+

+So that we can demonstrate booleans, we’re going to define a simple sample function called `zero?` that returns `true` if a number `n` is zero, and `false` otherwise:

+

+```

+zero? = λn.((n λ_.false) true)

+```

+

+To explain: the Church numeral for 0 calls its first argument 0 times and just returns its second argument. Any other number calls the first argument at least once. So `zero?` gives `n` a function that throws away its argument and always returns `false`, and starts it off with `true`. Only zero leaves that initial `true` untouched, so only zero returns `true`.
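Here is a JavaScript sketch of `zero?`, named `isZero` because `?` isn't allowed in JavaScript identifiers. The definitions of `TRUE`, `FALSE`, `succ`, and the hypothetical `toBool` helper are repeated so the block runs on its own.

```javascript
const TRUE = (t) => (f) => t;
const FALSE = (t) => (f) => f;
const zero = (f) => (x) => x;
const succ = (n) => (f) => (x) => f(n(f)(x));
const toBool = (b) => b(true)(false); // hypothetical decoding helper

// zero? = λn.((n λ_.false) true)
// Start from TRUE; any application of the function replaces it with FALSE.
const isZero = (n) => n((_) => FALSE)(TRUE);

console.log(toBool(isZero(zero)));             // true
console.log(toBool(isZero(succ(zero))));       // false
console.log(toBool(isZero(succ(succ(zero))))); // false
```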

+

+➡️️ [Try it out in your browser!][15]

+

+We can now write an `if'` function to make use of these boolean values. `if'` will take a predicate value `p` (the boolean) and two options `a` and `b`.

+

+```

+if' = λp.λa.λb.((p a) b)

+```
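Here is `if'` as a JavaScript sketch, named `ifPrime` since `'` can't appear in an identifier. JavaScript also evaluates function arguments eagerly. The hypothetical `branch` helper records every branch expression that gets evaluated, and both of them show up even though `if'` returns only one.

```javascript
const TRUE = (t) => (f) => t;
const FALSE = (t) => (f) => f;

// if' = λp.λa.λb.((p a) b): the boolean p does the choosing.
const ifPrime = (p) => (a) => (b) => p(a)(b);

// Hypothetical helper that records each branch expression as it is evaluated.
const log = [];
const branch = (name) => { log.push(name); return name; };

const result = ifPrime(TRUE)(branch("when-true"))(branch("when-false"));
console.log(result); // when-true
console.log(log);    // [ 'when-true', 'when-false' ]: both branches were evaluated
```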

+

+You can use it like this:

+

+```

+(((if' (zero? n))

+ (something-when-zero x))

+ (something-when-not-zero y))

+```

+

+One thing that’s weird about this construction is that the interpreter is going to evaluate both branches (my lambda calculus interpreter is [eager][16] instead of [lazy][17]). Both `something-when-zero` and `something-when-not-zero` are going to be called to determine what to pass into `if'`. So that we don’t actually call the function in the branch we don’t want to run, let’s wrap each branch’s logic in another function. We’ll name that function’s argument `_` to indicate that we just want to throw it away.

+

+```

+(((if (zero? n))

+ λ_. (something-when-zero x))

+ λ_. (something-when-not-zero y))

+```

+

+This means we’re going to have to make a new `if` function that calls the correct branch with a throwaway argument, like `0` or something.

+

+```

+if = λp.λa.λb.(((p a) b) 0)

+```
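The thunked version can be sketched in JavaScript too, with `if` renamed to `IF` because `if` is reserved. Each branch is wrapped in a function that ignores its argument, and only the branch that the boolean selects is ever called. The `log` array is there only to make that visible.

```javascript
const TRUE = (t) => (f) => t;
const FALSE = (t) => (f) => f;
const zero = (f) => (x) => x;

// if = λp.λa.λb.(((p a) b) 0): pick a wrapped branch, then call it with a
// throwaway argument (the Church numeral 0, as in the definition above).
const IF = (p) => (a) => (b) => p(a)(b)(zero);

const log = [];
const result = IF(FALSE)(
  (_) => { log.push("when-true"); return "yes"; }
)(
  (_) => { log.push("when-false"); return "no"; }
);

console.log(result); // no
console.log(log);    // [ 'when-false' ]: only the chosen branch ran
```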

+

+Okay, now we have booleans and `if`!

+

+### Currying part deux

+

+At this point, you might be getting sick of how calling something with multiple curried arguments involves all these extra parentheses. `((f a) b)` is annoying, can’t we just do `(f a b)`?

+

+It’s not part of the strict grammar, but my interpreter makes this small concession. `(a b c)` will be expanded to `((a b) c)` by the parser. `(a b c d)` will be expanded to `(((a b) c) d)` by the parser, and so on.
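The expansion rule is a left fold over the terms inside the parentheses. This JavaScript sketch assumes, hypothetically, that the parser has already collected those terms into an array. `desugar`, `show`, and the `{ fn, arg }` node shape are illustrative and are not the interpreter's actual data structures.

```javascript
// Fold a flat list of terms [a, b, c, d] into nested binary applications
// (((a b) c) d), the only form the strict grammar allows.
const desugar = (terms) => {
  if (terms.length < 2) throw new Error("an application needs at least two terms");
  return terms.slice(1).reduce((fn, arg) => ({ fn, arg }), terms[0]);
};

// Render an application tree back into fully parenthesized text.
const show = (node) =>
  typeof node === "string" ? node : `(${show(node.fn)} ${show(node.arg)})`;

console.log(show(desugar(["a", "b", "c"])));      // ((a b) c)
console.log(show(desugar(["a", "b", "c", "d"]))); // (((a b) c) d)
```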

+

+So, for the rest of the post, for ease of explanation, I’m going to use this [syntax sugar][18]. Observe how using `if` changes:

+

+```

+(if (zero? n)

+ λ_. (something-when-zero x)

+ λ_. (something-when-not-zero y))

+```

+