mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

commit

00c9415ee0

702

sources/tech/20171024 Learn Blockchains by Building One.md

Normal file

702

sources/tech/20171024 Learn Blockchains by Building One.md

Normal file

@ -0,0 +1,702 @@

|

||||

Learn Blockchains by Building One

|

||||

======

|

||||

|

||||

|

||||

You’re here because, like me, you’re psyched about the rise of Cryptocurrencies. And you want to know how Blockchains work—the fundamental technology behind them.

|

||||

|

||||

But understanding Blockchains isn’t easy—or at least wasn’t for me. I trudged through dense videos, followed porous tutorials, and dealt with the amplified frustration of too few examples.

|

||||

|

||||

I like learning by doing. It forces me to deal with the subject matter at a code level, which gets it sticking. If you do the same, at the end of this guide you’ll have a functioning Blockchain with a solid grasp of how they work.

|

||||

|

||||

### Before you get started…

|

||||

|

||||

Remember that a blockchain is an _immutable, sequential_ chain of records called Blocks. They can contain transactions, files or any data you like, really. But the important thing is that they’re _chained_ together using _hashes_ .

|

||||

|

||||

If you aren’t sure what a hash is, [here’s an explanation][1].

|

||||

|

||||

**_Who is this guide aimed at?_** You should be comfy reading and writing some basic Python, as well as have some understanding of how HTTP requests work, since we’ll be talking to our Blockchain over HTTP.

|

||||

|

||||

**_What do I need?_** Make sure that [Python 3.6][2]+ (along with `pip`) is installed. You’ll also need to install Flask and the wonderful Requests library:

|

||||

|

||||

```

|

||||

pip install Flask==0.12.2 requests==2.18.4

|

||||

```

|

||||

|

||||

Oh, you’ll also need an HTTP Client, like [Postman][3] or cURL. But anything will do.

|

||||

|

||||

**_Where’s the final code?_** The source code is [available here][4].

|

||||

|

||||

* * *

|

||||

|

||||

### Step 1: Building a Blockchain

|

||||

|

||||

Open up your favourite text editor or IDE, personally I ❤️ [PyCharm][5]. Create a new file, called `blockchain.py`. We’ll only use a single file, but if you get lost, you can always refer to the [source code][6].

|

||||

|

||||

#### Representing a Blockchain

|

||||

|

||||

We’ll create a `Blockchain` class whose constructor creates an initial empty list (to store our blockchain), and another to store transactions. Here’s the blueprint for our class:

|

||||

|

||||

```

|

||||

class Blockchain(object):

|

||||

def __init__(self):

|

||||

self.chain = []

|

||||

self.current_transactions = []

|

||||

|

||||

def new_block(self):

|

||||

# Creates a new Block and adds it to the chain

|

||||

pass

|

||||

|

||||

def new_transaction(self):

|

||||

# Adds a new transaction to the list of transactions

|

||||

pass

|

||||

|

||||

@staticmethod

|

||||

def hash(block):

|

||||

# Hashes a Block

|

||||

pass

|

||||

|

||||

@property

|

||||

def last_block(self):

|

||||

# Returns the last Block in the chain

|

||||

pass

|

||||

```

|

||||

|

||||

|

||||

Our Blockchain class is responsible for managing the chain. It will store transactions and have some helper methods for adding new blocks to the chain. Let’s start fleshing out some methods.

|

||||

|

||||

#### What does a Block look like?

|

||||

|

||||

Each Block has an index, a timestamp (in Unix time), a list of transactions, a proof (more on that later), and the hash of the previous Block.

|

||||

|

||||

Here’s an example of what a single Block looks like:

|

||||

|

||||

```

|

||||

block = {

|

||||

'index': 1,

|

||||

'timestamp': 1506057125.900785,

|

||||

'transactions': [

|

||||

{

|

||||

'sender': "8527147fe1f5426f9dd545de4b27ee00",

|

||||

'recipient': "a77f5cdfa2934df3954a5c7c7da5df1f",

|

||||

'amount': 5,

|

||||

}

|

||||

],

|

||||

'proof': 324984774000,

|

||||

'previous_hash': "2cf24dba5fb0a30e26e83b2ac5b9e29e1b161e5c1fa7425e73043362938b9824"

|

||||

}

|

||||

```

|

||||

|

||||

At this point, the idea of a chain should be apparent—each new block contains within itself, the hash of the previous Block. This is crucial because it’s what gives blockchains immutability: If an attacker corrupted an earlier Block in the chain then all subsequent blocks will contain incorrect hashes.

|

||||

|

||||

Does this make sense? If it doesn’t, take some time to let it sink in—it’s the core idea behind blockchains.

|

||||

|

||||

#### Adding Transactions to a Block

|

||||

|

||||

We’ll need a way of adding transactions to a Block. Our new_transaction() method is responsible for this, and it’s pretty straight-forward:

|

||||

|

||||

```

|

||||

class Blockchain(object):

|

||||

...

|

||||

|

||||

def new_transaction(self, sender, recipient, amount):

|

||||

"""

|

||||

Creates a new transaction to go into the next mined Block

|

||||

:param sender: <str> Address of the Sender

|

||||

:param recipient: <str> Address of the Recipient

|

||||

:param amount: <int> Amount

|

||||

:return: <int> The index of the Block that will hold this transaction

|

||||

"""

|

||||

|

||||

self.current_transactions.append({

|

||||

'sender': sender,

|

||||

'recipient': recipient,

|

||||

'amount': amount,

|

||||

})

|

||||

|

||||

return self.last_block['index'] + 1

|

||||

```

|

||||

|

||||

After new_transaction() adds a transaction to the list, it returns the index of the block which the transaction will be added to—the next one to be mined. This will be useful later on, to the user submitting the transaction.

|

||||

|

||||

#### Creating new Blocks

|

||||

|

||||

When our Blockchain is instantiated we’ll need to seed it with a genesis block—a block with no predecessors. We’ll also need to add a “proof” to our genesis block which is the result of mining (or proof of work). We’ll talk more about mining later.

|

||||

|

||||

In addition to creating the genesis block in our constructor, we’ll also flesh out the methods for new_block(), new_transaction() and hash():

|

||||

|

||||

```

|

||||

import hashlib

|

||||

import json

|

||||

from time import time

|

||||

|

||||

|

||||

class Blockchain(object):

|

||||

def __init__(self):

|

||||

self.current_transactions = []

|

||||

self.chain = []

|

||||

|

||||

# Create the genesis block

|

||||

self.new_block(previous_hash=1, proof=100)

|

||||

|

||||

def new_block(self, proof, previous_hash=None):

|

||||

"""

|

||||

Create a new Block in the Blockchain

|

||||

:param proof: <int> The proof given by the Proof of Work algorithm

|

||||

:param previous_hash: (Optional) <str> Hash of previous Block

|

||||

:return: <dict> New Block

|

||||

"""

|

||||

|

||||

block = {

|

||||

'index': len(self.chain) + 1,

|

||||

'timestamp': time(),

|

||||

'transactions': self.current_transactions,

|

||||

'proof': proof,

|

||||

'previous_hash': previous_hash or self.hash(self.chain[-1]),

|

||||

}

|

||||

|

||||

# Reset the current list of transactions

|

||||

self.current_transactions = []

|

||||

|

||||

self.chain.append(block)

|

||||

return block

|

||||

|

||||

def new_transaction(self, sender, recipient, amount):

|

||||

"""

|

||||

Creates a new transaction to go into the next mined Block

|

||||

:param sender: <str> Address of the Sender

|

||||

:param recipient: <str> Address of the Recipient

|

||||

:param amount: <int> Amount

|

||||

:return: <int> The index of the Block that will hold this transaction

|

||||

"""

|

||||

self.current_transactions.append({

|

||||

'sender': sender,

|

||||

'recipient': recipient,

|

||||

'amount': amount,

|

||||

})

|

||||

|

||||

return self.last_block['index'] + 1

|

||||

|

||||

@property

|

||||

def last_block(self):

|

||||

return self.chain[-1]

|

||||

|

||||

@staticmethod

|

||||

def hash(block):

|

||||

"""

|

||||

Creates a SHA-256 hash of a Block

|

||||

:param block: <dict> Block

|

||||

:return: <str>

|

||||

"""

|

||||

|

||||

# We must make sure that the Dictionary is Ordered, or we'll have inconsistent hashes

|

||||

block_string = json.dumps(block, sort_keys=True).encode()

|

||||

return hashlib.sha256(block_string).hexdigest()

|

||||

```

|

||||

|

||||

The above should be straight-forward—I’ve added some comments and docstrings to help keep it clear. We’re almost done with representing our blockchain. But at this point, you must be wondering how new blocks are created, forged or mined.

|

||||

|

||||

#### Understanding Proof of Work

|

||||

|

||||

A Proof of Work algorithm (PoW) is how new Blocks are created or mined on the blockchain. The goal of PoW is to discover a number which solves a problem. The number must be difficult to find but easy to verify—computationally speaking—by anyone on the network. This is the core idea behind Proof of Work.

|

||||

|

||||

We’ll look at a very simple example to help this sink in.

|

||||

|

||||

Let’s decide that the hash of some integer x multiplied by another y must end in 0\. So, hash(x * y) = ac23dc...0\. And for this simplified example, let’s fix x = 5\. Implementing this in Python:

|

||||

|

||||

```

|

||||

from hashlib import sha256

|

||||

|

||||

x = 5

|

||||

y = 0 # We don't know what y should be yet...

|

||||

|

||||

while sha256(f'{x*y}'.encode()).hexdigest()[-1] != "0":

|

||||

y += 1

|

||||

|

||||

print(f'The solution is y = {y}')

|

||||

```

|

||||

|

||||

The solution here is y = 21\. Since, the produced hash ends in 0:

|

||||

|

||||

```

|

||||

hash(5 * 21) = 1253e9373e...5e3600155e860

|

||||

```

|

||||

|

||||

The network is able to easily verify their solution.

|

||||

|

||||

#### Implementing basic Proof of Work

|

||||

|

||||

Let’s implement a similar algorithm for our blockchain. Our rule will be similar to the example above:

|

||||

|

||||

> Find a number p that when hashed with the previous block’s solution a hash with 4 leading 0s is produced.

|

||||

|

||||

```

|

||||

import hashlib

|

||||

import json

|

||||

|

||||

from time import time

|

||||

from uuid import uuid4

|

||||

|

||||

|

||||

class Blockchain(object):

|

||||

...

|

||||

|

||||

def proof_of_work(self, last_proof):

|

||||

"""

|

||||

Simple Proof of Work Algorithm:

|

||||

- Find a number p' such that hash(pp') contains leading 4 zeroes, where p is the previous p'

|

||||

- p is the previous proof, and p' is the new proof

|

||||

:param last_proof: <int>

|

||||

:return: <int>

|

||||

"""

|

||||

|

||||

proof = 0

|

||||

while self.valid_proof(last_proof, proof) is False:

|

||||

proof += 1

|

||||

|

||||

return proof

|

||||

|

||||

@staticmethod

|

||||

def valid_proof(last_proof, proof):

|

||||

"""

|

||||

Validates the Proof: Does hash(last_proof, proof) contain 4 leading zeroes?

|

||||

:param last_proof: <int> Previous Proof

|

||||

:param proof: <int> Current Proof

|

||||

:return: <bool> True if correct, False if not.

|

||||

"""

|

||||

|

||||

guess = f'{last_proof}{proof}'.encode()

|

||||

guess_hash = hashlib.sha256(guess).hexdigest()

|

||||

return guess_hash[:4] == "0000"

|

||||

```

|

||||

|

||||

To adjust the difficulty of the algorithm, we could modify the number of leading zeroes. But 4 is sufficient. You’ll find out that the addition of a single leading zero makes a mammoth difference to the time required to find a solution.

|

||||

|

||||

Our class is almost complete and we’re ready to begin interacting with it using HTTP requests.

|

||||

|

||||

* * *

|

||||

|

||||

### Step 2: Our Blockchain as an API

|

||||

|

||||

We’re going to use the Python Flask Framework. It’s a micro-framework and it makes it easy to map endpoints to Python functions. This allows us talk to our blockchain over the web using HTTP requests.

|

||||

|

||||

We’ll create three methods:

|

||||

|

||||

* `/transactions/new` to create a new transaction to a block

|

||||

|

||||

* `/mine` to tell our server to mine a new block.

|

||||

|

||||

* `/chain` to return the full Blockchain.

|

||||

|

||||

#### Setting up Flask

|

||||

|

||||

Our “server” will form a single node in our blockchain network. Let’s create some boilerplate code:

|

||||

|

||||

```

|

||||

import hashlib

|

||||

import json

|

||||

from textwrap import dedent

|

||||

from time import time

|

||||

from uuid import uuid4

|

||||

|

||||

from flask import Flask

|

||||

|

||||

|

||||

class Blockchain(object):

|

||||

...

|

||||

|

||||

|

||||

# Instantiate our Node

|

||||

app = Flask(__name__)

|

||||

|

||||

# Generate a globally unique address for this node

|

||||

node_identifier = str(uuid4()).replace('-', '')

|

||||

|

||||

# Instantiate the Blockchain

|

||||

blockchain = Blockchain()

|

||||

|

||||

|

||||

@app.route('/mine', methods=['GET'])

|

||||

def mine():

|

||||

return "We'll mine a new Block"

|

||||

|

||||

@app.route('/transactions/new', methods=['POST'])

|

||||

def new_transaction():

|

||||

return "We'll add a new transaction"

|

||||

|

||||

@app.route('/chain', methods=['GET'])

|

||||

def full_chain():

|

||||

response = {

|

||||

'chain': blockchain.chain,

|

||||

'length': len(blockchain.chain),

|

||||

}

|

||||

return jsonify(response), 200

|

||||

|

||||

if __name__ == '__main__':

|

||||

app.run(host='0.0.0.0', port=5000)

|

||||

```

|

||||

|

||||

A brief explanation of what we’ve added above:

|

||||

|

||||

* Line 15: Instantiates our Node. Read more about Flask [here][7].

|

||||

|

||||

* Line 18: Create a random name for our node.

|

||||

|

||||

* Line 21: Instantiate our Blockchain class.

|

||||

|

||||

* Line 24–26: Create the /mine endpoint, which is a GET request.

|

||||

|

||||

* Line 28–30: Create the /transactions/new endpoint, which is a POST request, since we’ll be sending data to it.

|

||||

|

||||

* Line 32–38: Create the /chain endpoint, which returns the full Blockchain.

|

||||

|

||||

* Line 40–41: Runs the server on port 5000.

|

||||

|

||||

#### The Transactions Endpoint

|

||||

|

||||

This is what the request for a transaction will look like. It’s what the user sends to the server:

|

||||

|

||||

```

|

||||

{ "sender": "my address", "recipient": "someone else's address", "amount": 5}

|

||||

```

|

||||

|

||||

```

|

||||

import hashlib

|

||||

import json

|

||||

from textwrap import dedent

|

||||

from time import time

|

||||

from uuid import uuid4

|

||||

|

||||

from flask import Flask, jsonify, request

|

||||

|

||||

...

|

||||

|

||||

@app.route('/transactions/new', methods=['POST'])

|

||||

def new_transaction():

|

||||

values = request.get_json()

|

||||

|

||||

# Check that the required fields are in the POST'ed data

|

||||

required = ['sender', 'recipient', 'amount']

|

||||

if not all(k in values for k in required):

|

||||

return 'Missing values', 400

|

||||

|

||||

# Create a new Transaction

|

||||

index = blockchain.new_transaction(values['sender'], values['recipient'], values['amount'])

|

||||

|

||||

response = {'message': f'Transaction will be added to Block {index}'}

|

||||

return jsonify(response), 201

|

||||

```

|

||||

A method for creating Transactions

|

||||

|

||||

#### The Mining Endpoint

|

||||

|

||||

Our mining endpoint is where the magic happens, and it’s easy. It has to do three things:

|

||||

|

||||

1. Calculate the Proof of Work

|

||||

|

||||

2. Reward the miner (us) by adding a transaction granting us 1 coin

|

||||

|

||||

3. Forge the new Block by adding it to the chain

|

||||

|

||||

```

|

||||

import hashlib

|

||||

import json

|

||||

|

||||

from time import time

|

||||

from uuid import uuid4

|

||||

|

||||

from flask import Flask, jsonify, request

|

||||

|

||||

...

|

||||

|

||||

@app.route('/mine', methods=['GET'])

|

||||

def mine():

|

||||

# We run the proof of work algorithm to get the next proof...

|

||||

last_block = blockchain.last_block

|

||||

last_proof = last_block['proof']

|

||||

proof = blockchain.proof_of_work(last_proof)

|

||||

|

||||

# We must receive a reward for finding the proof.

|

||||

# The sender is "0" to signify that this node has mined a new coin.

|

||||

blockchain.new_transaction(

|

||||

sender="0",

|

||||

recipient=node_identifier,

|

||||

amount=1,

|

||||

)

|

||||

|

||||

# Forge the new Block by adding it to the chain

|

||||

previous_hash = blockchain.hash(last_block)

|

||||

block = blockchain.new_block(proof, previous_hash)

|

||||

|

||||

response = {

|

||||

'message': "New Block Forged",

|

||||

'index': block['index'],

|

||||

'transactions': block['transactions'],

|

||||

'proof': block['proof'],

|

||||

'previous_hash': block['previous_hash'],

|

||||

}

|

||||

return jsonify(response), 200

|

||||

```

|

||||

|

||||

Note that the recipient of the mined block is the address of our node. And most of what we’ve done here is just interact with the methods on our Blockchain class. At this point, we’re done, and can start interacting with our blockchain.

|

||||

|

||||

### Step 3: Interacting with our Blockchain

|

||||

|

||||

You can use plain old cURL or Postman to interact with our API over a network.

|

||||

|

||||

Fire up the server:

|

||||

|

||||

```

|

||||

$ python blockchain.py

|

||||

```

|

||||

|

||||

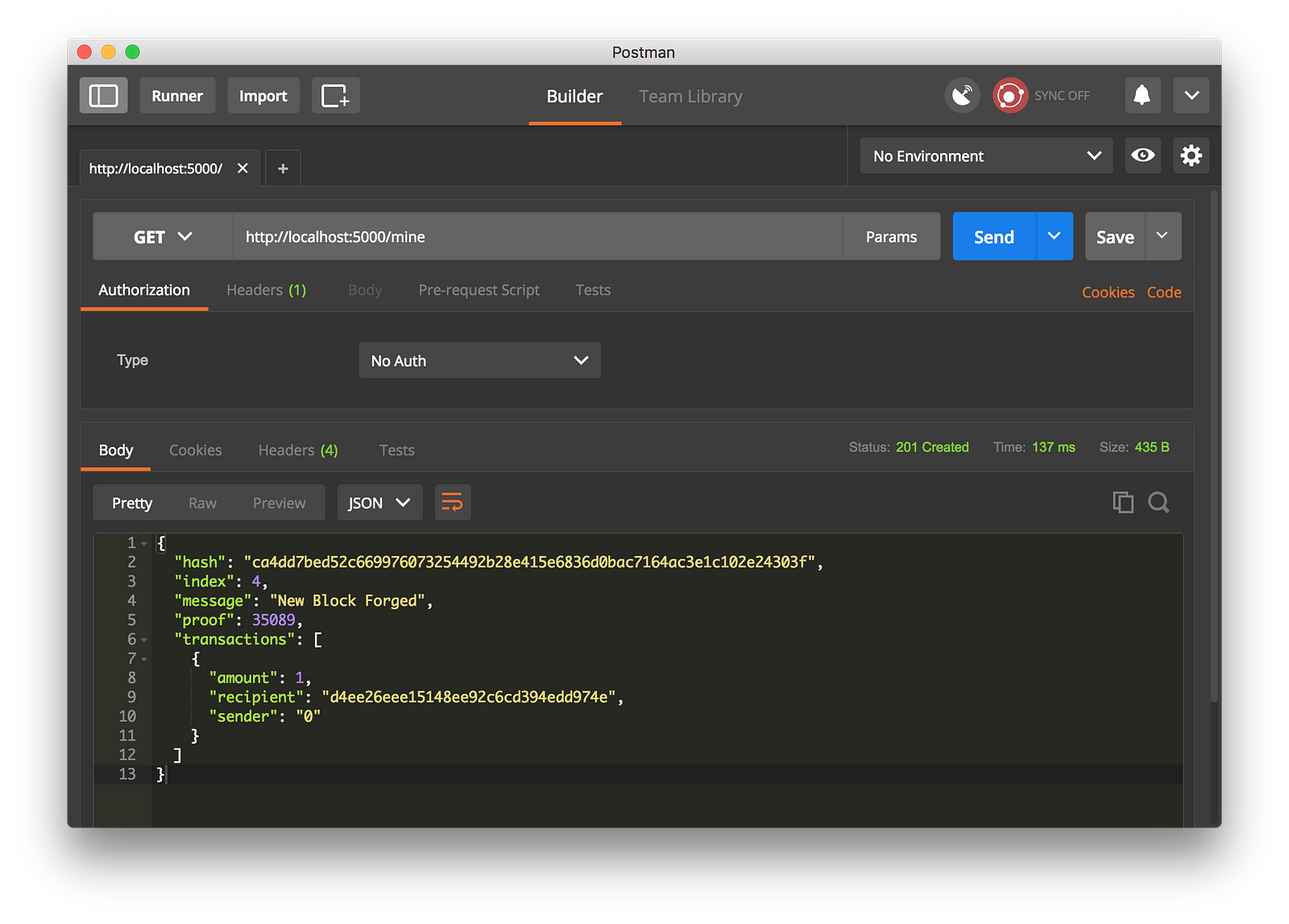

Let’s try mining a block by making a GET request to http://localhost:5000/mine:

|

||||

|

||||

|

||||

Using Postman to make a GET request

|

||||

|

||||

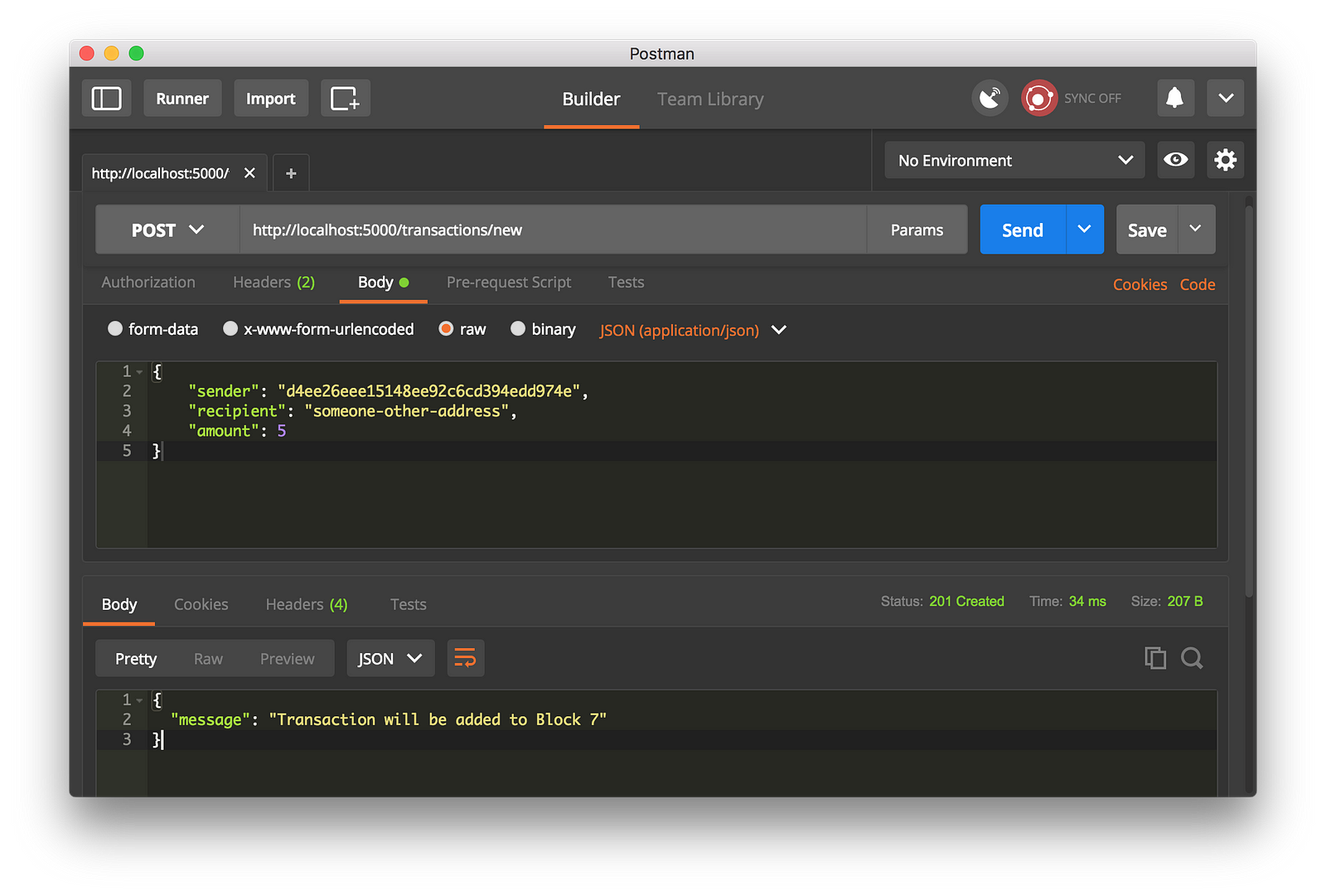

Let’s create a new transaction by making a POST request tohttp://localhost:5000/transactions/new with a body containing our transaction structure:

|

||||

|

||||

|

||||

Using Postman to make a POST request

|

||||

|

||||

If you aren’t using Postman, then you can make the equivalent request using cURL:

|

||||

|

||||

```

|

||||

$ curl -X POST -H "Content-Type: application/json" -d '{ "sender": "d4ee26eee15148ee92c6cd394edd974e", "recipient": "someone-other-address", "amount": 5}' "http://localhost:5000/transactions/new"

|

||||

```

|

||||

I restarted my server, and mined two blocks, to give 3 in total. Let’s inspect the full chain by requesting http://localhost:5000/chain:

|

||||

```

|

||||

{

|

||||

"chain": [

|

||||

{

|

||||

"index": 1,

|

||||

"previous_hash": 1,

|

||||

"proof": 100,

|

||||

"timestamp": 1506280650.770839,

|

||||

"transactions": []

|

||||

},

|

||||

{

|

||||

"index": 2,

|

||||

"previous_hash": "c099bc...bfb7",

|

||||

"proof": 35293,

|

||||

"timestamp": 1506280664.717925,

|

||||

"transactions": [

|

||||

{

|

||||

"amount": 1,

|

||||

"recipient": "8bbcb347e0634905b0cac7955bae152b",

|

||||

"sender": "0"

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"index": 3,

|

||||

"previous_hash": "eff91a...10f2",

|

||||

"proof": 35089,

|

||||

"timestamp": 1506280666.1086972,

|

||||

"transactions": [

|

||||

{

|

||||

"amount": 1,

|

||||

"recipient": "8bbcb347e0634905b0cac7955bae152b",

|

||||

"sender": "0"

|

||||

}

|

||||

]

|

||||

}

|

||||

],

|

||||

"length": 3

|

||||

```

|

||||

### Step 4: Consensus

|

||||

|

||||

This is very cool. We’ve got a basic Blockchain that accepts transactions and allows us to mine new Blocks. But the whole point of Blockchains is that they should be decentralized. And if they’re decentralized, how on earth do we ensure that they all reflect the same chain? This is called the problem of Consensus, and we’ll have to implement a Consensus Algorithm if we want more than one node in our network.

|

||||

|

||||

#### Registering new Nodes

|

||||

|

||||

Before we can implement a Consensus Algorithm, we need a way to let a node know about neighbouring nodes on the network. Each node on our network should keep a registry of other nodes on the network. Thus, we’ll need some more endpoints:

|

||||

|

||||

1. /nodes/register to accept a list of new nodes in the form of URLs.

|

||||

|

||||

2. /nodes/resolve to implement our Consensus Algorithm, which resolves any conflicts—to ensure a node has the correct chain.

|

||||

|

||||

We’ll need to modify our Blockchain’s constructor and provide a method for registering nodes:

|

||||

|

||||

```

|

||||

...

|

||||

from urllib.parse import urlparse

|

||||

...

|

||||

|

||||

|

||||

class Blockchain(object):

|

||||

def __init__(self):

|

||||

...

|

||||

self.nodes = set()

|

||||

...

|

||||

|

||||

def register_node(self, address):

|

||||

"""

|

||||

Add a new node to the list of nodes

|

||||

:param address: <str> Address of node. Eg. 'http://192.168.0.5:5000'

|

||||

:return: None

|

||||

"""

|

||||

|

||||

parsed_url = urlparse(address)

|

||||

self.nodes.add(parsed_url.netloc)

|

||||

```

|

||||

A method for adding neighbouring nodes to our Network

|

||||

|

||||

Note that we’ve used a set() to hold the list of nodes. This is a cheap way of ensuring that the addition of new nodes is idempotent—meaning that no matter how many times we add a specific node, it appears exactly once.

|

||||

|

||||

#### Implementing the Consensus Algorithm

|

||||

|

||||

As mentioned, a conflict is when one node has a different chain to another node. To resolve this, we’ll make the rule that the longest valid chain is authoritative. In other words, the longest chain on the network is the de-facto one. Using this algorithm, we reach Consensus amongst the nodes in our network.

|

||||

|

||||

```

|

||||

...

|

||||

import requests

|

||||

|

||||

|

||||

class Blockchain(object)

|

||||

...

|

||||

|

||||

def valid_chain(self, chain):

|

||||

"""

|

||||

Determine if a given blockchain is valid

|

||||

:param chain: <list> A blockchain

|

||||

:return: <bool> True if valid, False if not

|

||||

"""

|

||||

|

||||

last_block = chain[0]

|

||||

current_index = 1

|

||||

|

||||

while current_index < len(chain):

|

||||

block = chain[current_index]

|

||||

print(f'{last_block}')

|

||||

print(f'{block}')

|

||||

print("\n-----------\n")

|

||||

# Check that the hash of the block is correct

|

||||

if block['previous_hash'] != self.hash(last_block):

|

||||

return False

|

||||

|

||||

# Check that the Proof of Work is correct

|

||||

if not self.valid_proof(last_block['proof'], block['proof']):

|

||||

return False

|

||||

|

||||

last_block = block

|

||||

current_index += 1

|

||||

|

||||

return True

|

||||

|

||||

def resolve_conflicts(self):

|

||||

"""

|

||||

This is our Consensus Algorithm, it resolves conflicts

|

||||

by replacing our chain with the longest one in the network.

|

||||

:return: <bool> True if our chain was replaced, False if not

|

||||

"""

|

||||

|

||||

neighbours = self.nodes

|

||||

new_chain = None

|

||||

|

||||

# We're only looking for chains longer than ours

|

||||

max_length = len(self.chain)

|

||||

|

||||

# Grab and verify the chains from all the nodes in our network

|

||||

for node in neighbours:

|

||||

response = requests.get(f'http://{node}/chain')

|

||||

|

||||

if response.status_code == 200:

|

||||

length = response.json()['length']

|

||||

chain = response.json()['chain']

|

||||

|

||||

# Check if the length is longer and the chain is valid

|

||||

if length > max_length and self.valid_chain(chain):

|

||||

max_length = length

|

||||

new_chain = chain

|

||||

|

||||

# Replace our chain if we discovered a new, valid chain longer than ours

|

||||

if new_chain:

|

||||

self.chain = new_chain

|

||||

return True

|

||||

|

||||

return False

|

||||

```

|

||||

|

||||

The first method valid_chain() is responsible for checking if a chain is valid by looping through each block and verifying both the hash and the proof.

|

||||

|

||||

resolve_conflicts() is a method which loops through all our neighbouring nodes, downloads their chains and verifies them using the above method. If a valid chain is found, whose length is greater than ours, we replace ours.

|

||||

|

||||

Let’s register the two endpoints to our API, one for adding neighbouring nodes and the another for resolving conflicts:

|

||||

|

||||

```

|

||||

@app.route('/nodes/register', methods=['POST'])

|

||||

def register_nodes():

|

||||

values = request.get_json()

|

||||

|

||||

nodes = values.get('nodes')

|

||||

if nodes is None:

|

||||

return "Error: Please supply a valid list of nodes", 400

|

||||

|

||||

for node in nodes:

|

||||

blockchain.register_node(node)

|

||||

|

||||

response = {

|

||||

'message': 'New nodes have been added',

|

||||

'total_nodes': list(blockchain.nodes),

|

||||

}

|

||||

return jsonify(response), 201

|

||||

|

||||

|

||||

@app.route('/nodes/resolve', methods=['GET'])

|

||||

def consensus():

|

||||

replaced = blockchain.resolve_conflicts()

|

||||

|

||||

if replaced:

|

||||

response = {

|

||||

'message': 'Our chain was replaced',

|

||||

'new_chain': blockchain.chain

|

||||

}

|

||||

else:

|

||||

response = {

|

||||

'message': 'Our chain is authoritative',

|

||||

'chain': blockchain.chain

|

||||

}

|

||||

|

||||

return jsonify(response), 200

|

||||

```

|

||||

|

||||

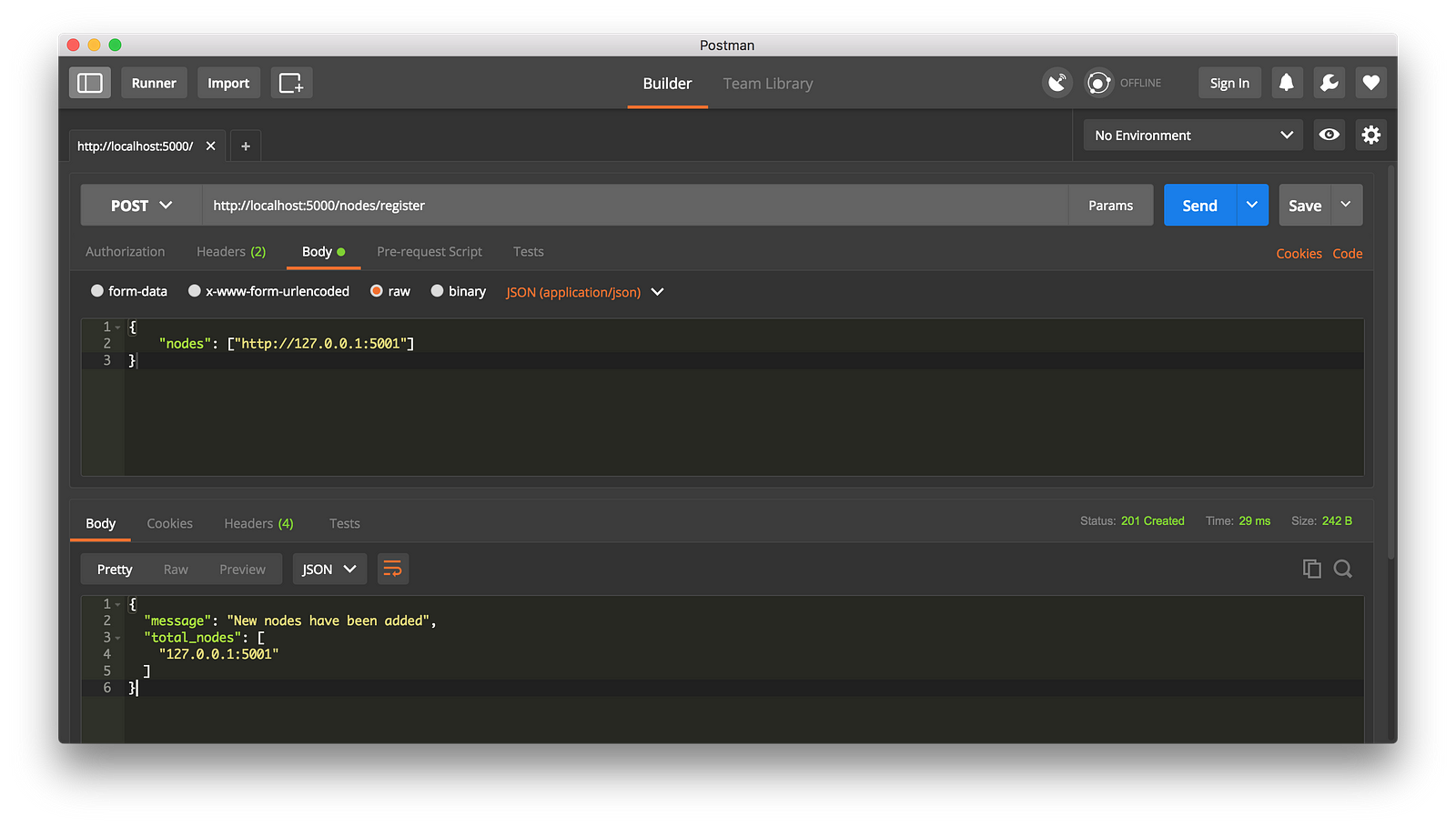

At this point you can grab a different machine if you like, and spin up different nodes on your network. Or spin up processes using different ports on the same machine. I spun up another node on my machine, on a different port, and registered it with my current node. Thus, I have two nodes: [http://localhost:5000][9] and http://localhost:5001.

|

||||

|

||||

|

||||

Registering a new Node

|

||||

|

||||

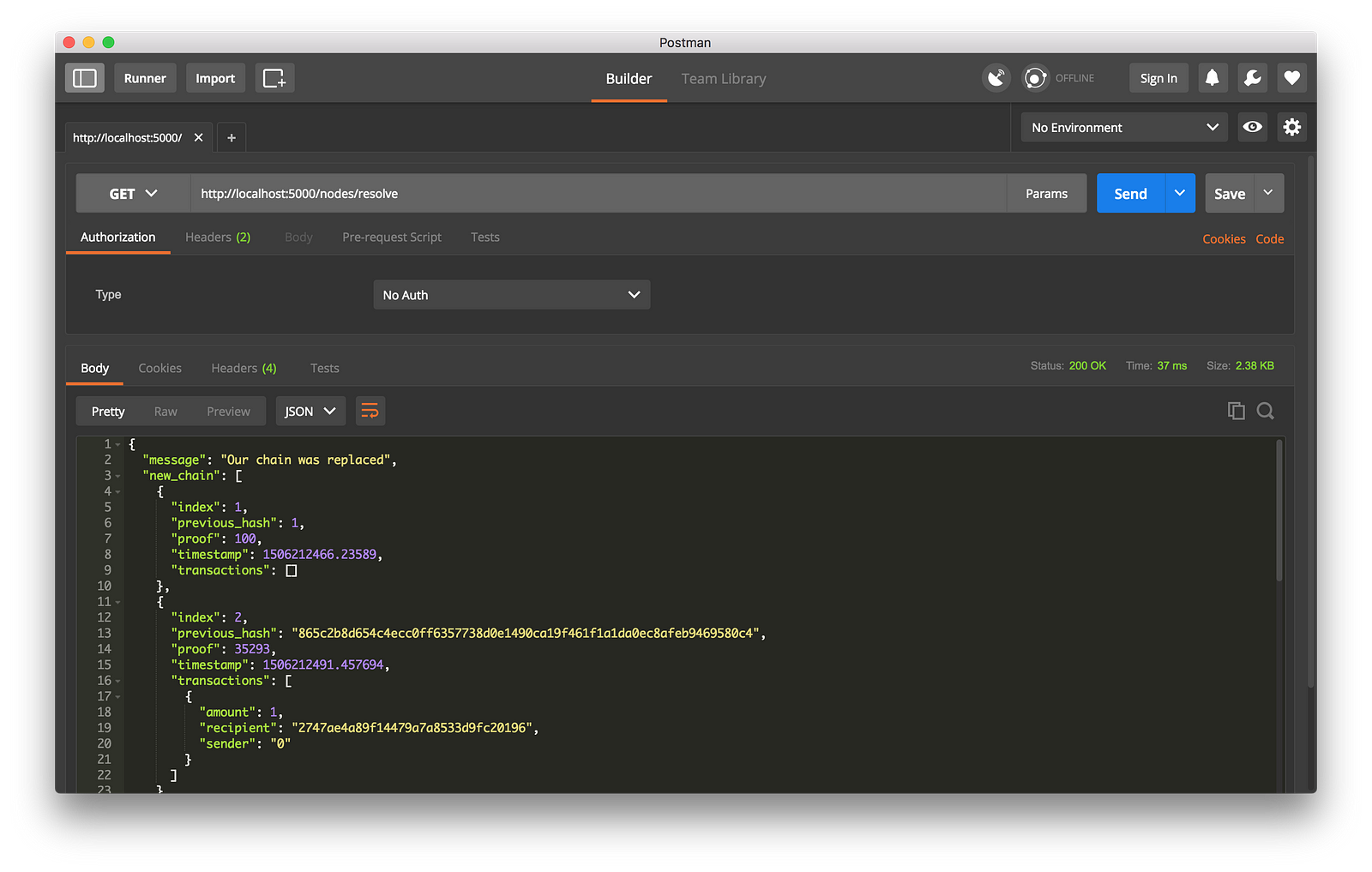

I then mined some new Blocks on node 2, to ensure the chain was longer. Afterward, I called GET /nodes/resolve on node 1, where the chain was replaced by the Consensus Algorithm:

|

||||

|

||||

|

||||

Consensus Algorithm at Work

|

||||

|

||||

And that’s a wrap... Go get some friends together to help test out your Blockchain.

|

||||

|

||||

* * *

|

||||

|

||||

I hope that this has inspired you to create something new. I’m ecstatic about Cryptocurrencies because I believe that Blockchains will rapidly change the way we think about economies, governments and record-keeping.

|

||||

|

||||

**Update:** I’m planning on following up with a Part 2, where we’ll extend our Blockchain to have a Transaction Validation Mechanism as well as discuss some ways in which you can productionize your Blockchain.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://hackernoon.com/learn-blockchains-by-building-one-117428612f46

|

||||

|

||||

作者:[Daniel van Flymen][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://hackernoon.com/@vanflymen?source=post_header_lockup

|

||||

[1]:https://learncryptography.com/hash-functions/what-are-hash-functions

|

||||

[2]:https://www.python.org/downloads/

|

||||

[3]:https://www.getpostman.com

|

||||

[4]:https://github.com/dvf/blockchain

|

||||

[5]:https://www.jetbrains.com/pycharm/

|

||||

[6]:https://github.com/dvf/blockchain

|

||||

[7]:http://flask.pocoo.org/docs/0.12/quickstart/#a-minimal-application

|

||||

[8]:http://localhost:5000/transactions/new

|

||||

[9]:http://localhost:5000

|

||||

@ -1,3 +1,5 @@

|

||||

lontow translating

|

||||

|

||||

5 ways open source can strengthen your job search

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

Translating By MjSeven

|

||||

|

||||

How to install software applications on Linux

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

The Type Command Tutorial With Examples For Beginners

|

||||

======

|

||||

|

||||

|

||||

@ -1,195 +0,0 @@

|

||||

hankchow translating

|

||||

|

||||

How to measure particulate matter with a Raspberry Pi

|

||||

======

|

||||

|

||||

|

||||

We regularly measure particulate matter in the air at our school in Southeast Asia. The values here are very high, particularly between February and May, when weather conditions are very dry and hot, and many fields burn. These factors negatively affect the quality of the air. In this article, I will show you how to measure particulate matter using a Raspberry Pi.

|

||||

|

||||

### What is particulate matter?

|

||||

|

||||

Particulate matter is fine dust or very small particles in the air. A distinction is made between PM10 and PM2.5: PM10 refers to particles that are smaller than 10µm; PM2.5 refers to particles that are smaller than 2.5µm. The smaller the particles—i.e., anything smaller than 2.5µm—the more dangerous they are to one's health, as they can penetrate into the alveoli and impact the respiratory system.

|

||||

|

||||

The World Health Organization recommends [limiting particulate matter][1] to the following values:

|

||||

|

||||

* Annual average PM10 20 µg/m³

|

||||

* Annual average PM2,5 10 µg/m³ per year

|

||||

* Daily average PM10 50 µg/m³ without permitted days on which exceeding is possible.

|

||||

* Daily average PM2,5 25 µg/m³ without permitted days on which exceeding is possible.

|

||||

|

||||

|

||||

|

||||

These values are below the limits set in most countries. In the European Union, an annual average of 40 µg/m³ for PM10 is allowed.

|

||||

|

||||

### What is the Air Quality Index (AQI)?

|

||||

|

||||

The Air Quality Index indicates how “good” or “bad” air is based on its particulate measurement. Unfortunately, there is no uniform standard for AQI because not all countries calculate it the same way. The Wikipedia article on the [Air Quality Index][2] offers a helpful overview. At our school, we are guided by the classification established by the United States' [Environmental Protection Agency][3].

|

||||

|

||||

|

||||

![Air quality index][5]

|

||||

|

||||

Air quality index

|

||||

|

||||

### What do we need to measure particulate matter?

|

||||

|

||||

Measuring particulate matter requires only two things:

|

||||

|

||||

* A Raspberry Pi (every model works; a model with WiFi is best)

|

||||

* A particulates sensor SDS011

|

||||

|

||||

|

||||

|

||||

![Particulate sensor][7]

|

||||

|

||||

Particulate sensor

|

||||

|

||||

If you are using a Raspberry Pi Zero W, you will also need an adapter cable to a standard USB port because the Zero has only a Micro USB. These are available for about $20. The sensor comes with a USB adapter for the serial interface.

|

||||

|

||||

### Installation

|

||||

|

||||

For our Raspberry Pi we download the corresponding Raspbian Lite Image and [write it on the Micro SD card][8]. (I will not go into the details of setting up the WLAN connection; many tutorials are available online).

|

||||

|

||||

If you want to have SSH enabled after booting, you need to create an empty file named `ssh` in the boot partition. The IP of the Raspberry Pi can best be obtained via your own router/DHCP server. You can then log in via SSH (the default password is raspberry):

|

||||

```

|

||||

$ ssh pi@192.168.1.5

|

||||

|

||||

```

|

||||

|

||||

First we need to install some packages on the Pi:

|

||||

```

|

||||

$ sudo apt install git-core python-serial python-enum lighttpd

|

||||

|

||||

```

|

||||

|

||||

Before we can start, we need to know which serial port the USB adapter is connected to. `dmesg` helps us:

|

||||

```

|

||||

$ dmesg

|

||||

|

||||

[ 5.559802] usbcore: registered new interface driver usbserial

|

||||

|

||||

[ 5.559930] usbcore: registered new interface driver usbserial_generic

|

||||

|

||||

[ 5.560049] usbserial: USB Serial support registered for generic

|

||||

|

||||

[ 5.569938] usbcore: registered new interface driver ch341

|

||||

|

||||

[ 5.570079] usbserial: USB Serial support registered for ch341-uart

|

||||

|

||||

[ 5.570217] ch341 1–1.4:1.0: ch341-uart converter detected

|

||||

|

||||

[ 5.575686] usb 1–1.4: ch341-uart converter now attached to ttyUSB0

|

||||

|

||||

```

|

||||

|

||||

In the last line, you can see our interface: `ttyUSB0`. We now need a small Python script that reads the data and saves it in a JSON file, and then we will create a small HTML page that reads and displays the data.

|

||||

|

||||

### Reading data on the Raspberry Pi

|

||||

|

||||

We first create an instance of the sensor and then read the sensor every 5 minutes, for 30 seconds. These values can, of course, be adjusted. Between the measuring intervals, we put the sensor into a sleep mode to increase its lifespan (according to the manufacturer, the lifespan totals approximately 8000 hours).

|

||||

|

||||

We can download the script with this command:

|

||||

```

|

||||

$ wget -O /home/pi/aqi.py https://raw.githubusercontent.com/zefanja/aqi/master/python/aqi.py

|

||||

|

||||

```

|

||||

|

||||

For the script to run without errors, two small things are still needed:

|

||||

```

|

||||

$ sudo chown pi:pi /var/wwww/html/

|

||||

|

||||

$ echo[] > /var/wwww/html/aqi.json

|

||||

|

||||

```

|

||||

|

||||

Now you can start the script:

|

||||

```

|

||||

$ chmod +x aqi.py

|

||||

|

||||

$ ./aqi.py

|

||||

|

||||

PM2.5:55.3, PM10:47.5

|

||||

|

||||

PM2.5:55.5, PM10:47.7

|

||||

|

||||

PM2.5:55.7, PM10:47.8

|

||||

|

||||

PM2.5:53.9, PM10:47.6

|

||||

|

||||

PM2.5:53.6, PM10:47.4

|

||||

|

||||

PM2.5:54.2, PM10:47.3

|

||||

|

||||

…

|

||||

|

||||

```

|

||||

|

||||

### Run the script automatically

|

||||

|

||||

So that we don’t have to start the script manually every time, we can let it start with a cronjob, e.g., with every restart of the Raspberry Pi. To do this, open the crontab file:

|

||||

```

|

||||

$ crontab -e

|

||||

|

||||

```

|

||||

|

||||

and add the following line at the end:

|

||||

```

|

||||

@reboot cd /home/pi/ && ./aqi.py

|

||||

|

||||

```

|

||||

|

||||

Now our script starts automatically with every restart.

|

||||

|

||||

### HTML page for displaying measured values and AQI

|

||||

|

||||

We have already installed a lightweight webserver, `lighttpd`. So we need to save our HTML, JavaScript, and CSS files in the directory `/var/www/html/` so that we can access the data from another computer or smartphone. With the next three commands, we simply download the corresponding files:

|

||||

```

|

||||

$ wget -O /var/wwww/html/index.html https://raw.githubusercontent.com/zefanja/aqi/master/html/index.html

|

||||

|

||||

$ wget -O /var/wwww/html/aqi.js https://raw.githubusercontent.com/zefanja/aqi/master/html/aqi.js

|

||||

|

||||

$ wget -O /var/wwww/html/style.css https://raw.githubusercontent.com/zefanja/aqi/master/html/style.css

|

||||

|

||||

```

|

||||

|

||||

The main work is done in the JavaScript file, which opens our JSON file, takes the last value, and calculates the AQI based on this value. Then the background colors are adjusted according to the scale of the EPA.

|

||||

|

||||

Now you simply open the address of the Raspberry Pi in your browser and look at the current particulates values, e.g., [http://192.168.1.5:][9]

|

||||

|

||||

The page is very simple and can be extended, for example, with a chart showing the history of the last hours, etc. Pull requests are welcome.

|

||||

|

||||

The complete [source code is available on Github][10].

|

||||

|

||||

**[Enter our[Raspberry Pi week giveaway][11] for a chance at this arcade gaming kit.]**

|

||||

|

||||

### Wrapping up

|

||||

|

||||

For relatively little money, we can measure particulate matter with a Raspberry Pi. There are many possible applications, from a permanent outdoor installation to a mobile measuring device. At our school, we use both: There is a sensor that measures outdoor values day and night, and a mobile sensor that checks the effectiveness of the air conditioning filters in our classrooms.

|

||||

|

||||

[Luftdaten.info][12] offers guidance to build a similar sensor. The software is delivered ready to use, and the measuring device is even more compact because it does not use a Raspberry Pi. Great project!

|

||||

|

||||

Creating a particulates sensor is an excellent project to do with students in computer science classes or a workshop.

|

||||

|

||||

What do you use a [Raspberry Pi][13] for?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/3/how-measure-particulate-matter-raspberry-pi

|

||||

|

||||

作者:[Stephan Tetzel][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/stephan

|

||||

[1]:https://en.wikipedia.org/wiki/Particulates

|

||||

[2]:https://en.wikipedia.org/wiki/Air_quality_index

|

||||

[3]:https://en.wikipedia.org/wiki/United_States_Environmental_Protection_Agency

|

||||

[5]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/images/life-uploads/air_quality_index.png?itok=FwmGf1ZS (Air quality index)

|

||||

[7]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/images/life-uploads/particulate_sensor.jpg?itok=ddH3bBwO (Particulate sensor)

|

||||

[8]:https://www.raspberrypi.org/documentation/installation/installing-images/README.md

|

||||

[9]:http://192.168.1.5/

|

||||

[10]:https://github.com/zefanja/aqi

|

||||

[11]:https://opensource.com/article/18/3/raspberry-pi-week-giveaway

|

||||

[12]:http://luftdaten.info/

|

||||

[13]:https://openschoolsolutions.org/shutdown-servers-case-power-failure%e2%80%8a-%e2%80%8aups-nut-co/

|

||||

@ -0,0 +1,201 @@

|

||||

A Command Line Productivity Tool For Tracking Work Hours

|

||||

======

|

||||

|

||||

|

||||

Keeping track of your work hours will give you an insight about the amount of work you get done in a specific time frame. There are plenty of GUI-based productivity tools available on the Internet for tracking work hours. However, I couldn’t find a good CLI-based tool. Today, I stumbled upon a a simple, yet useful tool named **“Moro”** for tracking work hours. Moro is a Finnish word which means “Hello”. Using Moro, you can find how much time you take to complete a specific task. It is free, open source and written using **NodeJS**.

|

||||

|

||||

### Moro – A Command Line Productivity Tool For Tracking Work Hours

|

||||

|

||||

Since Moro is written using NodeJS, make sure you have installed it on your system. If you haven’t installed it already, follow the link given below to install NodeJS and NPM in your Linux box.

|

||||

|

||||

Once NodeJS and Npm installed, run the following command to install Moro.

|

||||

```

|

||||

$ npm install -g moro

|

||||

|

||||

```

|

||||

|

||||

### Usage

|

||||

|

||||

Moro’s working concept is very simple. It saves your work staring time, ending time and the break time in your system. At the end of each day, it will tell you how many hours you have worked!

|

||||

|

||||

When you reached to office, just type:

|

||||

```

|

||||

$ moro

|

||||

|

||||

```

|

||||

|

||||

Sample output:

|

||||

```

|

||||

💙 Moro \o/

|

||||

|

||||

✔ You clocked in at: 9:20

|

||||

|

||||

```

|

||||

|

||||

Moro will register this time as your starting time.

|

||||

|

||||

When you leave the office, again type:

|

||||

```

|

||||

$ moro

|

||||

|

||||

```

|

||||

|

||||

Sample output:

|

||||

```

|

||||

💙 Moro \o/

|

||||

|

||||

✔ You clocked out at: 19:22

|

||||

|

||||

ℹ Today looks like this so far:

|

||||

|

||||

┌──────────────────┬─────────────────────────┐

|

||||

│ Today you worked │ 9 Hours and 72 Minutes │

|

||||

├──────────────────┼─────────────────────────┤

|

||||

│ Clock in │ 9:20 │

|

||||

├──────────────────┼─────────────────────────┤

|

||||

│ Clock out │ 19:22 │

|

||||

├──────────────────┼─────────────────────────┤

|

||||

│ Break duration │ 30 minutes │

|

||||

├──────────────────┼─────────────────────────┤

|

||||

│ Date │ 2018-03-19 │

|

||||

└──────────────────┴─────────────────────────┘

|

||||

ℹ Run moro --help to learn how to edit your clock in, clock out or break duration for today

|

||||

|

||||

```

|

||||

|

||||

Moro will registers that time as your ending time.

|

||||

|

||||

Now, More will subtract the starting time from ending time and then subtracts another 30 minutes for break time from the total and gives you the total working hours on that day. Sorry I am really terrible at explaining Math calculations. Let us say you came to work at 10 am in the morning and left 17.30 in the evening. So, the total hours you spent on the office is 7.30 hours (i.e 17.30-10). Then subtract the break time (default is 30 minutes) from the total. Hence, your total working time is 7 hours. Understood? Great!

|

||||

|

||||

**Note:** Don’t confuse “moro” with “more” command like I did while writing this guide.

|

||||

|

||||

To see all your registered hours, run:

|

||||

```

|

||||

$ moro report --all

|

||||

|

||||

```

|

||||

|

||||

Just in case, you forgot to register the start time or end time, you can specify that later on the same.

|

||||

|

||||

For example, to register 10 am as start time, run:

|

||||

```

|

||||

$ moro hi 10:00

|

||||

|

||||

💙 Moro \o/

|

||||

|

||||

✔ You clocked in at: 10:00

|

||||

|

||||

⏰ Working until 18:00 will make it a full (7.5 hours) day

|

||||

|

||||

```

|

||||

|

||||

To register 17.30 as end time:

|

||||

```

|

||||

$ moro bye 17:30

|

||||

|

||||

💙 Moro \o/

|

||||

|

||||

✔ You clocked out at: 17:30

|

||||

|

||||

ℹ Today looks like this so far:

|

||||

|

||||

┌──────────────────┬───────────────────────┐

|

||||

│ Today you worked │ 7 Hours and 0 Minutes │

|

||||

├──────────────────┼───────────────────────┤

|

||||

│ Clock in │ 10:00 │

|

||||

├──────────────────┼───────────────────────┤

|

||||

│ Clock out │ 17:30 │

|

||||

├──────────────────┼───────────────────────┤

|

||||

│ Break duration │ 30 minutes │

|

||||

├──────────────────┼───────────────────────┤

|

||||

│ Date │ 2018-03-19 │

|

||||

└──────────────────┴───────────────────────┘

|

||||

ℹ Run moro --help to learn how to edit your clock in, clock out or break duration for today

|

||||

|

||||

```

|

||||

|

||||

You already know Moro will subtract 30 minutes for break time, by default. If you wanted to set a custom break time, you could simply set it using command:

|

||||

```

|

||||

$ moro break 45

|

||||

|

||||

```

|

||||

|

||||

Now, the break time is 45 minutes.

|

||||

|

||||

To clear all data:

|

||||

```

|

||||

$ moro clear --yes

|

||||

|

||||

💙 Moro \o/

|

||||

|

||||

✔ Database file deleted successfully

|

||||

|

||||

```

|

||||

|

||||

**Add notes**

|

||||

|

||||

Sometimes, you may want to add note while working. Don’t look for a separate note taking application. Moro will help you to add notes. To add a note, just run:

|

||||

```

|

||||

$ moro note mynotes

|

||||

|

||||

```

|

||||

|

||||

To search for the registered notes at later time, simply do:

|

||||

```

|

||||

$ moro search mynotes

|

||||

|

||||

```

|

||||

|

||||

**Change default settings**

|

||||

|

||||

The default full work day is 7.5 hours. Since the developer is from Finland, it’s the official work hours. You can, however, change this settings as per your country’s work hours.

|

||||

|

||||

Say for example, to set it 7 hours, run:

|

||||

```

|

||||

$ moro config --day 7

|

||||

|

||||

```

|

||||

|

||||

Also the default break time can be changed from 30 minutes like below:

|

||||

```

|

||||

$ moro config --break 45

|

||||

|

||||

```

|

||||

|

||||

**Backup your data**

|

||||

|

||||

Like I already said, Moro stores the tracking time data in your home directory, and the file name is **.moro-data.db**.

|

||||

|

||||

You can can, however, save the backup database file to different location. To do so, move the **.more-data.db** file to a different location of your choice and tell Moro to use that database file like below.

|

||||

```

|

||||

$ moro config --database-path /home/sk/personal/moro-data.db

|

||||

|

||||

```

|

||||

|

||||

As per above command, I have assigned the default database file’s location to **/home/sk/personal** directory.

|

||||

|

||||

For help, run:

|

||||

```

|

||||

$ moro --help

|

||||

|

||||

```

|

||||

|

||||

As you can see, Moro is very simple, yet useful to track how much time you’ve spent to get your work done. It is will be useful for freelancers and also anyone who must get things done under a limited time frame.

|

||||

|

||||

And, that’s all for today. Hope this helps. More good stuffs to come. Stay tuned!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/moro-a-command-line-productivity-tool-for-tracking-work-hours/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

@ -1,207 +0,0 @@

|

||||

hankchow translating

|

||||

|

||||

How to use Ansible to patch systems and install applications

|

||||

======

|

||||

|

||||

|

||||

Have you ever wondered how to patch your systems, reboot, and continue working?

|

||||

|

||||

If so, you'll be interested in [Ansible][1] , a simple configuration management tool that can make some of the hardest work easy. For example, system administration tasks that can be complicated, take hours to complete, or have complex requirements for security.

|

||||

|

||||

In my experience, one of the hardest parts of being a sysadmin is patching systems. Every time you get a Common Vulnerabilities and Exposure (CVE) notification or Information Assurance Vulnerability Alert (IAVA) mandated by security, you have to kick into high gear to close the security gaps. (And, believe me, your security officer will hunt you down unless the vulnerabilities are patched.)

|

||||

|

||||

Ansible can reduce the time it takes to patch systems by running [packaging modules][2]. To demonstrate, let's use the [yum module][3] to update the system. Ansible can install, update, remove, or install from another location (e.g., `rpmbuild` from continuous integration/continuous development). Here is the task for updating the system:

|

||||

```

|

||||

- name: update the system

|

||||

|

||||

yum:

|

||||

|

||||

name: "*"

|

||||

|

||||

state: latest

|

||||

|

||||

```

|

||||

|

||||

In the first line, we give the task a meaningful `name` so we know what Ansible is doing. In the next line, the `yum module` updates the CentOS virtual machine (VM), then `name: "*"` tells yum to update everything, and, finally, `state: latest` updates to the latest RPM.

|

||||

|

||||

After updating the system, we need to restart and reconnect:

|

||||

```

|

||||

- name: restart system to reboot to newest kernel

|

||||

|

||||

shell: "sleep 5 && reboot"

|

||||

|

||||

async: 1

|

||||

|

||||

poll: 0

|

||||

|

||||

|

||||

|

||||

- name: wait for 10 seconds

|

||||

|

||||

pause:

|

||||

|

||||

seconds: 10

|

||||

|

||||

|

||||

|

||||

- name: wait for the system to reboot

|

||||

|

||||

wait_for_connection:

|

||||

|

||||

connect_timeout: 20

|

||||

|

||||

sleep: 5

|

||||

|

||||

delay: 5

|

||||

|

||||

timeout: 60

|

||||

|

||||

|

||||

|

||||

- name: install epel-release

|

||||

|

||||

yum:

|

||||

|

||||

name: epel-release

|

||||

|

||||

state: latest

|

||||

|

||||

```

|

||||

|

||||

The `shell module` puts the system to sleep for 5 seconds then reboots. We use `sleep` to prevent the connection from breaking, `async` to avoid timeout, and `poll` to fire & forget. We pause for 10 seconds to wait for the VM to come back and use `wait_for_connection` to connect back to the VM as soon as it can make a connection. Then we `install epel-release` to test the RPM installation. You can run this playbook multiple times to show the `idempotent`, and the only task that will show as changed is the reboot since we are using the `shell` module. You can use `changed_when: False` to ignore the change when using the `shell` module if you expect no actual changes.

|

||||

|

||||

So far we've learned how to update a system, restart the VM, reconnect, and install a RPM. Next we will install NGINX using the role in [Ansible Lightbulb][4].

|

||||

```

|

||||

- name: Ensure nginx packages are present

|

||||

|

||||

yum:

|

||||

|

||||

name: nginx, python-pip, python-devel, devel

|

||||

|

||||

state: present

|

||||

|

||||

notify: restart-nginx-service

|

||||

|

||||

|

||||

|

||||

- name: Ensure uwsgi package is present

|

||||

|

||||

pip:

|

||||

|

||||

name: uwsgi

|

||||

|

||||

state: present

|

||||

|

||||

notify: restart-nginx-service

|

||||

|

||||

|

||||

|

||||

- name: Ensure latest default.conf is present

|

||||

|

||||

template:

|

||||

|

||||

src: templates/nginx.conf.j2

|

||||

|

||||

dest: /etc/nginx/nginx.conf

|

||||

|

||||

backup: yes

|

||||

|

||||

notify: restart-nginx-service

|

||||

|

||||

|

||||

|

||||

- name: Ensure latest index.html is present

|

||||

|

||||

template:

|

||||

|

||||

src: templates/index.html.j2

|

||||

|

||||

dest: /usr/share/nginx/html/index.html

|

||||

|

||||

|

||||

|

||||

- name: Ensure nginx service is started and enabled

|

||||

|

||||

service:

|

||||

|

||||

name: nginx

|

||||

|

||||

state: started

|

||||

|

||||

enabled: yes

|

||||

|

||||

|

||||

|

||||

- name: Ensure proper response from localhost can be received

|

||||

|

||||

uri:

|

||||

|

||||

url: "http://localhost:80/"

|

||||

|

||||

return_content: yes

|

||||

|

||||

register: response

|

||||

|

||||

until: 'nginx_test_message in response.content'

|

||||

|

||||

retries: 10

|

||||

|

||||

delay: 1

|

||||

|

||||

```

|

||||

|

||||

And the handler that restarts the nginx service:

|

||||

```

|

||||

# handlers file for nginx-example

|

||||

|

||||

- name: restart-nginx-service

|

||||

|

||||

service:

|

||||

|

||||

name: nginx

|

||||

|

||||

state: restarted

|

||||

|

||||

```

|

||||

|

||||

In this role, we install the RPMs `nginx`, `python-pip`, `python-devel`, and `devel` and install `uwsgi` with PIP. Next, we use the `template` module to copy over the `nginx.conf` and `index.html` for the page to display. After that, we make sure the service is enabled on boot and started. Then we use the `uri` module to check the connection to the page.

|

||||

|

||||

Here is a playbook showing an example of updating, restarting, and installing an RPM. Then continue installing nginx. This can be done with any other roles/applications you want.

|

||||

```

|

||||

- hosts: all

|

||||

|

||||

roles:

|

||||

|

||||

- centos-update

|

||||

|

||||

- nginx-simple

|

||||

|

||||

```

|

||||

|

||||

Watch this demo video for more insight on the process.

|

||||

|

||||

[demo](https://asciinema.org/a/166437/embed?)

|

||||

|

||||

This was just a simple example of how to update, reboot, and continue. For simplicity, I added the packages without [variables][5]. Once you start working with a large number of hosts, you will need to change a few settings:

|

||||

|

||||

This is because on your production environment you might want to update one system at a time (not fire & forget) and actually wait a longer time for your system to reboot and continue.

|

||||

|

||||

For more ways to automate your work with this tool, take a look at the other [Ansible articles on Opensource.com][6].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/3/ansible-patch-systems

|

||||

|

||||

作者:[Jonathan Lozada De La Matta][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/jlozadad

|

||||

[1]:https://www.ansible.com/overview/how-ansible-works

|

||||

[2]:https://docs.ansible.com/ansible/latest/list_of_packaging_modules.html

|

||||

[3]:https://docs.ansible.com/ansible/latest/yum_module.html

|

||||

[4]:https://github.com/ansible/lightbulb/tree/master/examples/nginx-role

|

||||

[5]:https://docs.ansible.com/ansible/latest/playbooks_variables.html

|

||||

[6]:https://opensource.com/tags/ansible

|

||||

139

sources/tech/20180322 Simple Load Balancing with DNS on Linux.md

Normal file

139

sources/tech/20180322 Simple Load Balancing with DNS on Linux.md

Normal file

@ -0,0 +1,139 @@

|

||||

Simple Load Balancing with DNS on Linux

|

||||

======

|

||||

|

||||

|

||||

When you have server back ends built of multiple servers, such as clustered or mirrowed web or file servers, a load balancer provides a single point of entry. Large busy shops spend big money on high-end load balancers that perform a wide range of tasks: proxy, caching, health checks, SSL processing, configurable prioritization, traffic shaping, and lots more.

|

||||

|

||||

But you don't want all that. You need a simple method for distributing workloads across all of your servers and providing a bit of failover and don't care whether it is perfectly efficient. DNS round-robin and subdomain delegation with round-robin provide two simple methods to achieve this.

|

||||

|

||||

DNS round-robin is mapping multiple servers to the same hostname, so that when users visit foo.example.com multiple servers are available to handle their requests.

|

||||

|

||||

Subdomain delegation with round-robin is useful when you have multiple subdomains or when your servers are geographically dispersed. You have a primary nameserver, and then your subdomains have their own nameservers. Your primary nameserver refers all subdomain requests to their own nameservers. This usually improves response times, as the DNS protocol will automatically look for the fastest links.

|

||||

|

||||

### Round-Robin DNS

|

||||

|

||||

Round-robin has nothing to do with robins. According to my favorite librarian, it was originally a French phrase, _ruban rond_ , or round ribbon. Way back in olden times, French government officials signed grievance petitions in non-hierarchical circular, wavy, or spoke patterns to conceal whoever originated the petition.

|

||||

|

||||

Round-robin DNS is also non-hierarchical, a simple configuration that takes a list of servers and sends requests to each server in turn. It does not perform true load-balancing as it does not measure loads, and does no health checks, so if one of the servers is down, requests are still sent to that server. Its virtue lies in simplicity. If you have a little cluster of file or web servers and want to spread the load between them in the simplest way, then round-robin DNS is for you.

|

||||

|

||||

All you do is create multiple A or AAAA records, mapping multiple servers to a single host name. This BIND example uses both IPv4 and IPv6 private address classes:

|

||||

```

|

||||

fileserv.example.com. IN A 172.16.10.10

|

||||

fileserv.example.com. IN A 172.16.10.11

|

||||

fileserv.example.com. IN A 172.16.10.12

|

||||

|

||||

fileserv.example.com. IN AAAA fd02:faea:f561:8fa0:1::10

|

||||

fileserv.example.com. IN AAAA fd02:faea:f561:8fa0:1::11

|

||||

fileserv.example.com. IN AAAA fd02:faea:f561:8fa0:1::12

|

||||

|

||||

```

|

||||

|

||||

Dnsmasq uses _/etc/hosts_ for A and AAAA records:

|

||||

```

|

||||

172.16.1.10 fileserv fileserv.example.com

|

||||

172.16.1.11 fileserv fileserv.example.com

|

||||

172.16.1.12 fileserv fileserv.example.com

|

||||

fd02:faea:f561:8fa0:1::10 fileserv fileserv.example.com

|

||||

fd02:faea:f561:8fa0:1::11 fileserv fileserv.example.com

|

||||

fd02:faea:f561:8fa0:1::12 fileserv fileserv.example.com

|

||||

|

||||

```

|

||||

|

||||

Note that these examples are simplified, and there are multiple ways to resolve fully-qualified domain names, so please study up on configuring DNS.

|

||||

|

||||

Use the `dig` command to check your work. Replace `ns.example.com` with your name server:

|

||||

```

|

||||

$ dig @ns.example.com fileserv A fileserv AAA

|

||||

|

||||

```

|

||||

|

||||

That should display both IPv4 and IPv6 round-robin records.

|

||||

|

||||

### Subdomain Delegation and Round-Robin

|

||||

|

||||

Subdomain delegation combined with round-robin is more work to set up, but it has some advantages. Use this when you have multiple subdomains or geographically-dispersed servers. Response times are often quicker, and a down server will not respond, so clients will not get hung up waiting for a reply. A short TTL, such as 60 seconds, helps this.

|

||||

|

||||